19 Working with Large Language Models (LLMs)

LLMs, Prompt Engineering, Tokenization

19.1 A Student’s Guide to Prompt Engineering

This section was written based on a conversation with ChatGPT that started with a 156-word prompt and about 25 follow-up prompts to adjust, expand, and refine content. Additional editing improved clarity, consistency, and formatting, and adjusted code and code chunk options. References were checked and adjusted for accuracy, citations were added, and links to inline references were added. Any errors are my responsibility.

19.1.1 Learning Outcomes

By the end of this guide, you should be able to:

- Explain what prompt engineering is and why it matters when working with AI tools.

- Describe how Large Language Models (LLMs) generate responses.

- Understand tokenization and token limits, and how model size affects outputs.

- Construct effective first prompts for coding and data science questions.

- Use follow-up prompts to refine, extend, or debug an AI’s response.

- Critically evaluate responses to detect errors or hallucinations.

19.1.2 References

- OpenAI. (2024). Prompt Engineering (OpenAI 2025)

- Lilian Weng. (2023). LLM Prompt Engineering (Weng 2023)

- Wong, J. (2023). Prompt Engineering Guidebook (DAIR-AI 2025)

Other References for additional exploration.

- Professor Robinson’s notes on Generative AI Models using OLLAMA

- Zhao, W. et al. (2025). A Survey of Large Language Models. (Zhao et al. 2025)

19.2 Prompt Engineering

“Prompt engineering is the process of writing effective instructions for a model, such that it consistently generates content that meets your requirements.” (OpenAI 2025)

A “prompt” can be a question, a request for code, a set of instructions, or even an ongoing conversation with a Large Language Model (LLM).

- Creating or “engineering” a prompt (or series of prompts) to produce the most accurate, useful, and relevant output possible to meet your goals is still a mix of art and science.

Prompt engineering builds on an understanding of how LLMs work (not how they are trained) to create prompts that are effective for your purposes. Characteristics of LLMs can shape effective prompts.

- Non-determinism: LLM responses vary because each token is sampled from probabilities computed in a high-dimensional space. Small wording changes can produce very different outputs.

  - Example: asking for the “most positive” versus the “least negative” sentiment of a text can yield different answers.

- Context Sensitivity: LLMs don’t “understand” in the human sense; they generate responses based on patterns in data. How you ask a question strongly influences the quality of the answer.

  - Example: specifying “Give me R code” versus “Explain in plain English” leads to very different outputs.

You can apply guidelines and best practices to improve your chances of getting useful results consistently while building your understanding and skills.

- Efficiency: A well-crafted prompt reduces the need for repeated clarifications.

- Accuracy: Clear context, guidance, and constraints can help minimize errors or hallucinations.

- Improved Collaboration: Prompt engineering can be seen as refining or debugging your question before you take it to collaborators or to the LLM.

- Multi-language skill: The same techniques apply whether you’re generating R code, Python code, documentation, or explanations.

In short: Prompt engineering is about learning how to “talk to the model” effectively so it can become a productive tool for your goals rather than a source of confusion.

19.3 How LLMs Respond to Prompts

LLMs (like ChatGPT, Claude, or Gemini) do not “understand” like humans. Instead, they:

- Predict the next token (word or subword) given your input and context.

- Use patterns from training data to approximate reasoning.

- Are sensitive to framing: wording, order, specificity, and constraints change the output.

- Can hallucinate: generate confident-sounding but false statements.

19.3.1 Tokenization

LLMs do not read raw text directly. Instead, they break text into tokens (smaller units such as words, subwords, or characters).

- Tokens are not always whole words. Examples:

  - `"data science"` → `["data", " science"]` (2 tokens)

  - `"statistics101"` → `["statistics", "101"]` (2 tokens)

  - `"internationalization"` → `["international", "ization"]` (2 tokens)

- Tokenization is similar to, but not the same as, lemmatization, which reduces words to their base form (common in NLP preprocessing, not in LLM tokenization). Examples:

  - `"running"` → `"run"`

  - `"better"` → `"good"`

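Here is a toy example of token splitting.

| Word | Tokens |
|---|---|
| data | data |
| statistics101 | statistics \| 101 |
| internationalization | international \| ization |
| running | run \| ning |
| better | better |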
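One way such splits could be produced is sketched below. This is only an illustration: the vocabulary and the `toy_tokenize()` helper are hypothetical, and the greedy longest-match rule is a simplification of the learned subword schemes that real LLM tokenizers use.

```r
# Hypothetical toy vocabulary; real LLM vocabularies hold tens of thousands of entries
vocab <- c("data", "statistics", "101", "international", "ization",
           "run", "ning", "better")

# Greedy longest-match splitting: at each position take the longest
# vocabulary entry that matches, or fall back to a single character
toy_tokenize <- function(word, vocab) {
  tokens <- character(0)
  pos <- 1
  while (pos <= nchar(word)) {
    rest <- substring(word, pos)
    hits <- vocab[startsWith(rest, vocab)]
    piece <- if (length(hits) > 0) {
      hits[which.max(nchar(hits))]
    } else {
      substr(rest, 1, 1)
    }
    tokens <- c(tokens, piece)
    pos <- pos + nchar(piece)
  }
  tokens
}

words <- c("data", "statistics101", "internationalization", "running", "better")

# One row per word, with its tokens joined by " | "
token_table <- data.frame(
  Word = words,
  Tokens = vapply(words,
                  function(w) paste(toy_tokenize(w, vocab), collapse = " | "),
                  character(1), USE.NAMES = FALSE)
)
print(token_table)
```

Note that the split of "running" into "run" and "ning" is purely mechanical. Unlike lemmatization, it does not try to recover a base form.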

19.3.2 Token Limits and Conversation Context

- Each model has a maximum token limit (prompt + response). Examples:

  - Small models: 2k–4k tokens

  - Medium models: 16k–32k tokens

  - Large models: up to 200k tokens in advanced systems

- Context accumulation:

  - Most chat interfaces send all previous prompts and responses in a conversation back to the model with each new prompt, as context for the new prompt.

  - The model itself does not maintain an explicit memory of your prior prompts and its responses, so resending them in this way “simulates” a conversation while providing more context for the next response.

  - Over time, long conversations with lots of code in prompts and responses can grow very large and approach the token limit.

  - If the limit is exceeded, some older context may be truncated, which can lead to loss of information or incorrect responses, or you may be forced to start a new conversation, in which case all context is lost.
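The accumulation and truncation described above can be sketched in a few lines of R. This is a simplified illustration, not how any particular chat tool is implemented: the helper names are hypothetical, and the "about 4 characters per token" estimate is a rough rule of thumb for English text rather than a real tokenizer count.

```r
# Rough token estimate (assumption: ~4 characters per token for English text)
estimate_tokens <- function(text) ceiling(nchar(text) / 4)

# Append one message (role + content) to the conversation history
add_message <- function(history, role, content) {
  c(history, list(list(role = role, content = content)))
}

# Drop the oldest messages until the estimated total fits within the limit
trim_history <- function(history, max_tokens) {
  total <- function(h) {
    sum(vapply(h, function(m) estimate_tokens(m$content), numeric(1)))
  }
  while (length(history) > 1 && total(history) > max_tokens) {
    history <- history[-1]
  }
  history
}

history <- list()
history <- add_message(history, "user", "Write R code to read a CSV file.")
history <- add_message(history, "assistant", "df <- read.csv('data.csv'); head(df)")
history <- add_message(history, "user", "Now compute the mean of every numeric column.")

# The whole history is what would be sent with the next request;
# with a tiny limit, the oldest message is dropped first
trimmed <- trim_history(history, max_tokens = 25)
length(history)
length(trimmed)
```

Once the first message is trimmed away, the model can no longer see the original request, which is how earlier instructions get lost in long conversations.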

Best practices to avoid exceeding your token budget:

- If your prompt is long (e.g., pasting a large dataset), summarize it, sample it, or provide only the key features.

- Start a new conversation for a completely new topic. This prevents context from growing too large and ensures the model doesn’t mix unrelated topics.
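As an illustration of the first practice, the sketch below describes a data frame by its structure plus a handful of sampled rows instead of pasting every row. The `sales` data set is made up for this example, and the character counts are only a stand-in for token counts.

```r
# Hypothetical data set standing in for something too large to paste
set.seed(1)
sales <- data.frame(
  year = rep(2015:2024, each = 100),
  region = sample(c("North", "South", "East", "West"), 1000, replace = TRUE),
  revenue = round(runif(1000, 10, 500), 1)
)

# Capture the structure and five sampled rows as text
structure_txt <- paste(capture.output(str(sales)), collapse = "\n")
sample_rows <- sales[sample(nrow(sales), 5), ]
sample_txt <- paste(capture.output(print(sample_rows, row.names = FALSE)),
                    collapse = "\n")

# Assemble a compact prompt from the summary pieces
prompt <- paste(
  "I have an R data frame called `sales` with this structure:",
  structure_txt,
  "Here are five randomly sampled rows:",
  sample_txt,
  "Write R code using ggplot2 to plot total revenue by year.",
  sep = "\n\n"
)

# Compare against the size of printing the full data set
full_txt <- paste(capture.output(print(sales)), collapse = "\n")
c(prompt_chars = nchar(prompt), full_data_chars = nchar(full_txt))
```

The summary keeps what the model needs to write the code (column names, types, and a feel for the values) at a fraction of the size.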

19.3.3 Tokens are Converted to Numbers

- LLMs do not operate on tokens as text. Each token is mapped to a numerical vector (an embedding).

- Embeddings are high-dimensional vector representations of tokens or text that capture semantic meaning.

- LLM embeddings are vectors with hundreds or thousands of dimensions, not just 2 or 3, and each dimension encodes some aspect of meaning, context, or syntactic/semantic feature.

- Different models can use different embedding schemes.

- The model performs mathematical operations on these vectors to predict the next token based on mathematical similarity or “closeness.”

Example:

- "dog" → [0.12, -0.03, 0.88, ...]

- "puppy" → [0.14, -0.01, 0.91, ...]

- "car" → [0.80, 0.20, -0.05, ...]

19.3.4 Measuring Closeness

- Similarity between the embedding vectors is measured using metrics like cosine similarity.

\[ \text{cosine similarity} = \frac{\vec{A} \cdot \vec{B}}{\|\vec{A}\| \|\vec{B}\|} \]

- Cosine similarity = 1 → vectors point in the same direction (highly similar meaning).

- Cosine similarity = 0 → vectors are orthogonal (unrelated meaning).

Embeddings let LLMs treat similar words or phrases as related, because their vectors lie “close” together in vector space.

These metrics are used for:

- Semantic search (finding relevant text)

- Retrieval-Augmented Generation (RAG)

- Clustering or similarity calculations

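The cosine similarity formula translates directly into R. The sketch below applies it to the "dog", "puppy", and "car" vectors from the earlier example, using only the three components that were shown. Treating them as complete 3-dimensional vectors is an assumption made purely for illustration.

```r
# Cosine similarity: dot product divided by the product of the vector lengths
cosine_similarity <- function(a, b) {
  sum(a * b) / (sqrt(sum(a^2)) * sqrt(sum(b^2)))
}

# Toy 3-D vectors (only the first three components of the earlier example)
dog   <- c(0.12, -0.03, 0.88)
puppy <- c(0.14, -0.01, 0.91)
car   <- c(0.80,  0.20, -0.05)

cosine_similarity(dog, puppy)  # close to 1: nearly the same direction
cosine_similarity(dog, car)    # close to 0: nearly orthogonal
```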

19.3.5 Visualizing Embeddings in R

This example shows how words with similar meaning might cluster together in the embedding space.

- “dog”, “puppy”, and “cat” cluster closely, reflecting semantic similarity.

- “car” and “bicycle” are farther away, showing unrelated meaning.

- In real embeddings, vectors are high-dimensional, but this 2D example illustrates the concept.

- Cosine similarity measures closeness mathematically in high dimensions, even though high-dimensional vectors cannot be plotted directly.
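A minimal sketch of such a plot follows. The 2-D coordinates are invented by hand so that the animal words sit together and the vehicle words sit elsewhere; they do not come from any real embedding model. Base R graphics are used here to keep the example dependency-free.

```r
# Hypothetical 2-D "embeddings", hand-picked for illustration only
emb <- rbind(
  dog     = c(0.90, 0.80),
  puppy   = c(0.85, 0.90),
  cat     = c(0.80, 0.70),
  car     = c(-0.80, 0.30),
  bicycle = c(-0.70, 0.40)
)
colnames(emb) <- c("dim1", "dim2")

# Scatter plot with a text label above each point
plot(emb, pch = 19, xlim = c(-1, 1.1), ylim = c(0, 1.1),
     xlab = "Dimension 1", ylab = "Dimension 2",
     main = "Toy 2-D Embedding Space")
text(emb, labels = rownames(emb), pos = 3)

# Pairwise cosine similarity: scale each row to unit length,
# then take all row-by-row dot products
unit <- emb / sqrt(rowSums(emb^2))
cos_sim <- unit %*% t(unit)
round(cos_sim, 2)
```

In the resulting matrix, the animal words have similarities near 1 with each other, while their similarities with "car" and "bicycle" are much lower.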

Important nuance:

- These similarity measures are inherently fuzzy.

- Minor variations in your prompt—such as negating a sentence, reordering words, or changing context—can result in large differences in the output, even if the overall meaning seems similar to a human reader.

Example:

- Prompt 1: "List three common R functions for plotting a histogram."

- Prompt 2: "List three uncommon R functions for plotting a histogram."

- Prompt 3: "List three popular R functions for plotting a histogram."

Even though only one word changes, the model may:

- Suggest completely different functions.

- Reorder examples differently.

- Include/exclude certain packages.

This happens because embeddings map text to points in a continuous space, and the model predicts outputs based on small differences in those positions.

Takeaway: Always experiment with multiple phrasings and verify results. Treat embeddings and similarity measures as guides, not exact truth.

19.4 Guidelines for Effective Prompts

Good prompts share a few common traits: they are clear, contextual, and iterative. Here are strategies to improve your interactions with AI tools:

- Be Specific

Clearly describe what you want the AI to do. Include the programming language, the type of output, and the level of detail you expect.

Example:

- Weak: “Plot the data.”

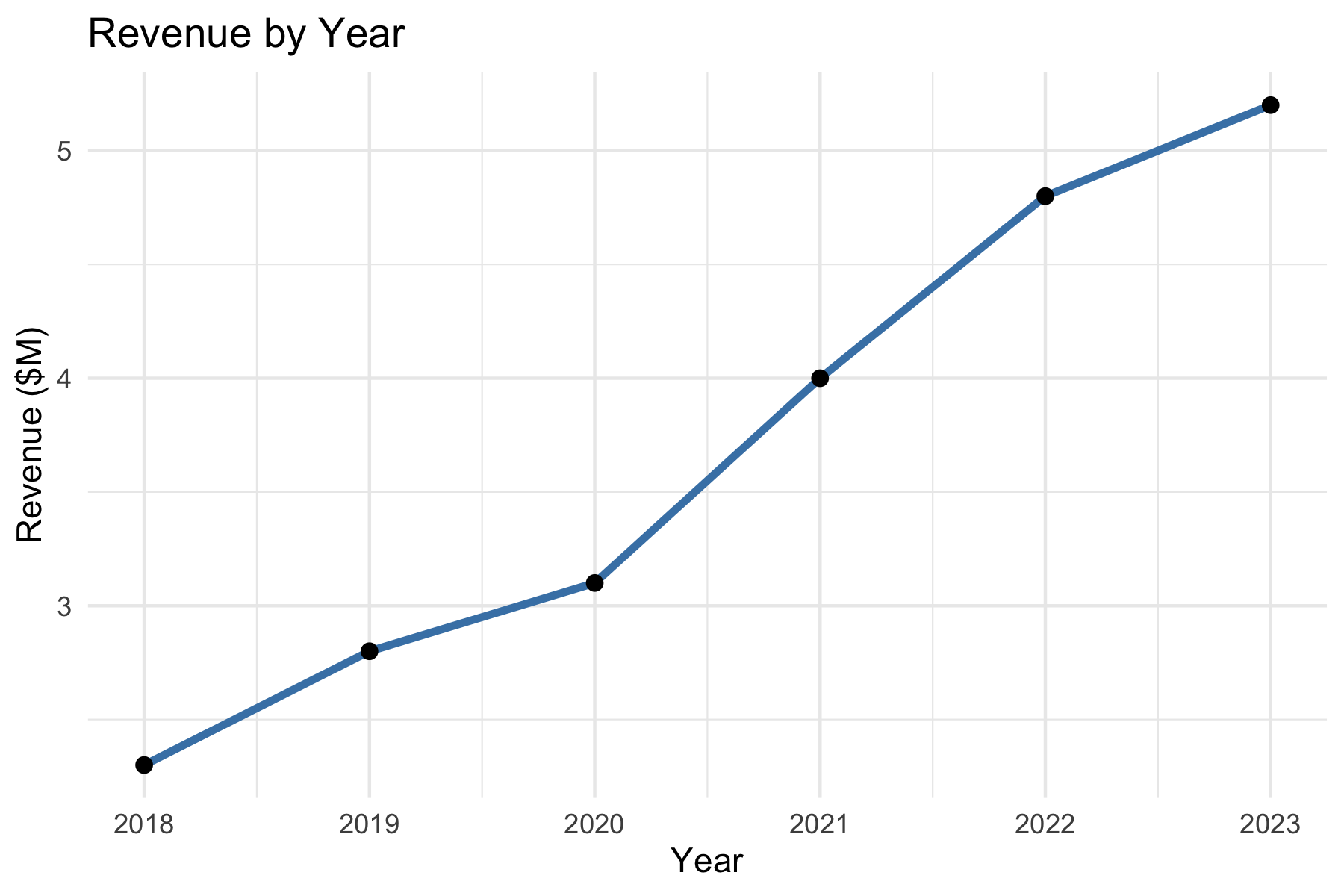

- Strong: “Write R code using ggplot2 to create a line plot of `revenue` by `year` with labeled axes and a descriptive title.”

- Give Context

Provide background information such as the data structure, libraries, or your end goal. This reduces ambiguity and helps the AI tailor its response.

Example:

- “I have a pandas data frame with columns `city` and `population`. Please write Python code using seaborn to create a bar chart of population by city.”

- State Constraints

Specify limitations or requirements for the response, such as the format, length, or assumptions.

Examples:

- “Give me only the R code, no explanations.”

- “Limit the answer to a single ggplot2 figure.”

- “Assume the data frame has no missing values.”

- Iterate

Think of prompting as a conversation, not a one-shot request. Start simple, review the output, and refine with follow-up prompts.

Example:

- First prompt: “Write Python code to read a CSV file and display the first five rows.”

- Follow-up: “Now extend this code to calculate the mean of all numeric columns.”

- Next follow-up: “Format the summary as a neat table.”

- Verify

Never assume the AI is correct. Check the output against your own knowledge, official documentation, or by running the code. Be alert for hallucinations (nonexistent functions, incorrect syntax, or misleading explanations).

Example:

- If the AI suggests `robust_cor()` in R, search the documentation. If it doesn’t exist, redirect: “That function doesn’t exist. Could you instead use Spearman’s correlation or show me how to fit a robust regression with `MASS::rlm()`?”

- Adjust for Creativity or Accuracy

You can control how wide-ranging or precise the response should be by adjusting your wording.

Examples:

- Creative: “Show three different ways in R to visualize a distribution.”

- Accurate: “Show the single most standard ggplot2 approach for plotting a histogram of a numeric variable.”

- Assign a Role

Guide the style of the response by telling the AI who it should act as.

Examples:

- “You are a data science tutor. Explain correlation to a beginner and include an R code example.”

- “You are a coding assistant. Provide concise Python code with no explanations.”

19.4.1 Example: Making a Good First Prompt

Weak prompt:

> Plot the data.

Improved prompt:

> I have a data frame in R with columns year and revenue. Please write R code using ggplot2 to create a line plot of revenue by year, with labeled axes and a title.
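If you write similar prompts often, the pieces from the guidelines (role, context, task, constraints) can be assembled programmatically. The `build_prompt()` helper below is a hypothetical convenience function written for this guide, not part of any package.

```r
# Assemble a prompt from optional role, context, and constraints plus a task;
# pieces left as NULL are simply skipped
build_prompt <- function(task, role = NULL, context = NULL, constraints = NULL) {
  parts <- c(
    if (!is.null(role)) paste0("You are ", role, "."),
    context,
    task,
    if (!is.null(constraints)) {
      paste0("Constraints: ", paste(constraints, collapse = "; "), ".")
    }
  )
  paste(parts, collapse = " ")
}

prompt <- build_prompt(
  task = "Please write R code using ggplot2 to create a line plot of revenue by year, with labeled axes and a title.",
  context = "I have a data frame in R with columns year and revenue.",
  constraints = c("give only the R code", "assume no missing values")
)
cat(prompt, "\n")
```

Keeping the pieces separate makes it easy to change one of them (for example, tightening a constraint) while leaving the rest of the prompt unchanged.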

19.4.2 Example: Building a Conversation

First Prompt:

> Write Python code to read a CSV and summarize the first five rows.

LLM Output:

Code using pandas.read_csv() and df.head().

Follow-Up Prompt:

> Please extend your code to also compute the mean of all numeric columns and print the result.

LLM Output:

Adds df.mean(numeric_only=True).

Next Follow-Up:

> Could you format the summary as a table with column means below the head output?

The model refines until the solution fits your needs.

19.4.3 Example: Checking for Hallucinations

Prompt:

> Write R code to compute a robust correlation coefficient.

LLM Output:

Provides code with a function robust_cor() (a hallucination as the function does not exist).

Student Check:

- Gets an error message and checks whether `robust_cor()` exists in R.

- If not, asks:

> That function doesn’t seem to exist. Could you instead show how to use MASS::rlm() or another real package to compute robust correlation?

The key is to verify and redirect.
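The "does it exist?" check can be done from the R console before you spend time debugging. The sketch below wraps base R's `exists()` and `requireNamespace()` in a small helper. The helper name `function_available()` is hypothetical, and the result for `MASS::rlm()` depends on whether MASS is installed on your machine.

```r
# Check whether a function exists, either on the search path (pkg = NULL)
# or inside a specific installed package
function_available <- function(fn, pkg = NULL) {
  if (is.null(pkg)) {
    return(exists(fn, mode = "function"))
  }
  # requireNamespace() checks that the package is installed without attaching it
  requireNamespace(pkg, quietly = TRUE) &&
    exists(fn, envir = asNamespace(pkg), mode = "function", inherits = FALSE)
}

function_available("robust_cor")          # the suspicious name from the LLM
function_available("cor")                 # a real function in the stats package
function_available("rlm", pkg = "MASS")   # TRUE only if MASS is installed
```

A FALSE result means only that the function is not available in your current setup; it could still live in a package you have not installed, so searching the documentation remains a sensible follow-up.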

19.4.4 Example: Large vs. Small Model Responses

The same prompt can yield different results depending on the size of the model.

Larger models (those with more parameters) generally produce more detailed and accurate answers, while smaller ones may be faster but less reliable.

Prompt:

> In R, how do I compute the correlation between two variables when the data has outliers?

| Model | Response |
|---|---|
| Large model (e.g., GPT-4, Claude Opus, Llama-70B) | “One option is to use a robust correlation method. For example, you can use Spearman’s rank correlation in R: `cor(x, y, method = "spearman")`. Another approach is to fit a robust regression using `MASS::rlm()` if you want to downweight outliers. Both approaches reduce the influence of extreme values compared to Pearson correlation.” |
| Smaller model (e.g., GPT-3.5, Llama-7B) | “You can use `cor(x, y)` in R. This computes correlation between two vectors.” (Note: does not mention outliers or alternatives like Spearman or robust regression.) |

Takeaway:

- The large model recognizes the nuance (outliers) and suggests multiple valid approaches.

- The smaller model gives a quick but incomplete response.

- Lesson: Always check whether the model has considered your context.
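To see why the missing nuance matters, the sketch below compares the two answers on simulated data. The data and the two injected outliers are made up for illustration; only `cor()` and its `method` argument come from base R.

```r
# Simulated data with a strong increasing relationship
set.seed(123)
x <- 1:30
y <- 2 * x + rnorm(30, sd = 3)

# Inject two extreme, hypothetical outliers at the largest x values
y_out <- y
y_out[c(29, 30)] <- c(-200, -250)

clean    <- cor(x, y)                           # Pearson, no outliers
pearson  <- cor(x, y_out)                       # Pearson (the default method)
spearman <- cor(x, y_out, method = "spearman")  # rank-based alternative

round(c(clean = clean, pearson = pearson, spearman = spearman), 2)
```

With the outliers present, the Pearson coefficient drops sharply, while the rank-based Spearman coefficient is affected much less. The smaller model's answer would run without error but would give a misleading picture of the relationship.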

19.4.5 Example: Specifying Roles

When prompting, you can assign a role to the AI which will adjust its response.

Prompt 1 (role: assistant):

> You are a coding assistant. Write R code to plot the distribution of a numeric variable.

Likely Response:

Straightforward R code using hist() or ggplot2::geom_histogram().

Prompt 2 (role: instructor):

> You are a statistics instructor. Explain to a beginner how to plot the distribution of a numeric variable in R, and include an example using ggplot2.

Likely Response:

A step-by-step explanation with annotated code — more teaching-oriented.

19.4.6 Example: Adjusting Creativity vs. Accuracy

LLMs can be “dialed” for creativity (diverse answers, new ideas) or accuracy (precise, deterministic answers).

- This is often controlled by a setting called temperature (higher = more creative, lower = more predictable).

- Even without changing system settings, you can influence style through your prompt wording.
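The effect of temperature can be illustrated with a small calculation. In the usual formulation, the model's raw scores for candidate tokens are divided by the temperature before being converted to probabilities with a softmax. The scores below are invented for illustration and the function name is hypothetical; real models score tens of thousands of candidate tokens.

```r
# Convert raw scores to probabilities after scaling by temperature
softmax_temperature <- function(scores, temperature = 1) {
  scaled <- scores / temperature
  exp_scaled <- exp(scaled - max(scaled))  # subtract the max for numerical stability
  exp_scaled / sum(exp_scaled)
}

# Hypothetical scores for the next token after "Plot the distribution with a ..."
scores <- c(histogram = 3.0, density = 2.0, boxplot = 1.5, violin = 0.5)

p_low  <- softmax_temperature(scores, temperature = 0.2)  # sharply peaked
p_mid  <- softmax_temperature(scores, temperature = 1.0)
p_high <- softmax_temperature(scores, temperature = 2.0)  # flatter

round(rbind(p_low, p_mid, p_high), 3)

# Sampling five "next tokens" at high temperature gives more varied picks
set.seed(42)
sample(names(scores), size = 5, replace = TRUE, prob = p_high)
```

At low temperature almost all of the probability goes to the top-scoring option, which is why responses become more predictable. At high temperature the alternatives get a real chance of being chosen.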

Prompt (creative mode):

> Be imaginative. Show me three different R approaches to visualize the distribution of a variable.

Possible Output:

- Histogram (geom_histogram())

- Density plot (geom_density())

- Boxplot (geom_boxplot())

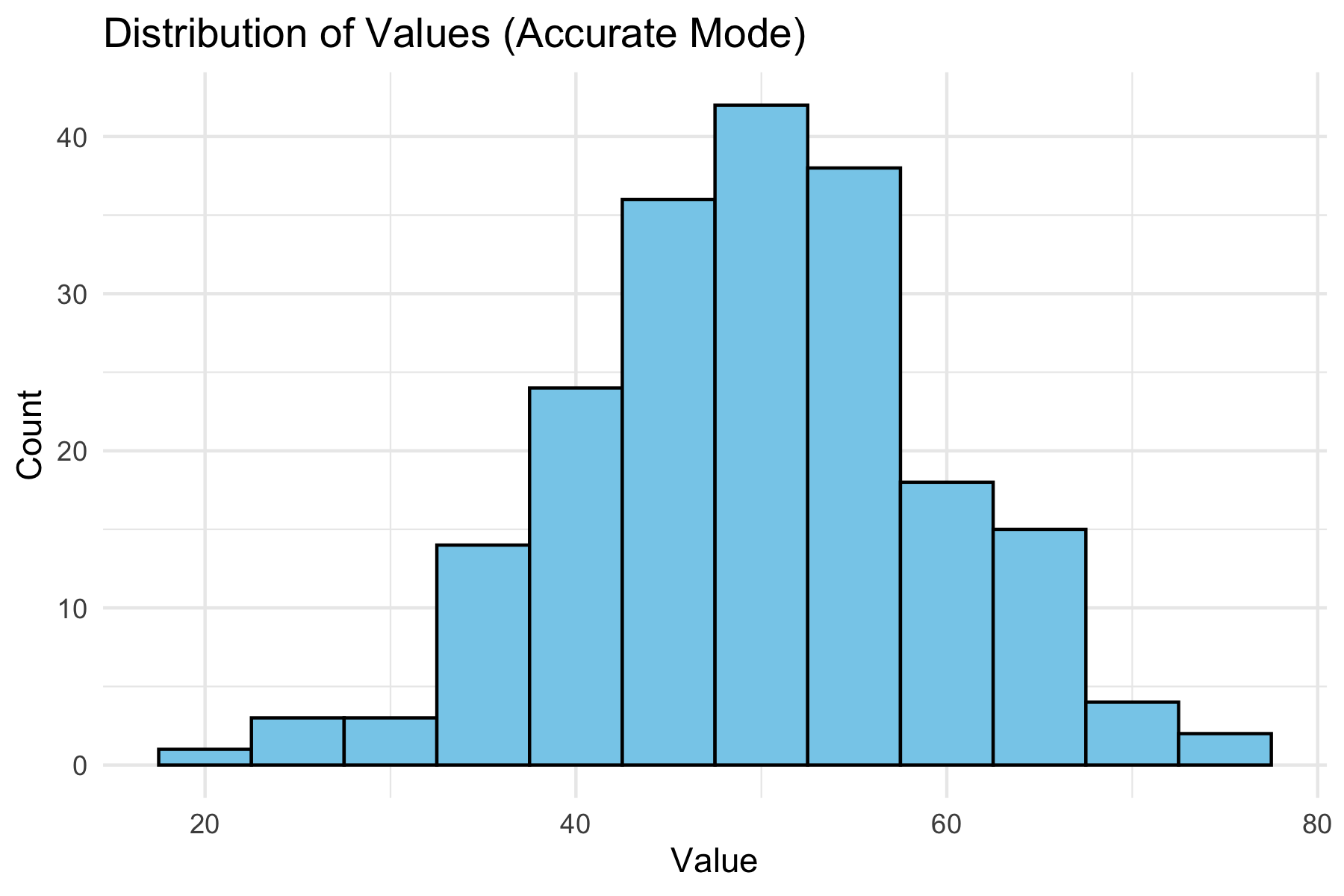

Prompt (accuracy mode):

> Provide the single most standard way in R using ggplot2 to visualize a variable’s distribution.

Possible Output:

One clean example using geom_histogram(), without alternatives.

Below we show a “standard” accurate plot of a distribution. If the AI had been asked in creative mode, it might instead show a density plot, violin plot, or boxplot.

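```r
library(ggplot2)

# Simulate 200 values from a normal distribution (reproducible via the seed)
set.seed(42)
values <- rnorm(200, mean = 50, sd = 10)

# Histogram with 5-unit bins, labeled axes, and a title
ggplot(data.frame(values), aes(x = values)) +
  geom_histogram(binwidth = 5, fill = "skyblue", color = "black") +
  labs(title = "Distribution of Values (Accurate Mode)",
       x = "Value", y = "Count") +
  theme_minimal()
```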

You can shape the AI’s “persona” (assistant, instructor, critic, tutor) and also control the breadth versus precision of its answers, depending on your goals.

19.5 Summary

Here is a quick reference sheet you can use when working with AI tools:

| Strategy | What It Means | Example Prompt |
|---|---|---|
| Specify Role | Tell the AI who it should act as (assistant, instructor, critic, tutor). | “You are a statistics instructor. Explain correlation to a beginner with examples in R.” |
| Give Context | Provide background: dataset, libraries, goals. | “I have a data frame in R with columns `year` and `revenue`. Use ggplot2 to plot revenue by year.” |
| State Constraints | Limit length, format, or assumptions. | “Give me Python code only, no explanations, using pandas and seaborn.” |
| Iterate | Use follow-up prompts to refine or extend. | “Now add labels to the axes.” |
| Verify | Check outputs against your knowledge or documentation. | “That function doesn’t exist in R. Show me an alternative from MASS or robustbase.” |
| Creativity vs. Accuracy | Ask for one best method (accuracy) or multiple diverse methods (creativity). | “Show three different ways in R to visualize a distribution.” |
| Check for Hallucination | Be skeptical if the AI invents code/functions. Redirect if necessary. | “I can’t find that function. Can you cite the package or suggest a real function?” |

Prompt engineering is not about tricking the AI, but about effective communication.

Think of the AI as a partner:

- You provide structure, clarity, and verification.

- It provides suggestions, alternatives, and explanations.

With practice, you’ll learn when to ask for creativity, when to demand precision, and how to iterate toward a reliable solution.