10 Generative AI Models

ollama, LLM, Prompting, Sentiment analysis, Stop Words

10.1 Introduction

This module investigates multiple large language models to understand how they interact with prompts and use probabilistic methods to generate responses.

Learning Outcomes

- Use Ollama to download LLMs to a local computer

- Interact with the LLMs and analyze the outputs.

- Use {tidytext} functions for basic natural language processing text analysis.

10.1.1 References

- Ollama (“Ollama” 2025)

- Ollama GitHub Repository

- Text Analysis with the {tidytext} package (Silge and Robinson 2023)

10.2 Getting large language models onto your computer

The ollama server needs to be installed on your computer (outside of R) and running in order for this to work. You need to go to https://ollama.com and download it for your operating system.

Once you have ollama installed, you can start it by running ollama serve in a terminal window.

You then need to get some models. You can do this either from your operating system’s command prompt in the terminal window, or from within R using ollamar::pull() with the name of the model.

Large language models are, in fact, large, so downloading is very slow. Be patient!

If you have a computer with lots of RAM and a GPU, there are many options to explore: https://ollama.com/models. Feel free to do that!

These are the models I currently have installed on my computer:

  name                  size   parameter_size quantization_level modified
1 deepseek-coder:latest 776 MB 1B             Q4_0               2025-05-13T01:29:58
2 deepseek-llm:latest   4 GB   7B             Q4_0               2025-05-13T00:25:27
3 deepseek-r1:latest    4.7 GB 7.6B           Q4_K_M             2025-05-13T01:39:49
4 llama3:latest         4.7 GB 8.0B           Q4_0               2025-05-07T16:44:29
5 llama3.2:latest       2 GB   3.2B           Q4_K_M             2025-01-22T00:05:43
6 mistral:latest        4.1 GB 7.2B           Q4_0               2025-05-13T01:49:57
7 qwen2.5:0.5b          398 MB 494.03M        Q4_K_M             2025-05-23T12:27:31

10.3 Basic prompting

Let’s talk to one of the models:

[1] "You're talking to me! Yes, I'm here and ready to chat. What's on your mind?"

Models are not just monolingual:

[1] "こんにちは!お元気でございます!😊 (Konnichiwa! Ogenki desu ka?) Ah, you're asking if I'm feeling well? 🙏 Yes, I'm functioning properly and ready to help with any questions or tasks you may have! 💻 What's on your mind today? 😃"

If llama3 is too big for your computer, that’s OK. The model qwen2.5 is much smaller, and should run with about 4 GB of RAM. It also speaks Chinese!

If you want the output to be in a table instead of a string, you can have that too, for example by passing output = "df" to generate.

10.4 A little exploration into how LLMs work

Generate some text in response to a prompt using a chat bot conversational mode.

[1] "It seems like you started to type \"computer\"! Am I right? Would you like to talk about computers or something else? I'm here to help!"

How does that work, exactly? The “model” actually consists of several text stages:

- Splicing the prompt into a template (the template may be fixed or somewhat parametric)

- Feeding the templated prompt into the predictive model

- Formatting the output into something useful, in this case an R string
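The three stages above can be sketched in a few lines of base R. This is only an illustration: the template string, the fill_template and format_output helpers, and the example prompt are all made up for this sketch, and a real model's template is considerably richer.

```r
# Simplified, hypothetical stand-in for a chat template (not a real model's template).
template <- "<|user|>\n{{ .Prompt }}<|end|>\n<|assistant|>\n"

# Stage 1: splice the user's prompt into the template.
fill_template <- function(template, prompt) {
  gsub("{{ .Prompt }}", prompt, template, fixed = TRUE)
}

templated <- fill_template(template, "What is a computer?")
cat(templated)

# Stage 2 (not run here): the templated string is what the predictive model sees.

# Stage 3: tidy the model's raw output, here by trimming surrounding whitespace.
format_output <- function(raw_output) trimws(raw_output)
format_output("  A computer is a machine.\n")
```

The point of the sketch is that the user's text is only one piece of what the predictive model receives; the rest comes from the template.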

Here’s how to look at the template for a model:

[1] "{{ if .System }}<|start_header_id|>system<|end_header_id|>\n\n{{ .System }}<|eot_id|>{{ end }}{{ if .Prompt }}<|start_header_id|>user<|end_header_id|>\n\n{{ .Prompt }}<|eot_id|>{{ end }}<|start_header_id|>assistant<|end_header_id|>\n\n{{ .Response }}<|eot_id|>"

The template is written in a sub-language originally made for the Go language: https://pkg.go.dev/text/template

Each part of the template that can be filled by some text is delineated by something like {{ .TEXT_GOES_HERE }}. Llama3’s template (above) is not too complicated.

Deepseek-r1 uses a more complicated template (notice the loop via range, various if conditions, and the slice):

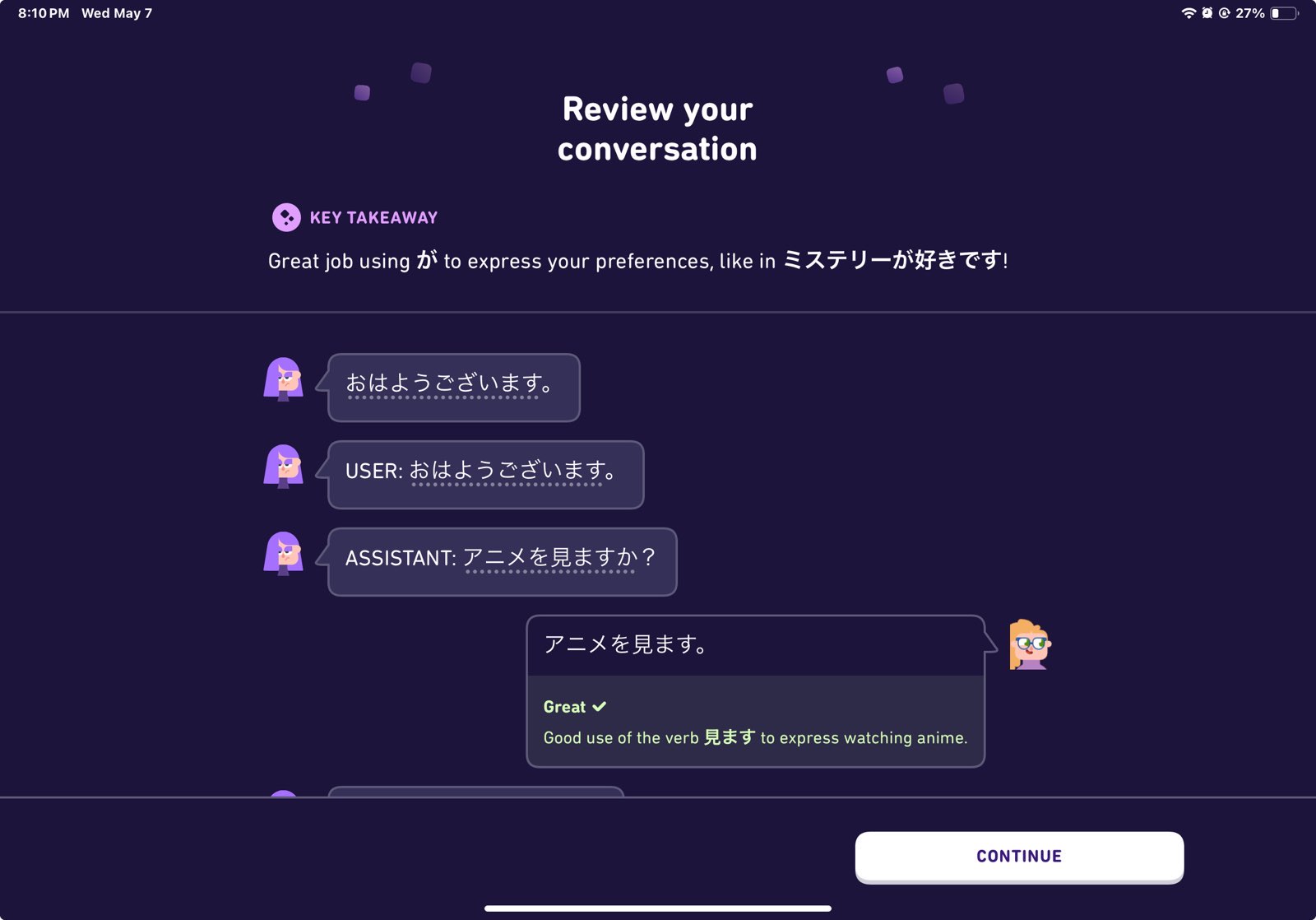

[1] "{{- if .System }}{{ .System }}{{ end }}\n{{- range $i, $_ := .Messages }}\n{{- $last := eq (len (slice $.Messages $i)) 1}}\n{{- if eq .Role \"user\" }}<|User|>{{ .Content }}\n{{- else if eq .Role \"assistant\" }}<|Assistant|>{{ .Content }}{{- if not $last }}<|end▁of▁sentence|>{{- end }}\n{{- end }}\n{{- if and $last (ne .Role \"assistant\") }}<|Assistant|>{{- end }}\n{{- end }}"Templates are supposed to be hidden from the user, but sometimes, the templates escape!

The “USER:” and “ASSISTANT:” are part of the template used to build the chat response (see above).

This is probably because hidden characters slipped into the stream of tokens and confused the logic that decides what the user sees. The “<|” and “|>” tokens in the templates shown above are supposed to prevent that…

After templating, the prompt is given to a predictive text model. The text model draws a random sample from a conditional probability distribution for the next token \(t_{n+1}\) given the tokens that came before it, as expressed in Equation 10.1.

\[ p(t_{n+1} \mid t_n, t_{n-1}, \ldots, t_1) \tag{10.1}\]

- Here the | indicates that the values to its right are known to have happened, so the probability of what is on its left is “conditioned” on those occurring.

The insight that makes this possible is a clever application of “statistical bootstrapping”, which lets us estimate this probability from far less data than would otherwise be needed.

- Once trained, you can just play with the distribution that was found by requesting samples from it. That is what ollamar::generate does.
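To make "requesting samples from a conditional distribution" concrete, here is a toy sketch in base R. Everything in it is hypothetical: the probability table is invented, and it conditions only on the single most recent token, whereas Equation 10.1 conditions on all of the preceding tokens. A real LLM computes these probabilities with a neural network instead of a lookup table.

```r
set.seed(1) # only so the sketch is repeatable

# Hypothetical toy distribution p(next token | previous token); numbers are made up.
next_token_probs <- list(
  What = c(is = 0.6, are = 0.3, does = 0.1),
  is   = c(the = 0.7, a = 0.3),
  are  = c(the = 0.5, you = 0.5),
  does = c(it = 1.0)
)

# Draw one next token given the most recent token.
sample_next <- function(previous) {
  probs <- next_token_probs[[previous]]
  sample(names(probs), size = 1, prob = probs)
}

# Generate by sampling one token at a time, feeding each sample back in.
# The toy table only covers two steps after "What".
generate_toy <- function(start, n_tokens) {
  tokens <- start
  for (i in seq_len(n_tokens)) {
    tokens <- c(tokens, sample_next(tokens[length(tokens)]))
  }
  paste(tokens, collapse = " ")
}

generate_toy("What", 2)
```

Removing the set.seed call and running generate_toy several times gives different continuations, which is the same behavior the real model shows.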

We can remove the templating process to see how the predictive model works on its own by using the raw = TRUE option.

When you do that, what you pass in the prompt option is what the predictive model sees, without passing through the template first. This has the effect of taking the LLM out of chat bot mode.

Make sure to also pass the num_predict option to specify how many output tokens you want, or it may run for a long time.

For instance …

[1] "ware Arena is a 6,000-seat multi"

The predictive model does random sampling, so it doesn’t give you the same response each time you run it.

library(tidyverse) # tibble(), mutate(), map2_chr()
library(ollamar)   # generate()

tests_to_run <- 5

tibble(
  model = rep("llama3", tests_to_run),
  prompt = rep("What", tests_to_run)
) |>
  mutate(response = map2_chr(model, prompt, function(x, y) {
    # raw = TRUE bypasses the chat template; num_predict caps the output tokens
    generate(x, y, raw = TRUE, num_predict = 5, output = "text")
  }))

# A tibble: 5 × 3
  model  prompt response
  <chr>  <chr>  <chr>
1 llama3 What   " does it mean to be"
2 llama3 What   " are the most common types"
3 llama3 What   " is the significance of the"
4 llama3 What   " is the best way to"
5 llama3 What   " is the main difference between"

You can control the randomness level with the temperature parameter.

- Turning it down reduces the randomness.

- Usually, temperature=0 means the model is deterministic: no randomness, so only the most likely response is produced… (“Most likely” according to the model, which can be a bit strange!)
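One common way temperature is applied is to divide the model's raw scores by the temperature before turning them into probabilities. The sketch below assumes that scheme; the scores and the softmax_with_temperature helper are invented for illustration, and the source does not say exactly how Ollama implements it.

```r
# Hypothetical raw scores (logits) for four candidate next tokens; values are made up.
logits <- c(is = 2.0, are = 1.5, does = 1.0, about = 0.2)

# Convert scores to probabilities, dividing by the temperature first.
softmax_with_temperature <- function(logits, temperature) {
  scaled <- logits / temperature
  weights <- exp(scaled - max(scaled)) # subtract the max for numerical stability
  weights / sum(weights)
}

round(softmax_with_temperature(logits, temperature = 1.0), 3)
round(softmax_with_temperature(logits, temperature = 0.1), 3)

# A temperature of 0 cannot be divided by, so it is treated as "always pick the top score".
names(logits)[which.max(logits)]
```

At the lower temperature nearly all of the probability lands on the highest-scoring token, which is why turning the temperature down makes the responses more repeatable.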

tests_to_run <- 5

tibble(
  model = rep("llama3", tests_to_run),
  prompt = rep("What", tests_to_run)
) |>
  mutate(response = map2_chr(model, prompt, function(x, y) {
    # temperature = 0 asks for the most likely continuation every time
    generate(x, y, raw = TRUE, num_predict = 5, temperature = 0, output = "text")
  }))

# A tibble: 5 × 3
  model  prompt response
  <chr>  <chr>  <chr>
1 llama3 What   " is the best way to"
2 llama3 What   " is the best way to"
3 llama3 What   " is the best way to"
4 llama3 What   " is the best way to"
5 llama3 What   " is the best way to"

10.5 Basic text cleanup

LLMs are a convenient source of raw material for natural language processing methods. Let’s ask one of the LLMs to write us a poem to play with!

Here’s the poem it made. We are using base R syntax because the LLM tried to format the text a bit. The cat function just prints out the text as it comes…

Fairest beauty, with thy radiant face,

Like sunrise on the horizon's golden space,

Thou dost arise and with each passing day

Illuminate the world's dark, dreary place.

Thy eyes, like stars that shine with gentle might,

Do light the path for wanderers in flight;

And though thou art a fleeting glance of time,

Thou dost enthrall my heart with thy sweet prime.

For in thy presence, all my cares do cease,

Like autumn leaves that rustle to release;

My thoughts, once tangled like a knotted vine,

Unfold and blossom as thy beauty shines.

But alas, fair one, thou art not for me,

A mortal man, bound by mortality.

Note: A traditional Shakespearean sonnet consists of 14 lines, with a rhyme scheme of ABAB CDCD EFEF GG. The poem is written in iambic pentameter, with five feet (syllables) per line, and a consistent pattern of stressed and unstressed syllables.

The formatting turns out to be unhelpful if you want to study word usage. So let’s strip out all the punctuation, flatten the case, and make each word a single row.
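A minimal sketch of that step, using unnest_tokens from {tidytext}. The poem_text string is a hypothetical stand-in holding just the first two lines of the poem, and the input column name text is an arbitrary choice for this sketch.

```r
library(dplyr)    # tibble() and the pipe-friendly verbs
library(tidytext) # unnest_tokens()

# Hypothetical stand-in: two lines of the poem stored as a single string.
poem_text <- "Fairest beauty, with thy radiant face,\nLike sunrise on the horizon's golden space,"

poem_tidied <- tibble(text = poem_text) |>
  unnest_tokens(word, text) # output column is `word`, input column is `text`

poem_tidied
```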

Have a look at the resulting data frame! You’ll notice that there’s one column, called word.

- If you wanted to call the column something else, you’d replace the word argument in the unnest_tokens call with whatever you wanted to call it.

- But most of the tidy text mining tools expect word as the column of words, so we’ll use that.

Note that unnest_tokens did a few other things as well. Can you figure out what these are?

OK, let’s count the words! You can do this in several ways depending on what you want to see.
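Two such counts can be sketched with count from {dplyr}. The small poem_tidied table here is a hypothetical stand-in with one word per row; the two printouts that follow in the text look like an alphabetical count and a frequency-sorted count of the full poem.

```r
library(dplyr)

# Hypothetical stand-in for poem_tidied: one word per row.
poem_tidied <- tibble(word = c("thy", "a", "with", "a", "thy", "beauty"))

# One row per distinct word, in alphabetical order (the default).
poem_tidied |> count(word)

# The same counts with the most frequent words first.
poem_tidied |> count(word, sort = TRUE)
```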

# A tibble: 106 × 2
   word       n
   <chr>  <int>
 1 14         1
 2 a          6
 3 abab       1
 4 alas       1
 5 all        1
 6 and        5
 7 arise      1
 8 art        2
 9 as         1
10 autumn     1
# ℹ 96 more rows

or try

# A tibble: 106 × 2
   word      n
   <chr> <int>
 1 a         6
 2 with      6
 3 and       5
 4 thy       5
 5 like      4
 6 of        4
 7 the       4
 8 thou      4
 9 for       3
10 in        3
# ℹ 96 more rows

Look closely at the word frequencies you just produced. Can you explain why some words are more common?

- Some of them just aren’t very informative, since they’re words like “my”, “a”, and such.

- Linguists call these “stop words,” and we’d like to get rid of them for most of our analysis.

- Now, an important question is whether you want to get rid of them for good, or only set them aside for a particular analysis. Probably the latter, I’m thinking, because there might be some situations where they’re useful. Hold that thought.

One of the things loaded when you brought in the {tidytext} library was a list of modern English stop words in the table stop_words.

# A tibble: 1,149 × 2
   word        lexicon
   <chr>       <chr>
 1 a           SMART
 2 a's         SMART
 3 able        SMART
 4 about       SMART
 5 above       SMART
 6 according   SMART
 7 accordingly SMART
 8 across      SMART
 9 actually    SMART
10 after       SMART
# ℹ 1,139 more rows

Coming back to the task of removing stop words, what we have is a table poem_tidied, in which there is one column called word, and many rows, one for each word.

We want to remove (temporarily) each row that has a word that’s in the stop_words table.

- If you were writing this in Java or Python, you’d probably use a loop to do that.

- But R makes this process a snap with something called an anti-join. Specifically, an anti-join takes two tables and removes every row of the first table that has a match in the second table.

- The match is made on two columns – usually called keys – one from each table. (As you might expect, there’s also a join that puts two tables together.)

Aside: if you read the documentation for anti_join, you’ll probably find that it’s a bit mysterious. That’s because anti_join is a generic function, and can do lots of other matching rules, and various other kinds of tricks too! It’s very useful!

OK, enough theory. Let’s get rid of those stop words!
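A minimal sketch of the anti-join, assuming the key column is called word in both tables (it is in stop_words, as printed above). The small poem_tidied table is a hypothetical stand-in so the snippet runs on its own.

```r
library(dplyr)
library(tidytext) # provides the stop_words table

# Hypothetical stand-in for poem_tidied: one word per row.
poem_tidied <- tibble(word = c("thy", "a", "beauty", "with", "thy", "and"))

poem_no_stop <- poem_tidied |>
  anti_join(stop_words, by = "word") |> # drop rows whose word appears in stop_words
  count(word, sort = TRUE)

poem_no_stop
```

Because anti_join returns a new table, poem_tidied itself is untouched, so the stop words are still available if a later analysis needs them.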

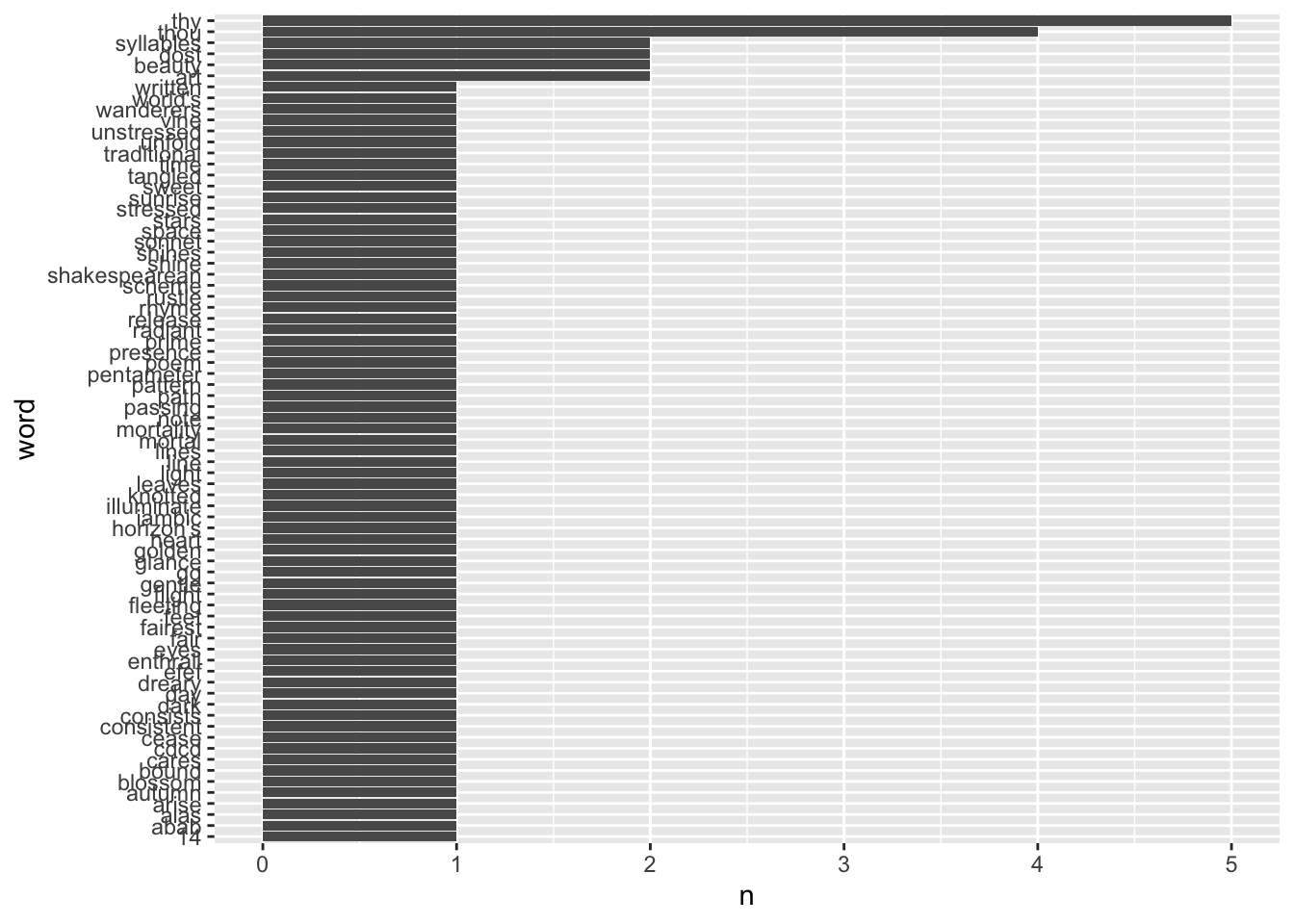

# A tibble: 75 × 2
   word          n
   <chr>     <int>
 1 thy           5
 2 thou          4
 3 art           2
 4 beauty        2
 5 dost          2
 6 syllables     2
 7 14            1
 8 abab          1
 9 alas          1
10 arise         1
# ℹ 65 more rows

I think you’ll agree that’s nicer!

Let’s turn our textual word frequency list into something more graphical.
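One way to do that is a bar chart of the most frequent words with {ggplot2}. This is a sketch, not necessarily the plot the chapter originally showed: the word_counts table reuses the first five counts printed above, and the names word_counts and top_words_plot are made up for the example.

```r
library(dplyr)
library(ggplot2)

# Word counts taken from the first rows of the stop-word-free output above.
word_counts <- tibble(
  word = c("thy", "thou", "art", "beauty", "dost"),
  n    = c(5, 4, 2, 2, 2)
)

top_words_plot <- word_counts |>
  slice_max(n, n = 10, with_ties = FALSE) |> # keep at most the 10 most frequent words
  ggplot(aes(x = n, y = reorder(word, n))) + # order the bars by frequency
  geom_col() +
  labs(x = "Count", y = "Word")

top_words_plot
```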

10.6 Sentiment analysis

The main idea of sentiment analysis is that certain words have emotional content: positive, negative, or otherwise.

If you look over the set of words in a document and compare how frequently these different sentiments appear, you might be able to identify whether the document is tragedy or comedy – say.

As you might imagine, this isn’t the end of the story, but it works better than you might expect!

Let’s get some poems written:

model <- "mistral" # assumed from the model column in the combined table further below

happy_text <- generate(
  model = model,
  prompt = "Write a long joyful poem",
  output = "df"
)

cat(happy_text$response[[1]])

In the grandeur of the dawn, where the sun paints the sky,

A symphony awakens, a song that never dies.

Each note is a whisper, each cadence a sigh,

Of nature's embrace, where life and love lie.

The dew-kissed flowers bloom with delight,

Dancing in the wind, bathed in the soft light.

Beneath the canopy of an azure quilt,

Lies a world where peace, joy, and beauty are diligent.

The chirping birds serenade the morn,

A melody that fills hearts with forlorn.

The whispering wind through the trees does sigh,

A lullaby of joy, a sweet bye-bye.

The sun ascends higher, a golden sphere,

Casting its warmth on everything we hold dear.

Fields sway in rhythm to the gentle breeze,

A world where worries cease, and dreams freeze.

Mountains stand tall with a silent grace,

Witnesses to time's eternal pace.

The river flows gently, cradled by earth,

A reflection of life, of its birth.

Ripples of hope upon its surface play,

In this moment, let us pause and say,

This is a world where joy abounds,

Where the echoes of laughter resound.

Let us cherish these moments so true,

A symphony of life, a beautiful view.

For in every sunrise, every morning's light,

Is a reminder that love's always right.

So let your heart dance to the joyful tune,

Bask in the warmth, under the afternoon moon.

Embrace the world with its vibrant hue,

A symphony of life, our sweet adieu.

sad_text <- generate(
  model = model,
  prompt = "Write a long sad poem",
  output = "df"
)

cat(sad_text$response[[1]])

Upon the canvas of twilight, where shadows dance and flee,

Lies a tale of sorrow, as old as time itself, I weave.

A heart once brimming with laughter, now echoes only sighs,

In a world that's grown colder, under perpetual goodbyes.

Beneath the silver moonlight, where dreams and reality meet,

Lingers a soul entwined in grief, like an endless reoccurring beat.

A love once vibrant and passionate, now shrouded in silence and gloom,

Is but a distant memory, lost in the depths of the brook.

The whispers of the wind carry tales of years gone astray,

Echoes of laughter that once filled every corner of the day.

Now there is only quietude, as life moves on without pause,

A world bereft of warmth, where love's flame has lost its cause.

In the garden of reminiscence, where memories hold their reign,

Buds of hope and dreams that once flourished, now wither in pain.

The flowers of joy have wilted, choked by tears and despair,

In the soil of forgotten love, they no longer dare to care.

But as I stand in the twilight, watching the sun set so low,

A glimmer of hope flickers, amidst sorrow's constant flow.

For even in the darkest night, stars continue to shine,

And love, though it may falter, shall never truly decline.

So let me not dwell in sorrow, nor let my spirit decay,

In the heart of the moonlight, I find a new pathway.

To carry on, to remember, and heal the wounds within,

A testament to love that refuses to diminish or sin.

And as the stars guide me through the endless night's expanse,

I'll find my way back to the warmth of a new embrace.

The tale of sorrow now fades, giving birth to a brighter day,

Wherein love finds its voice anew, in a world that listens and prays.

somber_text <- generate(
  model = model,
  prompt = "Write a long somber poem",
  output = "df"
)

cat(somber_text$response[[1]])

Upon the canvas of twilight, where shadows dance and play,

A melody echoes softly, as if from another day.

The whispers of the wind carry tales untold and old,

Of love that once was passion, now only in memory hold.

Beneath the silent starlight, under the weeping willow tree,

A single tear descends gently, a symbol of remorse and glee.

The moonlight touches tenderly upon the world below,

Caressing each memory with its silvered, gentle glow.

In this place where silence reigns, and dreams are born and die,

I stand upon the precipice of yesterday and cry.

A symphony of sorrow, a requiem for lost time,

A soliloquy of longing that only echoes rhyme.

The echoes whisper secrets, the ghosts of days gone by,

Of laughter and tears, of joy, of pain, beneath the starry sky.

In this somber twilight, my heart is heavy with regret,

For bridges burned, for love not found, for opportunities forgotten.

Yet in the darkness, I find solace, a peace that's all my own,

A realization of what truly matters, as my tears have flown.

In this moment of stillness, where the past and future merge,

I close my eyes and let go, allowing life to re-inverse.

For tomorrow is a promise, a new dawn yet untold,

A canvas upon which to paint the masterpiece that is my soul.

So let me stand beneath the stars, let me dance in twilight's glow,

Let me embrace my memories, for I know the way they grow.

For though I walk alone through this vast and endless night,

I carry within me a love that will forever burn so bright.

And let’s tidy them up and pack them together into a single table:

poems <- bind_rows(
  happy_text |>
    unnest_tokens(word, response) |>
    anti_join(stop_words, by = "word") |> # name the key column explicitly
    mutate(poem = "happy"),
  sad_text |>
    unnest_tokens(word, response) |>
    anti_join(stop_words, by = "word") |>
    mutate(poem = "sad"),
  somber_text |>
    unnest_tokens(word, response) |>
    anti_join(stop_words, by = "word") |>
    mutate(poem = "somber")
)

poems

# A tibble: 397 × 4
   model   created_at                  word     poem
   <chr>   <chr>                       <chr>    <chr>
 1 mistral 2025-05-23T21:47:41.387884Z grandeur happy
 2 mistral 2025-05-23T21:47:41.387884Z dawn     happy
 3 mistral 2025-05-23T21:47:41.387884Z sun      happy
 4 mistral 2025-05-23T21:47:41.387884Z paints   happy
 5 mistral 2025-05-23T21:47:41.387884Z sky      happy
 6 mistral 2025-05-23T21:47:41.387884Z symphony happy
 7 mistral 2025-05-23T21:47:41.387884Z awakens  happy
 8 mistral 2025-05-23T21:47:41.387884Z song     happy
 9 mistral 2025-05-23T21:47:41.387884Z dies     happy
10 mistral 2025-05-23T21:47:41.387884Z note     happy
# ℹ 387 more rows

The way sentiment analysis works is that there’s a list of words, each tagged with a positive or negative “sentiment” (supposed to mean something like emotional content). There are standard lists for this… and the tidytext library has several.

For instance, the plot below uses the “bing” list, stored in a table called bing_sentiments.

Have a look! The lists you can try are c("bing","afinn","nrc","loughran").
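A short sketch of loading one of these lists with get_sentiments from {tidytext} and joining it onto some words. The words table is a hypothetical stand-in for a tidied poem. The "bing" list ships with {tidytext}; the other lists may need to be downloaded the first time they are requested.

```r
library(dplyr)
library(tidytext)

# The "bing" list: one row per word, tagged as positive or negative.
bing_sentiments <- get_sentiments("bing")

# Hypothetical stand-in for a tidied poem.
words <- tibble(word = c("joy", "sorrow", "sun", "despair"))

words |>
  inner_join(bing_sentiments, by = "word") |> # keeps only words found in the list
  count(sentiment)
```

Words that do not appear in the list simply drop out of the inner join, so the counts only reflect the words the list knows about.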

We can just inner_join these sentiments into our poems, once tidied up – adding in a column for sentiment for each word:

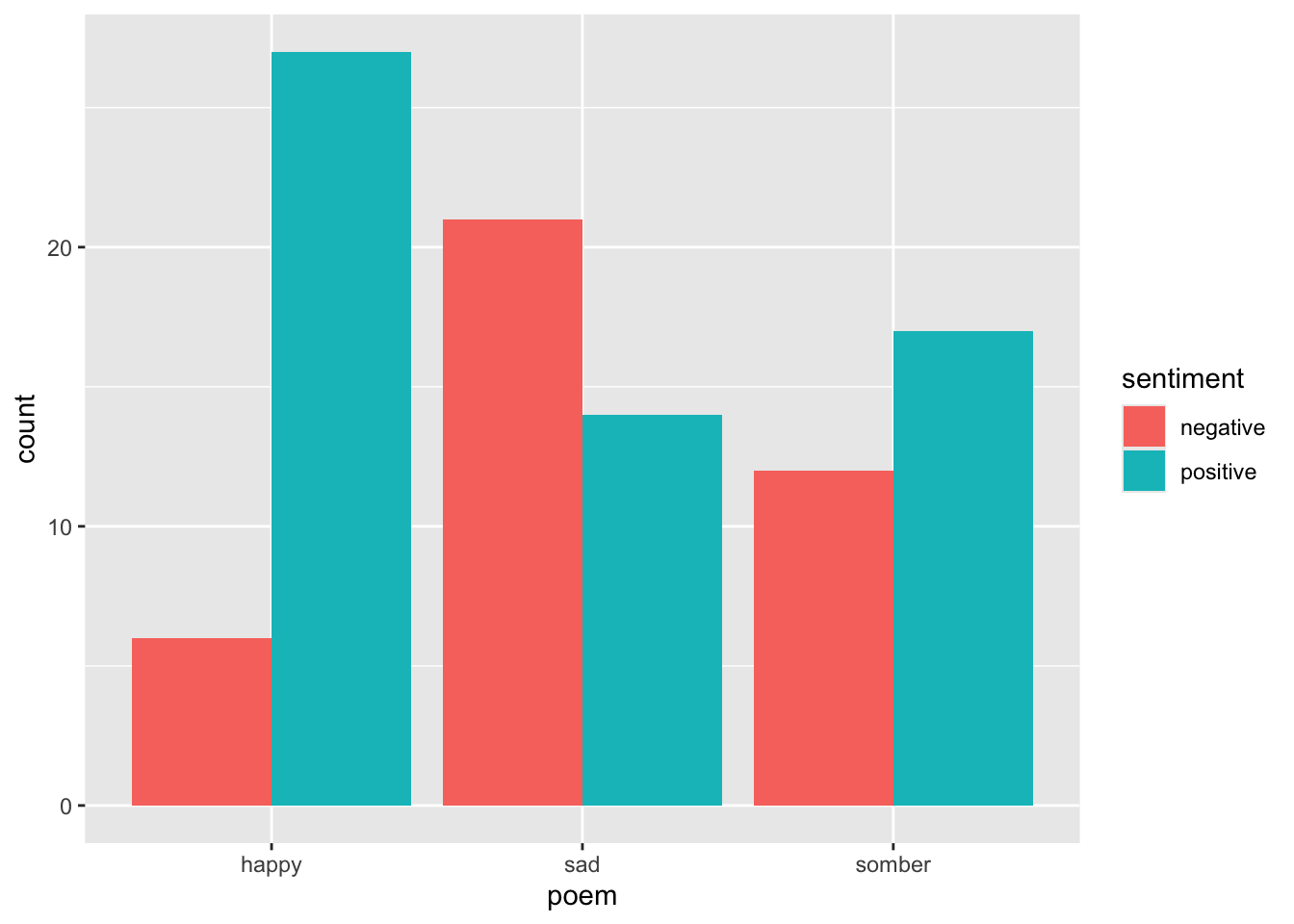

poems |>

inner_join(bing_sentiments) |>

group_by(poem) |>

ggplot(aes(x = poem, fill = sentiment)) +

geom_bar(position = "dodge")

We can see (perhaps not totally clearly) that changing one word in the prompt had the effect of altering the overall word choice in the poems!

10.7 Exercise

Note: Posit Cloud currently does not support ollama, and you’ll need more memory than the free account provides, so you have to install it locally. This exercise may stress your computer! It works best if you have an NVIDIA GPU and at least 16 GB of CPU RAM.

Download and install

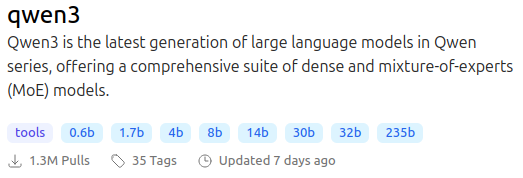

ollamaandollamaron your computer.Get an interesting model. Look over the index at https://ollama.com/library to pick a good one… but beware, the latest-and-greatest model will probably be really slow. There are little tags like these:

The tags on the bottom tell you the sizes of the models in billions of parameters (roughly GB of memory). For instance if you wanted the 4b model, you would use the following command:

If all else fails, you can play with a model in the gpt2 family, since they are quite small. This one will almost certainly run for you. It even runs on my phone!

Since it has no prompt template, GPT2 is just for text completion. You do not need to do

raw=TRUE. It is also comically bad at text generation, so have fun with it!

Play around with the model with

generate()to get an idea of how it responds, including how fast it responds.Look at the template for the model.

Try the model without the template!

Make a longer text using your model.

Produce a histogram of the top 10 most frequent words and their frequencies in the text, with stop words and any header or footer removed.

Use sentiment analysis to see if you can tune the sentiment by adjusting a prompt for your text.