8 Getting Data from SQL Databases

database, relational, sql, duckdb, dbeaver, duckplyr

The code chunks for SQL and R have different colors in this chapter.

- Code chunks for SQL use this color.

- Code chunks for R use this color.

8.1 Introduction

8.1.1 Learning Outcomes

- Understand the organization of relational databases.

- Use Structured Query Language (SQL) to access and manipulate data in SQL databases.

- Use the {dbplyr} package to access and manipulate data in SQL databases.

8.1.2 References:

- R for Data Science 2nd edition Chapter 25 Wickham, Cetinkaya-Rundel, and Grolemund (2023)

- {DBI} package R Special Interest Group on Databases (R-SIG-DB), Wickham, and Muller (2022)

- {odbc} package Hester, Wickham, and Gjoneski (2023)

- {duckdb} package Mühleisen et al. (2023)

- DuckDB Database Management System DuckDB (2023c)

- DuckDB Documentation

- W3 Schools SQL Schools (2023)

- DBeaver SQL IDE DuckDB (2023a)

- DBeaver General User Guide Team (2003)

- R Markdown: Definitive Guide Xie, Alllaire, and Grolemund (2023) Section 2.7.3 on SQL

- {dbplyr} package Wickham, Girlich, and Ruiz (2023)

- Writing SQL with dbplyr Posit (2023a)

- {starwarsdb} package Aden-Buie (2020)

- Parquet File Format Documentation

8.1.2.1 Other References

- Posit Solutions Best Practices in Working with Databases Posit (2023b)

- MariaDB Tutorial Database Theory Foundation (2023b)

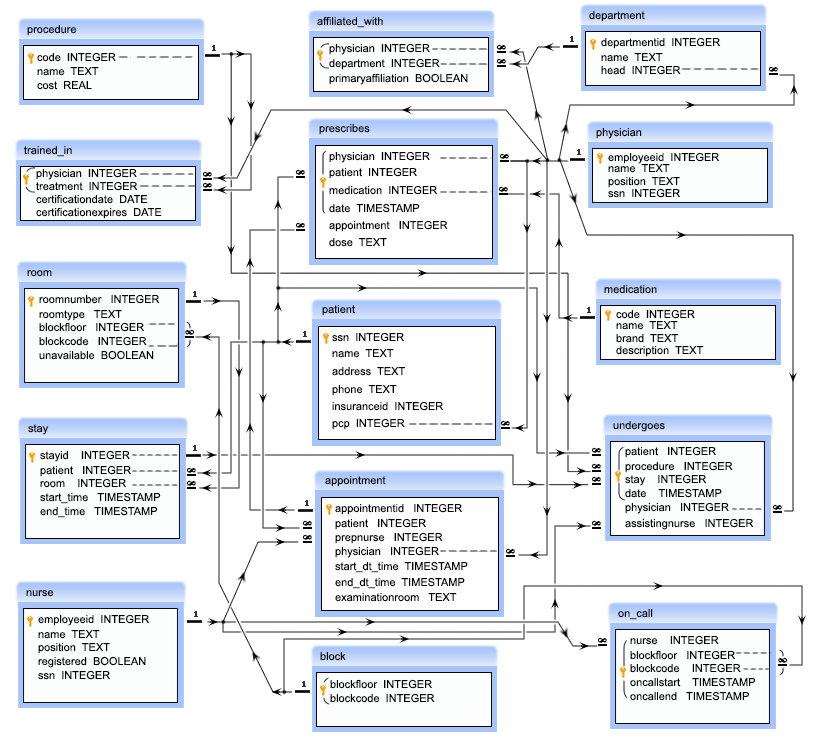

- Customizing Keyboard Shortcuts in the RStudio IDE Ushey (2023)

- Code Snippets in the RStudio IDE Allaire (2023)

- SQL Injection kingthorin and Foundation (2023)

- How to Version Control your SQL Pullum (Swartz) (2020)

- Mermaid Diagramming and Charting Tool mermaid.js (2023)

- DuckDB Installation DuckDB (2023b)

- SQL Style Guide Holywell (2023)

- SQL Style Guide for GitLab Mestel and Bright (2023)

- Basic SQL Debugging Foundation (2023a)

- Best Practices for Writing SQL Queries Metabase (2023)

- 7 Bad Practices to Avoid When Writing SQL Queries for Better Performance Allam (2023)

- 3 Ways to Detect Slow Queries in PostgreSQL Schönig (2018)

- PostgreSQL Documentation on Using EXPLAIN Group (2023)

- SQL Performance Tuning khushboogoyal499 (2022)

8.2 A Brief History of Databases

People and organizations have been collecting data for centuries. As early as the 21st century BCE, people were using clay tablets to make records of farmland and grain production. Records were kept as individual files (clay tablets) that were eventually organized based on conceptual model of the data. That model was typically a hierarchical set of fixed attributes of the records, e.g., subject, date, author, etc. for tracking records in a filing system.

It wasn’t until the 1960s that computerized “databases” began to appear. Early computerized databases focused on storing and retrieving individual records which were organized based on existing data models which used the attributes of the tables and records. Data were stored in tables with rows of values and columns for attributes. Users could find the desired table and row by navigating through the hierarchy. This model resulted in larger and larger tables with many redundant fields as users tried to integrate data at the table level. As an example, a record of a purchase could include all of the details of the purchase to include all of data on the buyer and seller such as name, address, and telephone number. Every record of every purchase by a buyer could include their name, address, and telephone number.

Database Management Systems (DBMS) were developed as the interface between the system user and the raw data in the records. DBMS used the data model to manage the physical, digital storage of the data and provide the navigation to find the right table and record. Users could now retrieve and update records electronically. However, computing and storage was expensive so researchers were looking for ways to reduce the redundant storage and inflexible perspective of the data inherent in the hierarchical data model.

In 1970, IBM researcher E.F. Codd published what became a landmark paper, A relational model of data for large shared data banks. Codd (1970) Codd proposed replacing the fixed hierarchy and its large tables with much smaller tables where the data model now focused on defining relationships among the tables instead of navigating across a hierarchy of attributes or parent child relationships among tables. The relational database model is now ubiquitous for storing extremely large data sets.

A strength and a drawback of the relational database model is the capability to manage not just the data, but all the relationships across the tables of data. This makes relational databases great for working with structured data, where the relationships are known ahead of time and built into the database model. The drawback is it takes time and processing power to enforce those relationships and structure. Thus, other approaches such as Apache HADOOP have been developed to capture and manage the variety, velocity, and volume of data associated with “Big Data” sources, e.g., capturing all the meta data and data associated with billions of transactions a day on a social media site.

For the relational database model to work, it needed a corresponding high-level language for implementation. Users had to be able to create databases and manage the life cycle of their databases and the data they contained. This need led to the development of what were known as “query languages” which eventually evolved into what is now called Structured Query Language or SQL (pronounced as “SEE-quil” or “ESS-q-l”). SQL began with a row-based approach to managing data in tables. Other languages e.g., No-SQL, developed a column-based approach to managing data. There are trade offs between the approaches. Many relational database developers create their own (proprietary) versions of SQL to be optimized for their database that incorporate features of both for speed and distributed processing. Apache has developed Apache Hive as a data warehouse system that sits atop HADOOP. “Hive allows users to read, write, and manage petabytes of data using SQL.”

Relational Databases and HADOOP/Hive-like approaches are not competitors as much as different solutions for different requirements.

This section will focus on the relational database methods and systems which use SQL.

8.3 Database Concepts and Terms

8.3.1 Database Tables

Relational databases use Relational DBMS (RDBMS) to keep track of large amounts of structured data in a single system.

A relational database uses rectangular tables, also called entities to organize its data by rows and/or columns.

Each table is typically designed to contain data on a single type or class of “entity”.

An entity can be a physical object such as a person, building, location, or sales order, or a concept such as this class or metadata on an object.

Each entity must have properties or attributes of interest.

- These become the names for the columns in the table.

- These are also known as the fields for the tables.

Each row in the table is also referred to as a record.

The intersection of row and column contains the value, the data, for that record for that field.

All of the values within a given field should be of the same data type. These include the basic types in R such as character, integer, double, or logical as well as complex objects such as pictures, videos, or other Binary Large Objects.

The tables are similar to R or Python data frames, but with some key differences.

- Database tables are stored on a drive so are not constrained, like R data frames, to having to fit into memory. They can have many columns and many millions of rows, as long as they fit on the drive.

- Unlike a data frame, a database field (column) may have a domain which limits the format and range of values in the field in addition to constraining the data type, e.g., the format for a telephone number may be

xx-xxx-xxx-xxxx. - Database tables are often designed so each record is unique (no duplicate rows) with rules the DBMS uses to enforce the uniqueness.

- Database tables are almost always indexed so each row has a unique value associated with it (behind the scenes).

- The index identifies each record from a physical perspective, much like one’s operating system indexes files for faster search and retrieval.

- The index is what allows a DBMS to find and retrieve records (rows) quickly.

Database designers use the term grain, to describe the conceptual definition of what one row represents or the level of granularity of the table.

In the NYC 13 flights database, the grain of the flights table is one row per individual flight.

- A developer might create a second table whose grain is the number of flights per airline per day between each origin and destination.

- That table would have a more aggregated grain than the original flights table.

Grain describes the level of detail stored in the table. It answers the question:

- What does a single row represent?

Understanding the grain helps identify which fields must be combined to uniquely identify a row.

- To check the grain, identify what one row represents by asking: “What real-world entity or event does each row describe?” (e.g., one flight, one order line, one airport-hour observation). That conceptual level of detail defines the grain.

- Test candidate columns for uniqueness using

COUNT(*)vsCOUNT(DISTINCT (col1, col2, ...)). - When those counts match, the column set defines the table’s grain.

Understanding the table’s grain is also essential when combining tables.

- Errors often occur when joining tables that operate at different levels of granularity.

- If the join condition does not align the grains properly, a join could generate many more rows may unexpectedly.

When using a join, always identify the grain of each table and whether the tables operate at the same or different levels of detail (aggregation/granularity).

8.3.2 Database Table Keys and Relationships

8.3.2.1 Primary, Composite, and Surrogate Keys

Most database tables are designed so that their grain **can be enforced by a primary key, a field (or set of fields) that uniquely identifies each record from a logical perspective across the database.*

- For example, a person’s social security number, which is expected to be unique to one person, could serve as a primary key.

If the grain requires multiple fields together to create a unique identifier, the developer defines a composite key,

- For example, using house number, street name, city, state, and zip code together might form a composite key.

When a composite key becomes large, cumbersome, or subject to change, designers often introduce a surrogate key, a newly created field (such as an auto-incrementing integer) that uniquely identifies each row but has no inherent business meaning.

- Designers may also create surrogate keys for tables with single field grains to abstract any meaning away from the field.

- This may be done to protect privacy or increase robustness of the data model in the event the original primary key’s structure changes over time.

- Surrogate keys are stable over time and help avoid issues when data values that form logical keys might change.

The presence of primary keys enables the RDBMS to define and enforce relationships between tables through foreign keys.

8.3.2.2 Foreign Keys

A common approach to defining relationships in a relational database is to use the primary key of one table as a foreign key in another table. This practice clarifies the logical connections between entities and enables the database to enforce those relationships.

- Adding the primary key from one table as a field in a related table is referred to adding a foreign key in the second table and that added field becomes the foreign key.

- The table containing the primary key is called the parent table.

- The table that references the parent table through a foreign key is called the child table.

Example: Consider an organization tracking data about purchase orders, buyers, products, and the companies that employ the buyers. A database designer might create four related tables:

ordersstores details about each purchase.- Fields: order_id, order_date, buyer_id, product_id, quantity, purchase_price.

- Primary key: order_id.

- Foreign keys: - buyer_id references buyers (buyer_id) - product_id references products (product_id)

buyersstores information about customers making purchases.- Fields: buyer_id, buyer_name, company_id, email, phone, street_address, city, state, postal_code, country.

- Primary key: buyer_id.

- Foreign key:

- company_id references companies (company_id)

productsstores details about products available for sale.- Fields: product_id, product_name, category, weight, volume, unit_price, default_shipper_id.

- Primary key: product_id.

- Foreign key:

- default_shipper_id references companies (company_id)

companiesstores information about companies that employ buyers.- Fields: company_id, company_name, company_address, company_city, company_state, company_country.

- Primary key: company_id.

Each table represents a distinct entity (orders, buyers, products, and companies) and each has a primary key that uniquely identifies its records and all tables but companies contain at least one a foreign key.

For example:

- The

buyerstable includes a foreign key company_id that references thecompaniestable. - The

productstable includes a foreign key default_shipper_id that also references thecompaniestable. - The

orderstable references both thebuyersandproductstables through their primary keys.

If all of this data were stored in a single table, details about buyers, products, and companies would be duplicated many times for each new order. Establishing relationships among multiple tables minimizes redundancy and improves data integrity.

Relational Database Management Systems (RDBMS) use referential integrity constraints to ensure foreign key values in child tables correspond to valid primary key values in parent tables.

For example, if a user attempts to insert or update an orders record with a buyer_id that does not exist in the buyers table, the database will reject the operation.

- The use of foreign keys in referential integrity constraints ensures every order references a valid company, preventing orphaned records and keeping the data consistent across related tables.

Assume the companies table has just two records

| company_id | company_name | company_country |

|---|---|---|

| 1001 | Global Imports LLC | USA |

| 1002 | EuroTrade GmbH | Germany |

If a user attempts to insert an order with a company_id, that does not exist in the companies table, say 999, or tries to update an order to have company_id = 999, the database will reject the operation to maintain referential integrity.

The relationship between parent and child tables—defined through primary keys and foreign keys—is a logical relationship and is not affected by how tables are joined in SQL queries.

Foreign keys don’t occur by accident. They define relationships between entities in a database, e.g., orders linked to buyers, or buyers linked to companies, that reflect how the business actually operates.

- They naturally indicate what kinds of questions or analyses the database is designed to support.

For example:

- Having

orders.buyer_idreferencebuyers.buyer_id, makes it easy to ask: “How many orders has each buyer placed this month?” - Having

orders.product_idreferenceproducts.product_id, makes it easy to ask: “Which products are selling the most?” - Having the foreign key

buyers.company_idreferencecompanies.company_idsupports queries like: “Which companies are our largest customers?” - Having

products.default_shipper_idreferencecompanies.company_id, makes it easy to ask: “Which shipping companies handle the most product deliveries?”

In short, foreign keys reveal the logical connections between data that support business questions such as performance summaries, customer behavior analysis, supplier tracking, and sales trends.

They don’t just enforce integrity, they also shape the analytical structure of the database.

8.3.3 ER Diagrams

Tables and their relationships are usually described in the database schema.

They can be depicted using class or entity-relationship diagrams to show the relationships between tables, fields and keys.

- E-R diagrams can include additional information such as constraints and type of relationship, e.g., one-to-one, or one-to-many.

E-R diagrams can serve as a useful reference for understanding the structure of a large database and designing queries.

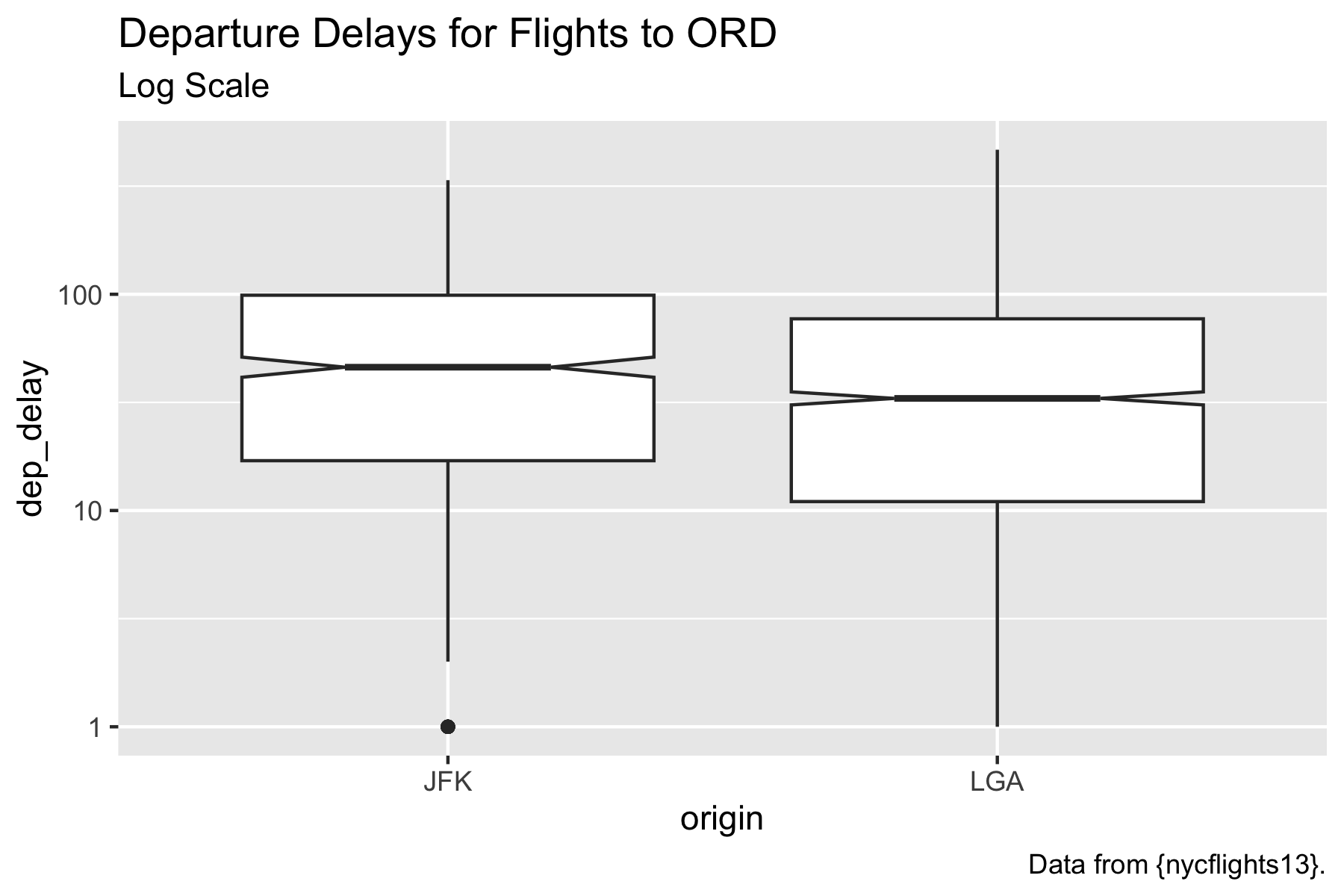

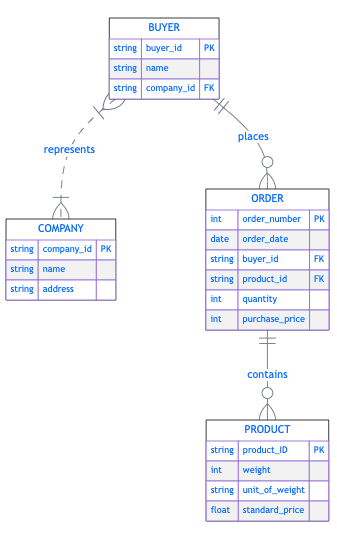

Figure 8.1 is an example of a simplified ER diagram (created in Quarto using Mermaid) showing the relationships between the tables and the associated primary and foreign keys.

Figure 8.2 shows a different style of E-R diagram commonly used for database schema. The arrows link individual fields as primary and foreign keys for the tables and show the type of the relationships.

8.3.4 Bringing Tables Together

Now that we can see the database schema, we can find where the data is that we want.

Suppose we want to answer the question, how many orders were placed in the last month. We can do that with just the data in the ORDERS table.

However, suppose we want to answer the question how many orders were placed from buyers in the states of Virginia and Maryland last month. We would have to get data from both the ORDERS table and the BUYERS table.

We get data from multiple tables by temporarily combining tables using a SQL query that creates a JOIN between two or more tables.

Database joins are the same joins you can execute using {dplyr} verbs but the SQL syntax is slight different.

If you are using the same join over and over in your analysis. SQL allows you to create a database “View” and save it. Then in the future, you can query the “view” instead of having to rejoin the tables.

- This is analogous to writing an R function to create a data frame based on joins and querying the results of the function instead of rewriting the code to join the data frames.

8.3.5 Query Optimization in Database Systems

Database Management Systems (DBMS) do more than store data and execute queries, they also decide how to execute your query.

This process is known as query optimization.

When you write a SQL statement,

- You describe what result you want

- The database engine determines how to retrieve it efficiently.

8.3.5.1 Table Scans vs. Index Lookups

At the most basic level, the database has two broad strategies for locating rows:

- Table Scan (Sequential Scan)

- The database reads each row (or each relevant column in a columnar system) and checks whether it satisfies the condition.

- This is simple and reliable but can be expensive for large tables.

- Index Lookup

- If an index exists on a column used in a

WHERE,JOIN, orORDER BYclause, the database can use that index to jump directly to matching rows instead of scanning the entire table.

- If an index exists on a column used in a

Conceptually, an index acts like a pre-built mapping so it knows:

'JFK' → rows 1, 15, 208, 9042, ...

'LGA' → rows 2, 19, 203, ...Instead of checking every row to find origin = 'JFK', the engine can navigate directly to the relevant row locations.

Most databases automatically create indexes for:

- The PRIMARY KEY for each table.

- UNIQUEness constraints the designer imposes on a table

Additional indexes must be created explicitly using:

CREATE INDEX idx_column

ON table_name(column_name);8.3.5.2 How the Optimizer Chooses

Modern RDBMS use a “cost-based” query optimizer.

- Cost is not about dollars, it is a statistical estimate of system effort.

That effort is primarily measured in terms of:

- Disk I/O (reading and writing data): usually the most “expensive”

- Memory usage: certain queries require large working memory.

- CPU operations: more rows –> more CPU cycles.

- Network transfer: more rows/columns = more bytes transmitted to the front-end client

- Temporary storage usage

- Concurrency impact

The optimizer chooses the plan with the lowest estimated total resource usage.

The optimizer evaluates:

- Table size

- Available indexes

- Estimated number of matching rows

- Join cardinality, e.g., one-to-one, one-to-many, etc.

- [Memory availability]

It then chooses the execution plan with the “lowest estimated cost (resource usage).”

- Importantly: Using an index is not always optimal.

- If a large percentage of rows match a condition, scanning the table may be faster than using the index.

8.3.5.3 Analytical vs. Transactional Systems

In transactional (row-based) systems:

- Users typically use queries focused on retrieving a small numbers of rows.

- Indexes are critical for fast point look-ups.

In analytical (columnar) systems like DuckDB:

- Users often use queries focused on retrieving many rows.

- Thus the DBMS has optimized how it does Full column scans.

- Selecting fewer columns reduces I/O dramatically.

- Indexes are less central than in traditional OLTP systems.

Understanding this distinction explains why some queries scan entire tables and why that is not necessarily bad in an analytical (columnar) database.

8.3.5.4 Why This Matters

Knowing that databases choose between scans and index lookups helps explain:

- Why filtering early improves performance.

- Why

SELECT *increases input and output “costs” (I/O). - Why large joins can become slow.

- Why

ORDER BYandGROUP BYcan require additional memory and sorting.

You do not need to design execution plans manually, but understanding that the database is optimizing behind the scenes makes you a more effective SQL user.

8.3.6 Database Design

Designing databases is its own area of expertise.

A poorly designed database will be slow and have insufficient rules (safeguards) to ensure data integrity.

We are used to operating as single users of our data since it is all in the memory of our machine and we can do what we want.

- A database may have thousands of users at the same time trying to update or retrieve records.

- Only one user can update a record at a time so databases use Record Locking to prevent others from updating the record or even retrieving it while someone else is updating the record.

- RDBMS can enforce locking at higher levels such as the table or even the entire database which can delay user queries. See All about locking in SQL Server for more details.

Database designers make trade offs when designing tables to balance competing desires.

- Storage Efficiency: limit each table to a single entity to minimize the amount of redundant data. This can generate lots of tables.

- Maintenance Efficiency: The more tables you have, the fewer users might be trying to update the table at a time.

- Query Speed: Minimize the number of joins needed to make a query. This leads to fewer tables.

These trade offs were recognized by Codd in his original paper.

- Formal descriptions of the ways to design databases are known as Normal forms.

- The process for determining what form is best for an application is known as Database Normalization. For more info see The Trade-offs Between Database Normalization and Denormalization. Explorer (2023)

Thus database designers may create tables that have redundant data if the most common queries join fields from those tables.

They will use database performance testing to optimize the queries and the database design to get the optimal cost performance trade offs. They can use other approaches to support data integrity in addition to database normalization.

Don’t be surprised to see E-R diagrams that have redundant data in them.

In very large databases, designers often choose to physically partition tables, e.g., by month or year. This only affects how the data is stored, not the logical relationships between tables.

This is not the same construct as the

PARTITION BYclause in Section 8.19.When a query filters on the partition column, the database can skip entire partitions (a process called partition pruning), dramatically improving performance.

This affects speed and (in cloud systems) cost, but not the correctness of results.

Partitioning does not change:

- SQL syntax

- Query logic

- Result correctness

It only changes how efficiently the engine executes the query.

8.3.7 Relational Database Management Systems

8.3.7.1 Database Front and Back Ends

Databases have two main components.

- The Front-end user interface where users create code to generate queries and receive results.

- The Back-end server where the data is stored and the DBMS executes the queries to update or retrieve the data.

The Front-end and Back-end communicate through a Connection that uses an API for handling queries and data.

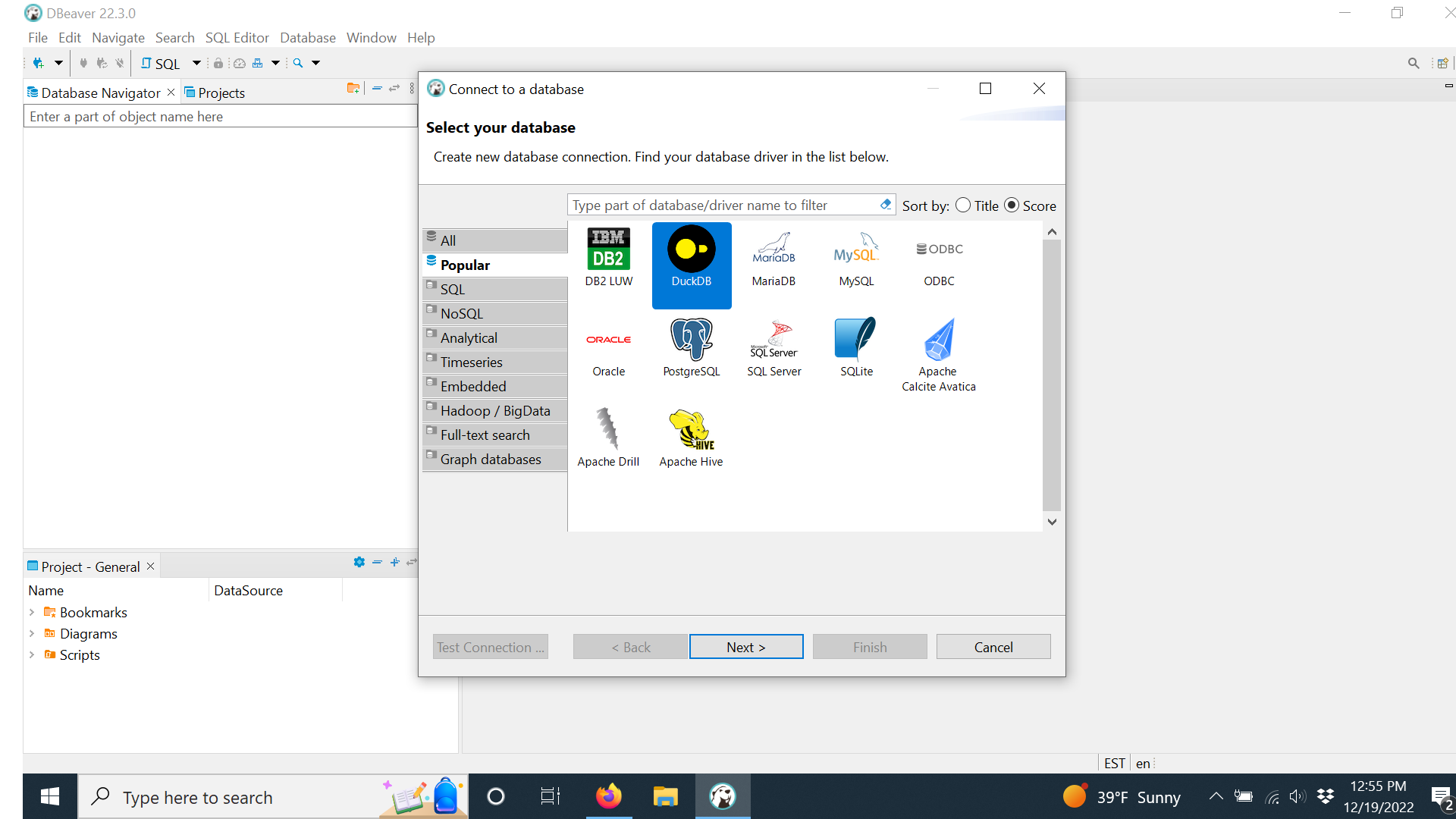

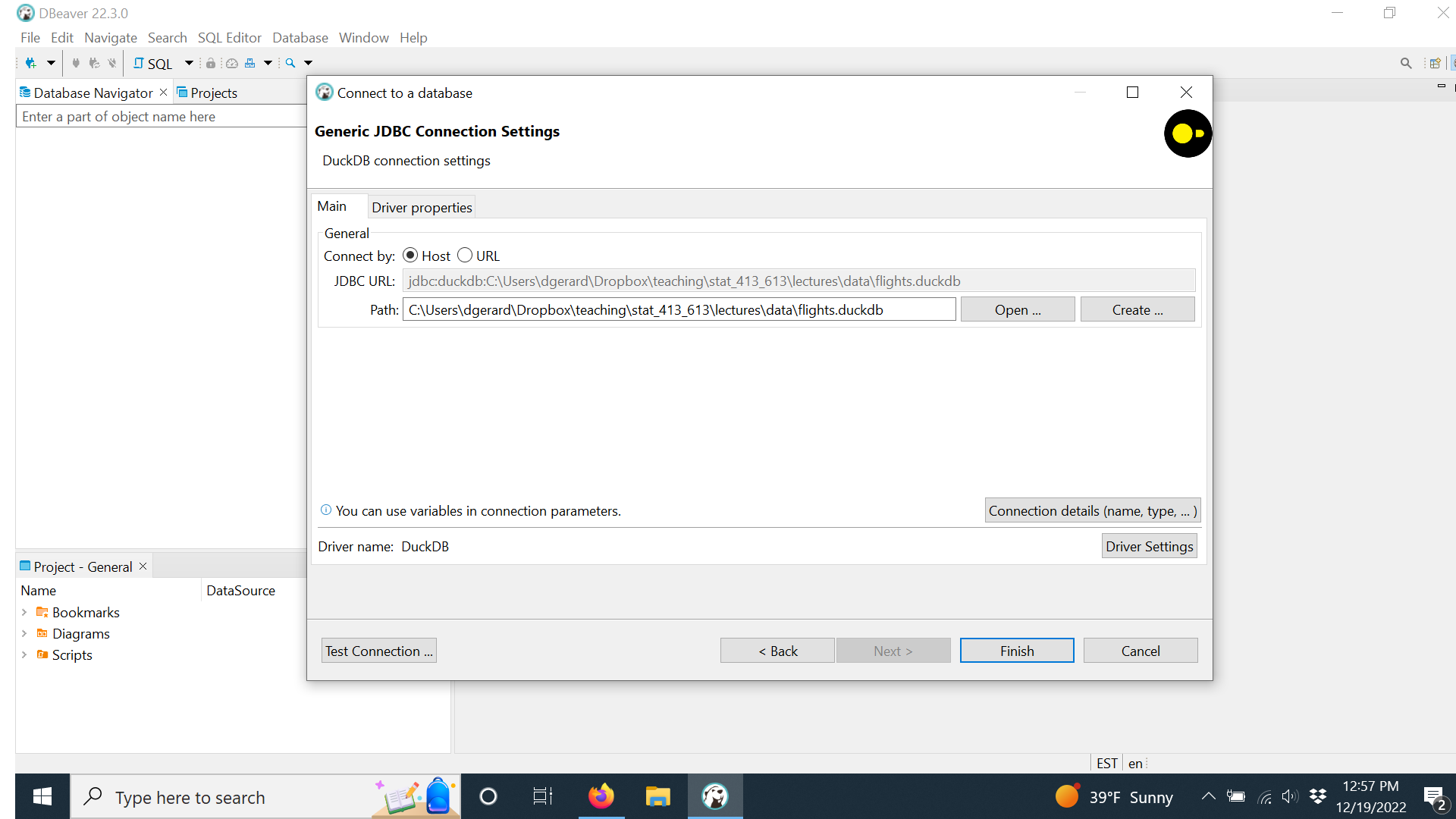

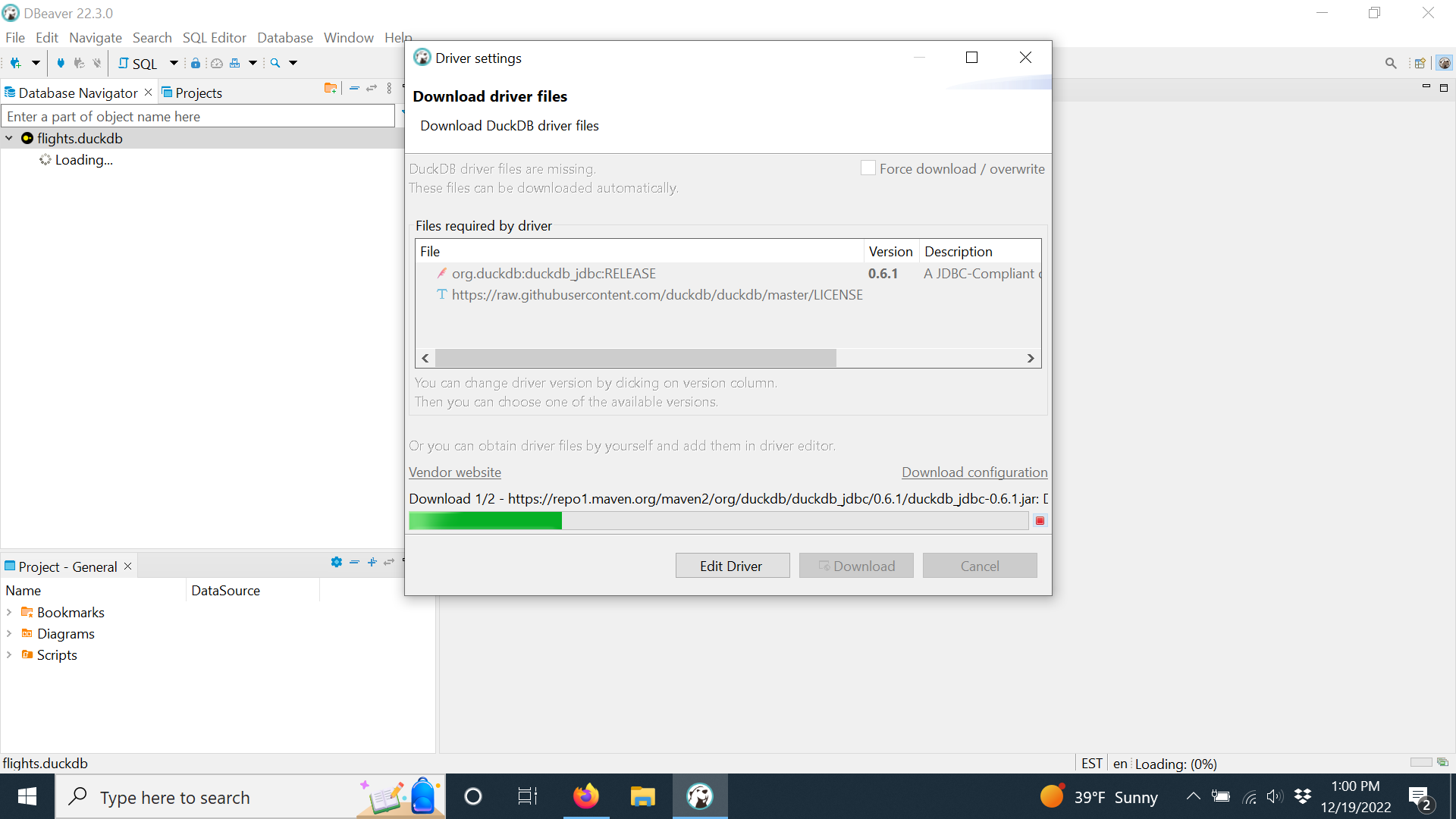

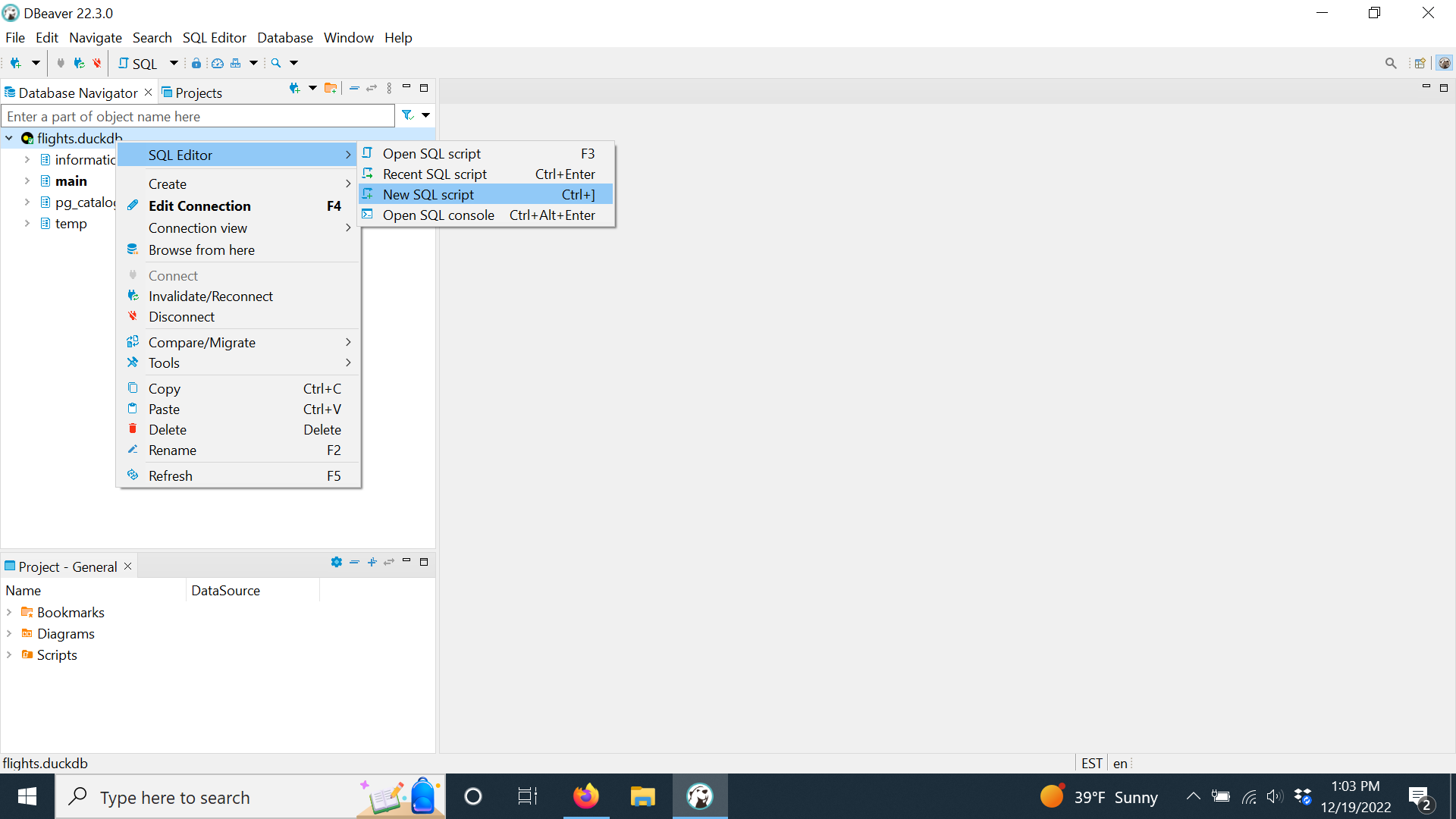

Many RDBMS bundle a Front-end and Back-end together. Examples include Oracle, SAP, and DuckDB has DBeaver.

Most databases allow you to use a Front-end of your choice as long as you have a server driver you can use to configure and open a connection to the Back-end server of the database.

- RStudio can operate as a Front-end to RDBMS for which you have installed a server.

- Excel can operate as a Front-end to a Microsoft SQL Server database.

- DBeaver allows you to make a connection to many different DBMS servers.

- MariaDB identifies over 30 Front-ends that can work with it, including DuckDB’s DBeaver SQL IDE.

There are multiple solutions. We can use RStudio, Positron, DBeaver, or other IDEs.

8.3.7.2 Database Connections: ODBC and the {DBI} Package

The reason there is so much flexibility with connecting Front and Back ends is the standardization of the Microsoft Open Data Base Connectivity (ODBC) Standard. David-Engel (2023)

The ODBC standard was designed for “maximum interoperability”.

The construct is similar to the HTTP interface for web browsers. The DBMS developer creates a driver that serves as an API for their Back-end DBMS that is ODBC-compliant. Then the Front-end developer creates their software to also be ODBC-compliant.

That way, the Front-end can create a connection to and interact with any DBMS back end for which there is an ODBC-compliant driver.

The R {DBI} package (for Data Base Interface) supports the connection between R and over 25 different DBMS Back-ends.

- Popular Back-end packages include: {duckdb}, {RMariaDB}, {RPostgres}, {RSQLite}, and {bigrquery}.

- These packages include the ODBC-compliant driver for the given database.

If no one has written a package but an ODBC-compliant driver exists, the {DBI} package works with the {ODBC} package and the driver to make the connection to the Back-end server.

8.3.7.3 Database Configuration Types

RDBMS can also be categorized based on their configuration or how front and back ends are physically connected and how the data is stored.

- In-process databases run on your computer. The data is stored locally and accessible without a network connection.

- They are optimized for the use case of a single user working with large amounts of data.

- Client-Server databases use a small front-end client on your computer (perhaps an IDE or in a browser) to connect to a robust server over a network. Data can be stored across a set of servers.

- They are optimized for the use case where multiple users can query the database at once and the RDBMS resolves contention.

- Cloud-databases also separate the front and back ends over a network but now the data may be distributed across multiple locations in the cloud.

- These are optimized for the use case of multiple users accessing a wide-variety of very large data and enabling distributed computing and parallel computing.

With the proliferation of cloud computing and software as a service (SAAS), you can expect to see more and more hybrids of client server and cloud databases.

As the amount of collected structured and unstructured data scales to Apache solutions for “Big Data”, the fundamental concepts of exploiting relationships in data using some version of SQL will continue to apply for the foreseeable future.

8.4 SQL Basics

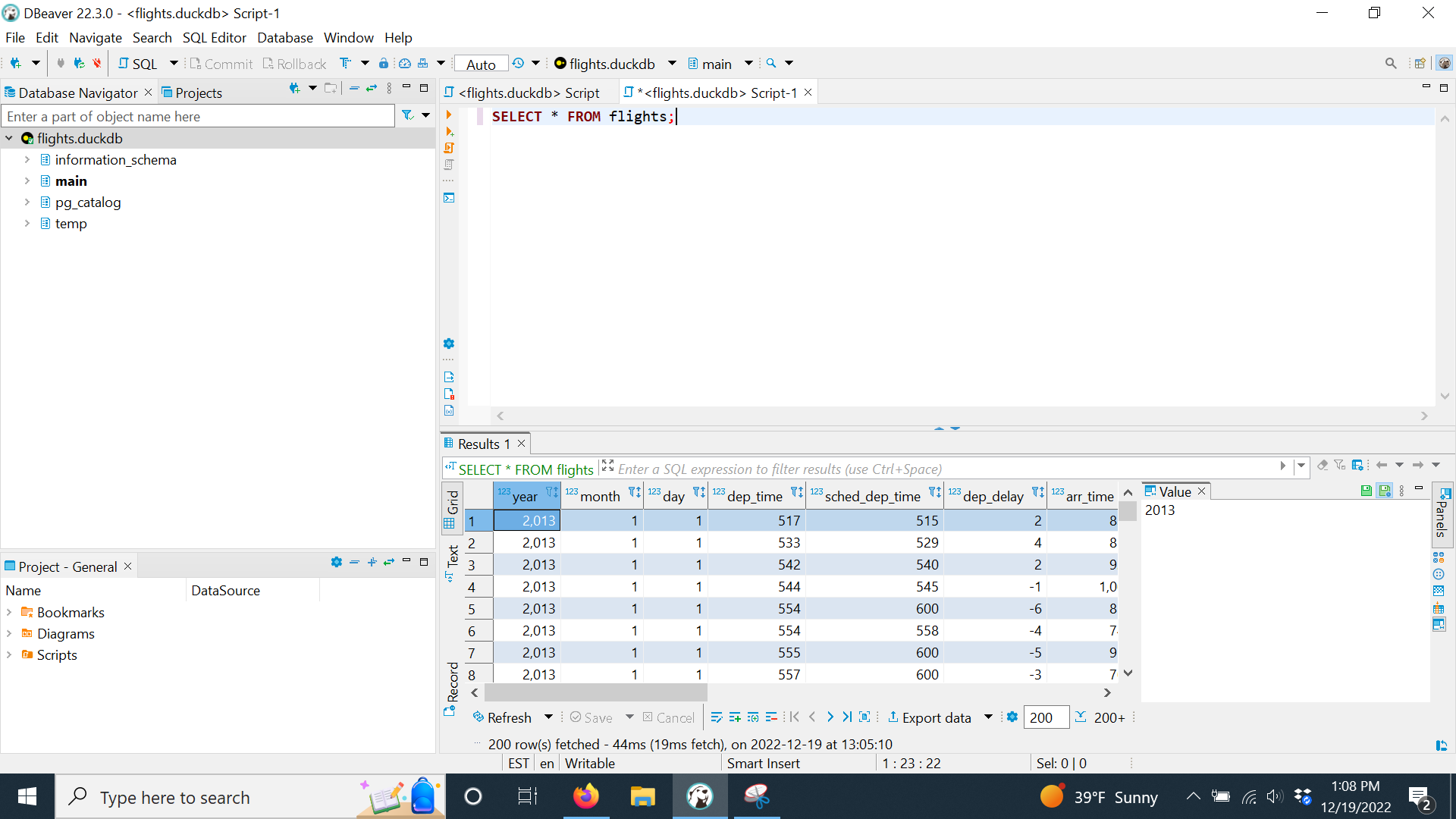

We will use R Studio as the Front-end, DuckDB as the Back-end, and the {DBI} package to support the connection between the front and back ends.

8.4.1 Connecting to a Database

Before you can use SQL to interact with a database, you must have a way to connect the Front-end user interface to the Back-end server with the data.

- When working in R, the {DBI} package can create the connection and allow you to assign a name to it.

- Your database will be created by a specific DBMS which provides the Back-end server.

- If you are not using the Front-End that comes with the DBMS, you need an ODBC-compliant driver for the DBMS to make the connection.

We will use the DuckDB database and the {duckdb} Package for most of this section.

DuckDB is an in-process database so it has no external dependencies which makes it useful for single user analysis of large data. See Why DuckDB for their story.

You can install DuckDB directly on your computer and use the built-in Command Line Interface without using R.

However, the {duckdb} package installs the DuckDB DBMS as well as the ODBC-compliant driver for R.

Use the console to install {DBI} and {duckdb}.

To make the connection between R and DuckDB requires loading the two packages {DBI} and {duckdb}.

We also want to compare the SQL code (working with the database tables) with R code (working with data frames) so load {tidyverse} and {nycflights13} which has the same data as the database we will use.

- You will see the concepts for accessing data are quite similar but the syntax is different.

The DuckDB database with {nycflights13} data is on GitHub at https://github.com/AU-datascience/data/blob/main/413-613/flights.duckdb.

- {duckdb} only works with local data bases, not over a URL

- You can manually download to a

datafolder under your current file’s working directory or use the following once you are sure there is adatadirectory:

Create a connection to flights.duckdb by using DBI::dbConnect() with duckdb() and a relative path to the database and assign the name con to the connection.

- Notice the connection to the database in the environment.

- You will use the

conconnection to interact with the database Back-end.

- When using DuckDB through DBI,

- SQL runs in the database

- Results are pulled into R only when requested

dbGetQuery()materializes the results into memory

Once you have a connection, you can create a basic SQL code chunk like this in R Markdown.

```{sql, connection=con}

--| label: lst-show-tables

-- [SQL:DuckDB] SHOW TABLES is not part of the SQL standard.

SHOW TABLES;

```| name |

|---|

| airlines |

| airports |

| flights |

| planes |

| weather |

In Quarto .qmd files use the chunk option --| connection: con.

```{sql}

--| connection: con

--| label: lst-show-tables-q

-- [SQL:DuckDB] SHOW TABLES is not part of the SQL standard.

SHOW TABLES;

```| name |

|---|

| airlines |

| airports |

| flights |

| planes |

| weather |

Whenever you are using a SQL chunk inside an R Markdown or Quarto document, identify the connection in each code chunk so the SQL interpreter knows with which database to interact.

All the SQL code chunks in this section will have the --| connection my_con chunk option where my_con is the name of the appropriate connection.

8.4.2 Connecting to Parquet Files

DuckDB and other modern databases (e.g., Apache Spark, Presto/Trino, Google BigQuery, Snowflake, Databricks) explicitly support the reading and writing of Parquet files.

- Parquet is extremely common today in big data and analytics; it’s basically the default format for modern data lakes and replacing CSV as a format for data exchange.

- Parquet is a columnar, compressed, self-describing format so systems built with columnar execution in mind (analytics DBs, OLAP engines, distributed query engines) tend to support direct querying of compressed Parquet.

- Traditional RDBMS (Postgres, MySQL, SQL Server, Oracle) may require an extension or pre-processing to ingest them.

Parquet is a storage format, not a relational database.

- It preserves schema information (column names, types, and optional metadata).

- It does not preserve or enforce higher-level relational rules such as: Primary keys, Foreign keys, Indexes, or Constraints (NOT NULL, CHECK, etc.).

In parquet format, each table can be a separate file or a set of files (for really large data).

- The files can be compressed for efficient storage.

Assume there is a directory (“./data/parquet”) of tables where each file is a separate table

- Create a new connection.

- Read individual files by name.

- DESCRIBE a table.

```{sql}

--| connection: con_p

--| label: lst-read_parquet

-- [SQL:DuckDB] DESCRIBE 'file.parquet' treats a file path as a table-like object.

DESCRIBE './data/parquet/flights.parquet';

```| column_name | column_type | null | key | default | extra |

|---|---|---|---|---|---|

| year | INTEGER | YES | NA | NA | NA |

| month | INTEGER | YES | NA | NA | NA |

| day | INTEGER | YES | NA | NA | NA |

| dep_time | INTEGER | YES | NA | NA | NA |

| sched_dep_time | INTEGER | YES | NA | NA | NA |

| dep_delay | DOUBLE | YES | NA | NA | NA |

| arr_time | INTEGER | YES | NA | NA | NA |

| sched_arr_time | INTEGER | YES | NA | NA | NA |

| arr_delay | DOUBLE | YES | NA | NA | NA |

| carrier | VARCHAR | YES | NA | NA | NA |

When you have a DuckDB connection (here called con_p), DuckDB can automatically treat Parquet file paths as virtual tables so you can work with them.

- Here is an example of a

JOINof two parquet files. (Don’t worry about the syntax for now.)

```{sql}

--| connection: con_p

--| label: lst-join_parquet

-- [SQL:DuckDB] DuckDB can query Parquet files by path in FROM/JOIN (non-standard).

SELECT f.flight, a.name AS airline_name

FROM './data/parquet/flights.parquet' AS f

JOIN './data/parquet/airlines.parquet' AS a

ON f.carrier = a.carrier

LIMIT 10;

```| flight | airline_name |

|---|---|

| 461 | Delta Air Lines Inc. |

| 569 | United Air Lines Inc. |

| 4424 | ExpressJet Airlines Inc. |

| 6177 | ExpressJet Airlines Inc. |

| 731 | Delta Air Lines Inc. |

| 684 | United Air Lines Inc. |

| 301 | American Airlines Inc. |

| 1837 | American Airlines Inc. |

| 1279 | Delta Air Lines Inc. |

| 1691 | United Air Lines Inc. |

Using the file names directly though makes the code less portable and harder to read.

To make portable code, create a VIEW for each table (Section 8.25) with an alias of the table name and write your code using the VIEW instead of the file name as working with a DBMS table.

- Let’s close the

con_pconnection

- Use R to create a new connection and the views.

# create connection (here, ephemeral in-memory db)

con_p <- dbConnect(duckdb())

# register all Parquet files in a folder

dbExecute(con_p, "

CREATE VIEW flights AS

SELECT * FROM parquet_scan('./data/parquet/flights.parquet');

CREATE VIEW airlines AS

SELECT * FROM parquet_scan('./data/parquet/airlines.parquet');

")Now we can use the new connection to run the query as if it were standard SQL.

```{sql}

--| connection: con_p

--| label: lst-join_parquet-view

-- [SQL:Mostly standard] This becomes portable once you hide Parquet behind views.

SELECT f.flight, a.name AS airline_name

FROM flights AS f

JOIN airlines AS a

ON f.carrier = a.carrier

LIMIT 10;

```| flight | airline_name |

|---|---|

| 67 | United Air Lines Inc. |

| 373 | JetBlue Airways |

| 764 | United Air Lines Inc. |

| 2044 | Delta Air Lines Inc. |

| 2171 | US Airways Inc. |

| 1275 | Delta Air Lines Inc. |

| 366 | Southwest Airlines Co. |

| 1550 | United Air Lines Inc. |

| 4694 | ExpressJet Airlines Inc. |

| 1647 | Delta Air Lines Inc. |

- Close the connection

8.4.3 SQL Syntax Overview

Like all languages, SQL has standard syntax and reserved words.

However, SQL is declarative, not procedural in that it describes what result you want, not how to compute it.

There is a standard for SQL: SQL-92. However, many database developers see this as a point of departure rather than a destination.

- They use ODBC and SQL-92 to develop their own versions that are optimized for their intended use cases and database design.

- Thus, there are many flavors of SQL in the world.

Be sure to review the SQL implementation for the DBMS you are using to be aware of any special features.

If you use proprietary SQL, it may give you performance improvements, but it will also lock you into that DBMS and you will have to revise your code if the back-end DBMS is changed.

These notes use the SQL flavor used by the DuckDB DBMS.

The Tidyverse uses functions as “verbs” to manipulate data; SQL uses “STATEMENTS” which can contain clauses, expressions, and other syntactic building blocks.

Table 8.1 identifies the common types of building blocks used in SQL along with some examples and their usage.

| Type | Examples | Role / Purpose |

|---|---|---|

| Statements | SELECT, INSERT, UPDATE, DELETE, CREATE, DROP |

Complete SQL commands that tell the database what action to perform. |

| Clauses | FROM, WHERE, GROUP BY, HAVING, ORDER BY, LIMIT, JOIN |

Sub-components of statements that define how the action is carried out. |

| Expressions | CASE, arithmetic (a + b), boolean (x > 5), string concatenation |

Return a single value, often used inside clauses (computed or conditional). |

| Functions | Aggregate: SUM(), AVG(), COUNT() Scalar: ROUND(), UPPER(), NOW() |

Built-in operations that transform values or sets of values. |

| Modifiers | DISTINCT, ALL, TOP, AS (alias) |

Adjust or qualify the behavior of clauses or expressions. |

| Predicates | IN, BETWEEN, LIKE, IS NULL, EXISTS |

Conditions that return TRUE/FALSE, often used in WHERE or HAVING. |

| Operators | =, <, >, AND, OR, NOT, +, -, *, / |

Combine or compare values. |

| Keywords | NULL, PRIMARY KEY, FOREIGN KEY, DEFAULT, CHECK |

Special reserved words that define schema rules or literal values. |

A SQL “statement” is a “complete sentence” that can be executed independently as an executable query.

- A clause is a phrase in the sentence.

- An expression is like a formula inside a clause.

- A function is a built-in calculator you can call inside expressions.

- Modifiers tweak the behavior (like adverbs in English).

- Predicates make true/false checks.

- Operators are the glue (math or logic).

- Keywords are the reserved words that give SQL its grammar.

The following diagram shows how the building blocks are ordered in creating a complete sentence.

--| label: lst-sql-sequence

--| lst-cap: Generalized hierarchy of SQL building blocks

--| echo: true

SQL Statement (e.g., SELECT)

│

├── Clauses (parts of the statement)

│ ├── FROM (which tables?)

│ ├── WHERE (filter rows before grouping)

│ │ └── Predicates (IN, BETWEEN, LIKE, IS NULL, EXISTS)

│ │ └── Expressions (x > 5, col1 + col2)

│ ├── GROUP BY (form groups)

│ ├── HAVING (filter groups after aggregation)

│ │ └── Aggregate Functions (SUM, AVG, COUNT, MIN, MAX)

│ ├── SELECT (choose fields/expressions to return)

│ │ ├── Expressions (CASE, arithmetic, concatenation)

│ │ │ └── Functions (ROUND, UPPER, NOW, SQRT, etc.)

│ │ └── Modifiers (DISTINCT, AS alias)

│ ├── ORDER BY (sort results)

│ └── LIMIT / OFFSET (restrict result size)

│

├── Operators (math: +, -, *, / ; logic: AND, OR, NOT ; comparison: =, <, >)

│

└── Keywords (NULL, DEFAULT, PRIMARY KEY, FOREIGN KEY, CHECK, etc.)SQL is used across the entire database workflow:

- Database setup:

CREATE DATABASE,CREATE TABLE - Data management:

INSERT,UPDATE,DELETE,DROP TABLE,ALTER TABLE - Data retrieval and analysis:

SELECT(with various clauses)

We are most interested in SELECT statements as that is how we get data from a database table or a set of joined tables.

8.4.4 The SELECT statement

The SELECT statement is the SQL workhorse for getting and manipulating data akin to dplyr::select() combined with filter(), arrange(), and summarise().

SELECT has several other clauses or keywords to support tailoring the data returned by a query.

FROM: Required to identify the table or set of tables where the data will be located.WHEREis a clause used to filter which records (rows) should be returned likedplyr::filter().ORDER BYis a keyword for sorting records likedplyr::arrange(). The default is ascending order.GROUP BYis a keyword akin todplyr::group_byand is used for creating summaries of data, often with aggregate functions (SUM(),AVG(),COUNT(), …).

Other useful modifiers include:

AS: rename/alias columns or tables (likedplyr::rename()).IN: test membership (like%in%).DISTINCT: removes duplicate rows (likedplyr::distinct()).LIMIT: restrict number of rows returned (likeutils::head()).

SELECT Statement Operators

- SQL uses

=for comparison instead of==since you don’t assign anything in SQL. - Use

!=for not equal. - SQL uses

NULLinstead ofNA. Test withIS NULLorIS NOT NULL. - SQL uses

AND,ORandNOTas logical operators.

8.4.5 SQL Syntax Rules to Remember

- Case Insensitivity:

- Keywords are case insensitive, i.e.

selectis the same asSELECTis the same asSeLeCt). - Best practice is to have all statement keywords be in UPPERCASE (e.g.,

SELECT) and table/field names in lowercase for readability.

- Order Matters:

- A SQL

SELECTstatement’s clauses and keywords must be in the following order:SELECT,FROM,WHERE,GROUP BY,ORDER BY.

- Whitespace and Formatting:

- SQL ignores newlines and extra spaces.

- Best practice is to put statements, clauses, and keywords on new lines with spaces to align the keywords to preserve a “river” of white space between the keyword and the values.

- Quoting Rules:

- Character string values must be in single quotes, e.g.,

'NYC'. - Identifiers (column/table names): use double quotes (“first name”) if they contain spaces, reserved words, or special characters (same as using back ticks in R).

- It is fine to always use double quotes because it is not always clear what is an invalid variable name in the database management system.

- Comments

- Single-line comments start with two hyphens

--. - Multi-line comments use

/* ... */in most DBMS.

- Statement Termination

- Some databases (e.g., PostgreSQL, Oracle) require a semicolon

;at the end of each statement. - Best practice is to always include

;so multiple statements can be run in one batch.

- Three-valued Logic

- Unlike R or Python, SQL uses three-valued logic as expressions can evaluate to:

TRUE,FALSE, orUNKNOWN(whenNULLis involved)

NULLdoes not mean zero or empty string. It means missing or unknown.

- This affects comparisons, e.g. the following always returns

UNKNOWNnotTRUE.

SELECT *

FROM flights

WHERE arr_delay = NULL; -- This will NOT work- The correct approach is

WHERE arr_delay IS NULL;

WHERE arr_delay IS NOT NULL;NULL = NULLisUNKNOWN, notTRUE.WHEREonly keeps rows where the condition evaluates toTRUE.FALSEandUNKNOWNrows are both filtered out.

- SQL is forgiving about formatting, but unforgiving about logic, especially with

NULLhandling.

Together, these rules make SQL predictable (rigid order) but also forgiving (case, whitespace, optional semicolons).

8.4.6 Creating SQL Code Chunks

If you are creating working a lot with SQL in RStudio, you can manually enter a chunk and change the language engine to sql each time. However there are other ways to be more productive.

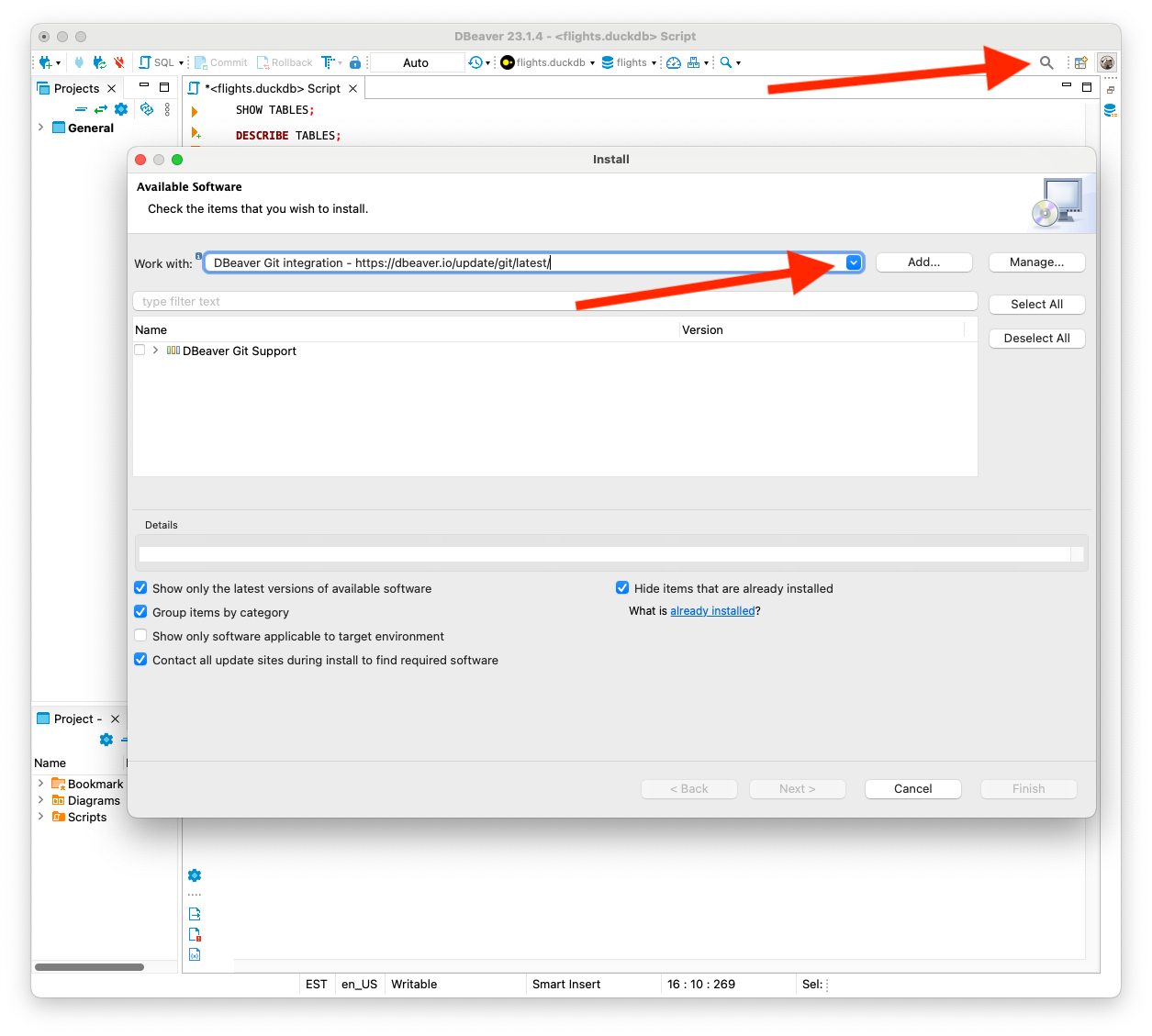

8.4.6.1 Customize a Keyboard Shortcut

RStudio has a built-in capability to create an SQL code chunk with an R Markdown code chunk option for the connection.

To access it quickly, suggest customizing a keyboard shortcut for it per Customizing Keyboard Shortcuts in the RStudio IDE. Ushey (2023)

Go to the RStudio Main Menu and select Tools/Modify Keyboard Shortcuts.

- A pop up pane will appear with many options and the existing keyboard shortcuts.

- Enter

S Qin the search pane and you should see something like Figure 8.3.

- Click in the

Shortcutcolumn to the right of “Insert Chunk S Q L”. - Enter the shortcut combination you want. Figure 8.3 shows the Mac shortcut I chose,

Ctrl + Cmd + s. - Click

Applyand your shortcut will be saved. You can use it right away.

Enter the shortcut in a text area (not an existing chunk) and you should get the following (without the con).

- Make sure you enter in the name of the connection you have created.

- You cannot render a document with an SQL code chunk with invalid or empty connection.

- You have to have created a valid connection object before the SQL code chunk.

- If you have a connection, you must also have a valid SQL line, or set the code chunk option

--| eval: false.

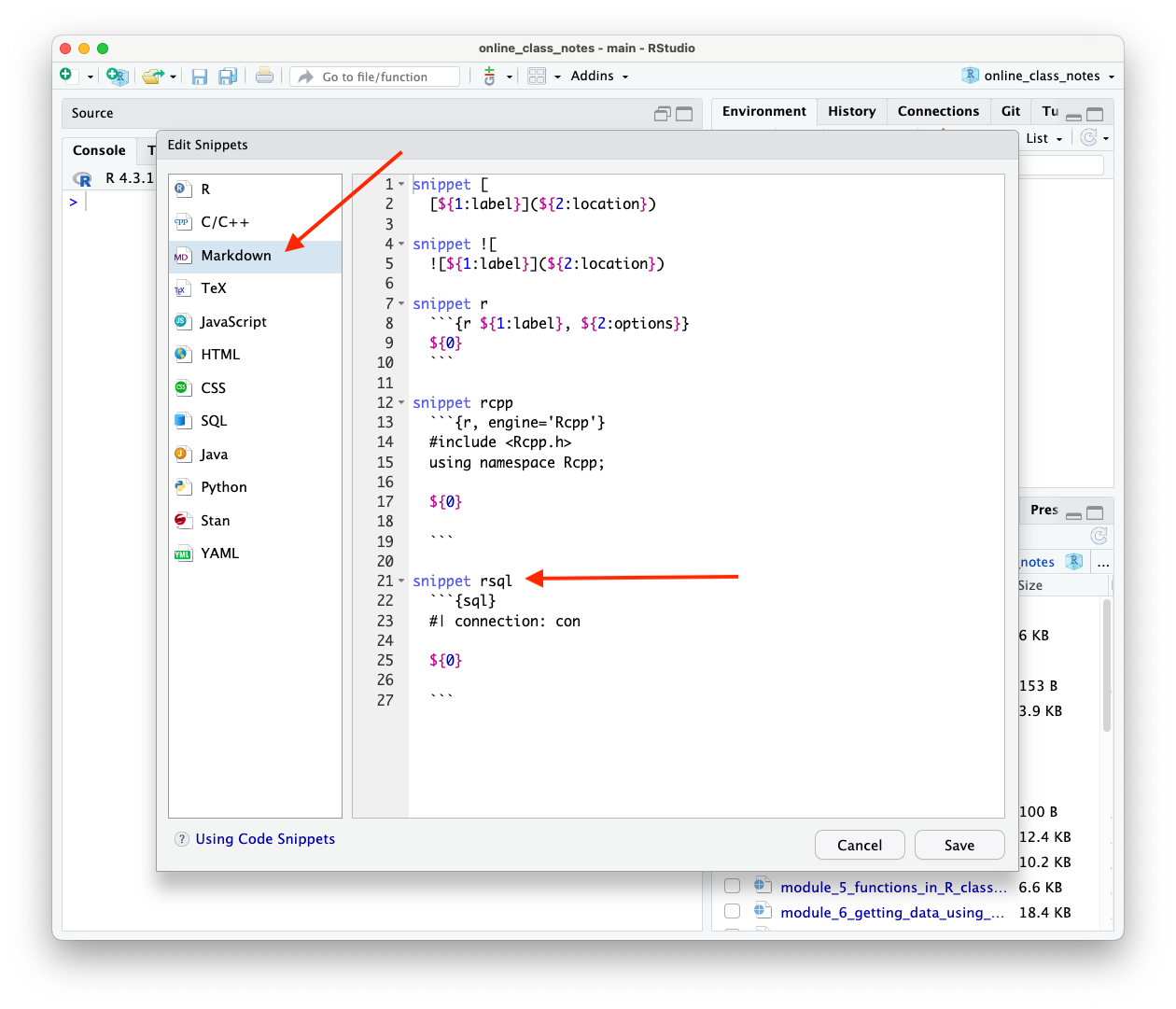

8.4.6.2 Create a Custom Code Snippet

Another option in RStudio is to create a custom code snippet per Code Snippets in the RStudio IDE. Allaire (2023)

- RStudio snippets are templates for code or R Markdown.

To create a snippet for a custom SQL code chunk, go to the RStudio Main Menu and select Tools/Edit Code Snippets.

- A pop-up pane should appear.

- Select

Markdownas you will be using the snippet in a Markdown text section. - You will see something like Figure 8.4 with the existing Markdown code snippets.

- Figure 8.4 already shows the snippet I saved for creating a custom SSQL code chunk.

You can create your own snippet.

- Enter

snippet s_namewhere you enter your name for the snippet instead ofs_name. It does not have to bersql. - Enter the following lines and use the tab key not spaces to indent.

- The

${0}is a placeholder for your SQL code. The0means it is the first place your cursor will go.- You could replace

conwith${0}so you can fill in which connection each time. Then edit the next${0}on the original line to${1}.

- You could replace

- Select

Saveto save it and then you can use it.

To use a snippet, enter the name your created, I use “rsql”, and then SHIFT+TAB (on a mac) aTAB on windows, and the code chunk will appear like below (without the other options).

8.5 A SQL-R-Python Mental Model

Part of being a “tri-lingual” data scientist is moving fluently between SQL, R, and Python.

Doing so efficiently requires more than translating syntax; it requires mapping the underlying mental models of how each language stores, processes, and returns data.

- A key idea is SQL describes the result; R and Python describe the steps.

Table 8.2 provides a concise comparison to help translate core concepts across these three common data science environments.

| Concept | SQL | R | Python (pandas) |

|---|---|---|---|

| Fundamental object | Table (relation) | Data frame / tibble | DataFrame |

| Storage model | Often disk-based | In-memory | In-memory |

| Where execution happens | In the database engine | In R session memory | In Python process memory |

| Evaluation style | Declarative (describe result) | Functional / vectorized | Method chaining / vectorized |

| Execution model | Set-based (engine optimized) | Vectorized in memory | Vectorized in memory |

| Optimization responsibility | Database optimizer chooses plan | User controls order of operations | User controls order of operations |

| Query result | Always returns a new table | Returns object (vector/data frame) | Returns DataFrame/Series |

| Pipelining model | Nested queries / CTEs | Pipe (|>) |

Method chaining (.) |

| Row order | Not guaranteed unless ORDER BY |

Preserved unless changed | Preserved unless changed |

| Missing values | NULL (three-valued logic) |

NA |

NaN / None |

| Test for missing | IS NULL |

is.na() |

.isna() |

| Filtering behavior | WHERE keeps only TRUE |

Logical vector keeps TRUE |

Boolean mask keeps True |

| Joins | Explicit JOIN syntax |

*_join() functions |

merge() / .join() |

| Arithmetic behavior | Depends on type (integer vs numeric) | Numeric by default | Numeric by default |

A mental model helps in seeing that the analytical thinking remains the same; only the execution model changes across SQL, R, and Python.

Now we are ready to go through a number of the SQL Building blocks from Table 8.1 to manipulate and retrieve data using the connection to our DuckDB database.

Since there are many flavors of SQL, and almost every flavor (including DuckDB) uses some non-standard statements or functions, the SQL chunks in this section are annotated as follows to indicate what is standard in DuckDB, what is customized in the DuckDB flavor and what is “mostly standard”.

-- [SQL:Standard]= should run on most DBMS (with minor naming differences)-- [SQL:DuckDB]= DuckDB feature or commonly-nonportable syntax-- [SQL:Mostly standard; note …]= portable core + one portability caveat

8.6 SHOW and DESCRIBE Provide an Overview of the Tables in the Database

DuckDB’s SHOW TABLES returns a list of all of the tables in the database.

```{sql}

--| connection: con

--| label: lst-show-tables-3

-- [SQL:DuckDB] SHOW TABLES is not part of the SQL standard.

-- List all tables in the database

SHOW TABLES;

```| name |

|---|

| airlines |

| airports |

| flights |

| planes |

| weather |

DESCRIBE tablename returns metadata about the table tablename.

- column name, data type, whether NULL is allowed, key info (e.g. primary key), default values.

```{sql}

--| connection: con

--| label: lst-describe-table-airlines

-- [SQL:DuckDB] DESCRIBE table is supported in DuckDB; many DBMS use information_schema instead.

-- Structure of a specific table

DESCRIBE airlines;

```| column_name | column_type | null | key | default | extra |

|---|---|---|---|---|---|

| carrier | VARCHAR | YES | NA | NA | NA |

| name | VARCHAR | YES | NA | NA | NA |

- R equivalents for a data frame

tibble [336,776 × 19] (S3: tbl_df/tbl/data.frame)

$ year : int [1:336776] 2013 2013 2013 2013 2013 2013 2013 2013 2013 2013 ...

$ month : int [1:336776] 1 1 1 1 1 1 1 1 1 1 ...

$ day : int [1:336776] 1 1 1 1 1 1 1 1 1 1 ...

$ dep_time : int [1:336776] 517 533 542 544 554 554 555 557 557 558 ...

$ sched_dep_time: int [1:336776] 515 529 540 545 600 558 600 600 600 600 ...

$ dep_delay : num [1:336776] 2 4 2 -1 -6 -4 -5 -3 -3 -2 ...

$ arr_time : int [1:336776] 830 850 923 1004 812 740 913 709 838 753 ...

$ sched_arr_time: int [1:336776] 819 830 850 1022 837 728 854 723 846 745 ...

$ arr_delay : num [1:336776] 11 20 33 -18 -25 12 19 -14 -8 8 ...

$ carrier : chr [1:336776] "UA" "UA" "AA" "B6" ...

$ flight : int [1:336776] 1545 1714 1141 725 461 1696 507 5708 79 301 ...

$ tailnum : chr [1:336776] "N14228" "N24211" "N619AA" "N804JB" ...

$ origin : chr [1:336776] "EWR" "LGA" "JFK" "JFK" ...

$ dest : chr [1:336776] "IAH" "IAH" "MIA" "BQN" ...

$ air_time : num [1:336776] 227 227 160 183 116 150 158 53 140 138 ...

$ distance : num [1:336776] 1400 1416 1089 1576 762 ...

$ hour : num [1:336776] 5 5 5 5 6 5 6 6 6 6 ...

$ minute : num [1:336776] 15 29 40 45 0 58 0 0 0 0 ...

$ time_hour : POSIXct[1:336776], format: "2013-01-01 05:00:00" "2013-01-01 05:00:00" ...Rows: 336,776

Columns: 19

$ year <int> 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2…

$ month <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

$ day <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

$ dep_time <int> 517, 533, 542, 544, 554, 554, 555, 557, 557, 558, 558, …

$ sched_dep_time <int> 515, 529, 540, 545, 600, 558, 600, 600, 600, 600, 600, …

$ dep_delay <dbl> 2, 4, 2, -1, -6, -4, -5, -3, -3, -2, -2, -2, -2, -2, -1…

$ arr_time <int> 830, 850, 923, 1004, 812, 740, 913, 709, 838, 753, 849,…

$ sched_arr_time <int> 819, 830, 850, 1022, 837, 728, 854, 723, 846, 745, 851,…

$ arr_delay <dbl> 11, 20, 33, -18, -25, 12, 19, -14, -8, 8, -2, -3, 7, -1…

$ carrier <chr> "UA", "UA", "AA", "B6", "DL", "UA", "B6", "EV", "B6", "…

$ flight <int> 1545, 1714, 1141, 725, 461, 1696, 507, 5708, 79, 301, 4…

$ tailnum <chr> "N14228", "N24211", "N619AA", "N804JB", "N668DN", "N394…

$ origin <chr> "EWR", "LGA", "JFK", "JFK", "LGA", "EWR", "EWR", "LGA",…

$ dest <chr> "IAH", "IAH", "MIA", "BQN", "ATL", "ORD", "FLL", "IAD",…

$ air_time <dbl> 227, 227, 160, 183, 116, 150, 158, 53, 140, 138, 149, 1…

$ distance <dbl> 1400, 1416, 1089, 1576, 762, 719, 1065, 229, 944, 733, …

$ hour <dbl> 5, 5, 5, 5, 6, 5, 6, 6, 6, 6, 6, 6, 6, 6, 6, 5, 6, 6, 6…

$ minute <dbl> 15, 29, 40, 45, 0, 58, 0, 0, 0, 0, 0, 0, 0, 0, 0, 59, 0…

$ time_hour <dttm> 2013-01-01 05:00:00, 2013-01-01 05:00:00, 2013-01-01 0…- DuckDB has a non-standard version of

DESCRIBE, without a table name, where it returns the set of tables with columns for the table names, their column names, and column types.

8.7 SELECT Can Return Specific Fields (columns) FROM a Table

- In most data retrieval queries, the

SELECTstatement is followed by aFROMclause which identifies the source of the data.- That source is a table, a set of joined tables, or a previously-created table view (a saved query like an intermediate data frame variable).

- The data which is returned as a result of executing a

SELECTstatement is stored in a result table, which can also be called the result-set.- This means you can use the output as a table in a new

FROMclause.

- This means you can use the output as a table in a new

- A

SELECTstatement can have other clauses and modifiers to reduce the amount of data that is returned. - There is no equivalent for excluding fields (like

dplyr::select(-year)). Just select the fields you want.

Let’s use this syntax to get all the data in the fields tailnum, year, and model from the planes table.

```{sql}

--| connection: con

--| label: lst-select-planes

-- [SQL:Standard] Quoting identifiers with double-quotes is standard SQL,

-- but not all DBMS default to it.

SELECT "tailnum", "year", "model"

FROM planes;

```| tailnum | year | model |

|---|---|---|

| N10156 | 2004 | EMB-145XR |

| N102UW | 1998 | A320-214 |

| N103US | 1999 | A320-214 |

| N104UW | 1999 | A320-214 |

| N10575 | 2002 | EMB-145LR |

| N105UW | 1999 | A320-214 |

| N107US | 1999 | A320-214 |

| N108UW | 1999 | A320-214 |

| N109UW | 1999 | A320-214 |

| N110UW | 1999 | A320-214 |

- R equivalent

Think of SELECT ... FROM ... as the core skeleton of every SQL query.

- Everything else (

WHERE,GROUP BY,ORDER BY, etc.) adds constraints to shape the result-set, typically to be fewer rows that more focused on the data needed for the question at hand.

8.7.1 SELECT without a FROM

SQL SELECT can do more than retrieving columns from tables; it is the mechanism for evaluating expressions and returning result sets.

- Even when no table is referenced (there is no

FROM),SELECTreturns a one-row result table containing the computed value(s).

This can be useful for:

- Quick calculations

- Testing functions

- Checking database connectivity

- Inspecting system metadata

8.7.1.1 SELECT as a calculator

- This is often used interactively while developing more complex queries.

| (100 * 0.08) |

|---|

| 8 |

8.7.1.2 Using SELECT to Check connectivity

SELECT 1;is commonly used by applications and connection pools to verify the database connection is alive.- It requires no tables and executes quickly.

8.7.1.3 Using SELECT to Get Current Time or Date

```{sql}

--| connection: con

-- [SQL:Mostly standard; note CURRENT_TIMESTAMP] Portable;

-- returned type may differ (timestamp vs timestamptz).

SELECT CURRENT_TIMESTAMP;

```| CURRENT_TIMESTAMP |

|---|

| 2026-02-21 23:11:04 |

- If your system returns type

TIMESTAMP WITH TIME ZONE, convert explicitly toDATE:

```{sql}

--| connection: con

-- [SQL:DuckDB/Postgres-style] :: casting is not standard SQL;

-- use CAST(...) for portability.

-- Also note: time zone handling varies by DBMS

-- (DuckDB can return TIMESTAMP WITH TIME ZONE depending on settings)

SELECT (CURRENT_TIMESTAMP::TIMESTAMP)::DATE AS current_date;

```| current_date |

|---|

| 2026-02-21 |

8.7.1.4 Using SELECT to Test DATE Expressions

- Given the different flavors, DBMS can treat Dates and Times differently, with different default types so it’s good to validate function performance.

- Testing expressions independently is useful before embedding them inside larger queries.

8.7.2 Use SELECT * Rarely and with Caution

You can select every field in a table by using the wildcard *, e.g., SELECT *.

```{sql}

--| connection: con

--| label: lst-select-planes-2

-- [SQL:Standard] SELECT * is portable; LIMIT is not

-- (some DBMS use TOP / FETCH FIRST).

SELECT *

FROM planes

LIMIT 5;

```| tailnum | year | type | manufacturer | model | engines | seats | speed | engine |

|---|---|---|---|---|---|---|---|---|

| N10156 | 2004 | Fixed wing multi engine | EMBRAER | EMB-145XR | 2 | 55 | NA | Turbo-fan |

| N102UW | 1998 | Fixed wing multi engine | AIRBUS INDUSTRIE | A320-214 | 2 | 182 | NA | Turbo-fan |

| N103US | 1999 | Fixed wing multi engine | AIRBUS INDUSTRIE | A320-214 | 2 | 182 | NA | Turbo-fan |

| N104UW | 1999 | Fixed wing multi engine | AIRBUS INDUSTRIE | A320-214 | 2 | 182 | NA | Turbo-fan |

| N10575 | 2002 | Fixed wing multi engine | EMBRAER | EMB-145LR | 2 | 55 | NA | Turbo-fan |

However, this is generally considered a bad practice, especially outside of quick exploration.

SELECT *is Fragile Code- Tables evolve over time. New columns may appear or existing columns may change.

SELECT *may suddenly return unexpected fields and break downstream code.- Explicit column selection makes your query stable and intentional.

SELECT *can Create Performance Issues- On wide tables (hundreds of columns), returning everything is wasteful.

- Extra data must be:

- Retrieved from storage

- Processed by the database

- Transmitted over the connection

- Moved into memory

- Even in columnar systems like DuckDB, selecting fewer columns can significantly reduce I/O.

- Network overhead matters in production environments and shared systems.

- Sending unnecessary data across the network increases latency

- Large result sets consume bandwidth

- Can make queries slower for you and everyone else.

- Joins with

SELECT *can “Explode” making everything above worse.- With joins,

SELECT *can:- Duplicate columns (e.g., two id columns)

- Introduce ambiguous column names

- Make downstream transformations fragile

- Example: flights and weather share multiple columns

- With joins,

```{sql}

--| connection: con

-- [SQL:Standard] JOIN ... ON ... is portable;

-- result will be very wide because of duplicate/shared columns.

SELECT *

FROM flights f

JOIN weather w

ON f.origin = w.origin

AND f.year = w.year

AND f.month = w.month

AND f.day = w.day

AND f.hour = w.hour

LIMIT 10;

```| year | month | day | dep_time | sched_dep_time | dep_delay | arr_time | sched_arr_time | arr_delay | carrier | flight | tailnum | origin | dest | air_time | distance | hour | minute | time_hour | origin | year | month | day | hour | temp | dewp | humid | wind_dir | wind_speed | wind_gust | precip | pressure | visib | time_hour |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2013 | 1 | 1 | 517 | 515 | 2 | 830 | 819 | 11 | UA | 1545 | N14228 | EWR | IAH | 227 | 1400 | 5 | 15 | 2013-01-01 10:00:00 | EWR | 2013 | 1 | 1 | 5 | 39.02 | 28.04 | 64.43 | 260 | 12.65858 | NA | 0 | 1011.9 | 10 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 533 | 529 | 4 | 850 | 830 | 20 | UA | 1714 | N24211 | LGA | IAH | 227 | 1416 | 5 | 29 | 2013-01-01 10:00:00 | LGA | 2013 | 1 | 1 | 5 | 39.92 | 24.98 | 54.81 | 250 | 14.96014 | 21.86482 | 0 | 1011.4 | 10 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 542 | 540 | 2 | 923 | 850 | 33 | AA | 1141 | N619AA | JFK | MIA | 160 | 1089 | 5 | 40 | 2013-01-01 10:00:00 | JFK | 2013 | 1 | 1 | 5 | 39.02 | 26.96 | 61.63 | 260 | 14.96014 | NA | 0 | 1012.1 | 10 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 544 | 545 | -1 | 1004 | 1022 | -18 | B6 | 725 | N804JB | JFK | BQN | 183 | 1576 | 5 | 45 | 2013-01-01 10:00:00 | JFK | 2013 | 1 | 1 | 5 | 39.02 | 26.96 | 61.63 | 260 | 14.96014 | NA | 0 | 1012.1 | 10 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 554 | 600 | -6 | 812 | 837 | -25 | DL | 461 | N668DN | LGA | ATL | 116 | 762 | 6 | 0 | 2013-01-01 11:00:00 | LGA | 2013 | 1 | 1 | 6 | 39.92 | 24.98 | 54.81 | 260 | 16.11092 | 23.01560 | 0 | 1011.7 | 10 | 2013-01-01 11:00:00 |

| 2013 | 1 | 1 | 554 | 558 | -4 | 740 | 728 | 12 | UA | 1696 | N39463 | EWR | ORD | 150 | 719 | 5 | 58 | 2013-01-01 10:00:00 | EWR | 2013 | 1 | 1 | 5 | 39.02 | 28.04 | 64.43 | 260 | 12.65858 | NA | 0 | 1011.9 | 10 | 2013-01-01 10:00:00 |

| 2013 | 1 | 1 | 555 | 600 | -5 | 913 | 854 | 19 | B6 | 507 | N516JB | EWR | FLL | 158 | 1065 | 6 | 0 | 2013-01-01 11:00:00 | EWR | 2013 | 1 | 1 | 6 | 37.94 | 28.04 | 67.21 | 240 | 11.50780 | NA | 0 | 1012.4 | 10 | 2013-01-01 11:00:00 |

| 2013 | 1 | 1 | 557 | 600 | -3 | 709 | 723 | -14 | EV | 5708 | N829AS | LGA | IAD | 53 | 229 | 6 | 0 | 2013-01-01 11:00:00 | LGA | 2013 | 1 | 1 | 6 | 39.92 | 24.98 | 54.81 | 260 | 16.11092 | 23.01560 | 0 | 1011.7 | 10 | 2013-01-01 11:00:00 |

| 2013 | 1 | 1 | 557 | 600 | -3 | 838 | 846 | -8 | B6 | 79 | N593JB | JFK | MCO | 140 | 944 | 6 | 0 | 2013-01-01 11:00:00 | JFK | 2013 | 1 | 1 | 6 | 37.94 | 26.96 | 64.29 | 260 | 13.80936 | NA | 0 | 1012.6 | 10 | 2013-01-01 11:00:00 |

| 2013 | 1 | 1 | 558 | 600 | -2 | 753 | 745 | 8 | AA | 301 | N3ALAA | LGA | ORD | 138 | 733 | 6 | 0 | 2013-01-01 11:00:00 | LGA | 2013 | 1 | 1 | 6 | 39.92 | 24.98 | 54.81 | 260 | 16.11092 | 23.01560 | 0 | 1011.7 | 10 | 2013-01-01 11:00:00 |

```{sql}

--| connection: con

-- [SQL:Standard] Best-practice explicit column list; portable.

SELECT f.time_hour,

f.flight,

f.origin,

f.dest,

w.temp

FROM flights f

JOIN weather w

ON f.origin = w.origin

AND f.year = w.year

AND f.month = w.month

AND f.day = w.day

AND f.hour = w.hour

LIMIT 10;

```| time_hour | flight | origin | dest | temp |

|---|---|---|---|---|

| 2013-02-14 15:00:00 | 67 | EWR | ORD | 41.00 |

| 2013-02-14 15:00:00 | 373 | LGA | FLL | 39.92 |

| 2013-02-14 15:00:00 | 764 | EWR | IAH | 41.00 |

| 2013-02-14 16:00:00 | 2044 | LGA | MIA | 42.08 |

| 2013-02-14 16:00:00 | 2171 | LGA | DCA | 42.08 |

| 2013-02-14 16:00:00 | 1275 | JFK | SLC | 44.06 |

| 2013-02-14 16:00:00 | 366 | LGA | STL | 42.08 |

| 2013-02-14 15:00:00 | 1550 | EWR | SFO | 41.00 |

| 2013-02-14 15:00:00 | 4694 | EWR | MKE | 41.00 |

| 2013-02-14 16:00:00 | 1647 | LGA | ATL | 42.08 |

The best practice is to select only the specific fields of interest.

Benefits:

- More Robust Code: Your query won’t silently change behavior if new, irrelevant columns are added to a table..

- More Efficient Operations:Only the required columns are scanned, processed, and transmitted.

- In columnar databases (like DuckDB, Snowflake, BigQuery), selecting fewer columns can dramatically improve performance because only the referenced columns are physically read from storage.

- This substantially reduces I/O and memory pressure.

- Clearer Intent: The query explicitly documents which variables are required for downstream logic.

- This improves readability, maintainability, and code review quality.

- Safer Joins: Selecting only needed columns:

- Reduces accidental column duplication when using ON

- Makes unintended many-to-many joins easier to detect

- Prevents wide result sets that can mask row-explosion problems

- Reduces accidental column duplication when using ON

- Reduces Contention for Others: On multi-user systems

- Wide scans increase CPU and memory usage

- Larger intermediate results increase the risk of “spill-to-disk” which is much slower.

- Transmitting unnecessary data increases network load

- Wide scans increase CPU and memory usage

Modern databases rarely “lock” tables for simple reads, but poorly scoped queries such as SELECT * can still degrade performance for others.

Exceptions

- Small tables you know won’t change (e.g., a static lookup table).

- Quick interactive exploration and then switch to explicit fields in production queries.

Let’s examine several keywords that modify SELECT to reduce the number of rows or modify the data.

8.7.3 Select Distinct Records to Remove Duplicate Records

Some tables may allow duplicate records or a query may create a result set with duplicate values if only a few fields are selected.

The statement SELECT DISTINCT field1, field2 modifies the result set so it has no duplicate rows.

Let’s select all the distinct (unique) destinations.

```{sql}

--| connection: con

-- [SQL:Standard] DISTINCT is portable.

SELECT DISTINCT "dest"

FROM flights;

```| dest |

|---|

| SFO |

| SJU |

| GSO |

| GSP |

| SAN |

| EGE |

| CRW |

| TYS |

| STT |

| MTJ |

- R equivalent

8.8 WHERE Filters Records (Rows) by Values

To modify the result set based on values in the records, add a WHERE clause after the FROM clause with a condition that returns a logical value.

SELECT column1, column2

FROM table_name

WHERE condition;The

WHEREclauses uses the condition to filter the returned records to those whose values meet the condition, i.e., only rows for which condition evaluates to TRUE will appear in the result-set.The

WHEREclause requires an expression that returns a logical value.

```{sql}

--| connection: con

-- [SQL:Standard] WHERE clause; portable.

SELECT "flight", "distance", "origin", "dest"

FROM flights

WHERE "distance" < 50;

```| flight | distance | origin | dest |

|---|---|---|---|

| 1632 | 17 | EWR | LGA |

- R equivalent:

The allowable operators in WHERE are:

- Comparison:

=,!=,<,>,<=,>= - Logical:

AND,OR,NOT - Membership:

IN - Null check:

IS NULL,IS NOT NULL

To test for equality, use a single equal sign, =.

```{sql}

--| connection: con

-- [SQL:Standard] Equality test uses = ; portable.

SELECT "flight", "month"

FROM flights

WHERE "month" = 12;

```| flight | month |

|---|---|

| 745 | 12 |

| 839 | 12 |

| 1895 | 12 |

| 1487 | 12 |

| 2243 | 12 |

| 939 | 12 |

| 3819 | 12 |

| 1441 | 12 |

| 2167 | 12 |

| 605 | 12 |

- R equivalent

To use a character string you must use single quotes on the string, not double.

```{sql}

--| connection: con

-- [SQL:Standard] String literal in single quotes; portable.

SELECT "flight", "origin"

FROM flights

WHERE "origin" = 'JFK';

```| flight | origin |

|---|---|

| 1141 | JFK |

| 725 | JFK |

| 79 | JFK |

| 49 | JFK |

| 71 | JFK |

| 194 | JFK |

| 1806 | JFK |

| 1743 | JFK |

| 303 | JFK |

| 135 | JFK |

- R equivalent

8.8.1 Combine Multiple Criteria Using the Logical Operators.

Here is an example of using the AND logical operator.

```{sql}

--| connection: con

-- [SQL:Standard] AND / OR / NOT; portable.

SELECT "flight", "origin", "dest"

FROM flights

WHERE "origin" = 'JFK' AND "dest" = 'CMH';

```| flight | origin | dest |

|---|---|---|

| 4146 | JFK | CMH |

| 3783 | JFK | CMH |

| 4146 | JFK | CMH |

| 3783 | JFK | CMH |

| 4146 | JFK | CMH |

| 3783 | JFK | CMH |

| 4146 | JFK | CMH |

| 3783 | JFK | CMH |

| 4146 | JFK | CMH |

| 3650 | JFK | CMH |

- R equivalent:

You can use the OR and NOT logical operators too.

- Put parentheses around the portions of the expression to ensure you get the desired order of operations.

```{sql}

--| connection: con

-- [SQL:Standard] Parentheses + NOT/OR; portable.

SELECT

DISTINCT "flight",

"origin",

"dest"

FROM

flights

WHERE

NOT ("origin" = 'JFK'

OR "origin" = 'LGA')

AND dest = 'CMH';

```| flight | origin | dest |

|---|---|---|

| 3813 | EWR | CMH |

| 3842 | EWR | CMH |

| 5906 | EWR | CMH |

| 4230 | EWR | CMH |

| 5679 | EWR | CMH |

| 4372 | EWR | CMH |

| 4316 | EWR | CMH |

| 4334 | EWR | CMH |

| 5680 | EWR | CMH |

| 3851 | EWR | CMH |

- R equivalent

8.8.2 Filter on Membership using IN or NOT IN

You can simplify and generalize a WHERE condition for membership using IN instead of multiple OR comparisons.

- To get flights whose destination is one of several airports (e.g., BWI, IAD, DCA) and exclude certain origins:

```{sql}

--| connection: con

-- [SQL:Standard] IN / NOT IN; portable (NULL semantics differ,

-- NOT IN can behave unexpectedly if subquery returns NULL).

SELECT DISTINCT

"flight",

"origin",

"dest"

FROM flights

WHERE

"origin" NOT IN ('JFK', 'LGA')

AND "dest" IN ('BWI', 'IAD', 'DCA');

```| flight | origin | dest |

|---|---|---|

| 5818 | EWR | DCA |

| 4312 | EWR | DCA |

| 4509 | EWR | BWI |

| 1037 | EWR | IAD |

| 3827 | EWR | IAD |

| 4103 | EWR | BWI |

| 4113 | EWR | IAD |

| 4108 | EWR | IAD |

| 4316 | EWR | BWI |

| 421 | EWR | BWI |

- R Equivalent

8.8.3 Remove Records with Missing Data

Missing data is NULL in SQL (instead of NA).

We can explicitly remove records with missing values with the clause WHERE field IS NOT NULL.

```{sql}

--| connection: con

-- [SQL:Standard] NULL tests require IS NULL / IS NOT NULL; portable.

SELECT "flight", "dep_delay"

FROM flights

WHERE "dep_delay" IS NOT NULL;

```| flight | dep_delay |

|---|---|

| 1545 | 2 |

| 1714 | 4 |

| 1141 | 2 |

| 725 | -1 |

| 461 | -6 |

| 1696 | -4 |

| 507 | -5 |

| 5708 | -3 |

| 79 | -3 |

| 301 | -2 |

- R equivalent

Just use IS if you want only the records that are missing data.

8.9 String/Text Functions

SQL provides several functions for working with text.

- These are often used in

WHEREclauses to filter data:

Find flights for carriers with “Jet” in their name (case-insensitive).

```{sql}

--| connection: con

-- [SQL:DuckDB/Postgres-style] ILIKE is not standard SQL

-- (case-insensitive LIKE); portable alternative varies by DBMS.

SELECT f.flight, a.name AS airline_name, f.origin

FROM flights f

JOIN airlines a

ON f.carrier = a.carrier

WHERE a.name ILIKE '%jet%' -- ILIKE for case-insensitive matching

LIMIT 10;

```| flight | airline_name | origin |

|---|---|---|

| 725 | JetBlue Airways | JFK |

| 507 | JetBlue Airways | EWR |

| 5708 | ExpressJet Airlines Inc. | LGA |

| 79 | JetBlue Airways | JFK |

| 49 | JetBlue Airways | JFK |

| 71 | JetBlue Airways | JFK |

| 1806 | JetBlue Airways | JFK |

| 371 | JetBlue Airways | LGA |

| 343 | JetBlue Airways | EWR |

| 135 | JetBlue Airways | JFK |

- Extract first characters of the carrier code.

```{sql}

--| connection: con

-- [SQL:Mostly standard; note SUBSTR()] SUBSTR exists in many DBMS;

-- some prefer SUBSTRING().

SELECT carrier, SUBSTR(carrier, 1, 2) AS carrier_prefix

FROM airlines

LIMIT 10;

```| carrier | carrier_prefix |

|---|---|

| 9E | 9E |

| AA | AA |

| AS | AS |

| B6 | B6 |

| DL | DL |

| EV | EV |

| F9 | F9 |

| FL | FL |

| HA | HA |

| MQ | MQ |

- Trim whitespace from airport codes (if any).

```{sql}

--| connection: con

-- [SQL:Standard] TRIM is portable.

SELECT TRIM(origin) AS clean_origin, TRIM(dest) AS clean_dest

FROM flights

LIMIT 10;

```| clean_origin | clean_dest |

|---|---|

| EWR | IAH |

| LGA | IAH |

| JFK | MIA |

| JFK | BQN |

| LGA | ATL |

| EWR | ORD |

| EWR | FLL |

| LGA | IAD |

| JFK | MCO |

| LGA | ORD |

8.9.1 CONCAT Concatenates Multiple Strings or Lists.

SQL has the CONCAT function and an operator for combining strings into a single value.

- This includes concatenating column names. Add an alias so it is easier to reference.

```{sql}

--| connection: con

-- [SQL:Mostly standard; note CONCAT + ILIKE] CONCAT is widely supported;

-- ILIKE is not standard.

SELECT DISTINCT CONCAT(f.carrier, '-', a.name) AS airline_name

FROM flights f

JOIN airlines a

ON f.carrier = a.carrier

WHERE a.name ILIKE '%air%' -- ILIKE for case-insensitive matching

ORDER BY airline_name

LIMIT 10;

```| airline_name |

|---|

| 9E-Endeavor Air Inc. |

| AA-American Airlines Inc. |

| AS-Alaska Airlines Inc. |

| B6-JetBlue Airways |

| DL-Delta Air Lines Inc. |

| EV-ExpressJet Airlines Inc. |

| F9-Frontier Airlines Inc. |

| FL-AirTran Airways Corporation |

| HA-Hawaiian Airlines Inc. |

| MQ-Envoy Air |

Concatenation is so common there is an operator for it.

- Use

||as a binary operator to concatenate the elements on both sides of it.

```{sql}

--| connection: con

-- [SQL:Mostly standard; note || + ILIKE] || concatenation is standard SQL,

-- but not supported by all DBMS (e.g., MySQL uses CONCAT()).

SELECT DISTINCT f.carrier || '-' || a.name AS airline_name

FROM flights f

JOIN airlines a

ON f.carrier = a.carrier

WHERE a.name ILIKE '%air%' -- ILIKE for case-insensitive matching

ORDER BY airline_name

LIMIT 10;

```| airline_name |

|---|

| 9E-Endeavor Air Inc. |

| AA-American Airlines Inc. |

| AS-Alaska Airlines Inc. |

| B6-JetBlue Airways |

| DL-Delta Air Lines Inc. |

| EV-ExpressJet Airlines Inc. |

| F9-Frontier Airlines Inc. |

| FL-AirTran Airways Corporation |

| HA-Hawaiian Airlines Inc. |

| MQ-Envoy Air |

8.9.2 Regular Expressions in SQL

SQL supports regex pattern matching to filter strings more precisely.

- In many SQL dialects you can use

REGEXP(in DuckDB useREGEXP_MATCHES) to match patterns:

Get Flights whose destination airport code starts with ‘O’ and ends with ‘A’.

```{sql}

--| connection: con

-- [SQL:DuckDB] REGEXP_MATCHES is DuckDB-specific naming;

-- other DBMS use REGEXP_LIKE / ~ / RLIKE, etc.

SELECT flight, dest

FROM flights

WHERE REGEXP_MATCHES(dest, '^O.*A$')

LIMIT 10;

```| flight | dest |

|---|---|

| 4417 | OMA |

| 4085 | OMA |

| 4160 | OMA |

| 4417 | OMA |

| 4085 | OMA |

| 4294 | OMA |

| 4085 | OMA |

| 4294 | OMA |

| 4085 | OMA |

| 4085 | OMA |

- Carriers whose name contains ” Air “.

```{sql}

--| connection: con

-- [SQL:DuckDB] REGEXP_MATCHES name varies across DBMS.

SELECT name

FROM airlines

WHERE REGEXP_MATCHES(name, '^.* Air .*')

LIMIT 10;

```| name |

|---|

| Endeavor Air Inc. |

| Delta Air Lines Inc. |

| United Air Lines Inc. |

- Replace tail numbers that begin with “N” with only the digits after the “N”.

```{sql}

--| connection: con

-- [SQL:DuckDB] REGEXP_REPLACE exists in many DBMS but differs in

-- name/flags/backref syntax.

SELECT tailnum,