12 Considerations for Responsible Data Science

responsible data science, data science life cycle, law, policy, ethics, ethical frameworks

12.1 Introduction

12.1.1 Learning Outcomes

- Differentiate legal, professional, and ethical considerations.

- Identify and interpret professional Codes of Conduct.

- Apply Philosophical Frameworks for ethical reasoning.

- Identify sources of potential ethical issues in Data Science.

- Practice responsible data science from legal, professional, and ethical perspectives.

12.1.2 References:

- See references.

12.1.2.1 Other References

- See links in the notes.

These notes provide general background to facilitate educational discussion.

They do not offer any form of legal advice or recommendations.

12.2 Legal, Professional, and Ethical Considerations

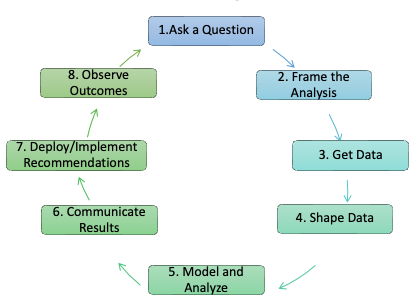

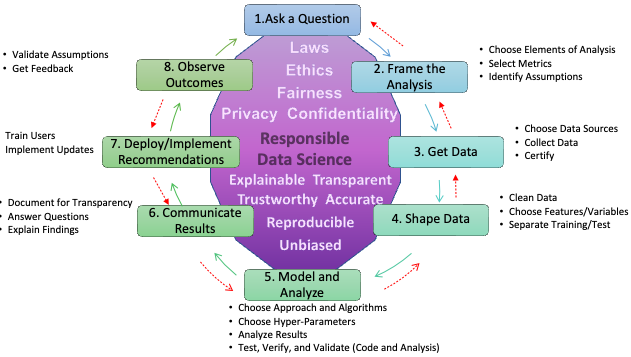

12.2.1 Data Science Follows a Life Cycle

12.2.2 Data Scientists Make Choices When Answering Questions

- What kinds of projects do I work on and what questions do I analyze?

- Who are the Stakeholders?

- Towards what goals do I optimize my models? Accuracy, Fairness, Equity, Equality, …?

- How do I get my data? How do I protect my data?

- Is my data representative of the population?

- What do I do about “bad” or missing data or “outliers” that “mess up” my results?

- What variables/features/attributes do I use?

- How much effort do I put into checking my results? Are they repeatable?

- How do I leverage/credit other people’s work?

- How do I report my results - what is intellectual property and what should be public?

- …

- Choices can involve Legal, Professional, or Ethical Considerations

Choices have consequences, with benefits and risks.

12.2.3 Legal Considerations

Legal considerations address the criminal/civil risks for violating laws.

Laws (statutes) specify permissible and/or impermissible activities as well as potential punishments (judicial, e.g., imprisonment).

Policies (regulations) provide guidance on implementing activities (within legal constraints) along with adjudication procedures and potential punishments (non-judicial, e.g., debarment).

Legal issues in big data include how you gather, protect, and share data, and increasingly, how you use it.

Laws are “local” not universal: Pirates or Privateers

Laws do not keep pace with technology (generative AI anyone?) and can be difficult to interpret.

When in doubt, ask a lawyer competent in the issue.

12.2.4 Laws Proscribe Some Choices

- Health Insurance Portability and Accountability Act (HIPAA) (US DHHS 2015). See HIPAA Compliant Data Analytics: Privacy-Preserving Healthcare Insights (HIPAA Partners Team 2025)

- Family Educational Rights and Privacy Act (FERPA) (DOEd n.d.)

- Fair Credit Reporting Act (FTC 2013b). See FTC Credit Reporting Information (FTC 2018).

- Fair Housing Act (DHUD 2021)

- Equal Employment Opportunity Act (EEOC 2021b)

- Children’s Online Privacy Protection Act (COPPA) and Rule (FTC 2013a)

- Genetic Information Nondiscrimination Act (GINA) (EEOC 2021a). See GINA, Big Data, and the Future of Employee Privacy (Areheart and Roberts 2019)

- European General Data Protection Regulation (GDPR) (Wikipedia n.d.)

- In 2024 the European Artificial Intelligence Act was passed to foster responsible AI development and deployment in the EU. (Commission n.d.)

- California Consumer Privacy Act (CCPA), effective 2020 (Edelman 2020)

- And many more … with the expectation of more to come …

- “In the first half of 2025, at least 34 states introduced over 250 AI-related health bills”. (Susarla 2025)

- Congressional interest in Section 230 of the Communications Decency Act of 1996

- Court Cases: TikTok Ban, Censorship and Antitrust cases for Meta and Google (2), and many more …

- Data Privacy Compliance Guide for Businesses: GDPR, CCPA, State Laws (BRITECITY 2026)

- Regulating Artificial Intelligence: U.S. and International Approaches and Considerations for Congress (Laurie Harris 2025)

12.2.5 Professional Considerations

Professional considerations address the risk of activities related to organizations with which you affiliate.

- Professional Organizations

- Employer Organizations

Organizational bylaws or policies identify and manage risk to the institution.

These may include professional “Codes of Conduct” or “Codes of Ethics”.

- American Statistical Association (ASA 2021)

- Association for Computing Machinery (ACM 2021)

- INFORMS Ethical Guidelines (INFORMS 2021)

- Data Science Association (Association 2021)

Organizational behavior and ethics may conflict with individual ethics.

- Choices can include trying to change the organization or offending individuals; or leaving or being forced to leave the organization, with potential legal issues as well.

When in doubt, ask a mentor or manager you trust.

12.2.6 Ethical Considerations

Ethical considerations arise when asking

- “What should I do?”

- “What is right?”

Ethical Choices can be hard, especially when choices may require violating a law, regulation, or professional guideline.

Individual principles and cultural norms shape options and guide choices in complex situations.

Often, there is no universally accepted, or even good, “right answer”.

- May have to choose between two bad outcomes, as in The Trolley Problem, which has many variations and analogs. (Merriam-Webster 2021)

May have to choose between individual and group outcomes.

Ethical choices can lead to feelings of guilt, group reprobation, civil action (torts), or criminal charges.

Many, many frameworks attempt to guide Ethical Choices.

12.3 Ethical Frameworks

12.3.1 Some (of many) Ethical Frameworks

Consequentialism or Utilitarianism: greatest balance of good over harm (groups/individual).

- Focus is on the consequences.

- Choose the future outcomes that produce the most good.

- Compromise is expected as the end justifies the means.

Duty or Deontology: Do your Duty, Respect the Rules, Be Fair, Follow Divine Guidance.

- Focus is on the rules and whether the act violated the rules regardless of consequences.

- Do what is “right” regardless of the consequences or emotions.

- Everyone has the same duties and ethical obligations at all times.

Virtues: Live a Virtuous Life by developing the proper character traits.

- Focus is on what society would say a virtuous person would do.

- Ethical behavior is whatever a virtuous person would do.

- Tends to reinforce local cultural norms as the standard of ethical behavior.

Rights

- Focus on the rights of the affected stakeholders, both individuals and groups.

- Ethical behavior does not result in a violation of someone’s rights.

- How do we know, or agree on, what is a “right” versus a “privilege” or “benefit”?

Frameworks can conflict with each other or be “wrong” when taken to the extreme.

No single or simple right answer!

12.3.2 Using Frameworks in Ethical Decisions

- Recognize There May Be an Ethical Issue.

- Assess the underlying definitions, facts, and assumptions and constraints

- Consider the Parties Involved, the stakeholders.

- What individuals or groups might be harmed or benefit, and by when.

- Gather all Relevant Information. Are you missing key facts? Are they knowable?

- Formulate Actions and Evaluate under Alternative Frameworks.

- What will produce the most good and do the least harm? (Utilitarian)

- What will be in accordance with existing rules or principles? (Duty)

- What leads me to act as the sort of person I want to be? (Virtues)

- What respects the rights of everyone affected by the decision? (Rights)

- What treats people equally, equitably, or proportionately? (Justice)

- What serves the entire community, not just some members? (Common Good)

- Examine Alternatives and Make a Decision.

- Act and Observe.

- Assess and Reflect on the Outcomes.

If you don’t like the outcomes, restart the process.

12.4 Identifying Ethical Issues

12.4.1 Our Biased Brains Helped Us Survive

Our brains have evolved mechanisms to make quick decisions.

These are the source of “Unconscious Biases” or “Implicit Biases”.

- “An unconscious association, belief, or attitude toward any social group” that can lead to stereotyping. (Kendry Cherry MsED 2025)

- Friend or Threat, Familiar or Strange, Us versus Them

- Learn more and test yourself at Project Implicit (Implicit 2022).

- Under stress, we tend to bypass the higher-level cognitive centers that evolved later and take more time to reason.

Humans get comfortable with patterns, which can lead to systematic deviations from rational judgment.

- 12 Major Types of Cognitive Bias Explained. (Psychology 2017)

- Many potential biases could affect our decisions. (Desjardins 2017)

Ethical Challenges can arise from our own implicit biases or the implicit biases of others affecting our data, thoughts, and actions.

Short-term choices under stress may be bad in the long run ….

12.4.2 Can Data and Algorithms Exhibit Harmful Bias?

The question goes back decades; modern AI systems are driving expanded research.

Bias in the (training) data (historical, sampling, …) can drive biased outcomes.

Are algorithms really less biased than people? It depends …

- How do you measure fairness? See Tutorial: 21 Definitions of Fairness …

- How can you tell with “black box” models? See Machine learning transparency

- Algorithms find “hidden” relationships among proxy variables that can distort the results and interpretations.
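To make the “How do you measure fairness?” question concrete, here is a minimal sketch of two common group-fairness checks: the gap in selection rates (often called demographic parity) and the gap in true positive rates (often called equal opportunity). The function names, the toy labels, and the groups "A" and "B" are hypothetical and chosen only for illustration; real analyses usually examine many more metrics.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Return the selection rate and true positive rate for each group."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "true_pos": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += yp        # predicted positive
        c["pos"] += yt             # actually positive
        c["true_pos"] += yt * yp   # positive and predicted positive
    rates = {}
    for g, c in counts.items():
        rates[g] = {
            "selection_rate": c["selected"] / c["n"],
            "tpr": c["true_pos"] / c["pos"] if c["pos"] else float("nan"),
        }
    return rates


def max_gap(rates, key):
    """Largest difference in a metric between any two groups."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)


# Hypothetical toy data: 1 = positive outcome, 0 = negative outcome.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
dp_gap = max_gap(rates, "selection_rate")  # demographic parity gap
eo_gap = max_gap(rates, "tpr")             # equal opportunity gap
print(rates)
print("selection-rate gap:", dp_gap, "| true-positive-rate gap:", eo_gap)
```

In this toy example both groups are selected at the same rate, so the selection-rate gap is zero, yet qualified members of group B are approved only half as often as those in group A. Whether the model looks “fair” depends on which definition you choose.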

Trade-offs among accuracy, explainability, and transparency are not a zero-sum game.

- These relationships are triangular and depend on problem context, model design, data quality, and choice of metrics. (Li and Li 2025)

Active area for research and publication.

12.4.3 Three Articles for Consideration

Higher error rates in classifying the gender of darker-skinned women than for lighter-skinned men (O’Brien 2019)

Big Data used to generate unregulated e-scores in lieu of FICO scores for credit in lending; the article is on Canvas. (Bracey and Moeller 2018)

Discussion Questions

- Is there an ethical issue or more than one? What is it?

- Who is affected and who is responsible?

- Pick one of the Professional Codes of Conduct or Guidelines. How would it apply?

- What would you do differently or recommend?

12.4.4 More Examples of Ethical Issues

- Contradictions and competition among legal, professional, and ethical guidelines.

- Using biased data (even unknowingly) or eliminating extreme values or small groups.

- Using Proxies for “Protected” Attributes (even unknowingly).

- Protection of Intellectual Property versus Explainability, Transparency and Accountability

- Law of Unintended Consequences: people will use your products and solutions in “creative” ways that may not align with your principles or may be technically inappropriate.
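One rough way to screen for proxies of “protected” attributes, mentioned in the list above, is to ask how much better you can guess the protected attribute once you know the candidate feature. The sketch below is an assumption-laden illustration, not a standard test: `proxy_strength`, the ZIP codes, and the group labels are all hypothetical.

```python
from collections import Counter, defaultdict


def proxy_strength(feature, protected):
    """Compare two ways of guessing the protected attribute.

    baseline:   always guess the most common protected value overall.
    with_proxy: within each feature value, guess the most common protected value.
    A large improvement suggests the feature can act as a proxy.
    """
    n = len(protected)
    baseline = Counter(protected).most_common(1)[0][1] / n
    by_value = defaultdict(Counter)
    for f, p in zip(feature, protected):
        by_value[f][p] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return baseline, correct / n


# Hypothetical data: ZIP code was kept as a feature, group was "dropped".
zip_code = ["10001", "10001", "10001", "10002", "10002", "10002", "10003", "10003"]
group = ["X", "X", "X", "Y", "Y", "X", "Y", "Y"]

baseline, with_proxy = proxy_strength(zip_code, group)
print(f"guess without feature: {baseline:.3f}, with feature: {with_proxy:.3f}")
```

Here removing the group column does not remove the information: ZIP code alone recovers the group for 7 of 8 records, versus 4 of 8 without it. The same screen is measured on the data it was fit to, so treat it as a warning flag rather than an estimate.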

So what can you do? What should you do?

12.5 Practicing Responsible Data Science

What Can You Do? What Should You Do?

12.5.1 Consider Ethical Choices Across the DS Life Cycle

Get Data: How was it collected? Was informed consent required/given? Is there balanced representation? Selection Bias? Availability Bias? Survivorship Bias?

Shape Data: Are we aggregating distinct groups? How do we treat missing data? Are we separating training and testing data?

Model and Analyze: How are we documenting assumptions, treating extreme values, or checking over-fitting? Are we checking multiple fairness and performance metrics?

Communicate Results: Are the graphs misleading? Did we cherry pick or data snoop? Are we reporting \(p\)-values and hyper-parameters?

Deploy/Implement: Is the deployment accessible to all?

Observe Outcomes: Can we check assumptions and analyze outcomes for bias?

Are we following professional guidelines from ASA, ACM, INFORMS, …?
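As one example of a life-cycle check, the “balanced representation” question under Get Data can be sketched as a comparison of group shares in the sample against known population shares, summarized with a chi-square goodness-of-fit statistic. The population shares, the group names, and the `representation_report` helper below are hypothetical.

```python
from collections import Counter


def representation_report(sample_groups, population_shares):
    """Compare sample shares to population shares, group by group.

    Returns the per-group shares and the chi-square goodness-of-fit statistic
    (sum over groups of (observed - expected)^2 / expected).
    """
    n = len(sample_groups)
    counts = Counter(sample_groups)
    report = {}
    chi_square = 0.0
    for g, share in population_shares.items():
        observed = counts.get(g, 0)
        expected = share * n
        report[g] = {"sample_share": observed / n, "population_share": share}
        chi_square += (observed - expected) ** 2 / expected
    return report, chi_square


# Hypothetical population shares and a hypothetical sample of 100 records.
population_shares = {"urban": 0.5, "suburban": 0.3, "rural": 0.2}
sample = ["urban"] * 70 + ["suburban"] * 25 + ["rural"] * 5

report, chi_square = representation_report(sample, population_shares)
for g, r in report.items():
    print(g, r)
# With 3 groups there are 2 degrees of freedom; the 5% critical value is 5.991.
print("chi-square:", round(chi_square, 2))
```

A statistic well above the critical value, as in this toy sample where rural records are badly under-represented, is a prompt to ask how the data were collected, not a fix in itself.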

12.5.2 Consider Frameworks for Responsible Data Science

Published by the Royal Statistical Society and the Institute and Faculty of Actuaries in A Guide for Ethical Data Science

- Start with clear user need and public benefit

- Be aware of relevant legislation and codes of practice

- Use data that is proportionate to the user need

- Understand the limitations of the data

- Ensure robust practices and work within your skill set

- Make your work transparent and be accountable

- Embed data use responsibly

Department of Defense AI Capabilities shall be:

Responsible. … exercise appropriate levels of judgment and care, while remaining responsible for the development, deployment, and use….

Equitable. … take deliberate steps to minimize unintended bias in AI capabilities.

Traceable. … develop and deploy AI capabilities such that relevant personnel have an appropriate understanding …, including with transparent and auditable methodologies, data sources, and design procedures and documentation.

Reliable. … AI capabilities will have explicit, well-defined uses, and the safety, security, and effectiveness … will be subject to testing and assurance within those defined uses ….

Governable. … design and engineer AI capabilities to fulfill their intended functions while possessing the ability to detect and avoid unintended consequences, and the ability to disengage or deactivate deployed systems that demonstrate unintended behavior.

(DoD 2020)

Google AI Principles

- Bold innovation: We develop AI that assists, empowers, and inspires people in almost every field of human endeavor; drives economic progress; and improves lives, enables scientific breakthroughs, and helps address humanity’s biggest challenges. Avoid creating or reinforcing unfair bias. …

- Responsible development and deployment: Because we understand that AI, as a still-emerging transformative technology, poses evolving complexities and risks, we pursue AI responsibly throughout the AI development and deployment lifecycle, from design to testing to deployment to iteration, learning as AI advances and uses evolve. …

- Collaborative progress, together: We make tools that empower others to harness AI for individual and collective benefit. …

IBM Principles for Trust and Transparency

- The purpose of AI is to augment human intelligence. The purpose of AI and cognitive systems developed and applied by IBM is to augment – not replace – human intelligence.

- Data and insights belong to their creator.

- IBM clients’ data is their data, and their insights are their insights. Client data and the insights produced on IBM’s cloud or from IBM’s AI are owned by IBM’s clients. We believe that government data policies should be fair and equitable and prioritize openness.

- New technology, including AI systems, must be transparent and explainable.

- For the public to trust AI, it must be transparent. Technology companies must be clear about who trains their AI systems, what data was used in that training and, most importantly, what went into their algorithm’s recommendations. If we are to use AI to help make important decisions, it must be explainable.

(IBM 2019)

A guide to Building “Trustworthy” Data Products

Based on the golden rule: Treat others’ data as you would have them treat your data

Consent - Get permission from the owners or subjects of the data before …

Clarity - Ensure permission is based on a clear understanding of the extent of your intended usage

Consistency - Build trust by ensuring third parties adhere to your standards/agreements

Control (and Transparency) - Respond to data subject requests for access/modification/deletion, e.g., the right to be forgotten

Consequences (and Harm) - Consider how your usage may affect others in society and potential unintended applications.

The FAIR Guiding Principles for scientific data management and stewardship aim to improve the “Findability, Accessibility, Interoperability, and Reuse” of digital assets.

- Findable: Metadata and data should be easy to find for both humans and computers. Machine-readable metadata are essential for automatic discovery …

- Accessible: Users need to know how data can be accessed, including authentication and authorization, e.g., make data downloadable from a credible repository …

- Interoperable: Your data should be in non-proprietary, modern formats/software so it can be integrated with other data …

- Reusable: Metadata and data should be well-described so they can be replicated and/or combined in different settings based on proper documentation and a clear and accessible data usage license.

12.5.3 To Be an Ethically Responsible Data Scientist …

Integrate ethical decision making into your environment.

- Recognize the legal, professional, and ethical issues surrounding your work.

- See Weapons of Math Destruction for multiple scenarios. (O’Neil 2017)

- 7 Cognitive Biases That Affect Your Data Analysis (and How to Overcome Them) (Vattikuti 2025)

- Ask Questions of Peers, Managers, Mentors, and Experts to get guidance.

- Document your sources and approach: assumptions, data, code, and references. Use Quarto.

- Protect data: especially HIPAA or Personally Identifiable Information (PII). (Us Dept of Labor 2024)

- Consider Differential Privacy à la the Census Bureau. (Bureau 2023)

- Create reproducible analysis and solicit appropriate reviews and feedback.

- Avoid “Spurious Correlations” (Vigen 2021), produce results that Reveal, Don’t Conceal (Weissgerber Tracey L. 2019), and don’t create Examples of Misleading Statistics (Calzon 2021).

- Follow Publishing and Conference Ethical Guidelines, e.g., AutoML’s (AutoML n.d.).

- Consider your own biases: see 20 Tips For Addressing Unconscious Bias In The Workplace, Starting From The Top Down (ExpertPanel 2024).
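The Differential Privacy item in the list above can be illustrated with the textbook Laplace mechanism for a counting query. This is a minimal teaching sketch, not the Census Bureau's production system; the `private_count` helper, the ages, and the choice of epsilon are hypothetical.

```python
import random


def private_count(values, predicate, epsilon, rng):
    """Release a count with Laplace noise calibrated to epsilon.

    A counting query has sensitivity 1: adding or removing one record
    changes the true count by at most 1, so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    rate = epsilon  # rate = 1 / scale, with scale = 1 / epsilon
    # The difference of two i.i.d. exponential draws is Laplace(0, 1 / rate).
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise


# Hypothetical records: ages of eight survey respondents.
ages = [23, 67, 45, 71, 34, 80, 66, 19]
rng = random.Random(0)  # seeded only so the sketch is repeatable

noisy = private_count(ages, lambda a: a >= 65, epsilon=1.0, rng=rng)
print("true count: 4, released count:", round(noisy, 2))
```

Smaller values of epsilon add more noise and give stronger privacy at the cost of accuracy, which is itself one of the choices with benefits and risks discussed throughout these notes.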

As Davy Crockett might say, “Try to be sure you are right, then Go Ahead!”

12.5.4 Stay Current on Emerging Ideas

- Review articles on Big Data/Data Science/AI Ethics, e.g.,

- Review the International Inventory of Awesome AI Guidelines (IEAI-ML 2021)

- Consider popular press articles such as

- Investigate Academic Centers, e.g.,

- Check out policy think tanks, e.g., Center for AI and Digital Policy (CAIDP), GovAI, Partnership on AI, or Carnegie AI Policy Labs

- Consider Conferences such as ACM Conference on Fairness, Accountability, and Transparency (ACM n.d.) or Conference on Artificial Intelligence, Ethics, and Society (AAAI ACM n.d.)

Try to stay on the fast moving train that is Responsible Data Science.

12.6 Considerations for Responsible Data Science Summary

You should now be able to better:

- Differentiate legal, professional, and ethical considerations.

- Identify and interpret professional Codes of Conduct.

- Apply Philosophical Frameworks for ethical reasoning.

- Identify sources of potential ethical issues in Data Science.

- Practice responsible data science from legal, professional, and ethical perspectives.

You should also have greater competence in considering choices that practice and promote responsible data science today and in the future.

Finally, when it comes to responsible Data Science, Jane Addams reminds us that thinking about ethics is not enough, …

“Action indeed is the sole medium of expression for ethics.”