7 Getting Data by Web Scraping

rvest, xml, chromote, rselenium

7.1 Introduction

7.1.1 Learning Outcomes

- Check the Terms of Service and robots.txt prior to scraping.

- Employ Cascading Style Sheet (CSS) selectors in web scraping.

- Use the {rvest} package for web scraping static sites.

- Apply techniques to tidy data after web scraping.

7.1.2 References:

- R for Data Science 2nd Edition Chapter 25 Web Scraping Wickham, Cetinkaya-Rundel, and Grolemund (2023)

- Intro to robots.txt Google (2023)

- {polite} package Perepolkin (2023)

- {rvest} package Wickham (2022)

- {xml2} package Wickham, Hester, and Ooms (2023)

- {chromote} Chang and Schloerke (2024)

- SelectorGadget for Chrome Cantino (2013)

- SelectorGadget vignette Wickham (2023)

- {readxl} package Wickham and Bryan (2023)

- {googlesheets4} package Bryan (2023)

- {selenider} package Ashby Thorpe (2025)

- {rplaywright} package Siregar (2024)

- {promises} package Cheng (2025a)

- {promises} vignetteCheng (2025b)

- {RSelenium} package Harrison (2023a)

- {RSelenium} vignette Harrison (2023b)

- {httpuv} package Joe Cheng et al. (2025)

- {PDF} Data Extractor package Stricker (2023)

- {tabulapdf} package Sepulveda (2024a)

- {tabulapdf} vingette Sepulveda (2024b)

7.1.2.1 Other References

- W3 Schools HTML Tutorial Schools (2023b)

- W3 Schools CSS Tutorial Schools (2023a)

- W3 Schools Javascript Promises Schools (n.d.)

- Document Object Model Docs (2023)

- Playwright Microsoft (n.d.)

- RSelenium Tutorial Atuo (2025)

- The Ultimate CSS Selector Cheat Sheet scrape-it.cloud (2022)

7.2 Web Scraping

Web scraping is a way to extract data from web sites that do not have APIs, or whose APIs do not provide access to the data you need.

A decade ago, web scraping was fairly straightforward.

However, more modern websites create challenges for scraping as they employ “behind-the-page” techniques to optimize speed while balancing openness (so they can be found and useful) with protecting their intellectual property.

- Building a successful web-scraping strategy starts with a key realization:

What you see in a browser is not (necessarily) what your code gets.

The web page you see as a human using a browser may differ substantially from the content returned to your code when it makes a request to the same URL.

- Many pages (especially older ones) are mostly static HTML, where the content of interest is always present directly in the page source.

- Newer web pages, particularly those optimized for performance and interactivity, rely on one or more techniques that dynamically add content only as required.

- Some pages are “rendered” or “hydrated” after the initial load, with content inserted by JavaScript.

- Some pages deliver only partial content initially and load additional content as the user scrolls (“infinite scrolling”).

- Some pages return different content depending on request headers (especially the User-Agent) or cookies related to consent, location, or experiments.

- Many web sites now actively balance being discoverable (so search engines can index their content) with protecting their intellectual capital.

- As a result, sites may selectively limit, reshape, or obscure the data returned to automated clients, while still presenting full content to human users and allowed search engine crawlers.

- Modern web sites also frequently re-implement and refactor their code, and often there is no visible change to the rendered page.

- Front-end frameworks, build pipelines, and A/B testing can change the underlying structure, class names, or data layout while the page looks identical to a human user.

- Scraping code that relies too closely on a specific page structure may fail without warning and without any change in the URL or visible content.

Taken together, these realities mean successful web scraping is less about finding a clever content selector and more about choosing a strategy that is robust to partial content, dynamic content, client-dependent responses, and structural change.

7.3 Web Scraping and Responsible Data Science - To Scrape or Not to Scrape, that is the question

Is web scraping legal? Yes, but …

- Web Scraping - Legal or Illegal?

- Recent Court cases have ruled in favor of the web scrapers in some circumstances.

- In 2024, a U.S. District Court in the Northern District of California dismissed contract/tort claims that sought to bar scraping of publicly available data, holding those claims were preempted by the Copyright Act. This decision has implications for whether website terms of use can limit scraping for commercial purposes or AI training. Recent District Court decision casts doubt on terms of use barring data scraping

- In an ongoing 2025 Canadian case, news organizations sued OpenAI, alleging its training of generative AI models involved scraping copyrighted and other protected content from their websites without permission,raising questions about copyright infringement and fair use in the context of AI training. Scraping the Surface: OpenAI Sued for Data Scraping in Canada

- In 2021, HiQ Labs v. LinkedIn, the Ninth Circuit affirmed scraping publicly accessible profile data does not necessarily violate the federal Computer Fraud and Abuse Act (CFAA), a foundational U.S. precedent on whether automated collection of public web data is lawful. HIQ LABS, INC.,Plaintiff-Appellee, v. LINKEDIN CORPORATION, Defendant-Appellant

- Scraping data for personal use appears to fall under the “fair use” doctrine.

- Commercial use often violates the Terms of Service for the web site owner.

- Laws are being updated in the US and elsewhere to address data privacy, especially for personal identifiable data (PII).

- The post Ethical & Compliant Web Data Benchmark in 2026 argues that ethical web scraping is not just about what data you collect, but how it is collected, including respecting user consent, avoiding deceptive access methods, and choosing infrastructure providers with strong abuse prevention and transparency.

Because laws and regulation are limited, fuzzy, and evolving, responsibility shifts to data scientists and organizations to ensure their scraping practices—and their vendors’ proxy and IP sourcing—do not enable harm, privacy violations, or misuse.

In practice, this means treating web data collection as part of your considerations for responsible data science across the project life cycle, where legal and professional risks arise not only from your code, but from the tools, proxies, and providers you employ.

7.3.1 What is Robots.txt?

Websites use a “conventional signaling mechanism”, the Robots Exclusion Protocol, to express their preferences about automated access to different parts of their site.

- The intent is to balance discoverability (e.g., search engines) with protection of proprietary, sensitive, or monetizable content.

Most websites expose these preferences in a plain-text file located at the root of the web site, e.g., at https://example.com/robots.txt.

- This file is read by crawlers and scraping tools before crawling the site.

- See Introduction to robots.txt for background.

The file defines rules (directives) that apply to one or more automated clients.

- Rules are expressed in terms of URL paths (directory-style structure).

- Rules are scoped by User-agent:

*means “all bots”- Specific bot names (e.g., Googlebot) can be targeted explicitly

- Lines beginning with

#are comments and ignored by crawlers.

Common Examples

- Allow Everybody Everything as nothing is disallowed.

User-agent: *

Disallow:- Disallow Everybody Everything from the root directory

/on down.

User-agent: *

Disallow: /- Everybody is disallowed from scraping the Private Directory

User-agent: *

Disallow: /private/The rules often align with paths listed in sitemap.xml, but they serve a different purpose: sitemaps advertise what exists; robots.txt expresses what should or should not be crawled.

Different organizations adopt very different levels of strictness and complexity in their robots.txt rules. For example:

- IMDB selectively blocks areas like sign-in, user actions, and some dynamic pages.

- Wikipedia is largely open to allow for broad crawling

- LinkedIn is highly restrictive, especially around profiles and search (note the court case above).

- Google has complex rules tailored to many different crawlers.

Reviewing these files is often instructive when deciding whether and how to scrape a site.

robots.txtis not law. It is a preference signal, not a legal enforcement mechanism.- Compliance is voluntary, but:

- Ignoring it may violate site Terms of Service

- It may trigger blocking, rate limiting, or legal scrutiny

- It is widely regarded as a baseline for responsible scraping behavior

From an ethical data science perspective, treat robots.txt should be treated as minimum due diligence:

- check it,

- understand it, and

- factor it into decisions about whether scraping is appropriate, even when access is technically possible.

7.3.2 General Guidelines for Scraping

While web scraping is technically straightforward, doing it responsibly requires additional care.

- Scraping can impose real load on a website’s servers and supporting services.

- Many sites publish expectations or guidance intended to prevent abuse and service degradation.

- Zyte’s Best Practices for Web Scraping outlines common norms such as rate limiting, caching, and respecting site signals.

- While some scrapers deliberately attempt to evade Terms of Service using techniques like proxy rotation or identity masking, this approach increases legal, professional/operational, and ethical risks.

7.3.3 The {polite} Package

When you need to scrape many pages from the same site, or revisit a site repeatedly, consider using the {polite} package.

- {polite} Perepolkin (2023) is designed to support responsible, standards-aware scraping..

- It helps align your scraping behavior with a site’s robots.txt preferences and reasonable access patterns.

- The two core functions are:

bow(): introduces your client to the host and checks permissions via robots.txtscrape(); performs the actual retrieval while respecting those constraints

- This approach is especially useful for multi-page workflows, pagination, or repeated batch jobs.

Using {polite} does not guarantee permission, but it helps ensure that your scraper behaves predictably and defensibly.

- Download once.

- Do not repeatedly download the same pages during development.

- Download the page once, save it locally, and develop against the saved file.

- Develop locally.

- Always separate page acquisition from data extraction logic.

- Re-download only when the source content has genuinely changed or when needed.

Responsible scraping is not just about gaining access to content, it is also about minimizing harm, preserving reproducibility, and reducing legal and professional risk through responsible behavior.

7.4 The {rvest} Package

The {rvest} package is a tidyverse package designed to help users scrape (or “harvest”) data from modern HTML web sites and pages.

- Install {rvest} using the console

install.packages("rvest").

The package has a variety of functions for working with web pages and across pages on website.

- There are functions that work with the elements of web pages and web page forms.

- There are also functions to manage “sessions” where you simulate a user interacting with a website, using forms, and navigating from page to page.

The {rvest} package depends on the {xml2} package, so all the xml functions are available, and {rvest} adds a thin wrapper for working specifically with HTML documents.

- The XML in “xml2” stands for “Extensible Markup Language”. It’s a markup language (like HTML and Markdown), useful for representing data.

- You can parse XML documents with

xml(). - You can extract components from XML documents with

xml_element(),xml_attr(),xml_attrs(),xml_text()andxml_name().

The {xml2} package has functions to write_html() or write_xml() to save the HTML/XML document to a path/file_name for later use.

The {rvest} package has a dependency on the {xml2} package so will install it automatically.

Many functions in {rvest} will call {xml2} functions so you do not need to

library{xml2}to use {rvest}.However, {xml2} has functions that are not used by {rvest} so you may need to library it or use

::, to use those functions e.g,xml2::write_html().

7.5 A Minimal Model of Website Structure

Before discussing web scraping strategies, it is useful to understand the structure of modern web pages.

7.5.1 Technologies

Websites are built using multiple technologies but three are key for web scraping.

- HyperText Markup Language, or HTML: HTML is a markup language that uses paired begin and end tags to identify and organize the content of a web page.

- HTML tags can be nested, allowing elements to be grouped and related to one another.

- When parsed, the HTML document is converted into a structured tree known as the Document Object Model (DOM).

- Web scraping primarily targets this DOM representation.

- Cascading Style Sheets (CSS): CSS is a rule-based language used to control the presentation of HTML elements.

- CSS rules specify how elements look and behave visually (fonts, colors, spacing, layout).

- CSS selectors are also used as a query language for identifying elements in the DOM, even though their original purpose is styling.

- Scrapers commonly reuse these selectors to locate content, even though they were not designed for data extraction.

- JavaScript: JavaScript is the programming language of the web that operates on top of HTML and CSS.

- It enables interactivity and dynamic behavior, including modifying the DOM after the page loads.

- JavaScript is often responsible for fetching data asynchronously, implementing infinite scrolling, or inserting content after initial page load.

- When content is created or modified by JavaScript, it may not be present in the raw HTML returned by an HTTP request.

An HTML page on the web (or as a file on local drive) is a tightly-structured document composed of nested elements.

7.5.2 The Document Object Model (DOM

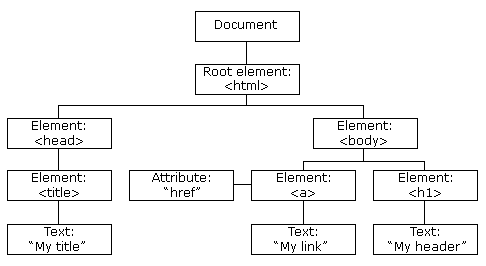

When parsed by a browser, or by code using a scraping library/package, the document structure is expressed in the Document Object Model (DOM):

- The DOM is an upside-down tree structure with the root at the top as seen in Figure 7.1. (Docs 2023)

- Each node corresponds to an element, text node, or attribute.

- All of the properties, methods, and events available for manipulating and creating web pages are organized as objects.

- Parent–child relationships define containment and scope of the nodes.

- Browsers parse the DOM to figure out how to organize the objects for presentation in the browser.

Even though not every node is an HTML element (some are text or attribute nodes), it’s common to refer to the all the nodes in the DOM as elements.

- You will see the term “elements” used to refer to “nodes” regardless of the actual node type.

Scraping libraries in both R and Python work by navigating and querying this DOM tree to find the elements with the content of interest you want to extract.

7.5.3 HTML Elements

Each HTML element consists of:

- A tag name (e.g., div, span, a, h3)

- Optional attributes (e.g., id, class, href, data-*)

- Content (text or nested elements)

When you scrape an HTML document, and convert it into text, you will see the HTML tags marking up the text.

- These tags (always in pairs for begin and end) delineate the nodes in the document, the internal structure of the nodes, and can also convey formatting information.

- There are over 100 standard HTML tags. Some commonly used tags are shown in Table 7.1.

Attributes, especially class, id, and data-* attributes, are often the most stable information for identifying content you want to extract.

7.5.4 CSS Selectors

Every website is formatted with CSS.

In CSS rule syntax, the part before the curly brace is called a selector.

- The selector identifies the HTML elements to which the formatting rules should apply.

- These two rules correspond to the following HTML elements:

- The code inside the CSS curly braces consists of the formatting properties and their values.

- The

h3rule applies to all<h3>elements, making their text red and italic. - The second rule applies to all

<div>elements with class “alert” that are descendants of a<footer>element, hiding them from view.

- The

- CSS applies the same formatting properties to every element matched by the selector.

- Each

h3tag will be styled in red, italicized text.

- Each

- The term “cascading” in CSS refers to how formatting rules are inherited and resolved.

- Styles applied to a parent element may propagate to its children.

- When multiple rules apply, CSS uses a priority system (specificity, order, and importance) to determine which rule takes effect.

There are five categories of CSS selectors

- Simple selectors select elements based on tag name, id, or class.

- A tag (element) selector uses the HTML tag name, such as

h3.- All

<h3>elements in the document will be selected.

- All

- An

IDselector uses#, for example#my_id, to select the single element withid="my_id".- IDs are intended to be unique within a page.

- A

classselector uses., for example.my_class, to select all elements withclass="my_class".

- A tag (element) selector uses the HTML tag name, such as

- Combinator selectors combine simple selectors to narrow the set of selected elements based on a relationships between elements in the DOM.

- As an example,

my_name.my_classselects elements with tag namemy_nameand classmy_class. - The most important combinator for scraping is whitespace, ` ` which represents the descendant relationship.

- For example,

p aselects all<a>elements that are descendants of a<p>element in the DOM tree.

- As an example,

- Attribute selectors select elements based on the presence or value of an attribute.

- For example,

[attribute_name*="my_text"]selects elements whose attribute name value contains “my_text”. - This is especially useful when class names are unstable but attributes contain consistent identifiers.

- For example,

- Pseudo-class selectors select elements based on a state, such as hover or position.

- Pseudo-element selectors select and style part of an element.

In CSS selectors, a space and a dot mean very different things.

The space, ` ` as in .parent_class .child_class indicates a descendant relationship in the DOM tree: Select any element with class child_class that appears anywhere inside an element with class parent_class.

- The selected element with

child_classdoes not need to share the parent’s class, it only needs to benested somewhere inside the parent element. - The relationship is structural, not visual.

- This is the most common and useful pattern for layout-oriented scoping in scraping.

The Combinator . with no space means the element must have both classes: Select elements that have both class parent_class and class child_class.

- The element is not required to be nested inside anything.

- The relationship is descriptive, not hierarchical.

- This is common when developers layer multiple classes on the same element, for example:

- one class for layout (.card),

- one class for styling (.highlighted),

- one class for state (.active).

When to use which?

- Use descendant selectors (space) when you want to scope extraction to a region of the page.

- Use combined class selectors (no space) when you want to target a very specific element type, and match elements that intentionally carry multiple class labels.

XML Path Language (XPath) is a query language for navigating and selecting nodes in the DOM tree based on structure, relationships, and attributes, rather than fixed visual or positional paths.

These path expressions look very much like the path expressions you use with traditional computer file systems.

CSS selectors and XPath expressions are both query languages over the DOM, but XPath provides more explicit control over tree navigation and node relationships.

When scraping, CSS selectors are re-purposed to query the DOM, even though they were originally designed for styling, not data extraction.

- In practice, web scraping often uses simple, combinator, and attribute selectors.

- These selectors provide substantial flexibility for identifying elements of interest, but their robustness depends on how stable the underlying HTML structure is.

- Selector choice is a trade-off between precision and robustness and understanding selector types helps you choose wisely.

- Thinking in terms of DOM structure vs. class membership helps choose the right form and avoid subtle over- or under-selection errors when scraping.

CSS selectors (and XPath expressions) are queries about the current DOM, not fixed paths to content.

- They describe which elements to select, not where elements are located visually.

- A selector that works today may fail if the underlying structure changes, even if the page “looks” the same.

- This distinction is central to understanding why scraping strategies must prioritize stability over convenience.

7.6 A Web Scraping Workflow

Modern web scraping is best understood as a workflow than as a single act of “finding the right selectors.”

- Users check results and make decisions at each step to adjust their approach.

The core steps in a web-scraping workflow are language-independent:

- Request: make an HTTP request to a web site for a resource

- Response: receive a server response (HTML, JSON, error, redirect, etc.)

- Parse: interpret the response into a structured in-memory representation

- Extract: select and transform the relevant content into data values

- Normalize and align: reshape extracted values into a consistent structure

- Clean and validate: prepare the data for analysis and confirm correctness

Most “scraping problems” are not bugs in the code, but failures, or unexpected behavior, at a specific stage in this workflow.

At a high level, most scraping workflows interact with three conceptual layers of a website:

- The HTTP layer, which governs how requests are made and responses are returned.

- The HTML layer, which defines the document structure and content returned by the server.

- The rendered page, which is what a human sees after the browser executes JavaScript, applies styles, and inserts dynamic content.

Web scraping code sends requests through the HTTP layer to interact primarily with the HTML layer, not the rendered page.

- Many scraping failures occur when assume these layers behave with our automated scraping code like they do with a browser driven by an interactive human user.

7.6.1 Strategies for Using the Workflow

Effective Web Scraping strategies follow the steps in the workflow.

- This is more efficient as errors in earlier steps can make debugging in later steps ineffective.

Best Practice web scraping strategies are generally language independent.

- We will use {rvest} in the to address the different strategy examples but the same strategies apply if using Python.

We will focus on two different web sites to start.

- The United Nations web page titled Deliver Humanitarian Aid

- This is a site focused on maximizing access to its content.

- It is a static page that is fairly stable.

- The IMDb page for the Top 100 Greatest Movies of All Time (The Ultimate List)

- IMDb is a more modern site that balances performance (dynamic loading) with protecting its content.

- The page may may block or alter responses when it detects automated clients, add content after initial loading, and deliver partial content.

- The website structure is frequently changed and updated.

7.7 Check for Valid Requests

All web-scraping workflows begin with an HTTP request, even when this is abstracted away by a high-level function such as rvest::read_html().

At this stage, the goal is simple: Receive the intended resource from the server in a form that can be parsed.

Key considerations at the request stage include:

- Target the correct URL

- Confirm you are requesting the canonical page (the real URL), not a redirect, preview, or mobile variant.

- Developer Tools and page metadata can help identify the canonical URL.

- Present a reasonable client identity

- Many modern sites distinguish between browsers and automated clients.

- Setting a browser-like User-Agent can prevent soft blocking or alternate responses.

- Minimize unnecessary requests

- Repeated requests during development can trigger rate limits or gating.

- Once access is confirmed and the response looks correct, save the response locally and work from that copy.

At this stage, you are not selecting elements or extracting data yet. Instead, you are answering: Did the request succeed?

- Common failure modes include status codes 403 (blocked), 429 (rate limited), other non-200 responses, redirects (to other pages), and “interstitial pages” (pages about permissions instead of site content).

- Interstitial pages are pages focused on controlling or mediating access to the page (sometimes called “intermediary pages”).

- They may enforce consent requirements, verify the client, or deter automated access.

- From a scraper’s perspective, these pages often return valid HTML but do not contain the expected content.

- Web sites may use Cookies to control consent state, personalization, or to manage access (access gating or token gating).

- Most scraping packages manage cookies automatically within a session.

- However, cookies can still influence what HTML you receive, even when the HTTP status code is 200.

Both general access gating and more specific token gating are mechanisms for controlling who can access content, but they differ in how access is verified and where the decision is enforced.

Access gating controls access based on request context and client behavior.

- Enforcement is typically server-side and stateful.

- Decisions are based on: Cookies (consent, session state), Headers (User-Agent, language, region), IP reputation or request patterns, and JavaScript challenges or interstitial pages

Access gating is common on public websites that want to be: discoverable by search engines, usable by humans and resistant to automated scraping or misuse

From a scraping perspective: access gating often returns Status 200 with altered HTML so failures are subtle and can masquerade as successful requests.

Token gating controls access based on possession of a credential or token.

- Enforcement is typically explicit and stateless.

- Access requires presenting a valid token with the request.

- Tokens may include: API keys, OAuth tokens, Signed cookies and even Cryptographic or blockchain-based tokens

Token gating is common for: APIs, paid content, authenticated services, or rate-limited or licensed data access

From a scraping perspective: Token gating usually results in clear failures (401/403) as access is binary: you either have the token or you do not.

7.7.1 Check Your Credentials

Credential errors (in the classic sense) tend to return explicit HTTP status codes such as 401 Unauthorized, 403 Forbidden, or sometimes 407 Proxy Authentication Required.

- These are often accompanied by clear messages like “Authentication required”, “Forbidden”, etc.

- You wont see these in an {revest} response but can use a lower-level HTTP tool (e.g., {httr2} to submit a request and you would see these codes directly.

If you have a credential issue then you verify whether credentials are required at all

- Check the site’s documentation or network behavior to determine whether access is gated by tokens (API keys, login sessions) or by softer mechanisms (cookies, headers, behavior).

- A clear 401/403 error typically indicates missing or invalid credentials rather than a scraping or selector issue.

Confirm that credentials are being sent correctly

- Ensure that API keys, tokens, or session cookies are actually included in the request (headers, query parameters, or cookies as required).

- A common failure mode is having valid credentials available locally but not attached to the outgoing request.

Validate credential scope and freshness

- Check whether tokens have expired, are rate-limited, or are restricted to specific endpoints, IP ranges, or usage patterns.

- Credentials that work for one request or endpoint may silently fail for others if their scope is too narrow.

When access or token gating is involved, failures at the request stage must be resolved before any parsing or extraction strategy can succeed.

7.8 Check Your Access at the HTTP layer: Is the response with a valid DOM (Structural Validation)?

Even when a request succeeds (suggesting an HTTP 200 response), the returned page may still be unsuitable for scraping.

Modern web sites increasingly attempt to detect automated scraping tools using code, e.g., bots or services such as from Browse.ai or scraping bot, instead of humans interacting using real browsers.

They may

- return partial HTML scaffolding,

- return consent or verification pages,

- or serve alternate content to suspected bots.

This is often referred to as soft access gating:

- You are allowed to connect, but,

- You are not given the same HTML a human browser would receive.

A critical next step is therefore to confirm: The HTTP layer returned HTML that parses into a valid DOM representing the intended page.

7.8.1 Set a Browser-like User-Agent

If a response object does not parse into a usable DOM (for example, a missing or invalid root element), a simple and often effective first step is to set a browser-like User-Agent.

This does not guarantee success but it frequently resolves soft access gating.

User-Agent strings identify the browser and operating system making the request.

- Many sites use them as a first-pass check to distinguish real browsers from automated clients.

See the Updated List of User Agents for Scraping & How to Use Them and select one for your code.

- They are matched to browser and operating system versions.

- Using a realistic, up-to-date value is preferable to inventing one.

Here are recent examples.

#Chrome 144 and Mac OS 15.17

user_agent_str <- "Mozilla/5.0 (Macintosh; Intel Mac OS X 15_7_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/144.0.0.0 Safari/537.36"

# Chrome 144 and Windows 10/11

user_agent_str <- "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/144.0.0.0 Safari/537.36" Most scraping tools provide a mechanism for attaching a User-Agent to outgoing requests.

- Consult the package documentation for the recommended approach.

Make it a routine practice to consider setting a User-Agent early in your scraping workflow—especially when:

- the parsed document has no usable HTML root,

- expected elements are missing, or,

- code and selectors that previously worked suddenly return zero nodes.

Before modifying selectors or extraction logic, always verify that you successfully accessed the HTML layer you intended to scrape.

7.8.2 Structural Validation Examples: UN and IMDb

Listing 7.1 has some quick checks of the response object for the UN page to see if it returns an HTML response with a valid DOM structure we can try to parse.

This example introduces rvest::read_html(), which is a core function for web scraping in R.

read_html()performs the HTTP request to the specified URL.- It then parses the returned HTML into a document object using the {xml2} package.

- The result is an in-memory representation of the page’s DOM (if it was returned) that we can inspect and query.

- We also use a few {xml2} helper functions to examine structural properties of the returned document.

# 1) Define the target

url <- "https://www.un.org/en/our-work/deliver-humanitarian-aid"

# 2) Attempt to read the page

doc <- read_html(url)

# --- Did read_html() return something parseable? ---

# 3) Check the document object

str_glue("Class Object: ", str_c(class(doc), collapse = "-"))

# 4) Check for a root node

root <- xml2::xml_root(doc)

str_glue("Class Root: ", str_c(class(root), collapse = "-"))

# 5) Check the root node type and name

str_glue("Type Root: ",xml2::xml_type(root))

str_glue("Name Root: ",xml2::xml_name(root))- There is a response so there does not appear to be a credential issue.

- The

docis an xml_document with a valid root node. - The root node is an HTML element (Name Root: html).

- The request successfully reached the HTML layer, returned a complete static page, and produced a usable DOM with a valid structure so we can now try to parse to see if the content is the correct page.

Listing 7.2 uses the same quick checks as Listing 7.1 but with the URL for the IMDb page to see if it returns an HTML response with a valid DOM structure we can try to parse.

# 1) Define the target

url <- "https://www.imdb.com/list/ls055592025/"

# 2) Attempt to read the page

doc <- read_html(url)

# --- Did read_html() return something parseable? ---

# 3) Check the document object

str_glue("Class Object: ", str_c(class(doc), collapse = "-"))

# 4) Check for a root node

root <- xml2::xml_root(doc)

str_glue("Class Root: ", str_c(class(root), collapse = "-"))

# 5) Check the root node type and name

str_glue("Type Root: ",xml2::xml_type(root))

str_glue("Name Root: ",xml2::xml_name(root))- There is a response so there does not appear to be a credential issue.

docis returned as an xml_document, but- the root node is xml_missing.

- The root node has no type and no name (

NA).

Although read_html() returned an object, the response did not parse into a valid HTML document tree.

- This indicates the request did not reach the intended HTML layer to return a valid DOM.

7.8.3 Creating a Session Example: IMDb

If the response is being blocked from the HTML layer, a good next step is to add a non-default user agent to your request to better identify your request.

Do this in R by creating an {rvest} session and setting a browser-like User-Agent for the session.

- A session behaves more like a browser client.

- It lets you set a request identity (User-Agent) explicitly.

- It is the natural starting point for scraping multiple pages later, because the session can carry cookies and other states forward.

Let’s try again by creating a session and setting the user agent for the session.

- We create a session object and attach a browser-like User-Agent to it.

- The session represents a browser-like client rather than a one-off request.

- The same session object can be reused for multiple requests later, since it preserves session state (such as headers and cookies).

This approach keeps request configuration explicit while avoiding repeated setup for each page we scrape.

#Chrome 144 and Mac OS 15.17

user_agent_str <- "Mozilla/5.0 (Macintosh; Intel Mac OS X 15_7_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/144.0.0.0 Safari/537.36"

# Chrome 144 and Windows 10/11

# user_agent_str <- "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/144.0.0.0 Safari/537.36"

# 1) Define the target

url <- "https://www.imdb.com/list/ls055592025/"

# 2) Create a session with a browser-like User-Agent, then read the page from the session

sess <- session(url, httr::user_agent(user_agent_str))

doc <- read_html(sess)

# --- Did read_html() return something parseable? ---

# 3) Check the document object

str_glue("Class Object: ", str_c(class(doc), collapse = "-"))

# 4) Check for a root node

root <- xml2::xml_root(doc)

str_glue("Class Root: ", str_c(class(root), collapse = "-"))

# 5) Check the root node type and name

str_glue("Type Root: ",xml2::xml_type(root))

str_glue("Name Root: ",xml2::xml_name(root))- There is a response so there does not appear to be a credential issue.

- The

docis an xml_document with a valid root node. - The root node is an HTML element (Name Root: html).

- The request successfully reached the HTML layer, and returned a usable DOM with a valid structure so we can now try to parse to see if the content is the correct page.

7.9 Check the Initial Response Object - Is it the right page (Semantically Valid)?

Now that you have a valid object, the next step is to confirm you received the page you think you requested.

Even when a request “succeeds” the response may be a redirected page, or a partial/dynamic scaffold missing all the content you want.

- Inspect the page title.

- Check for the presence of a key phrase you expect.

- Count how many target nodes are present.

- Look for telltale phrases indicating consent or bot pages.

- Treat HTML that is “too small” or missing key sections as a warning sign of blocking or interstitial gating.

- Treat HTML that contains scaffolding but not the data as a warning sign of dynamic loading.

7.9.1 Minimal R Template for Sanity Checks

Listing 7.4 provides some examples of quick checks you can make on a response object to see if it has content for the page of interest.

Let’s start with the UN web page.

- We expect the checks to pass with this page.

We need to set three values for this particular page as part of running the checks.

- The URL

- A “must-have” phrase we expect to see based on our selector

- At least one CSS selector of interest. (We’ll cover how we identified this later on)

The code in Listing 7.4 moves beyond structural validation and performs lightweight semantic validation of the parsed HTML document.

These steps use core {rvest} helper functions that operate on the DOM representation returned by read_html().

html_element()selects the first matching element in the document for a given CSS selector.html_elements()selects all matching elements and returns them as a node set.html_text2()extracts the visible text content from an element or document, collapsing whitespace and removing markup.

Together, these functions allow us to verify the page content matches our expectations before proceeding with more detailed extraction.

url <- "https://www.un.org/en/our-work/deliver-humanitarian-aid"

must_have <- "Deliver humanitarian aid"

css_target <- ".col-sm-12.grey-well"

doc <- read_html(url)

# 1) Page title

page_title <- doc |>

html_element("title") |>

html_text2()

str_glue("Page Title: ", page_title)

# 2) Must-have phrase (choose something you expect on the real page)

has_phrase <- doc |>

html_text2() |>

str_detect(fixed(must_have, ignore_case = TRUE))

str_glue("Has Phrase: ", has_phrase)

# 3) Count target nodes (replace with your selector)

n_target <- doc |>

html_elements(css_target) |>

length()

str_glue("Target nodes: ", n_target)

# 4) Interstitial / gating phrase scan

gate_phrases <- c(

"cookie", "consent", "privacy", "gdpr",

"verify you are human", "captcha",

"access denied", "unusual traffic", "just a moment"

)

page_text <- doc |>

html_text2() |>

str_to_lower()

gate_hits <- gate_phrases[str_detect(page_text, str_to_lower(gate_phrases))]

str_glue("Gate Phrase Matches: ", str_c(gate_hits, collapse = "-"))

# 5) Rough size heuristic

n_chars <- str_length(as.character(doc))

str_glue("Page Characters: ", n_chars)

doc_u <- docThe results are consistent with receiving the intended UN page.

- The page title matches the expected content, indicating that the request returned the correct page rather than an interstitial or redirect.

- The must-have phrase is present (TRUE), confirming that the core content of interest appears in the response.

- One target node was found using the selected CSS selector, which is consistent with this page’s structure and sufficient for continued extraction.

- The presence of the word “privacy” in the gating phrase scan is not unexpected for a UN page and does not, by itself, indicate an access problem. Importantly, no stronger indicators of access gating (such as CAPTCHA or verification language) are present.

- The size of the HTML response is relatively large, which is consistent with a full content page rather than a minimal interstitial or error response.

Taken together, these checks suggest that access at the HTTP layer succeeded and that the response object contains the HTML needed for subsequent parsing and extraction.

Let’s try the the IMDb Page using Listing 7.5.

- All we need to do is update the three items of information and set the session object with user agent

url <- "https://www.imdb.com/list/ls055592025/"

must_have <- "Godfather"

css_target <- ".ipc-title__text"

sess <- session(url, httr::user_agent(user_agent_str))

doc <- read_html(sess)

# 1) Page title

page_title <- doc |>

html_element("title") |>

html_text2()

str_glue("Page Title: ", page_title)

# 2) Must-have phrase (choose something you expect on the real page)

has_phrase <- doc |>

html_text2() |>

str_detect(fixed(must_have, ignore_case = TRUE))

str_glue("Has Phrase: ", has_phrase)

# 3) Count target nodes (replace with your selector)

n_target <- doc |>

html_elements(css_target) |>

length()

str_glue("Target nodes: ", n_target)

# 4) Interstitial / gating phrase scan

gate_phrases <- c(

"cookie", "consent", "privacy", "gdpr",

"verify you are human", "captcha",

"access denied", "unusual traffic", "just a moment"

)

page_text <- doc |>

html_text2() |>

str_to_lower()

gate_hits <- gate_phrases[str_detect(page_text, str_to_lower(gate_phrases))]

str_glue("Gate Phrase Matches: ", str_c(gate_hits, collapse = "-"))

# 5) Rough size heuristic

n_chars <- str_length(as.character(doc))

str_glue("Page Characters: ", n_chars)

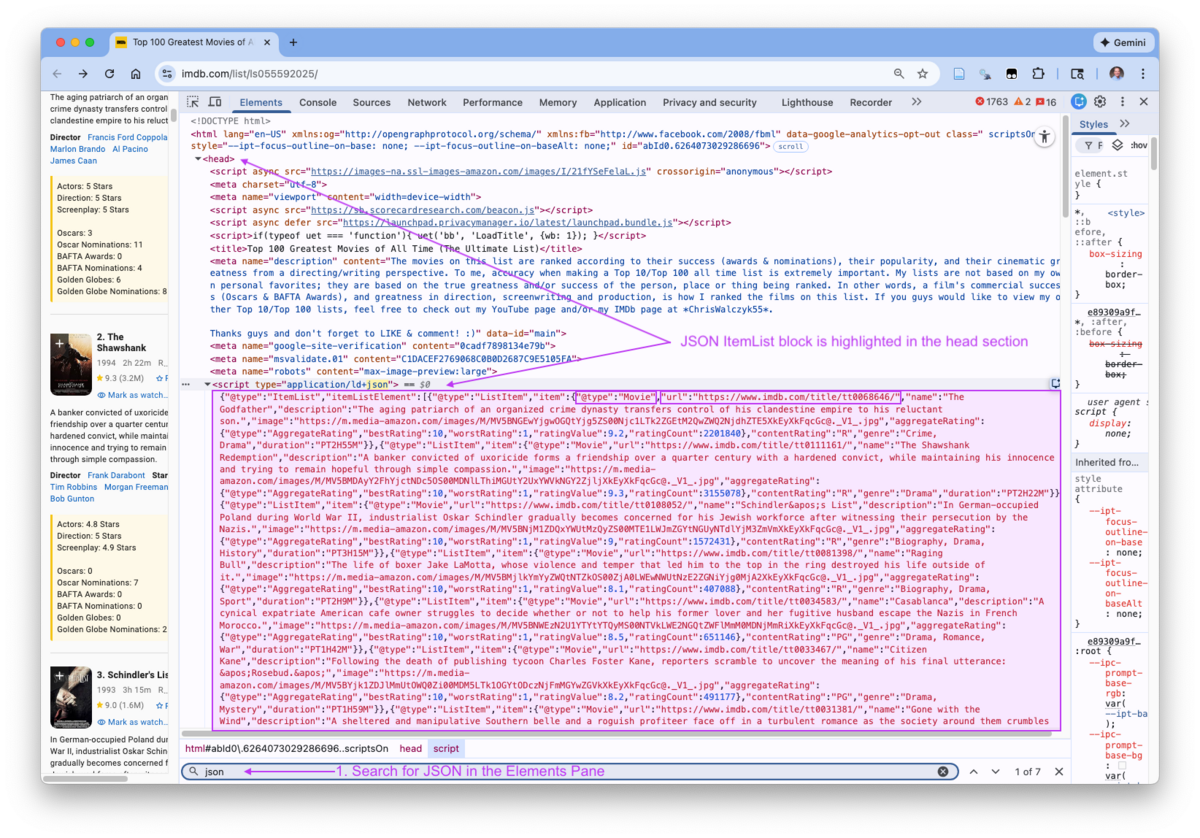

doc_i <- docThe results are largely consistent with receiving the intended IMDb page, but they also reveal important structural considerations.

- The page title matches the expected content, indicating that the request returned the correct page rather than an interstitial or redirect.

- The must-have phrase is present (TRUE), confirming that key textual content associated with the page appears in the response.

- Only 27 target nodes were found using the selected CSS selector, which is fewer than the expected 100 items.

- This suggests some content may be dynamically loaded, partially rendered, or structured differently than anticipated.

- The presence of gating-related terms such as cookie, consent, privacy, and GDPR is common on modern commercial sites like IMDb and does not, by itself, indicate access denial.

- Importantly, there are no stronger indicators of blocking, such as CAPTCHA prompts or explicit verification language.

- The size of the HTML response is very large, which is consistent with a full content page rather than a minimal interstitial or error response.

Taken together, these checks indicate that access at the HTTP and HTML layers succeeded, but that the visible list content is not fully represented in the static HTML.

This strongly suggests the use of dynamic loading or client-side rendering for portions of the page, which affects how extraction strategies must be designed.

7.9.2 Work on a Saved Copy of the Response Object

Once you are satisfied the response parses into a valid HTML document and basic semantic checks look correct, a best practice is to save a copy of the page to disk so you do not have to repeatedly re-request it from the website.

- Save the raw HTML response to disk (locally or cloud).

- Use the saved copy while developing and debugging extraction code.

- Re-fetch from the website only when you are confident the rest of the workflow is correct, or when you intentionally want refreshed data.

In R, you can write the parsed document back to an HTML file using {xml2}. The code below ensures a ./data directory exists and then saves the page.

library(xml2)

# Create ./data if it does not exist

dir.create("./data", showWarnings = FALSE)

# Choose a descriptive filename (include date if the page may change)

out_file <- "./data/un_deliver_humanitarian_aid.html"

out_file_i <- str_glue("./data/imdb_top_100_{Sys.Date()}.html")

# Save the parsed HTML document

write_html(doc_u, out_file)

write_html(doc_i, out_file_i)

out_file

out_file_i7.10 Find CSS Selectors for Elements of Interest

Before writing any scraping code, you need to find CSS selectors that identify which elements in the HTML document correspond to the data you want.

- This requires understanding how elements are labeled and organized in the DOM and how to use that structure to identify the elements of interest using the CSS selectors.

There are two complementary approaches:

- SelectorGadget, for fast, interactive discovery of candidate selectors on mostly static pages.

- Browser Developer Tools, for precise inspection of the DOM when pages are more complex or dynamic.

Neither tool is required for programmatic scraping, but both can be useful for understanding the structure of a page before writing code.

7.10.1 Using SelectorGadget with Chrome

SelectorGadget is an open-source extension for the Google Chrome browser.

SelectorGadget is especially useful during exploratory work.

- It helps you quickly identify candidate selectors.

- It does not guarantee a selector is optimal, stable, or complete.

To install SelectorGadget, go to Chrome Web Store.

Once installed, manage your chrome extensions to enable it and “pin” it so to appears as an icon, ![]() , on the right end of the search bar.

, on the right end of the search bar.

- Click on the icon to turn SelectorGadget on and off.

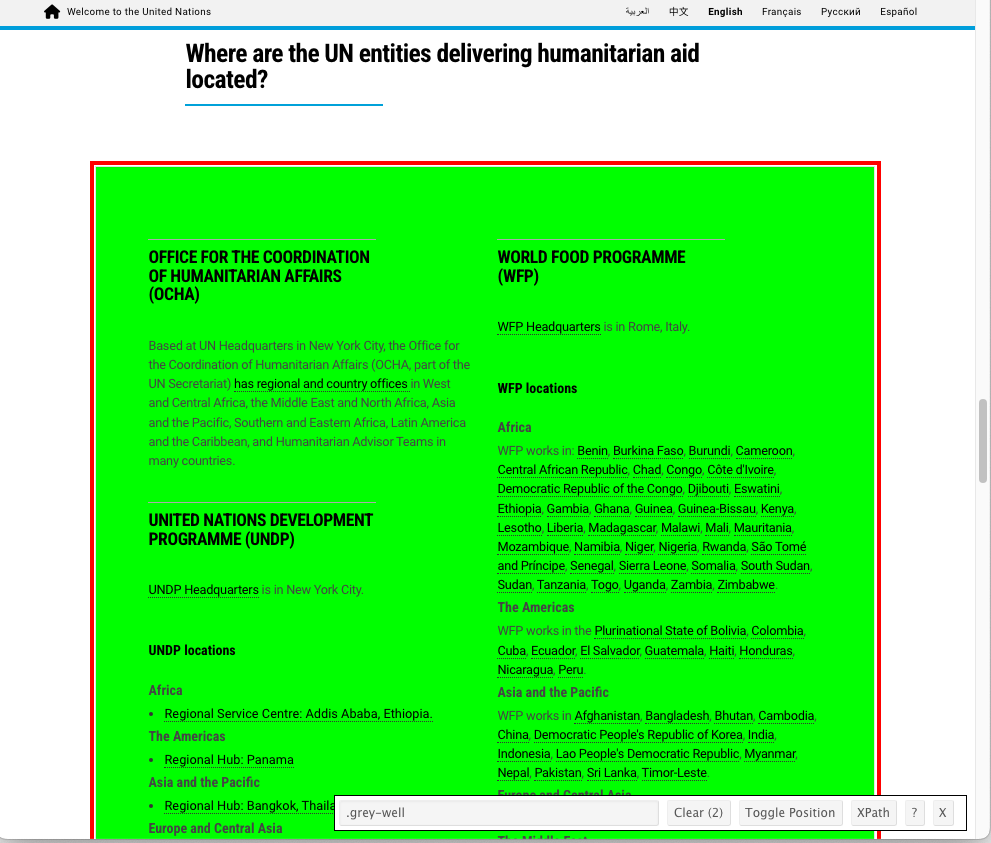

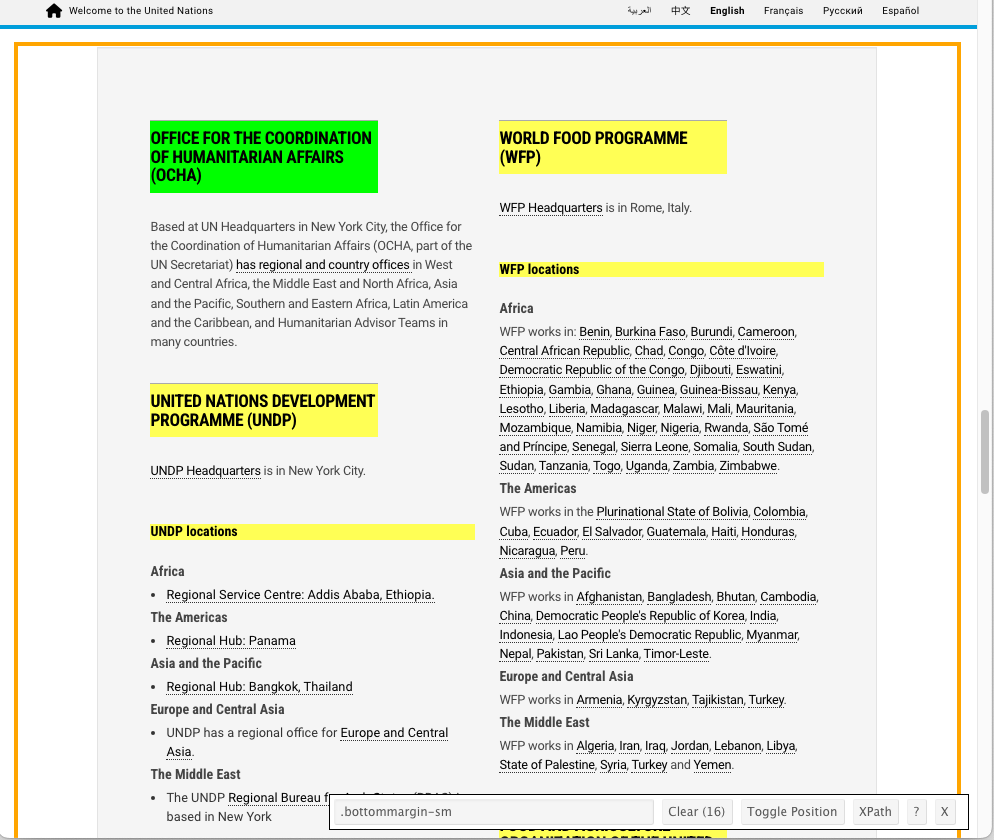

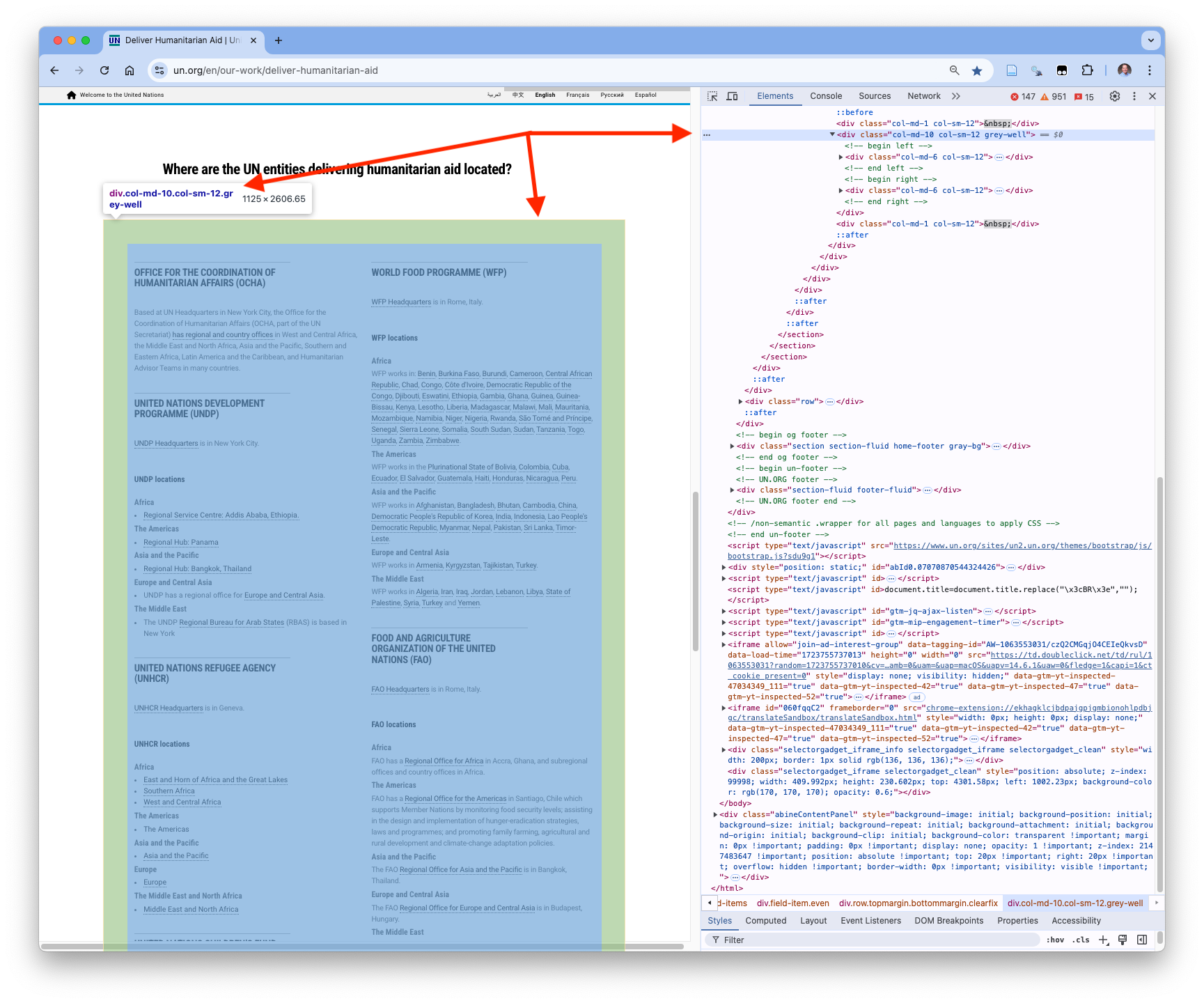

7.10.1.1 United Nations Web Page on Deliver Humanitarian Aid.

Go to Deliver Humanitarian Aid in a Chrome browser.

- Scroll down to the section called Where are the UN entities delivering humanitarian aid located?

Turn on SelectorGadget and click near the edge of the grey box containing the agencies and locations.

- The background should turn green (indicating what we have selected) as in Figure 7.2.

- Other elements matched by the same selector appear in yellow.

SelectorGadget displays information at the bottom of the page:

- It shows the current selector

.grey-wellassociated with the box we clicked on. - It shows the number of elements matched by the selector.

- Toggle Position moves the information bar to the top of the page (or back to the bottom)

- The “XPath” button will pop up the XPath for the same selection

- The

.grey-wellselector XPath is//*[contains(concat( " ", @class, " " ), concat( " ", "grey-well", " " ))]

- The

- The “?” is the help button.

Scroll through the entire page to visually inspect all the selected elements.

SelectorGadget has no knowledge of which element you intend to scrape.

- It doesn’t always get all of what you want, or it sometimes gets too much.

- It can select hidden elements that don’t show up until you actually start working with the data.

- Sometimes not every child element is present for every parent element on the page as well.

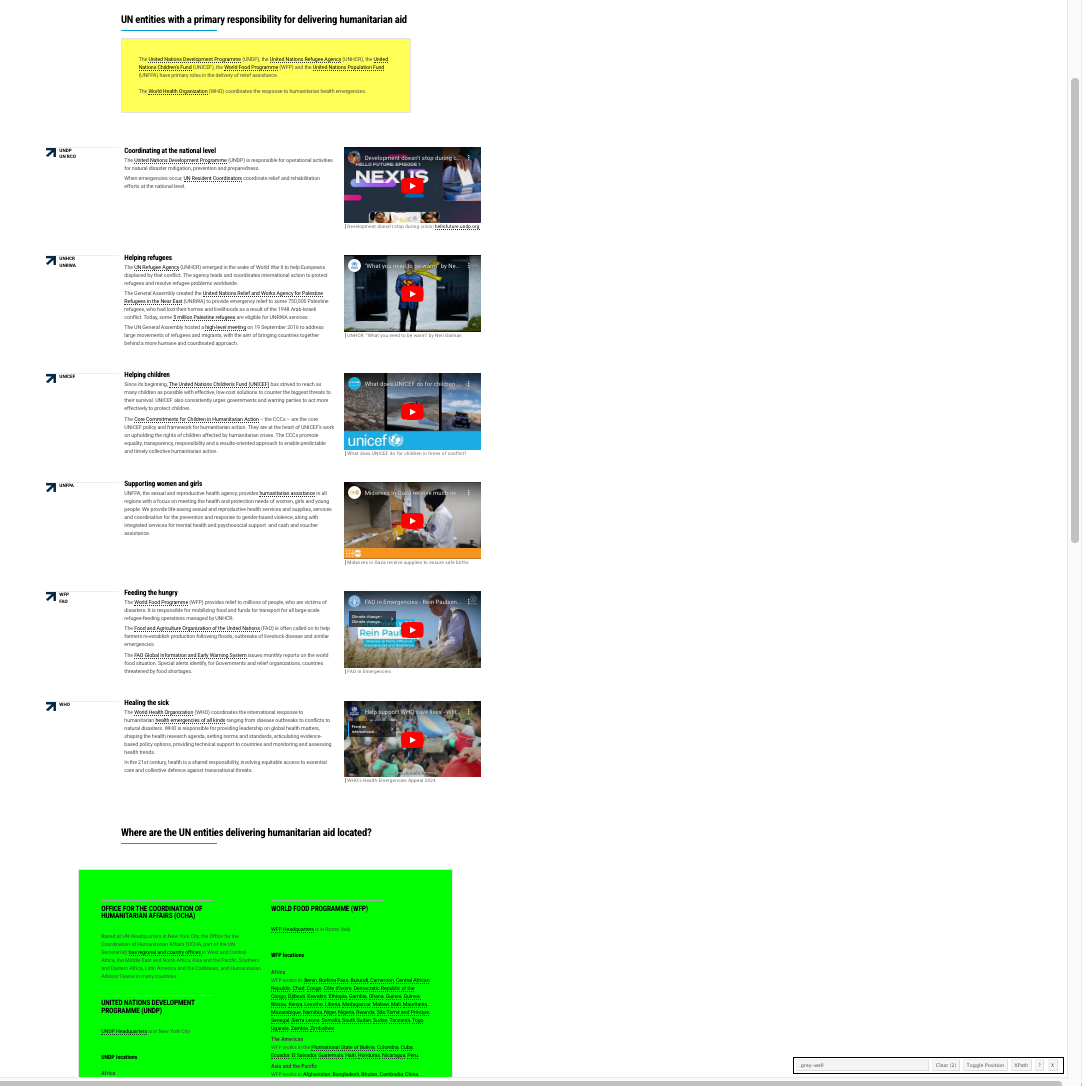

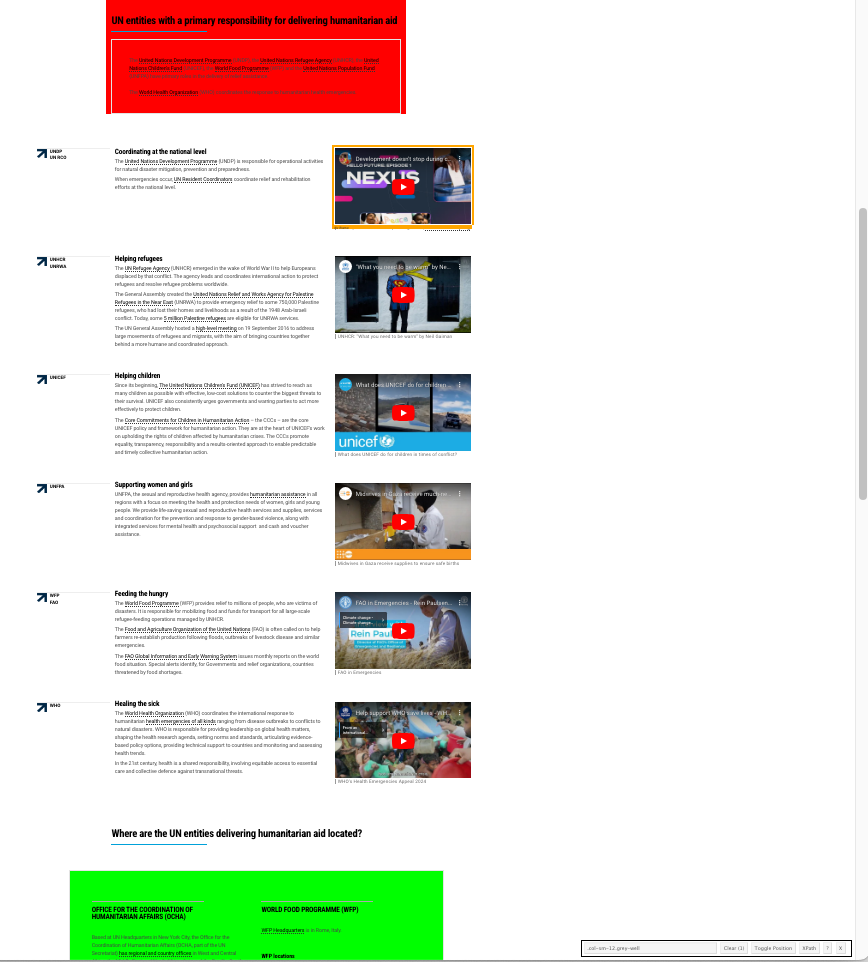

Figure 7.3 shows two elements were matched by the CSS selector and we expected only one.

- If we scroll up we can see another grey-box element highlighted in yellow which means it is currently matched as well (Figure 7.3).

grey-boxes.

- To de-select the yellow grey-box, click on the yellow item we don’t want. It turns red to indicate it is no longer selected.

- However, there is a lot more yellow showing on the page, all elements we do not want.

- Continue to click on the yellow areas until they go away and just the grey box is left in Green and there is no yellow.

- Your screen should look like Figure 7.4 with Selector Gadget showing one item is selected.

- The Selector Gadget selector is now

.col-sm-12.grey-well. - This is more specific CSS selector that matches elements with both class

col-sm-12and classgrey-well. In CSS, writing `.a.b` selects elements that have **both classes a and b, not elements nested inside one another*.- Combining multiple class selectors in this way narrows the match and reduces the chance of selecting unintended elements.

The good news is we now have a CSS selector that identifies the one element of interest.

- However, scraping the whole box at once will give us a lot of text with no clear way to organize it. Let’s try to select individual elements inside the box.

7.10.1.2 Selecting Nested Elements

Often, scraping the entire container (here, the grey box) is not ideal because it produces unstructured text.

Instead, we want to identify repeated sub-elements within the container.

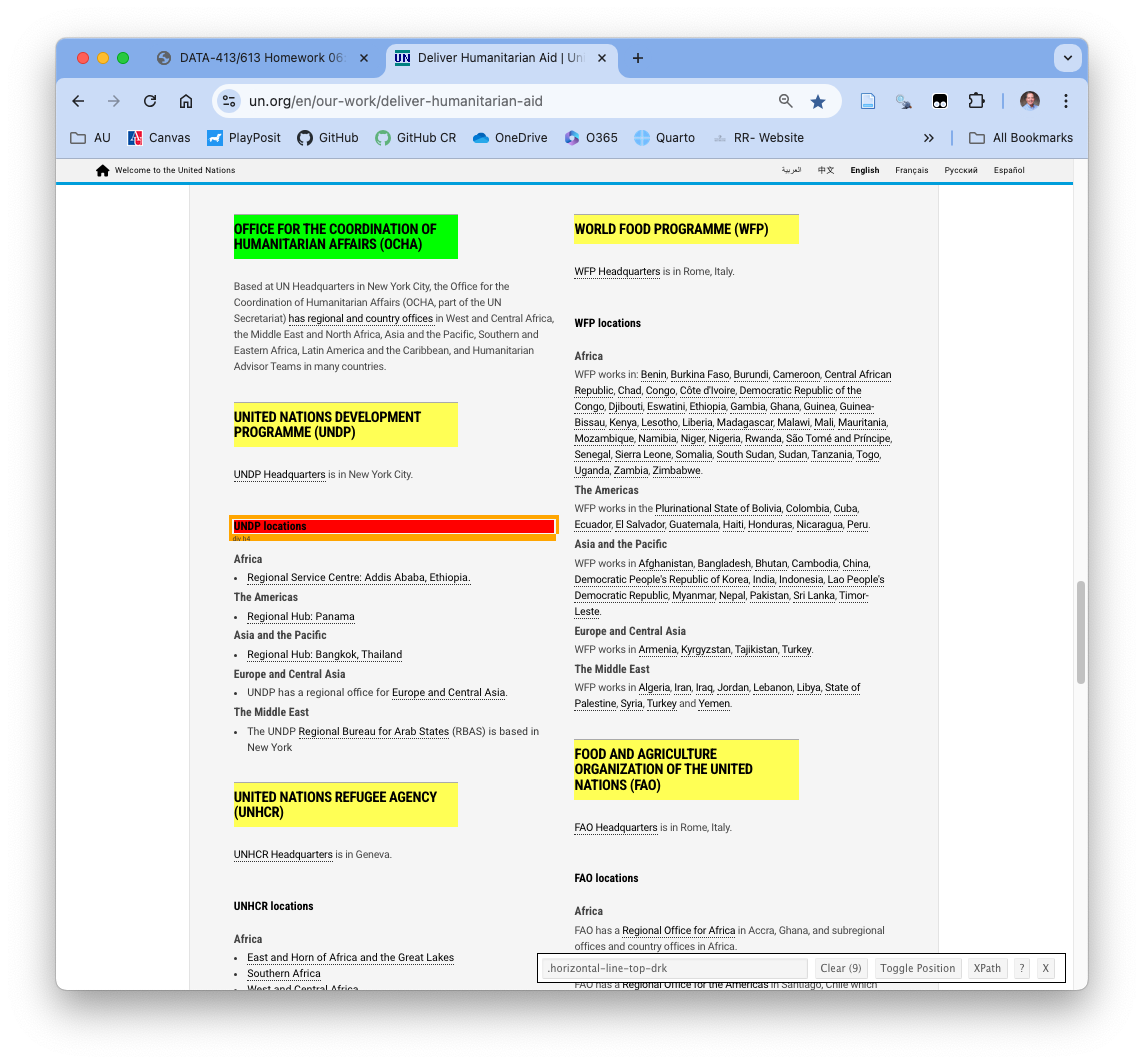

To start over, turn Selector Gadget off and then on (or click on the edge of the grey box to deselect it).

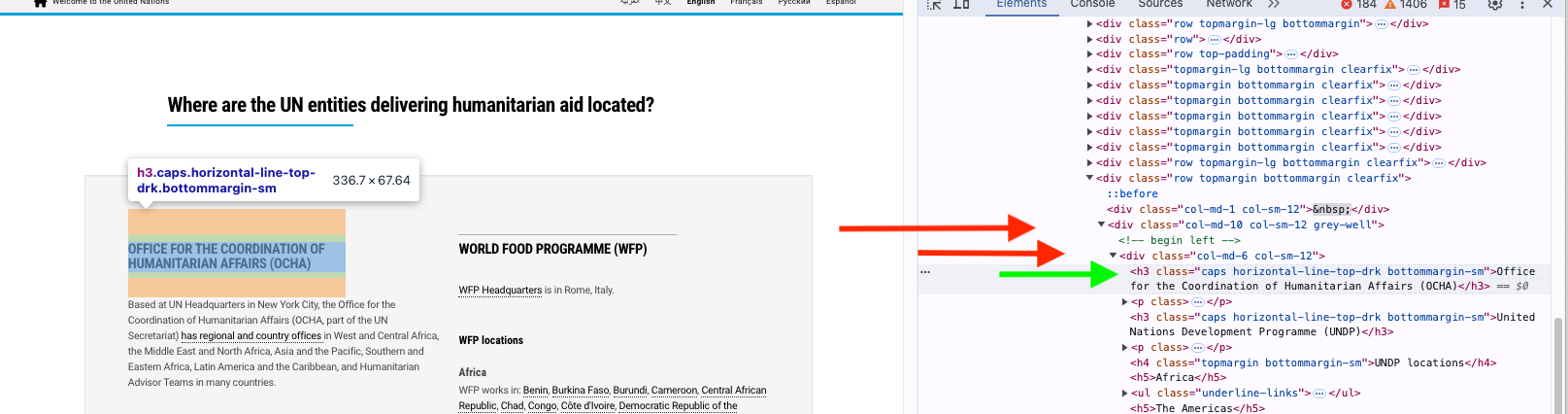

Now click on the edge of “OFFICE FOR THE COORDINATION OF HUMANITARIAN AFFAIRS (OCHA)” inside the grey box.

It should turn Green and other offices should turn yellow. The yellow indicates all the other elements with the same selectors as OCHA. namely,

.bottommargin-sm, as in Figure 7.5.Note there are 16 items that were selected.

- However, in addition to selecting the office name element, it selected headings for office locations, e.g. “UNDP Locations”, which we do not want to include with the office names if we can avoid it.

- If we click one of the yellow items we don’t want, it turns red. This indicates we don’t want to select it as in Figure 7.6.

- We now see the selector as

.horizontal-line-top-drkand there are 9 of them which we can verify are correct by scrolling the page up and down.

- One click was all we needed here. On other pages you may need to click several times until only the elements of interest remain in Green or Yellow.

This illustrates a common pattern:

- Start with a broad selection.

- Iteratively remove “false positives.”

- Stop when the selection matches the “elements” you want.

- What are the CSS selectors for the headquarters locations?

- How many elements are selected?

- It takes a few clicks but you can get to just the HQs locations with

.horizontal-line-top-drk+ p. - There should be 9 items.

Selector Gadget can provide a useful tool for understanding the selectors associated with elements of interest on a static web page.

7.10.1.3 When SelectorGadget Is Not Enough

SelectorGadget works best on static pages with visible HTML content.

On more dynamic or heavily scripted pages:

- Clicking may activate links instead of selecting elements.

- SelectorGadget may not identify a stable selector.

- The selector may match elements that are not present in the static HTML.

In these cases, you should switch to the browser’s Developer Tools.

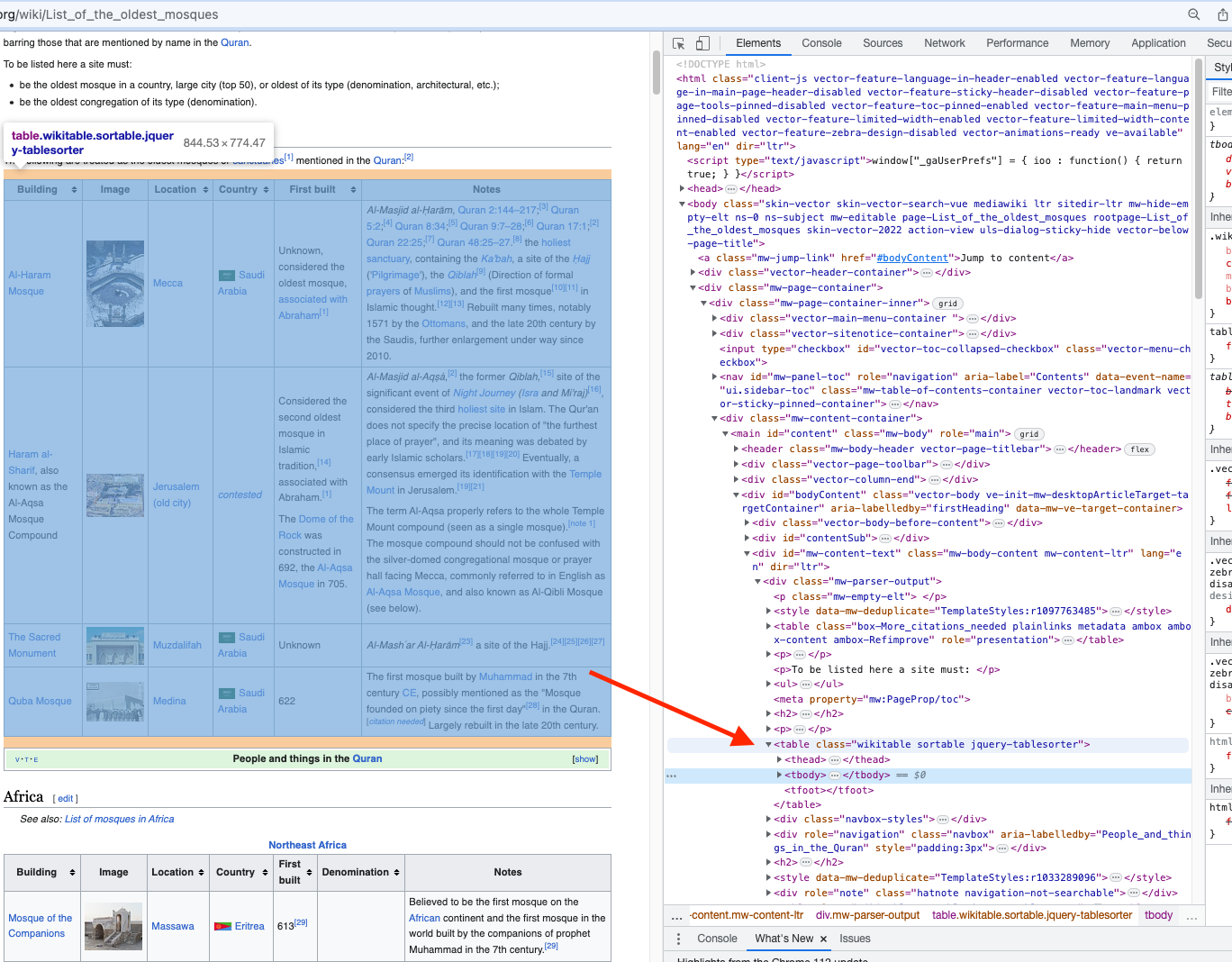

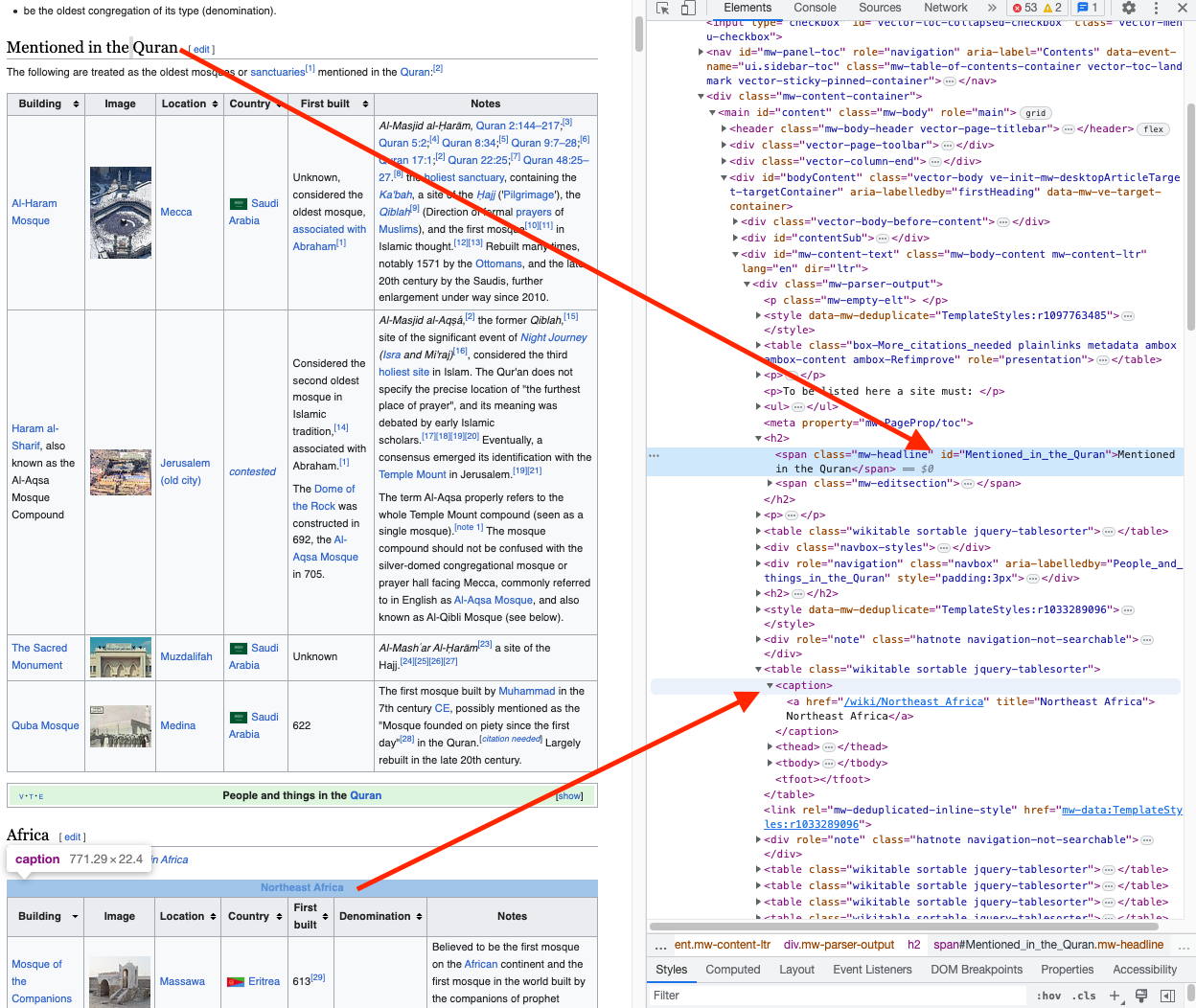

7.10.2 Using Chrome Developer Tools (DOM Inspection)

Modern browser have Developer Tools to help web page developers build, inspect, and test their web pages.

These Browser Developer Tools allow one to inspect the actual DOM tree and examine element names, classes, ids, and attributes directly.

To open the Chrome developer tools

- Use the hamburger menu on the top right

⋮ \> More tools \> Developer tools, or, - Right-click on any element in the web page and select

Inspect.

to get a result as in Figure 7.8.

We are most interested in the Elements pane as it shows:

- The DOM tree structure.

- HTML element names (div, h3, p, etc.).

- Attributes such as class and id.

As you move your cursor up and down the DOM tree in the Elements pane, the corresponding region of the web page is highlighted and pop-ups may appear with the CSS for that element.

- If it is unclear where you are, right-click on any element in the web page and select

Inspectand the Elements pane will scroll to highlight the inspected element.

This makes it easier to:

- See how elements are nested.

- Identify stable parent containers.

- Distinguish presentation-related classes from structural ones.

Chrome Developer Tools provide several panes that are useful for understanding how a web page is built and how data is delivered.

Elements: Shows the DOM tree for the page. This is the most important pane for scraping.

- Inspect element names, classes, ids, and attributes.

- Understand parent–child relationships and nesting.

- Identify stable containers and repeated item structures.

Styles: Displays the CSS rules applied to the selected element.

- Helps distinguish structural classes from purely visual ones.

- Useful for recognizing auto-generated or presentation-only classes that may be brittle for scraping.

Console: Allows you to run JavaScript and view messages from the page that may be useful for checking whether content is generated dynamically.

Network: Shows all network requests made by the page which may be useful for identifying JSON payloads, pagination requests, and dynamic data sources when working with live or JavaScript-rendered pages.

Application: Displays cookies, local storage, and session storage which may be useful for understanding consent gates and session-based access.

For static pages, the Elements pane is often sufficient.

For dynamic pages and live sessions, the Network and Console panes become essential tools for diagnosing how and when data is loaded.

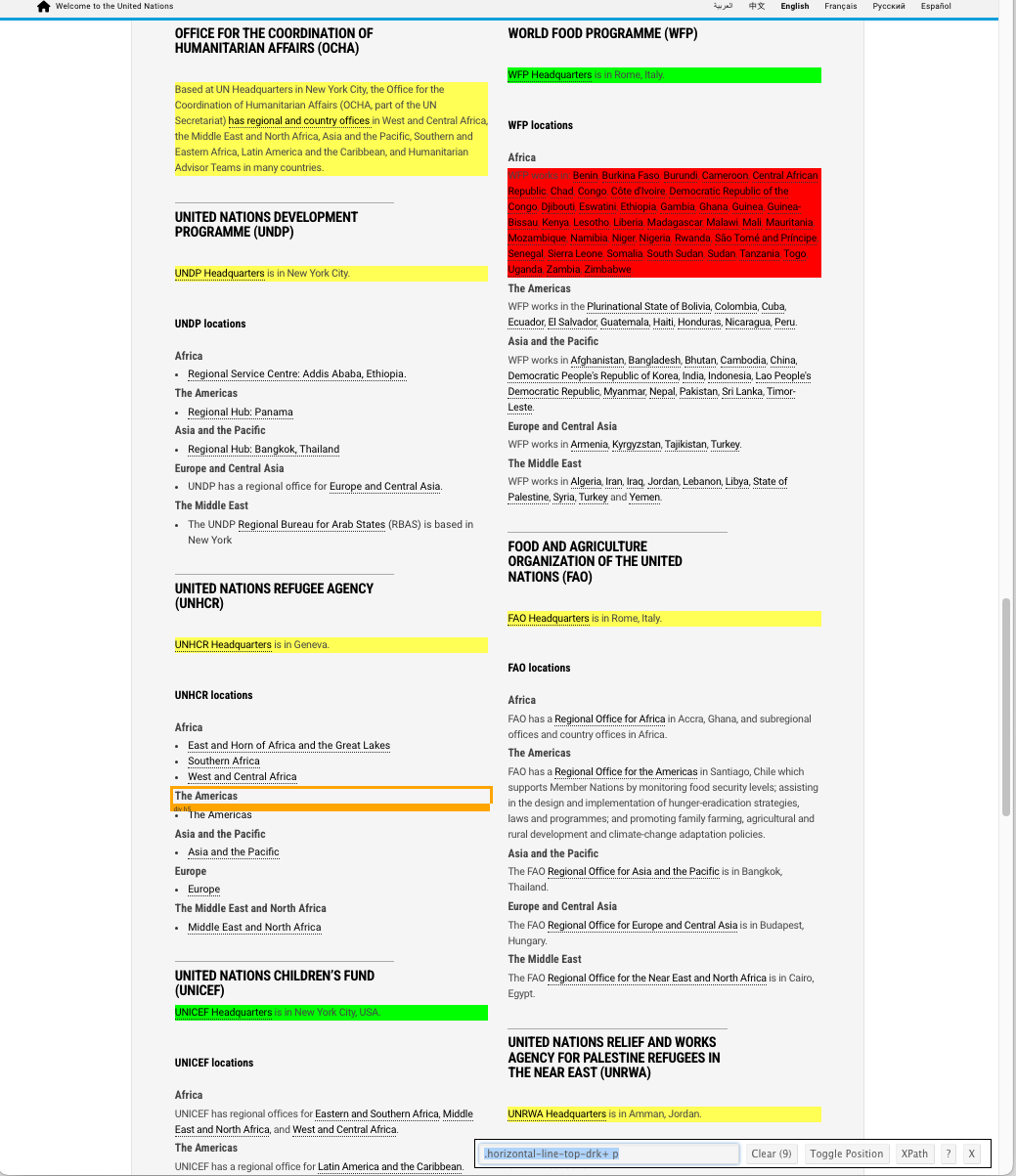

As an example, right click on the grey background from before, choose Inspect, and you should see something like in Figure 7.9.

Look for the

name,id,classand other attributes. They may be higher in the tree structure.If the name is the same as the HTML element, e.g.,

divyou will not see a specificname=attribute.Use the arrows to the left of each element to collapse and expand the element to see any nested elements (further down in its branch of the DOM tree).

Right click on a specific element in the Elements pane and you can add or edit attributes of the element (not for us).

You can also:

Copy element: useful for seeing the raw HTML.

Copy selector: often too specific for scraping multiple items.

Copy XPath / Copy full XPath: precise but often brittle paths to a single element.

- Use the developer tools to inspect the Title for the OCHA.

- What is the class for it? What is the CSS selector?

- What is the XPath for it?

- Right click on the OCHA title in the web page and Inspect the element or go to the Elements pane and click on the down arrows and scroll till you can see the element (and it is highlighted on the web page) as in Figure 7.10

- You can see the class in the Elements pane, or.

- Right Click and

Copy elementand paste into your file to get<h3 class="caps horizontal-line-top-drk bottommargin-sm">Office for the Coordination of Humanitarian Affairs (OCHA)</h3> - You can see the cascading classing in this. Note that you can pick out the

horizontal-line-top-drkfound by selector gadget earlier. - Add the ‘.’ to the front to designate it as a CSS class selector or

".horizontal-line-top-drk".

- The Xpath and Full Xpath are:

//\*[@id="node-120624"]/div/div\[3\]/div/div/div\[11\]/div\[2\]/div\[1\]/h3\[1\]/html/body/div\[2\]/div\[1\]/div\[1\]/div/section/section/section/div/div/div\[3\]/div/div/div\[11\]/div\[2\]/div\[1\]/h3\[1\]

- Note they are precise but not as useful for our purposes unless we need to select a single element.

- The XPath language can be very flexible though for identifying groups of elements based on locations in the DOM.

7.10.3 How These Tools Fit into the Workflow

- SelectorGadget is best for rapid discovery and hypothesis generation.

- Developer Tools are best for confirming structure and understanding why a selector works (or fails).

- Neither tool replaces careful validation in code.

The goal is not to find a selector, but to find a selector that is:

- Conceptually aligned with the data you want,

- Robust to small layout changes, and,

- Appropriate for repeated extraction.

7.11 Develop Extraction Strategies that Emphasize Stability Over Convenience

Selectors are not the goal. Reliable, maintainable data extraction is the goal.

Modern web scraping succeeds by choosing what layer to extract from and how to anchor that extraction so it remains robust when pages evolve.

- In practice, this usually means preferring strategies that rely on structure and meaning at the HTML layer, rather than what you see at the browser’s interface presentation.

7.11.1 Strategy 1: Extract Content from Element Attributes if possible

When possible, extract data from HTML attributes rather than the visible text.

It is quite common for HTML elements to have attributes that duplicate or partially summarize their visible content.

- This happens most often when the content serves an additional purpose beyond display, such as a navigation link, accessibility, indexing, or interaction with JavaScript.

In practice this means:

- Select the element you care about (often a link, image, or container).

- Extract the attribute values from those elements (for example

href,src,data-*,aria-*).

Attributes are often more stable than visible text because they support core page behavior (navigation, accessibility, SEO), so developers are less likely to change them casually.

Common examples include:

- Links (

<a>)- The visible link text is often paired with a meaningful href attribute.

- The href may contain identifiers or structured information not visible on the page.

- Accessibility attributes (

aria-*,title,alt)- These attributes frequently repeat or paraphrase visible text to support screen readers and assistive technologies.

- As a result, they often provide cleaner or more complete versions of the content.

- Data attributes (

data-*)- Developers often store canonical or machine-readable values in attributes while rendering a formatted or abbreviated version as visible text.

- Metadata (

<meta>,<link>)- These elements may contain information that is not directly visible on the page but represents the same underlying content (e.g., page titles, descriptions, canonical URLs).

Attribute-first extraction is frequently more stable than scraping visible text since the attributes often support core functionality (navigation, accessibility, SEO).

- Developers are less likely to rename or remove them casually.

- They can exist even when visible text is dynamically generated.

In the IMDb example, each movie title is wrapped in an anchor <a> tag whose href contains the movie’s unique identifier.

- The

hrefoften contains a stable title identifier (for example anhref = "/title/tt.../ path"), which is usually more stable than the surrounding layout or text.

However, it is not safe to assume that every element will expose its content in attributes.

- Some attributes contain only identifiers or flags.

- Some content exists only as rendered text.

- Some attribute values may be incomplete or intentionally abbreviated.

For scraping, the practical rule is:

**Always inspect both the visible text and the attributes of candidate elements, and prefer attributes when they provide a stable, structured representation of the same information.*

Attribute-first extraction is a strategy for robustness, but not something you can always do for all the content of interest.

7.11.2 Strategy 2: Layout-Oriented Scoping (Page-Region Selection)

The first step in stable extraction is often to limit the scrape to the section of the page layout that contains the content of interest.

Modern pages frequently include multiple regions that share similar classes or elements, such as:

- navigation menus,

- sidebars,

- promotional panels,

- “related content” sections,

- footers.

Layout-oriented scoping means excluding unrelated regions so you can focus on the content of interest before attempting fine-grained extraction.

- Identify a container element (often a

<div>) that defines the relevant page region, e.g., in Section 7.10.1.1, the.col-sm-12container for the UN box with the grey background. - Use that container as the leading (leftmost) part of your selector, when helpful, e.g.,

.col-sm-12.grey-well.

At this stage, the goal is not to extract individual fields, but to answer: Which part of the page should I scrape at all?

Example (conceptual): Instead of selecting .ipc-title__text globally across the DOM, scope it to a layout region: .main-content .ipc-title__text

- This reduces accidental matches from headers, side panels, or repeated page components.

7.11.3 Strategy 3: Logical Extraction Within (Parent–Child) Items

Once the correct page region is isolated, focus shifts from layout to logical structure within the DOM.

Suppose we want to scrape multiple fields from a page where the same CSS selectors repeat. e.g., the titles, rankings, and ratings of movies in the IMDb Top 100 list.

- We can often identify CSS selectors for each individual field.

- However, extracting these fields globally can lead to subtle problems.

- If one movie is missing a rating or year, you may extract 99 values instead of 100.

- At that point, it can be difficult to determine which item is missing data without extensive post-cleaning.

Logical extraction addresses this problem by exploiting the hierarchical relationships in the DOM.

Instead of extracting child elements directly, we:

- extract parent elements that represent complete logical items first, and

- extract child elements relative to each parent.

Logical extraction ensures

- Correct alignment of fields within each item.

- Robust handling of optional or missing child elements as they are correctly associated with their parent.

- Stability when the page layout changes but the item structure remains consistent.

Logical extraction workflow:

- Identify a parent element that represents one logical item (for example, a movie, product, or article).

- It should contain the child elements of interest

- Identify the CSS selector for the parent element and each child element of interest.

- Extract all the parent elements first.

- For each parent element, extract child elements using selectors scoped within that parent (parent CSS as the left of the CSS selector for each child, separated by a space).

- If a child element is missing for a given parent, record its value as

NA.

This strategy is also know as anchoring but this does not refer to the HTML <a> (anchor) tag.

- Anchoring means scoping selectors to a stable parent element in the DOM, so child selectors match elements only within the intended logical context.

You should always validate the result using element counts and spot checks and inspection of missing elements.

Anchoring selectors in this way reduces over-selection, improves robustness to layout changes, and makes extraction code easier to understand and maintain.

IMDb example: Anchoring a Parent to a Child selector

- First extract all movie containers using the parent selector

.dli-parent - Then, for each parent (container), extract title, year,, score, link, …. using selectors scoped within that parent.

This parent-first approach is quite helpful when scraping complex pages with repeated, partially optional content.

7.11.4 Strategy 4: Treat Tables as Data

If a page contains actual HTML tables, the scraping package may provide functions specifically designed to extract tabular data rather than working with plain text.

rvest::html_table()works well with well-formed, nearly rectangular tables, returning the result directly as a tibble.- This is usually much faster and more reliable than scraping text and manually restructuring it into rows and columns.

- Header rows, column alignment, and basic type coercion are often handled automatically.

That said, table-based extraction still requires care:

- Table structure can vary across pages (missing columns, extra header rows, merged cells).

- Column names and ordering may differ slightly between tables.

- Normalization is often required before combining tables from multiple pages.

When available, HTML tables are often the most convenient and least brittle source of structured data, but you should still validate shape, column meanings, and consistency before analysis.

If your target page contains clean HTML tables:

- In R: use

html_table(). - In Python: try

pandas.read_html()first.

If tables are irregular, nested, or mixed with non-tabular content:

- Use DOM-based extraction ({rvest} or BeautifulSoup) at the table element level and build the table manually.

Stable scraping depends more on understanding page structure than on the language or library used.

7.12 From Page Access to Data Extraction: A Practical Workflow

Once you have confirmed access to a page and verified it returns a valid HTML document, the focus shifts from requesting content to extracting data.

- While the underlying concepts are language-independent, we will use {rvest} (with {xml2}) in the examples to demonstrate the workflow in R.

At this stage, a best practice is to work from a saved copy of the page rather than repeatedly requesting it from the website.

- This avoids unnecessary network traffic and rate limits, makes your work reproducible and separates extraction logic from access and gating issues.

In the sections that follow, we assume:

- The HTML page has already been retrieved successfully, e.g., with

read_html(url)orread_html(sess) - A local copy of the page is available on disk.

- We are working purely at the HTML / DOM layer.

Before writing extraction code, you must identify the selectors for the parent containers and child elements of interest, guided by the extraction strategies described earlier (layout scoping, logical parent–child structure, attributes, tables, or embedded data).

- When tables are present, prefer table-aware extraction; otherwise, select and extract child elements relative to their parent containers to preserve alignment and robustness.

The basic workflow revisited:

- Use

read_html()to access the HTML document (from a URL, an HTML file, or an HTML string) to create a copy in memory.- Since

read_html()is an {xml2} function, the result is a list object with classxml_document.

- Since

- Use

html_element(doc, CSS)orhtml_elements(doc, CSS)to extract the element/elements of interest from the xml_document object with the CSS selectors you have identified.- The result is a list object with class

xml_nodeif one element is selected or,xml_nodeset, if more than one element is selected.

- The result is a list object with class

- Extract data from the selected elements using the appropriate helper function:

html_name(x): extract the HTML tag name of the element(s) (xml_document,xml_node, orxml_nodeset)html_attr()orhtml_attrs(): extract one or more attribute(s) of the element(s). The result is always a string so may need to be cleaned.html_text2(): extract the text inside the element with browser-style formatting of white space orhtml_text()for original text code formatting (which may look messy).html_table(): extract data structured as an HTML table. It will return a tibble (if one element is selected) or a list of tibbles (when multiple elements are selected).

This workflow provides a consistent foundation for extracting, restructuring, and validating data from web pages using R.

The following examples demonstrate how the extraction and strategies fit together.

7.13 Example 1: Scraping a Static Page (UN Humanitarian Aid)

For reproducibility (and to avoid unnecessary requests), we will work from the HTML file we saved earlier.

- When reading from disk, you are working entirely at the HTML/DOM layer and are insulated from changes in access gating, rate limits, and the page DOM that may have occurred since you downloaded the file.

- When done, you can create and save a new file to confirm the code still works.

- Load the Saved HTML file

Use read_html() to read from a URL, a file path, or an HTML string.

- Extract Elements from the Saved Document

We can use Selector Gadget to find the selector for the box with the grey background is

".col-sm-12.grey-well"Use

html_element()when you expect one match, andhtml_elements()when you expect multiple matches.- We expect one box

- Extract Text from the Selected Element

html_text2()returns browser-style normalized text (typically what you want for scraping).

We have “scraped” the data of interest and converted it into a character vector.

- However, as suspected, it is a lot of text with only new line characters to indicate any kind of structure It would take a lot of cleaning to figure this out.

Instead, let’s try selecting individual elements to see if that is better.

- Use the CSS selectors for the Office Names and their HQs locations and extract the text for those elements.

- You should get a character vector of 9 + 9 = 18 elements.

- Convert into a tidy data frame.

- Approach 1. Scrape each individually and combine into data frame (works here because both vectors are complete and aligned).

- Approach 2. Extract together, then reshape (more general when you extend to more fields).

html_elements(un_html_obj,

1 css = ".horizontal-line-top-drk, .horizontal-line-top-drk+ p") |>

2 html_text2() |>

3 tibble(un_text = _) |>

4 mutate(

office_id = rep(1:9, each = 2),

5 id = if_else(row_number() %% 2 == 1, "office", "location")

) |>

6 pivot_wider(names_from = id, values_from = un_text) |>

7 select(-office_id)

un_aid_offices - 1

- Combine the two (or more) selectors into a character string separated by commas.

- 2

- Extract the text as a character vector.

- 3

-

Convert the vector into a tibble with column name

un_text. - 4

- Add a column to uniquely identify each pair of office and location.

- 5

-

Add a column

idwith what will be the new column names. - 6

-

Pivot wider based on the new column names column and

un_text. - 7

-

Remove the

office_idas no longer needed.

Now that you have a tidy tibble, you can clean the text as desired, e.g.,

7.14 Example 2: Scraping a Modern Site (IMDb)

This example enables us to practice:

- Identifying CSS selectors using the Developer Tools

- Recognizing the potential for Layout-oriented Scoping

- Recognizing when classes are absent or brittle and need parent context

- Using Parent-element alignment for item-level extraction (Logical Extraction)

- Identifying when “only the first N items are present” due to infinite scroll

- Considering Embedded Data

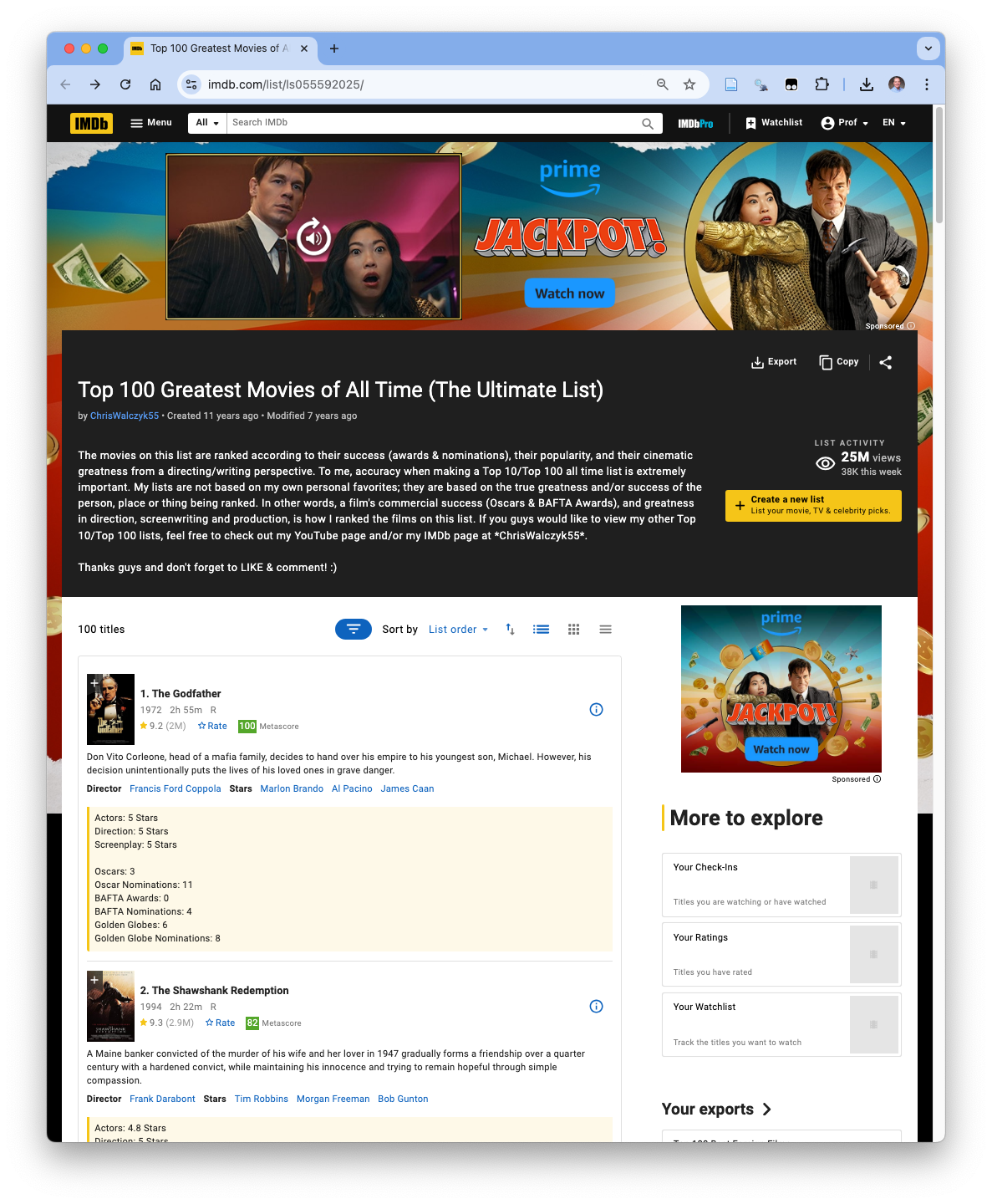

We want to scrape data about the top 100 greatest movies of all time from IMDB (per Chris Walczyk55).

- The URL: https://www.imdb.com/list/ls055592025/ shows a page that looks something like Figure 7.11.

- We are pretending IMDB did not add an export option to download all the data in a spreadsheet….

7.14.1 Load the Saved HTML page

We saved the page as an HTML file so we can now read it in with read_html("path/filename")

The web page R object is an R list where the $head and $body elements contain pointers to the elements in the parts of document.

- The web page document is the top level, single node, for the page so the parent class is

xml_nodeinstead of"node_set".

We can extract the element name and the attributes for the page as well.

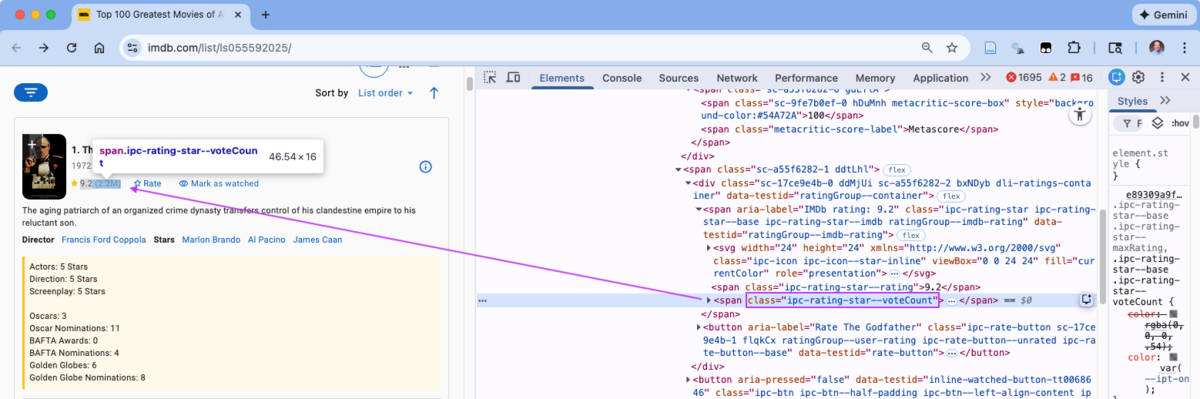

We want to get the data for each of the 100 movies for the following elements

- Rank

- Title

- Metascore

- Year

- Votes

7.14.2 Identifying CSS Selectors with Selector Gadget

7.14.2.1 Rank and Title

When we start Selector Gadget and try to select the ranking alone, SelectorGadget does not isolate it cleanly.

- Instead, it consistently highlights the rank and title together as a single text block.

- In practice, this means our first selector will likely return a combined value such as “1. The Godfather”.

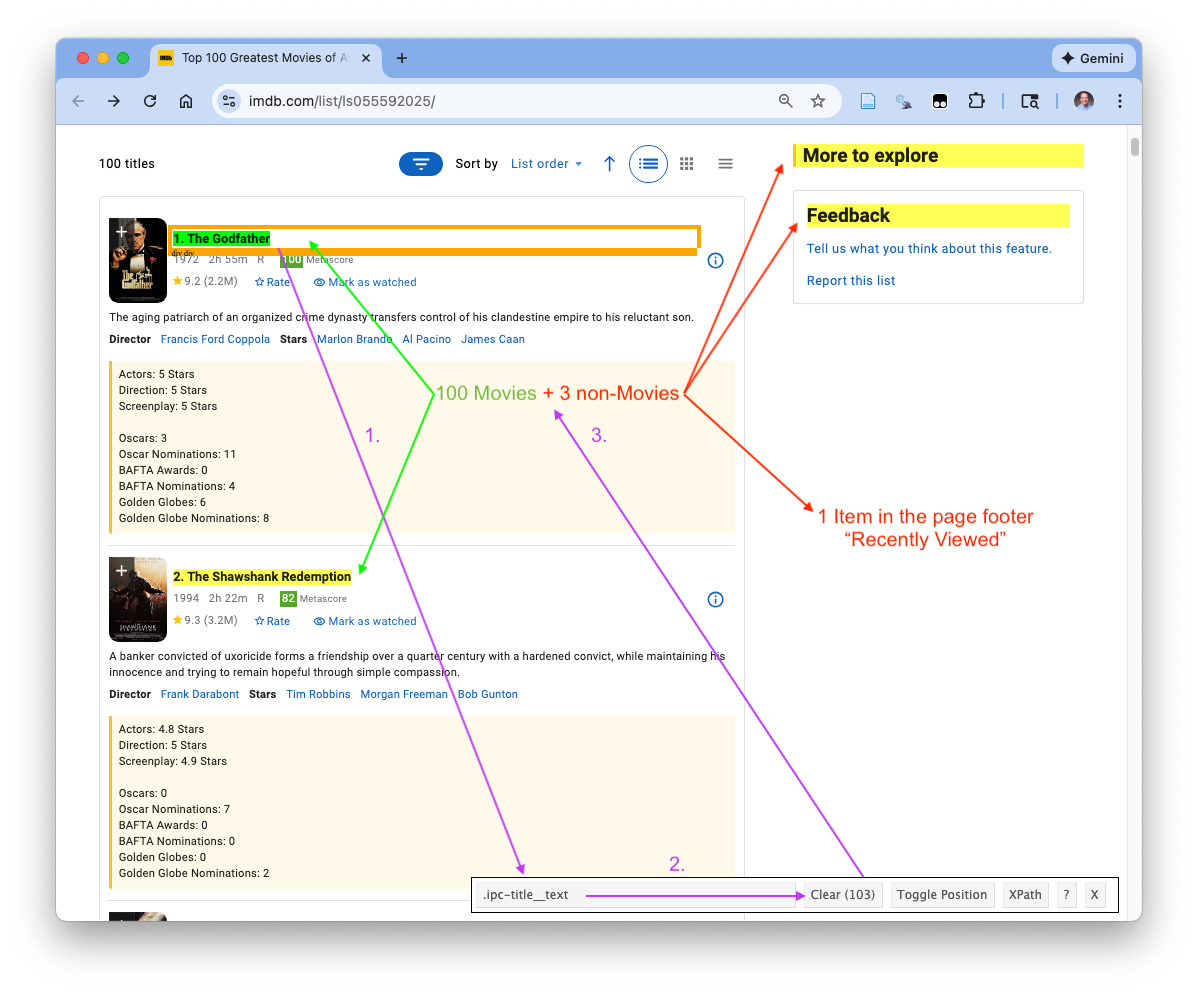

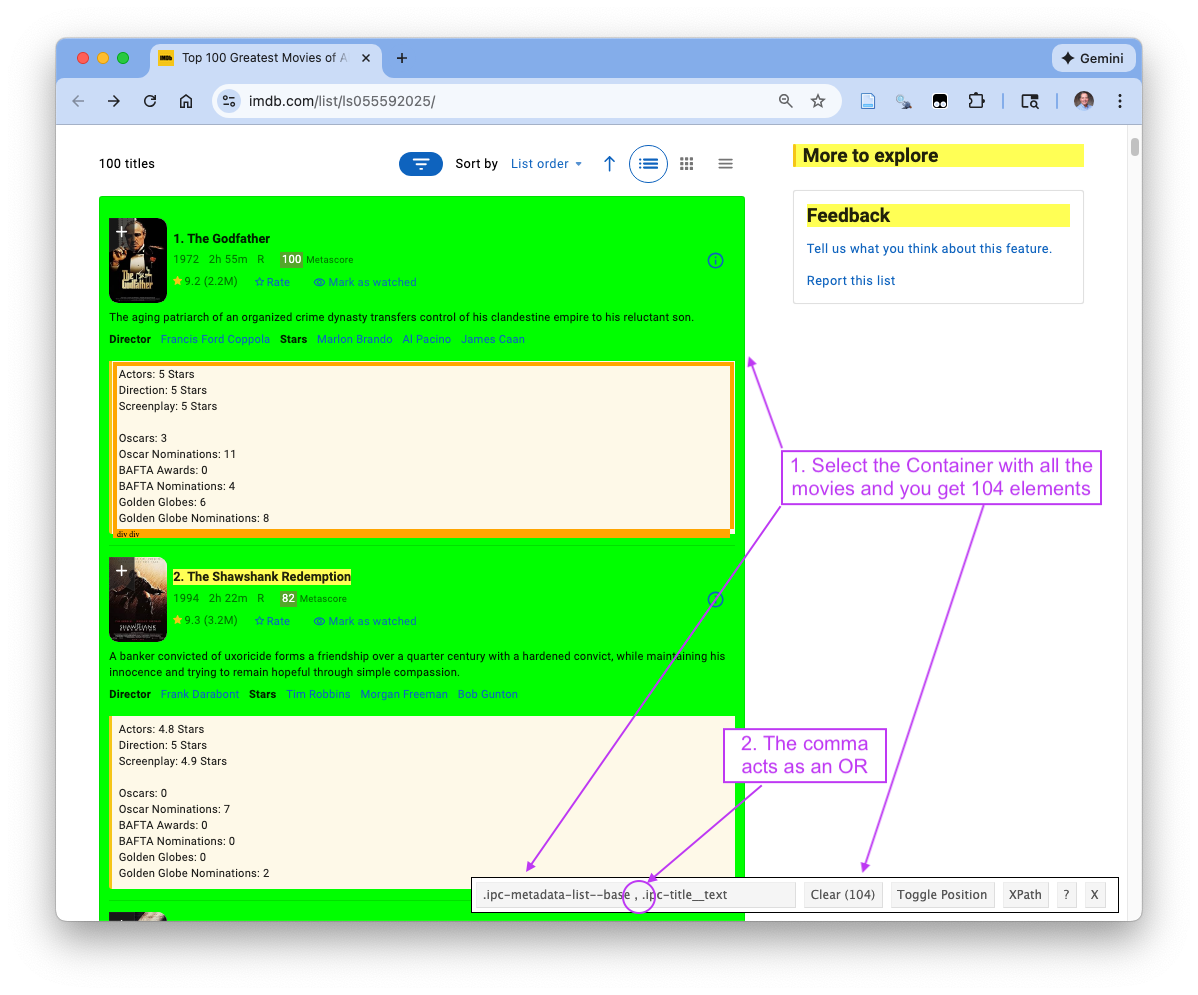

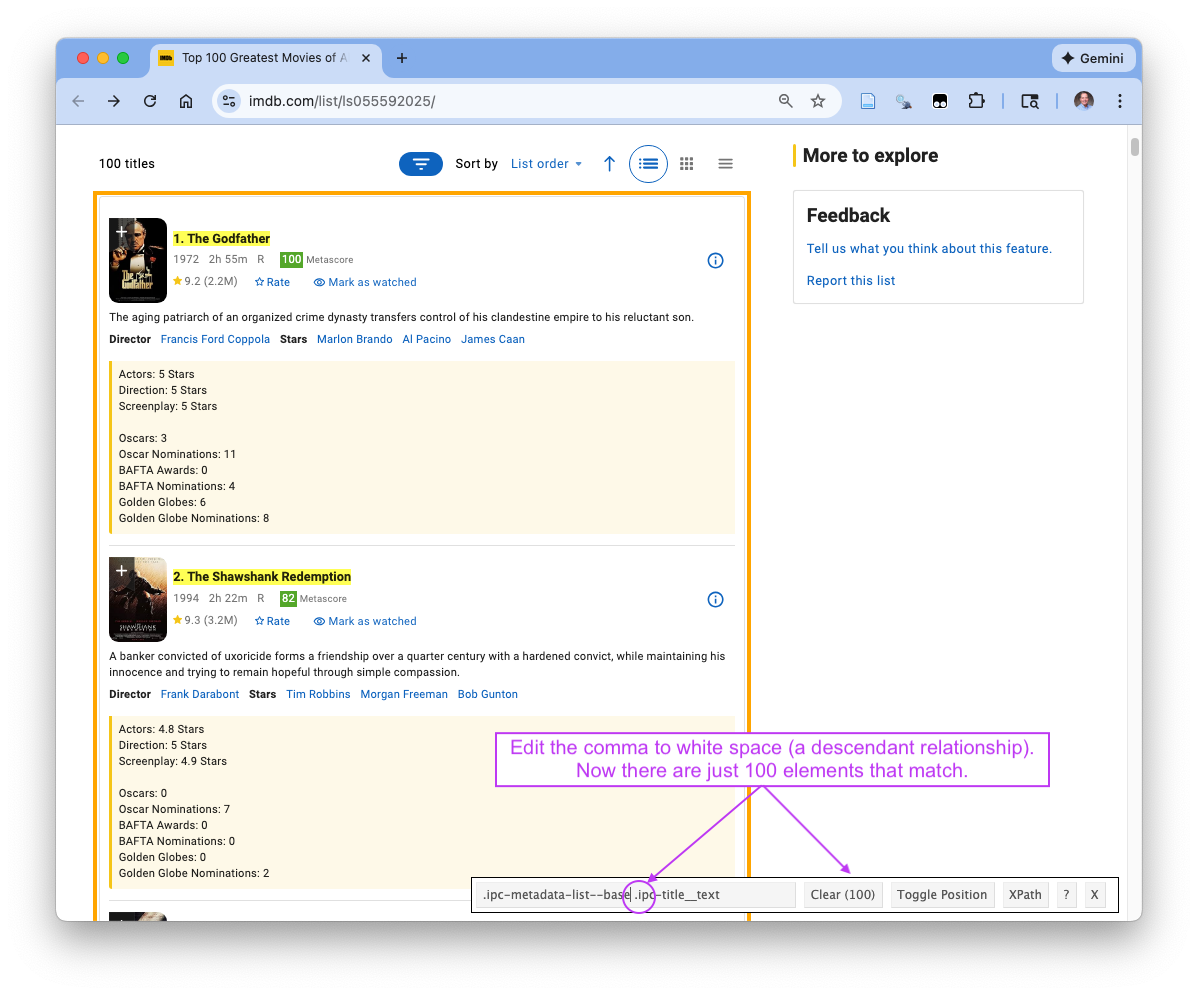

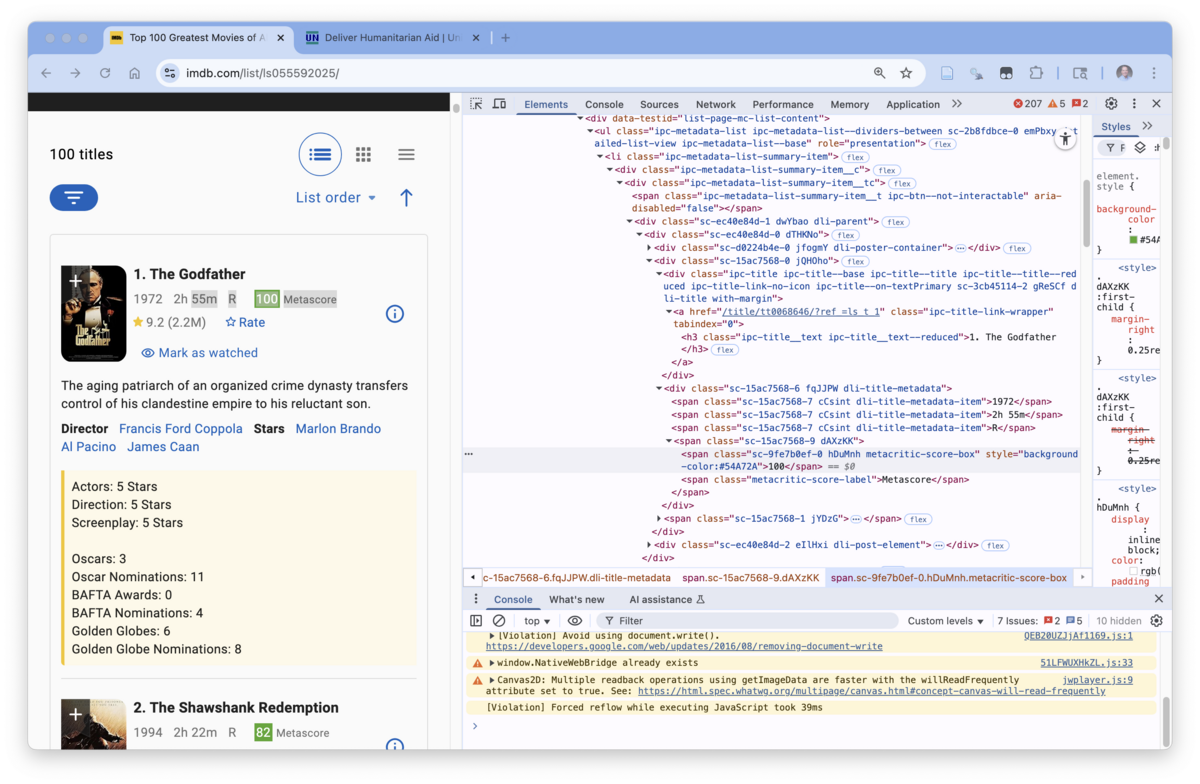

Figure 7.12 shows that the selector ipc-title_text matches 103 items which is more than we want.

- Some of these matches come from other headings or title-like elements elsewhere on the page.

- This is a common failure mode when a class is reused across multiple page regions.

- The fix is layout-oriented scoping: restrict the selector to the region of the DOM that contains only the movie list.

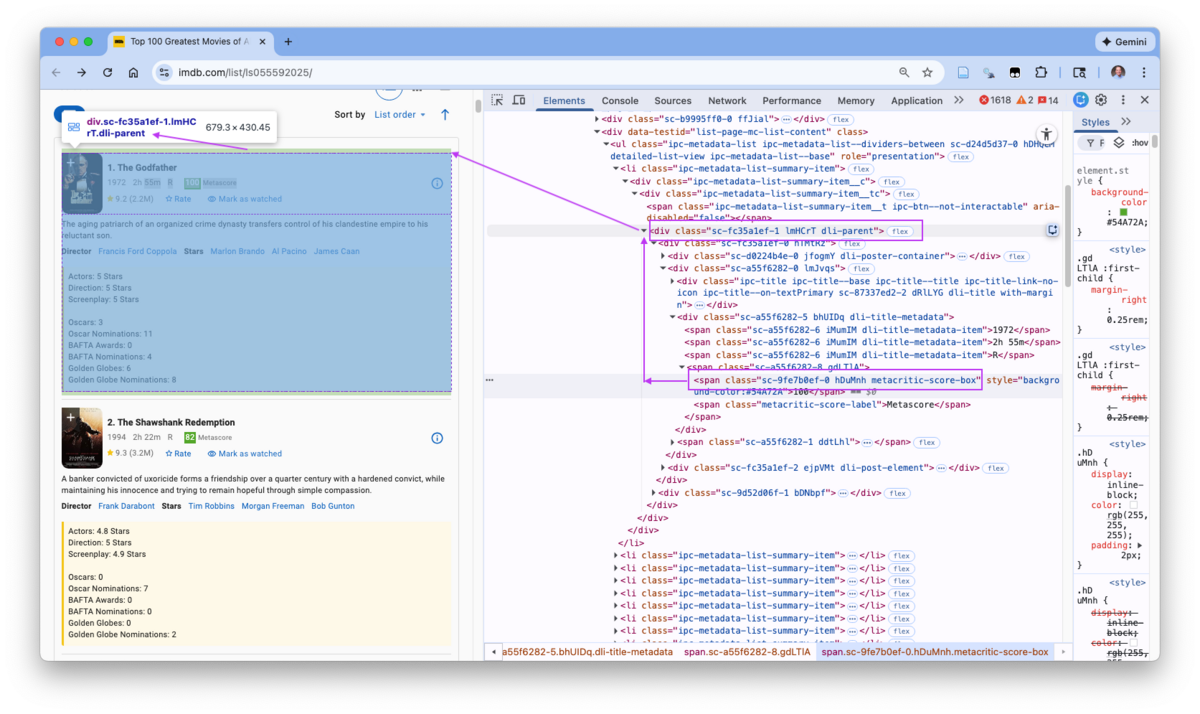

Figure 7.13 shows Selector Gadget can also identify a container element that encloses the movie list.

- However, this still gets use 104 elements (we added the container)

- Looking at the selector in the information box, there a comma between the container class selector and the Rank and Title class selector which in CSS means “either/or” (a union).

- It is matching elements that are the container or the Rank and Title selector, thus the 104.

- To scope the titles within the container, we need a descendant selector:

A B(with a space) matches elements that matchBthat occur anywhere insideA.

- So we replace the comma with a single space.

Figure 7.14 now shows that replacing with a single whitespace creates the proper selector that yields 100 elements for the Rank and Title.

- Visually inspect the page to confirm all the movies ranks and title are selected (in green or yellow) and that no unrelated headings are included.

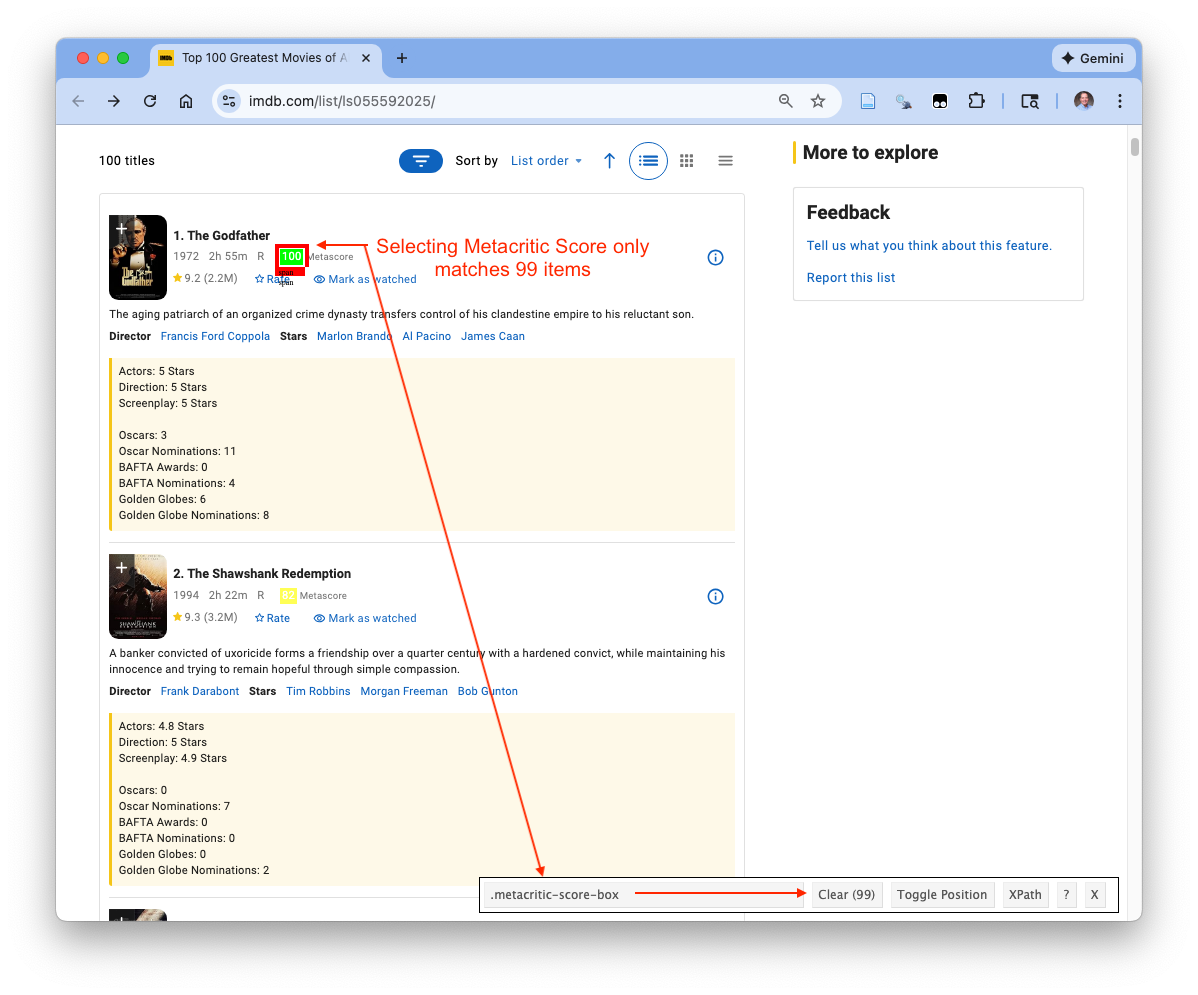

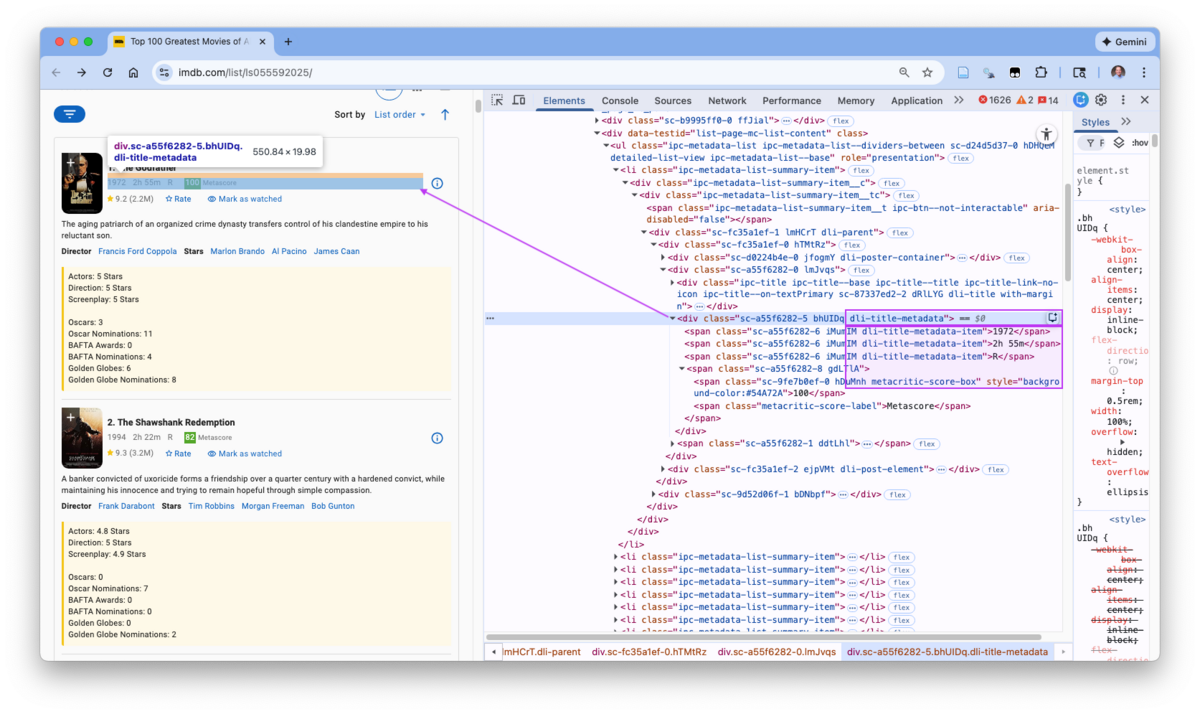

7.14.2.2 Metascore

Figure 7.15 shows SelectorGadget can identify a selector for the Metascore element.

- However, we do not get a clean 100-to-100 match:

- The selector returns 99 elements, which strongly suggests that at least one movie does not have a Metascore element (or it is represented differently for that movie).

- A movie is missing the element but we don’t know which one.

- If we scrape Metascore as a standalone vector, we immediately create an alignment problem: we have no reliable way to know which movie is missing the value.

This is exactly the situation where logical extraction using parent items is safer:

First select the parent element for each movie (one parent per movie).

Then, within each movie parent, extract the Metascore child element (or return NA if it is missing).

Even though the rendered page layout suggests an obvious “movie card” container, the best practice is to confirm the true parent structure in the DOM using browser Developer Tools before committing to the selector.

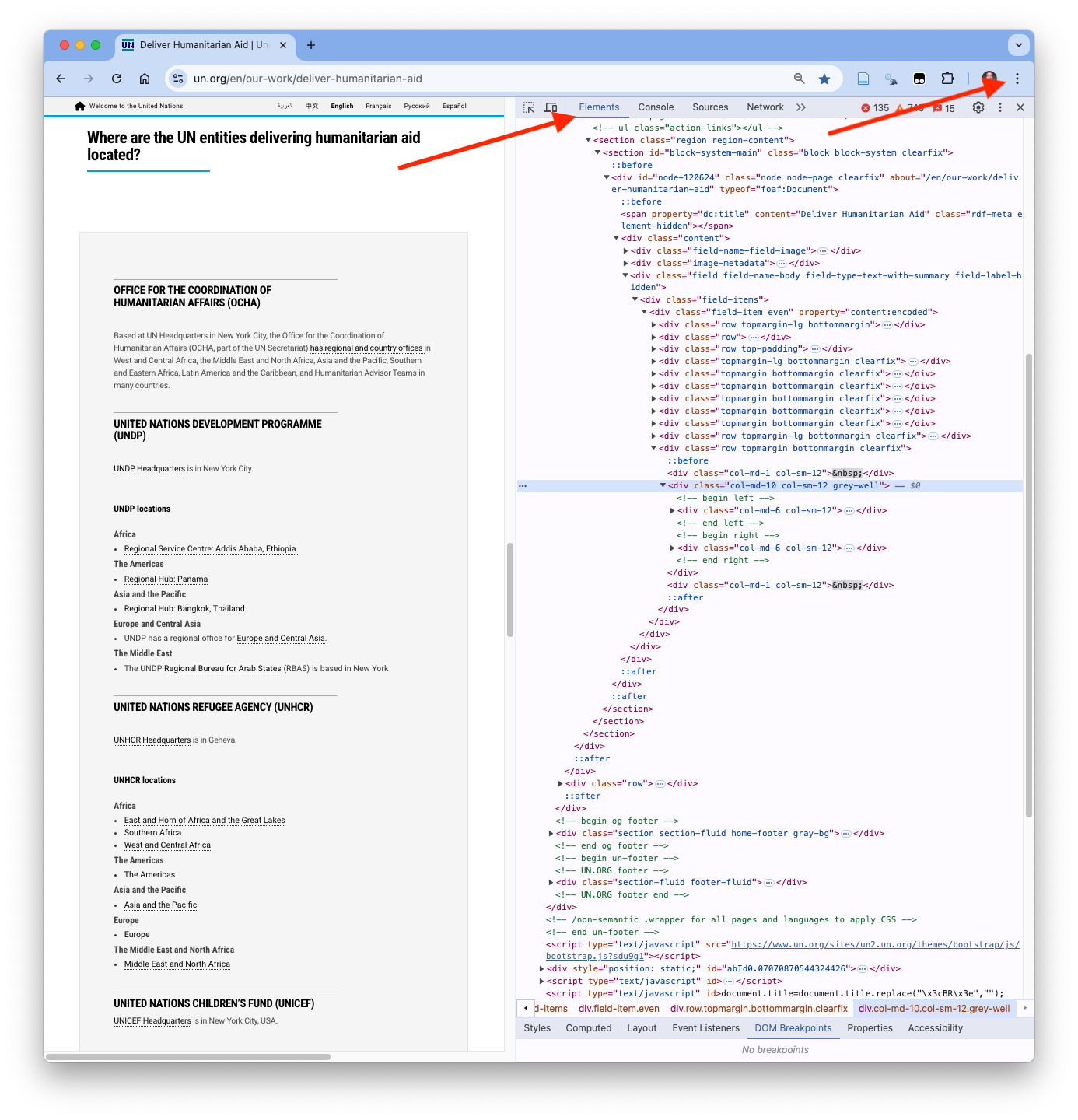

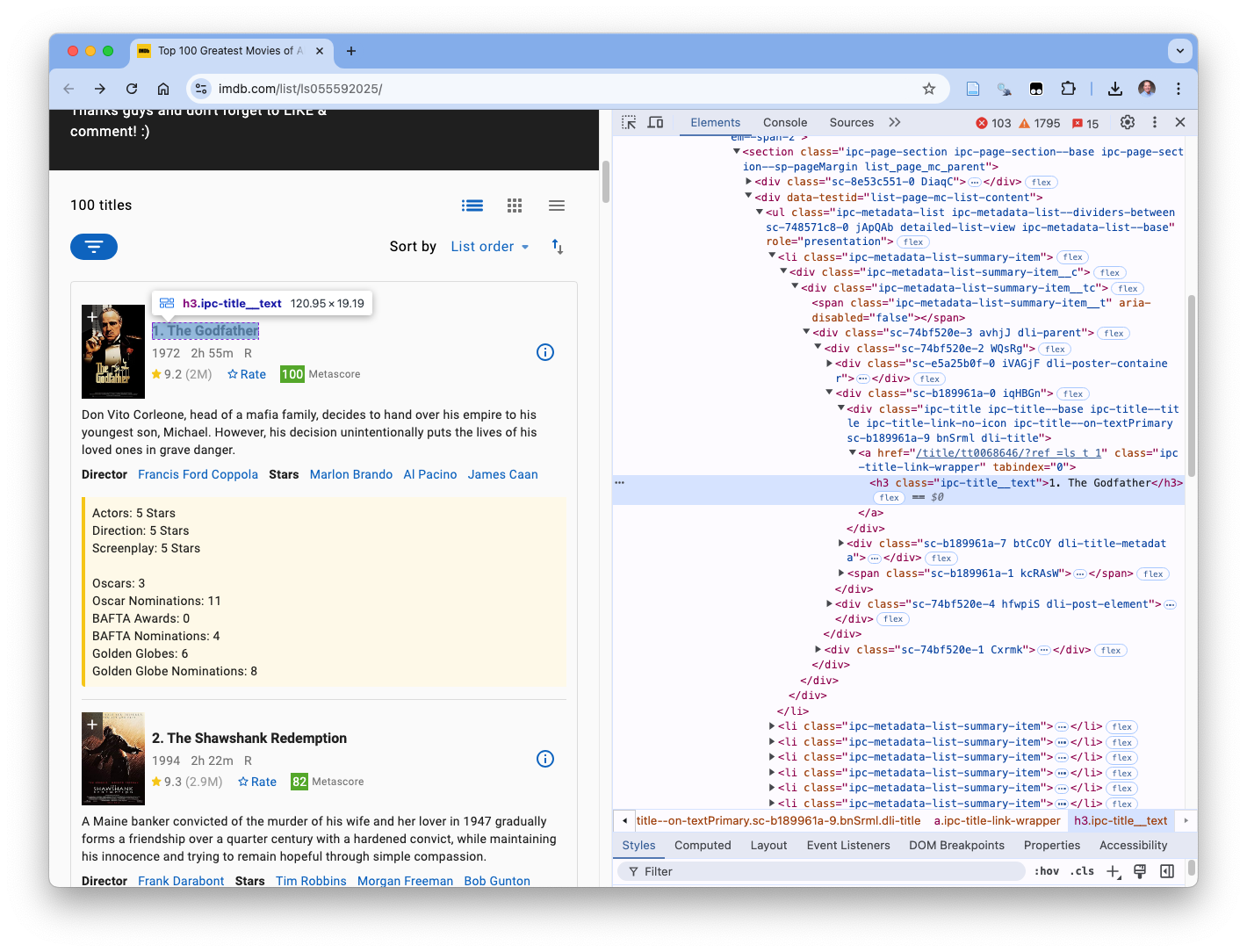

7.14.3 Identifying CSS Selectors with Browser Developer Tools

Whereas Selector Gadget navigated the DOM behind the scenes using heuristics to identify a selector, we will not navigate the DOM explicitly to find CSS selectors.

- This is a more effective way to integrate the Extraction Strategies from Section 7.11 into our workflow.

7.14.3.1 Rank and Title

Go to the web page (make sure Selector gadget is off) and right-click on the first movie’s rank (1), and chose Inspect.

Figure 7.16 shows the rank and title are in a single element with class ipc-title__text.

- This explains why Selector Gadget could not identify a selector just for Rank as there was none.

- The Rank number is a text value in the element along with the title.

7.14.3.2 Metascore (Logical Scoping)

Figure 7.17 shows that if we Inspect Metascore, it has its own element with class, metacritic-score-box.

- The Selector Gadget CSS selector of

.metacritic-score-boxis accurate. - However, we need to find a parent element for logical scoping since there are only 99 of the elements.

- Inspect the Metascore element on the left to find its line in the DOM in the Elements pane.

- Work your way up the DOM tree element by element.

- As each element is highlighted it may highlight the corresponding element on the rendered page on the left.

- Eventually a “movie” element is highlighted in the rendered page on the left and also highlighted in the DOM tree in the Elements Pane.

Figure 7.18 shows the path from the metascore element to the “movie” element and that the movie element is the parent of metascore.

- Both the pop-up on the rendered page and the element line in the Elements Pane show the class of the movie element is

dli-parent.