15 Text Analysis 1

text analysis, tokens, bag of words, tidytext, frequency analysis, sentiment analysis

15.1 Introduction

15.1.1 Learning Outcomes

- Create strategies for analyzing text.

- Manipulate and analyze text data from a variety of sources using the {tidytext} package for …

- Frequency Analysis

- Relationships Among Words

- Sentiment Analysis

- Build Word Cloud plots.

15.1.2 References:

- Text Mining with R by Julia Silge and David Robinson (Silge and Robinson 2022)

- {tidytext} package (Silge and Robinson 2016)

- {tidytext} website (Silge and Robinson 2023a)

- {tidytext} GitHub (Silge and Robinson 2023b)

- {janeaustenr} package (Silge 2023)

- {gutenbergr} package (Robinson and Johnston 2023)

- {wordcloud} package (Fellows 2018)

15.1.2.1 Other References

- {scales} package (Wickham and Seidel 2022)

15.2 Text Analysis with {tidytext}

- Most of this material is taken from Text Mining with R by Julia Silge and David Robinson.

Text Mining can be considered as a process for extracting insights from text.

- Computer-based text mining has been around since the 1950s with automated translation, or since the 1940s if you count computer-based code-breaking (see Trying to Break Codes).

The CRAN Task View: Natural Language Processing (NLP) lists over 50 packages focused on gathering, organizing, modeling, and analyzing text.

In addition to text mining or analysis, NLP has multiple areas of research and application.

- Machine Translation: translation without any human intervention.

- Speech Recognition: Alexa, Hey Google, Siri, … understanding your questions.

- Sentiment Analysis: also known as opinion mining or emotion AI.

- Question Answering: Alexa, Hey Google, Siri, … answering your questions so you can understand.

- Automatic Summarization: Reducing large volumes to meta-data or sensible summaries.

- Chat bots: Combinations of speech recognition and question answering with short-term memory and context for specific domains.

- Market Intelligence: Automated analysis of your searches, posts, tweets, ….

- Text Classification: Automatically analyze text and then assign a set of pre-defined tags or categories based on its content e.g., organizing and determining relevance of reference material

- Character Recognition.

- Spelling and Grammar Checking.

Text analysis and natural language processing are foundational technologies for generative AI (see What is generative AI?).

15.3 Organizing Text for Analysis and Tidy Text Format

There are multiple ways to organize text for analysis:

- strings: character data in atomic vectors or lists (data frames)

- corpus: a library of documents structured as strings with associated metadata, e.g., the source book or document

- Document-Term Matrix (DTM): a matrix with a row for each document and a column for every unique term or word across every document (i.e., across all rows).

- The entries are generally counts or tf-idf (term frequency - inverse document freq) scores for the column’s word in the row’s document.

- With many documents, most entries are 0, so the matrix is usually stored as a sparse matrix.

- The Term-Document Matrix (TDM) is the transpose of the DTM.
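To make the DTM idea concrete, here is a minimal sketch that builds one from two made-up documents. The toy data and the object names (docs, word_counts, dtm, tdm) are assumptions for illustration; cast_sparse() is one of several {tidytext} helpers for this, and it returns a sparse matrix from the {Matrix} package.

```r
library(dplyr)
library(tibble)
library(tidytext)

# Two toy "documents" (made up for illustration)
docs <- tibble(
  doc  = c("d1", "d2"),
  text = c("the cat sat", "the dog sat down")
)

# One row per (document, word) pair with its count
word_counts <- docs |>
  unnest_tokens(word, text) |>
  count(doc, word)

# Cast to a sparse matrix: one row per document, one column per unique term
dtm <- cast_sparse(word_counts, doc, word, n)
dim(dtm)

# The Term-Document Matrix is the transpose of the DTM
tdm <- Matrix::t(dtm)
dim(tdm)
```

Even in this tiny example several entries are 0 (e.g., "dog" in d1), which is why sparse storage pays off as the vocabulary grows.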

We will focus on organizing bodies of text into Tidy Text Format (TTF).

- Tidy Text Format requires organizing text into a tibble/data frame with the goal of speeding analysis by allowing use of familiar tidyverse constructs.

In a TTF tibble, the text is organized so as to have one token per row.

- A token is a meaningful unit of text where you decide what is meaningful to your analysis.

- A token can be a word, an n-gram (multiple words), a sentence, a paragraph, or even larger sets up to whole chapters or books.

The simplest approach is analyzing single words or n-grams without any sense of syntax or order connecting them to each other.

- This is often called a “Bag of Words” as each token is treated independently of the other tokens in the document; only the counts or tf-idfs matter.

More sophisticated methods now use neural word embeddings, where words are encoded into vectors that attempt to capture (through training) the context from other words in the document (usually based on physical or semantic distance).

- Word2Vec and Google’s BERT are two examples. See Beyond Word Embeddings Part 2.

15.3.1 General Concepts and Language Specifics

We will be only looking at text analysis for the English language.

- The techniques may be similar for many other Indo-European languages that have similar structure.

While the concepts we will use apply to other languages, the details can be more complex.

- See Chinese Natural Language Processing and Speech Processing, a Text Mining Toolkit for Chinese in R,

- Indic NLP (Python), or

- {KoNLP} Korean NLP R Package, or the

- {udpipe} R package for NLP with non-English Languages.

Research is continuing with other languages, e.g., the release of a multi-lingual version of BERT.

15.4 The {tidytext} package

The {tidytext} package contains many functions to support text mining for word processing and sentiment analysis.

- It is designed to work well with other tidyverse packages such as {dplyr} and {ggplot2}.

- Use the console to install the package and then load {tidyverse} and {tidytext}.

15.4.1 Let’s Organize Text into Tidy Text Format

Example 1: A famous love poem by Pablo Neruda.

Read in the following text from the first stanza.

text <- c(

"If You Forget Me",

"by Pablo Neruda",

"I want you to know",

"one thing.",

"You know how this is:",

"if I look",

"at the crystal moon, at the red branch",

"of the slow autumn at my window,",

"if I touch",

"near the fire",

"the impalpable ash",

"or the wrinkled body of the log,",

"everything carries me to you,",

"as if everything that exists,",

"aromas, light, metals,",

"were little boats",

"that sail",

"toward those isles of yours that wait for me."

)

text

[1] "If You Forget Me"

[2] "by Pablo Neruda"

[3] "I want you to know"

[4] "one thing."

[5] "You know how this is:"

[6] "if I look"

[7] "at the crystal moon, at the red branch"

[8] "of the slow autumn at my window,"

[9] "if I touch"

[10] "near the fire"

[11] "the impalpable ash"

[12] "or the wrinkled body of the log,"

[13] "everything carries me to you,"

[14] "as if everything that exists,"

[15] "aromas, light, metals,"

[16] "were little boats"

[17] "that sail"

[18] "toward those isles of yours that wait for me."

Let’s get some basic info about our text.

- Check the length of the vector.

- Use map() to check the number of characters in each element.

- Use map_dbl() to count the number of words in each element.

- Use map_dbl() to count the total number of words.

[1] 18

[1] 16 15 18 10 21 9 38 32 10 13 18 32 29 29 22 17 9 45

[1] 4 3 5 2 5 3 8 7 3 3 3 7 5 5 3 3 2 9

[1] 80

- You get a character vector of length 18 with 80 words.

- Each element has different numbers of words and letters.
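The code behind these counts is not shown, so here is one possible sketch using only the first four lines of the poem. The names poem, n_chars, and n_words are hypothetical, and map_int() is used for the character counts (an assumption; the notes mention map()) so the result prints as a vector rather than a list.

```r
library(purrr)
library(stringr)

# First four lines of the poem, to keep the sketch short
poem <- c(
  "If You Forget Me",
  "by Pablo Neruda",
  "I want you to know",
  "one thing."
)

length(poem) # number of elements in the vector

# Characters in each element (spaces and punctuation included)
n_chars <- map_int(poem, nchar)
n_chars

# Words in each element, counted as runs of non-whitespace characters
n_words <- map_dbl(poem, \(x) str_count(x, "\\S+"))
n_words

# Total number of words
sum(n_words)
```

The per-line values agree with the first four entries of the output above (16, 15, 18, 10 characters and 4, 3, 5, 2 words).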

This is not a tibble so it can’t be tidy text format with one token per row.

We’ll go through a number of steps to gradually transform the text vector to tidy text format and then clean it so we can analyze it.

15.4.1.1 Convert the text Vector into a Tibble

Convert text into a tibble with two columns:

- Add a column line with the “line number” from the poem for each row based on the position in the vector.

- Add a column text with each element of the vector in its own row.

- Adding a column of indices for each token is a common technique to track the original structure.
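A minimal sketch of this conversion, assuming a shortened version of the poem vector (poem_lines is a hypothetical name; the notes call the full vector text and the result text_df):

```r
library(tibble)

# Shortened stand-in for the full `text` vector
poem_lines <- c(
  "If You Forget Me",
  "by Pablo Neruda",
  "I want you to know",
  "one thing."
)

# `line` records each element's position; `text` holds the element itself
text_df <- tibble(
  line = seq_along(poem_lines),
  text = poem_lines
)
text_df
```

seq_along() is used rather than 1:length() so that an empty vector would give zero rows instead of an error-prone 1:0 sequence.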

# A tibble: 10 × 2

line text

<int> <chr>

1 1 If You Forget Me

2 2 by Pablo Neruda

3 3 I want you to know

4 4 one thing.

5 5 You know how this is:

6 6 if I look

7 7 at the crystal moon, at the red branch

8 8 of the slow autumn at my window,

9 9 if I touch

10 10 near the fire

15.4.1.2 Convert the Tibble into Tidy Text Format with unnest_tokens()

The function unnest_tokens() converts a column of text from a data frame into tidy text format.

- Look at help for unnest_tokens(), not the older unnest_tokens_().

- The first argument, tbl, is the input tibble so piping works.

- The order might be unintuitive as output is next, followed by the input column.

Like unnesting list columns, unnest_tokens() splits each element (row) in the column into multiple rows with a single token.

- The value of the token is based on the value of the argument token =, which recognizes multiple options: “words” (default), “characters”, “character_shingles”, “ngrams”, “skip_ngrams”, “sentences”, “lines”, “paragraphs”, “regex”, “tweets” (tokenization by word that preserves usernames, hashtags, and URLs), and “ptb” (Penn Treebank).

- The default for the token = argument is “words”.
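The call itself is not shown in the notes, so here is a minimal sketch on the first three lines of the poem. The small text_df is rebuilt here so the block runs on its own, and tidy_words is a hypothetical name.

```r
library(tibble)
library(tidytext)

text_df <- tibble(
  line = 1:3,
  text = c("If You Forget Me", "by Pablo Neruda", "I want you to know")
)

# The tibble is piped in as `tbl`; the new column name (`output`) comes
# before the existing text column (`input`)
tidy_words <- text_df |>
  unnest_tokens(output = word, input = text)

head(tidy_words, 10)
```

Because the arguments are positional, unnest_tokens(word, text) is equivalent; naming them simply makes the output-then-input order explicit.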

# A tibble: 10 × 2

line word

<int> <chr>

1 1 if

2 1 you

3 1 forget

4 1 me

5 2 by

6 2 pablo

7 2 neruda

8 3 i

9 3 want

10 3 you

- This converts the data frame to 80 rows with a one-word token in each row.

- Punctuation has been stripped.

- By default, unnest_tokens() converts the tokens to lowercase.

- Use the argument to_lower = FALSE to retain case.
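As a brief sketch of two of these options, the block below keeps the original case and then switches the token to two-word n-grams. The object names cased and bigrams are hypothetical, and only two lines of the poem are used.

```r
library(tibble)
library(tidytext)

text_df <- tibble(
  line = 1:2,
  text = c("If You Forget Me", "by Pablo Neruda")
)

# Keep the original capitalization
cased <- text_df |>
  unnest_tokens(word, text, to_lower = FALSE)
cased

# Tokenize into overlapping two-word n-grams (bigrams) instead of single words
bigrams <- text_df |>
  unnest_tokens(bigram, text, token = "ngrams", n = 2)
bigrams
```

Note that n-grams are formed within each input row, so no bigram spans the break between line 1 and line 2.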

15.4.2 Remove Stop Words with an anti_join() on stop_words

We can see a lot of common words in the text such as “I”, “the”, “and”, “or”, ….

These are called stop words: extremely common words not useful for some types of text analysis.

- Use data() to load the {tidytext} package’s built-in data frame called stop_words.

- stop_words draws on three different lexicons to identify 1,149 stop words (see help).

Use anti_join() to remove the stop words (a filtering join that removes all rows from x where there are matching values in y).

Save to a new tibble.

- How many rows are there now?

data(stop_words)

text_df |>

unnest_tokens(word, text) |>

anti_join(stop_words, by = "word") |> ## get rid of uninteresting words

count(word, sort = TRUE) -> ## count of each word left

text_word_count

text_word_count

# A tibble: 26 × 2

word n

<chr> <int>

1 aromas 1

2 ash 1

3 autumn 1

4 boats 1

5 body 1

6 branch 1

7 carries 1

8 crystal 1

9 exists 1

10 fire 1

# ℹ 16 more rows

[1] 26

These are the basic steps to get your text ready for analysis:

- Convert text to a tibble, if not already in one, with a column for the text and an index column with row number or other location indicators.

- Convert the tibble to Tidy Text format using unnest_tokens() with the appropriate arguments.

- Remove stop words if appropriate (sometimes we need to keep them as we will see later).

- Save to a new tibble.

15.5 Tidytext Example 2: Jane Austen’s Books and the {janeaustenr} Package

Let’s look at a larger set of text, all six major novels written by Jane Austen in the early 19th century.

The {janeaustenr} package has this text already in a data frame based on the free content in the Project Gutenberg Library.

Use the console to install the package and then load it in your file.

15.5.1 Get the Data for the Corpus of Six Books and Add Metadata

Use the function austen_books() to access the data frame of the six books.

The data frame has two columns:

- text contains the text of the novels divided into elements of up to about 70 characters each.

- book contains the titles of the novels as a factor, with the levels in order of publication.

We want to track the chapters in the books.

Let’s use REGEX to see how the different books indicate their chapters.

# A tibble: 20 × 2

text book

<chr> <fct>

1 "SENSE AND SENSIBILITY" Sens…

2 "" Sens…

3 "by Jane Austen" Sens…

4 "" Sens…

5 "(1811)" Sens…

6 "" Sens…

7 "" Sens…

8 "" Sens…

9 "" Sens…

10 "CHAPTER 1" Sens…

11 "" Sens…

12 "" Sens…

13 "The family of Dashwood had long been settled in Sussex. Their estate" Sens…

14 "was large, and their residence was at Norland Park, in the centre of" Sens…

15 "their property, where, for many generations, they had lived in so" Sens…

16 "respectable a manner as to engage the general good opinion of their" Sens…

17 "surrounding acquaintance. The late owner of this estate was a single" Sens…

18 "man, who lived to a very advanced age, and who for many years of his" Sens…

19 "life, had a constant companion and housekeeper in his sister. But he… Sens…

20 "death, which happened ten years before his own, produced a great" Sens…

Chapters start on their own line, it appears.

austen_books() |>

filter(str_detect(text, "(?i)^chapter")) |> # Case insensitive

slice_sample(n = 10)

# A tibble: 10 × 2

text book

<chr> <fct>

1 Chapter 19 Persuasion

2 Chapter 55 Pride & Prejudice

3 CHAPTER XII Emma

4 Chapter 19 Pride & Prejudice

5 CHAPTER VI Mansfield Park

6 Chapter 14 Persuasion

7 CHAPTER XXXIII Mansfield Park

8 CHAPTER 45 Sense & Sensibility

9 CHAPTER XIV Emma

10 CHAPTER XXII Mansfield Park

- Chapters start with the word “chapter” in either upper or sentence case, followed by a space and then the chapter number in either Arabic or Roman numerals.

Let’s add some metadata to keep track of things when we convert to tidy text format.

- Group by book.

- Add an index column with a row number for the rows from each book (they are grouped).

- Add an index column with the number of the chapter.

Use stringr::regex() with argument ignore_case = TRUE.

- regex() is a {stringr} modifier function with options for how to modify the regex pattern.

- See help for modifiers. For information on line terminators see Regular-expression constructs.
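To see why a running sum of a logical vector yields chapter numbers, consider this small worked sketch on made-up lines (the vector toy_lines and the results is_heading and chapter are illustrative names only):

```r
library(stringr)

# Made-up lines imitating the layout of the novels
toy_lines <- c(
  "SENSE AND SENSIBILITY",
  "",
  "CHAPTER 1",
  "The family of Dashwood had long been settled in Sussex.",
  "Chapter II",
  "chapter of accidents",
  "More text"
)

# TRUE only where a line starts with "chapter " followed by a digit or
# one of the Roman-numeral letters i, v, x, l, c (in either case)
is_heading <- str_detect(
  toy_lines,
  regex("^chapter [\\divxlc]", ignore_case = TRUE)
)
is_heading

# TRUE counts as 1, so the running total increases at each chapter heading
chapter <- cumsum(is_heading)
chapter
```

Lines before the first heading get chapter 0, which matches the front matter in the output further down. The pattern is a heuristic: an ordinary sentence beginning with, say, "chapter v..." would also be counted as a heading.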

Save to a new data frame with line number, text, and book.

austen_books() |>

group_by(book) |>

mutate(

linenumber = row_number(),

chapter = cumsum(str_detect(

text,

regex("^chapter [\\divxlc]",

ignore_case = TRUE

)

)),

.before = text

) |>

ungroup() |>

select(book, chapter, linenumber, text) ->

orig_books

head(orig_books)

# A tibble: 6 × 4

book chapter linenumber text

<fct> <int> <int> <chr>

1 Sense & Sensibility 0 1 "SENSE AND SENSIBILITY"

2 Sense & Sensibility 0 2 ""

3 Sense & Sensibility 0 3 "by Jane Austen"

4 Sense & Sensibility 0 4 ""

5 Sense & Sensibility 0 5 "(1811)"

6 Sense & Sensibility 0 6 ""

[1] 73422

[1] 729533

We can now see the book, chapter, and line number for each of the 73,422 text elements with almost 730K (non-unique) individual words.

15.5.2 Convert to Tidy Text Format, Clean, and Sort the Counts

- Unnest the text with the tokens being each word.

- Clean the words to remove any formatting characters.

- Project Gutenberg uses pairs of formatting characters, before and after a word, to denote bold or italics, e.g., “_myword_” means myword.

- We want to extract just the words without any formatting symbols.

- Remove stop words.

- Save to a new tibble.
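The cleaning step relies on str_extract() returning the first run of lowercase letters or apostrophes in each token. A quick sketch on a few hand-picked tokens (the vector tokens is made up for illustration) shows the effect:

```r
library(stringr)

# Hypothetical tokens: one wrapped in underscores, one contraction,
# one plain word, and one all-digit token
tokens <- c("_myword_", "don't", "abbey", "1811")

# Keep only the first run of lowercase letters and apostrophes
cleaned <- str_extract(tokens, "[a-z']+")
cleaned
```

Tokens with no letters at all, such as a year, become NA; since NA is not in stop_words, those rows survive the anti_join() unless they are filtered out separately.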

Look at the number of rows and the counts for each unique word.

orig_books |>

unnest_tokens(word, text) |> ## nrow() #725,055

## use str_extract to get just the words inside any format encoding

mutate(word = str_extract(word, "[a-z']+")) |>

anti_join(stop_words, by = "word") -> ## filter out words in stop_words

tidy_books

nrow(tidy_books)

[1] 216385

# A tibble: 13,464 × 2

word n

<chr> <int>

1 a'n't 1

2 abandoned 1

3 abashed 1

4 abate 2

5 abatement 4

6 abating 1

7 abbey 71

8 abbeyland 1

9 abbeys 2

10 abbots 1

# ℹ 13,454 more rows

[1] 13464

# A tibble: 13,464 × 2

word n

<chr> <int>

1 miss 1860

2 time 1339

3 fanny 862

4 dear 822

5 lady 819

6 sir 807

7 day 797

8 emma 787

9 sister 727

10 house 699

# ℹ 13,454 more rows

- There are 216,385 instances of 13,464 unique (non-stop word) words across the six books.

The data are now in tidy text format and ready to analyze!

15.5.3 Plot the Most Common Words

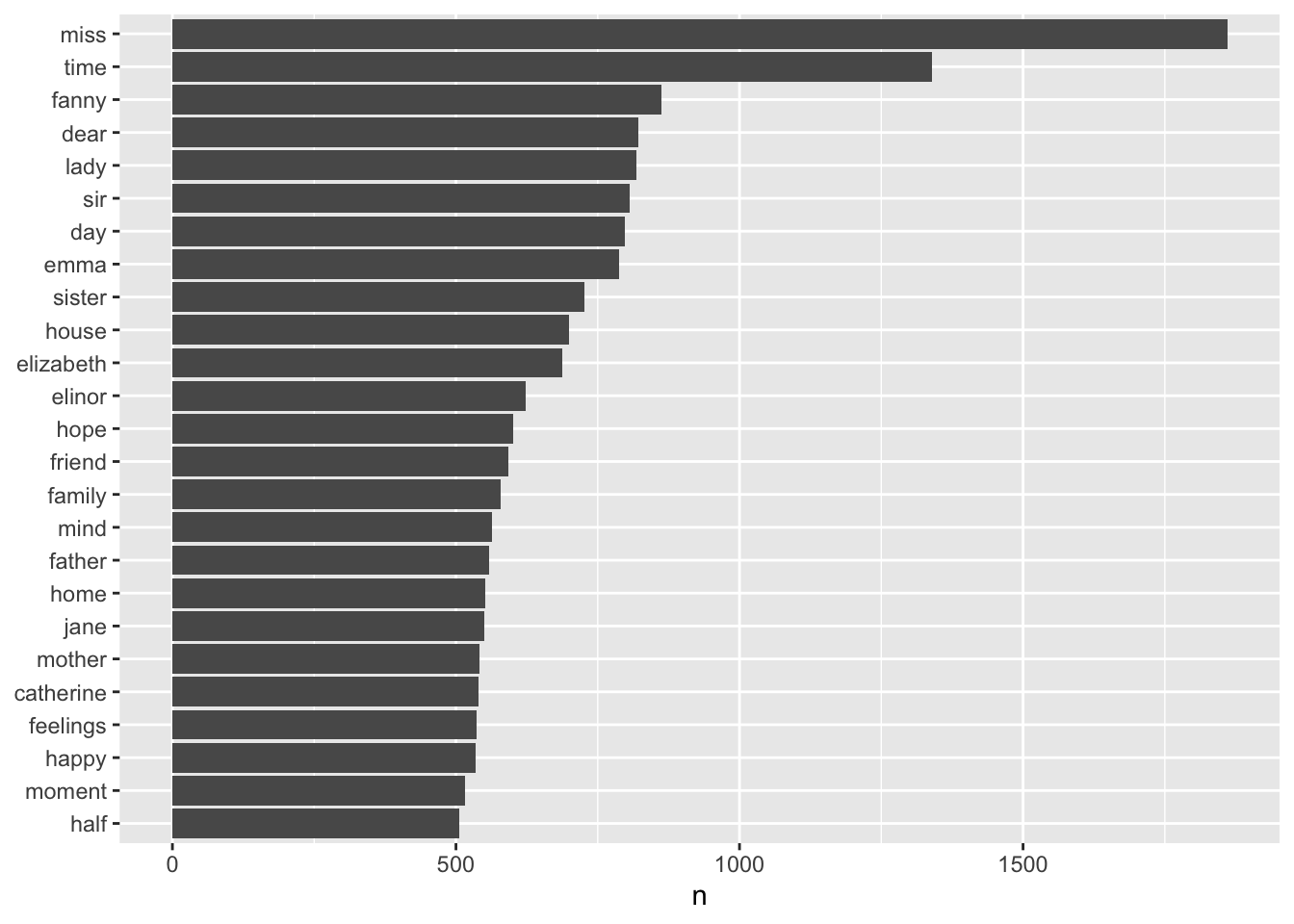

Let’s plot the “most common” words (defined for now as more than 500 occurrences) in descending order by count.

tidy_books |>

count(word, sort = TRUE) |>

filter(n > 500) |>

mutate(word = fct_reorder(word, n)) |>

ggplot(aes(word, n)) +

geom_col() +

xlab(NULL) +

coord_flip()

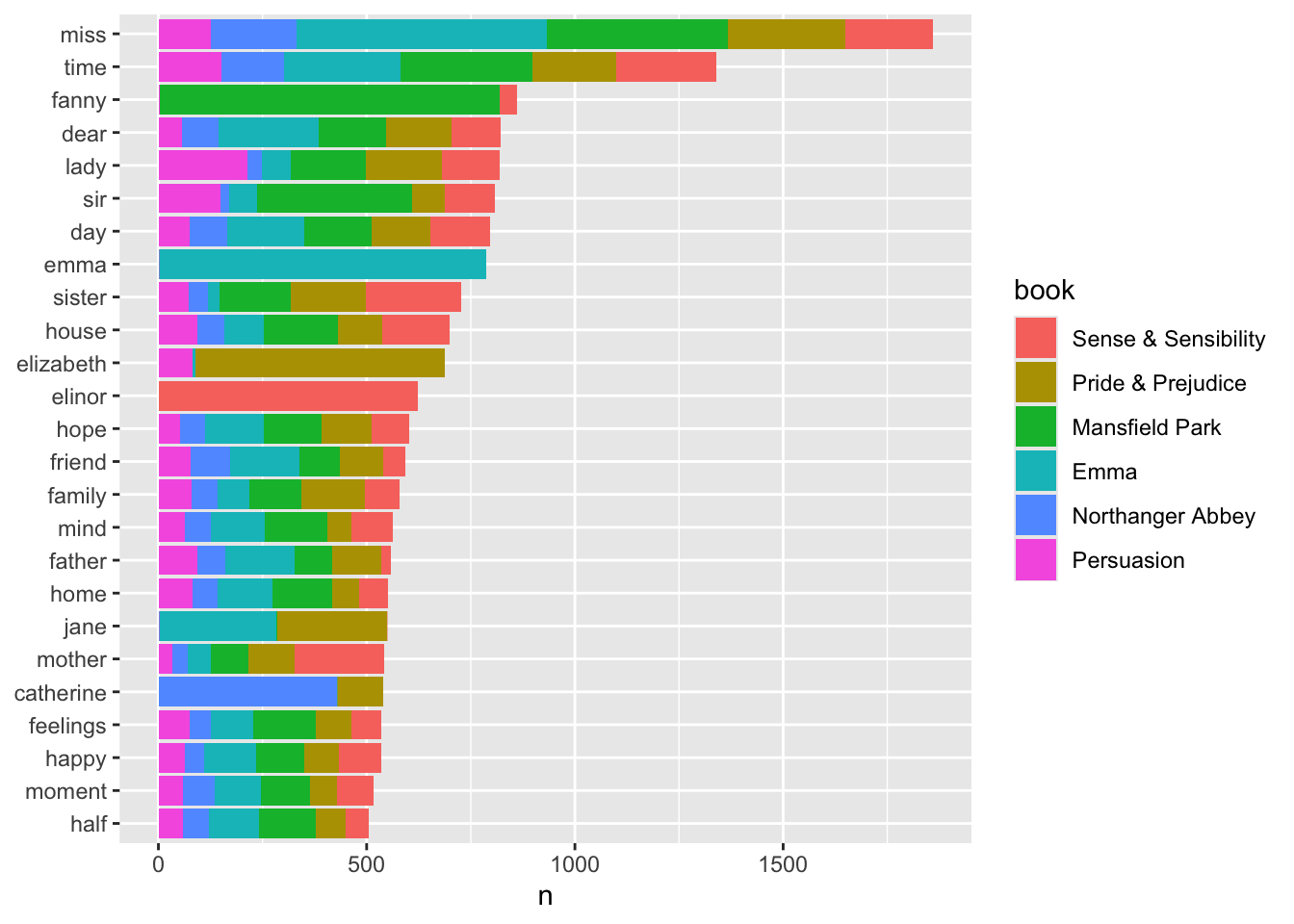

- Plot the most common words in descending order by count while using color to indicate the counts for each book.


tidy_books |>

group_by(book) |>

count(word, sort = TRUE) |>

group_by(word) |>

mutate(word_total = sum(n)) |>

ungroup() |>

filter(word_total > 500) |> ## 370

mutate(word = fct_reorder(word, word_total)) |>

ggplot(aes(word, n, fill = book)) +

geom_col() +

xlab(NULL) +

coord_flip() +

scale_fill_viridis_d(end = .9, direction = -1)

- Find the words that occur the most in each book but that do not occur in any other book.

- Hint: Consider using pivot_wider() to create a temporary data frame with the counts for each book.

- Then, check how many books a word does not appear in, and keep the words that are missing from five of the six books.

- Hint: consider using the magrittr pipe to be able to use the . pronoun.

- Then, pivot_longer() to get back to one column with the book names.


tidy_books |>

group_by(book) |>

count(word, sort = TRUE) |>

ungroup() |>

pivot_wider(names_from = book, values_from = n) %>% # view()

mutate(tot_books = is.na(.$`Mansfield Park`) +

is.na(.$`Sense & Sensibility`) +

is.na(.$`Pride & Prejudice`) +

is.na(.$`Emma`) +

is.na(.$`Northanger Abbey`) +

is.na(.$`Persuasion`)) |>

filter(tot_books == 5) |>

select(-tot_books) |>

pivot_longer(-word,

names_to = "book", values_to = "count",

values_drop_na = TRUE

) |>

group_by(book) |>

filter(count == max(count)) |>

arrange(desc(count))

# A tibble: 6 × 3

# Groups: book [6]

word book count

<chr> <chr> <int>

1 elinor Sense & Sensibility 623

2 crawford Mansfield Park 493

3 weston Emma 389

4 darcy Pride & Prejudice 374

5 elliot Persuasion 254

6 tilney Northanger Abbey 196

- How would you change your code if you did not know how many books there were or there were many books?


## Without knowing how many books or titles

tidy_books |>

group_by(book) |>

count(word, sort = TRUE) |>

ungroup() |>

pivot_wider(names_from = book, values_from = n) |>

mutate(across(where(is.numeric), is.na, .names = "na_{.col}")) |>

rowwise() |>

mutate(tot_books = sum(c_across(starts_with("na_")))) |>

ungroup() |> ## have to ungroup after rowwise

filter(tot_books == max(tot_books)) |>

select(!(starts_with("na_") | starts_with("tot"))) |>

pivot_longer(-word,

names_to = "book", values_to = "count",

values_drop_na = TRUE

) |>

group_by(book) |>

filter(count == max(count)) |>

arrange(desc(count))

# A tibble: 6 × 3

# Groups: book [6]

word book count

<chr> <chr> <int>

1 elinor Sense & Sensibility 623

2 crawford Mansfield Park 493

3 weston Emma 389

4 darcy Pride & Prejudice 374

5 elliot Persuasion 254

6 tilney Northanger Abbey 196

15.7 Sentiment Analysis

15.7.1 Overview

When humans read text, we use our understanding of the emotional intent of words to infer whether a section of text is positive or negative, or perhaps characterized by some other more nuanced emotion like surprise or disgust.

- Especially when authors are “showing not saying” the emotional context

Sentiment Analysis (also known as opinion mining) uses computer-based text analysis or other methods to identify, extract, quantify, and study affective states and subjective information from text.

- Commonly used by businesses to analyze customer comments on products or services.

The simplest approach: get the sentiment of each word as an individual token and add them up across a given block of text.

- This “bag of words” approach does not take into account word qualifiers or modifiers such as, in English, not, never, always, etc.

- If we were to add up the total positive and negative words across many paragraphs, the positive and negative words would tend to cancel each other out.

We are usually better off using tokens at either the sentence level, or by paragraph, and adding up positive and negative words at that level of aggregation.

This provides more context than the “bag of words” approach.
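The weakness with qualifiers is easy to demonstrate. The sketch below scores two made-up sentences against a tiny, hypothetical lexicon (toy_lexicon is invented for this example and is not one of the real sentiment lexicons); summing word-level values gives both sentences the same score even though one is negated.

```r
library(dplyr)
library(tibble)
library(tidytext)

# Hypothetical four-word lexicon, for illustration only
toy_lexicon <- tibble(
  word  = c("good", "happy", "bad", "sad"),
  value = c(1, 1, -1, -1)
)

# Two made-up sentences with opposite meanings
reviews <- tibble(
  id   = 1:2,
  text = c(
    "The food was good and I am happy",
    "The food was not good and I am not happy"
  )
)

# Bag of words: tokenize, keep lexicon words, and sum their values
scores <- reviews |>
  unnest_tokens(word, text) |>
  inner_join(toy_lexicon, by = "word") |>
  group_by(id) |>
  summarize(score = sum(value))
scores
```

Both sentences receive the same score because "not" is not in the lexicon and word order is ignored.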

15.7.2 Sentiment Lexicons Assign Sentiments to Words (based on “common” usage)

15.7.2.1 Why multiple lexicons?

There are several sentiment lexicons available for use in text analysis.

- Some are specific to a domain or application.

- Some focus on specific periods of time as words change meaning over time due to semantic drift or semantic change so comparing sentiments of documents from two different eras may require different sentiment lexicons.

- This is especially true for spoken or informal writing and over longer periods. See Semantic Changes in the English Language.

15.7.2.2 {tidytext} has functions to access three common lexicons in the {textdata} package

- bing, from Bing Liu and collaborators, assigns words as positive or negative.

- bing is also the sentiments data frame in tidytext.

- AFINN, from Finn Årup Nielsen, assigns words values from -5 to +5.

- nrc, from Saif Mohammad and Peter Turney, assigns words to one or more of ten categories: eight emotions plus positive and negative.

- Note: a word may have more than one sentiment and many do …

We usually just pick one of the three for a given analysis.

15.7.2.3 Accessing Sentiments in {tidytext}

We can use get_sentiments() to load the sentiment of interest.

Install the {textdata} package using the console and then load it with library(textdata).
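A minimal sketch of loading a lexicon follows. Only bing is actually loaded here because it ships with {tidytext}; the AFINN and nrc calls are left as comments since, as far as I know, {textdata} asks for confirmation and downloads them the first time they are requested.

```r
library(dplyr)
library(tidytext)

# bing is bundled with {tidytext}, so no download is needed
bing <- get_sentiments("bing")
head(bing, 10)

# How many words fall under each label?
bing |>
  count(sentiment)

# These two go through {textdata} and prompt for a download on first use:
# get_sentiments("afinn")
# get_sentiments("nrc")
```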

# A tibble: 10 × 2

word sentiment

<chr> <chr>

1 2-faces negative

2 abnormal negative

3 abolish negative

4 abominable negative

5 abominably negative

6 abominate negative

7 abomination negative

8 abort negative

9 aborted negative

10 aborts negative

# A tibble: 10 × 2

word sentiment

<chr> <chr>

1 immaculate positive

2 infringe negative

3 incessant negative

4 unusable negative

5 ho-hum negative

6 doubtless positive

7 obsessiveness negative

8 well-mannered positive

9 resounding positive

10 jovial positive

# A tibble: 10 × 2

word value

<chr> <dbl>

1 warm 1

2 strengthening 2

3 ability 2

4 disparaged -2

5 whore -4

6 bomb -1

7 disrespect -2

8 broken -1

9 pissing -3

10 tension -1

# A tibble: 10 × 2

word sentiment

<chr> <chr>

1 incurable fear

2 affront surprise

3 lovemaking joy

4 thoughtless negative

5 unhappy disgust

6 restrain anger

7 wretch disgust

8 dreadfully disgust

9 stealthily surprise

10 catastrophe anger

[1] "anger" "anticipation" "disgust" "fear" "joy"

[6] "negative" "positive" "sadness" "surprise" "trust"

get_sentiments("nrc") |>

group_by(word) |>

summarize(nums = n()) |>

filter(nums > 1) |>

nrow() / nrow(get_sentiments("nrc")) ## words with > 1 sentiment, as a share of all lexicon rows

[1] 0.2654268

# A tibble: 10 × 2

word sentiment

<chr> <chr>

1 feeling anger

2 feeling anticipation

3 feeling disgust

4 feeling fear

5 feeling joy

6 feeling negative

7 feeling positive

8 feeling sadness

9 feeling surprise

10 feeling trust

[1] 13872

[1] 6453

15.7.3 Example: Using nrc “Fear” Words

Since the nrc lexicon gives us emotions, we can look at just words labeled as “fear” if we choose.

Let’s get the Jane Austen books into tidy text format.

- No need to filter out the stop words as we will be filtering on select “fear” words which do not include stop words.

austen_books() |>

group_by(book) |>

mutate(

linenumber = row_number(),

chapter = cumsum(str_detect(

text,

regex("^chapter [\\divxlc]",

ignore_case = TRUE

)

))

) |>

ungroup() |>

## use `word` as the output so the inner_join will match with the nrc lexicon

unnest_tokens(output = word, input = text) ->

tidy_books

head(tidy_books)

# A tibble: 6 × 4

book linenumber chapter word

<fct> <int> <int> <chr>

1 Sense & Sensibility 1 0 sense

2 Sense & Sensibility 1 0 and

3 Sense & Sensibility 1 0 sensibility

4 Sense & Sensibility 3 0 by

5 Sense & Sensibility 3 0 jane

6 Sense & Sensibility 3 0 austen

Save only the “fear” words from the nrc lexicon into a new data frame.

Let’s look at just Emma and use an inner_join() to select only those rows in both Emma and the nrc “fear” data frame.

Then let’s count the number of occurrences of the “fear” words in Emma.

get_sentiments("nrc") |>

filter(sentiment == "fear") ->

nrcfear

tidy_books |>

filter(book == "Emma") |>

inner_join(nrcfear, by = "word",

relationship = "many-to-many") |>

count(word, sort = TRUE)

# A tibble: 364 × 2

word n

<chr> <int>

1 doubt 98

2 ill 72

3 afraid 65

4 marry 63

5 change 61

6 bad 60

7 feeling 56

8 bear 52

9 creature 39

10 obliging 34

# ℹ 354 more rows

Looking at the words, it is not always clear why a word is a “fear” word; remember that words may have multiple sentiments associated with them in the lexicon.

How many words are associated with the other sentiments in nrc?

# A tibble: 10 × 2

# Groups: sentiment [10]

sentiment n

<chr> <int>

1 anger 1245

2 anticipation 837

3 disgust 1056

4 fear 1474

5 joy 687

6 negative 3316

7 positive 2308

8 sadness 1187

9 surprise 532

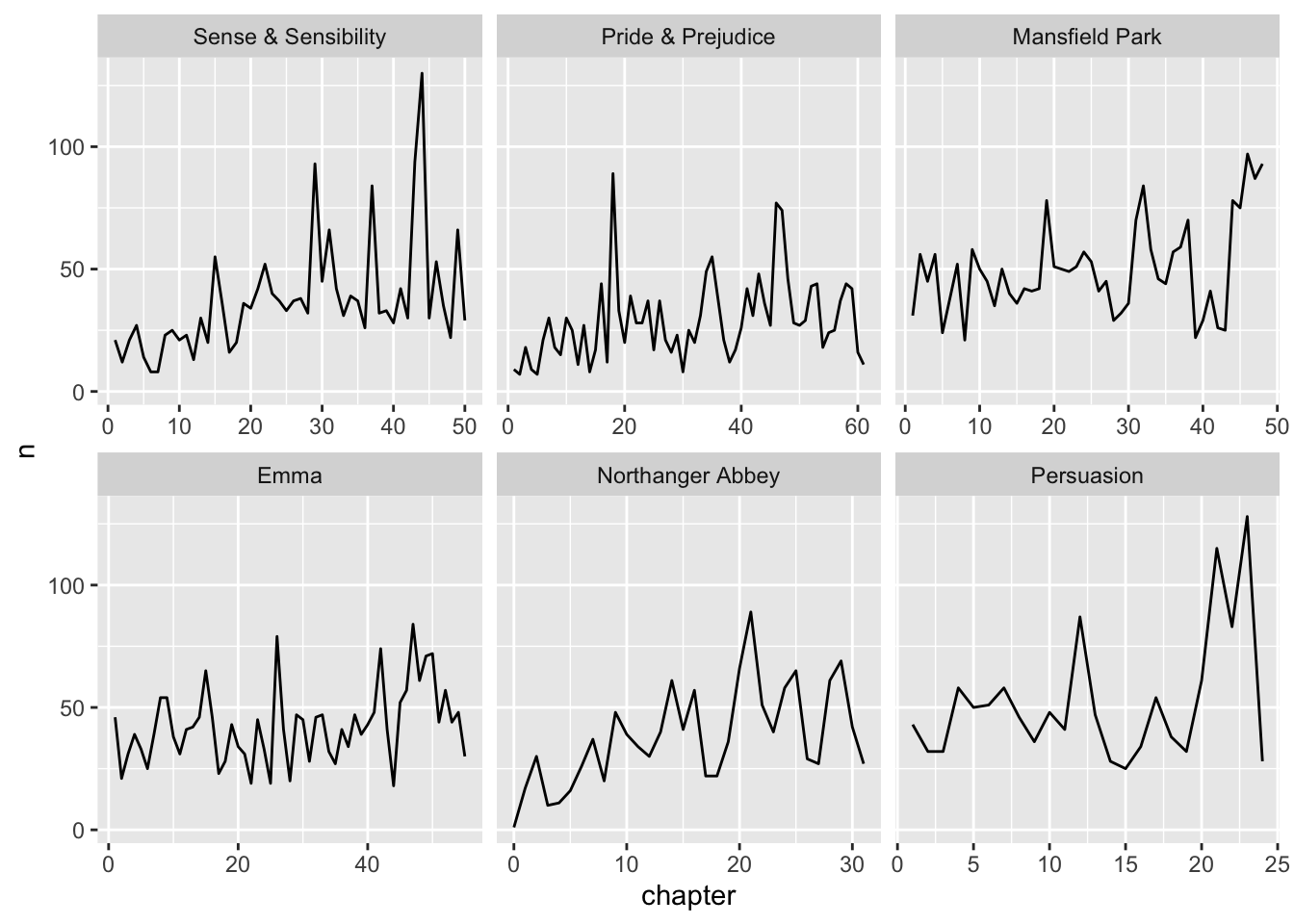

10 trust 1230Plot the number of fear words in each chapter for each Jane Austen book.

- Consider using

scales = "free_x"infacet_wrap().


# A tibble: 6 × 3

# Groups: book, chapter [6]

book chapter n

<fct> <int> <int>

1 Sense & Sensibility 1 21

2 Sense & Sensibility 2 12

3 Sense & Sensibility 3 21

4 Sense & Sensibility 4 27

5 Sense & Sensibility 5 14

6 Sense & Sensibility 6 8

15.7.3.1 Looking at Larger Blocks of Text for Positive and Negative

Let’s break up tidy_books into larger blocks of text, say 80 lines long.

We can use the bing lexicon (either positive or negative) to categorize each word within a block.

- Recall, the words in tidy_books are in sequential order by line number.

Steps

- Use inner_join() to filter out words in tidy_books not in bing while adding the sentiment column from bing.

- Use count(), and inside the call, create an index variable for the 80-line block of text source for the word while keeping the book and sentiment variables.

- Use index = linenumber %/% 80.

- Note, most blocks will have far fewer than 80 words since we are only keeping the words that are in bing.

- Use pivot_wider() on sentiment to get the positive and negative word counts in separate columns and set missing values to 0 with the values_fill argument.

- Add a column with the difference in overall block sentiment with net = positive - negative.

- Plot the net sentiment across each block and facet by book.

- Use scales = "free_x" since the books are of different lengths.
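Integer division is what turns line numbers into block indices. A tiny worked sketch with hand-picked line numbers (the vector below is illustrative, not taken from the books):

```r
# Hand-picked line numbers around the block boundaries
linenumber <- c(1, 79, 80, 159, 160, 161)

# %/% drops the remainder, so lines 1-79 map to block 0,
# lines 80-159 to block 1, lines 160-239 to block 2, and so on
index <- linenumber %/% 80
index
```

Because line numbers start at 1, block 0 holds 79 lines while every later full block holds 80; that is immaterial for a plot at this scale.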

tidy_books |>

inner_join(get_sentiments("bing"), by = "word",

relationship = "many-to-many") |>

count(book, index = linenumber %/% 80, sentiment) |>

pivot_wider(

names_from = sentiment, values_from = n,

values_fill = list(n = 0)

) |>

mutate(net = positive - negative) ->

janeaustensentiment

janeaustensentiment |>

ggplot(aes(index, net, fill = book)) +

geom_col(show.legend = FALSE) +

facet_wrap(~book, ncol = 2, scales = "free_x") +

scale_fill_viridis_d(end = .9)

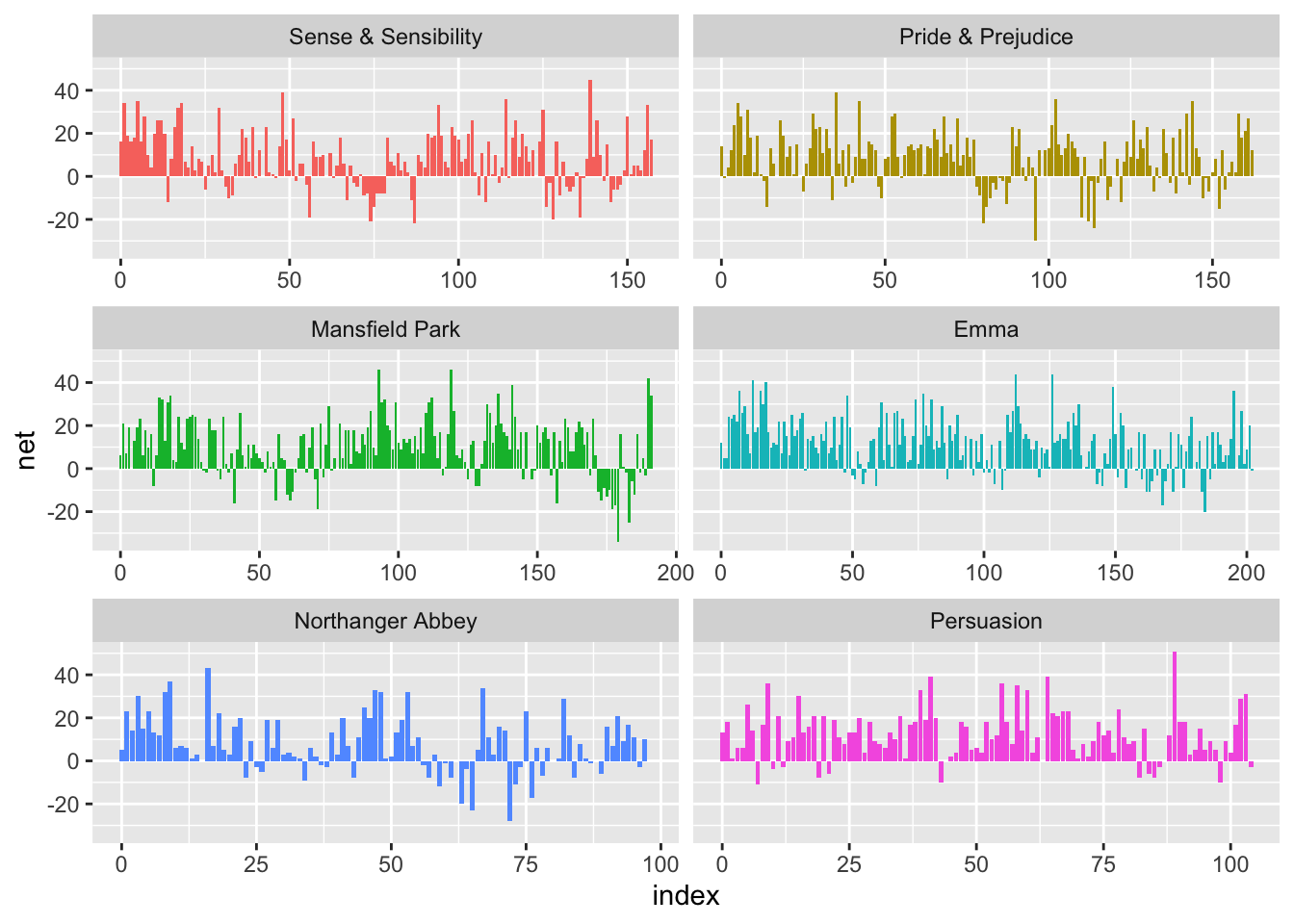

We can see the books differ in the number and placement of positive versus negative blocks.

15.7.4 Adjusting Sentiment Lexicons

Consider the Genre/Context for the Sentiment Words. Do they mean what they mean?

- These are modern lexicons applied to 200-year-old books.

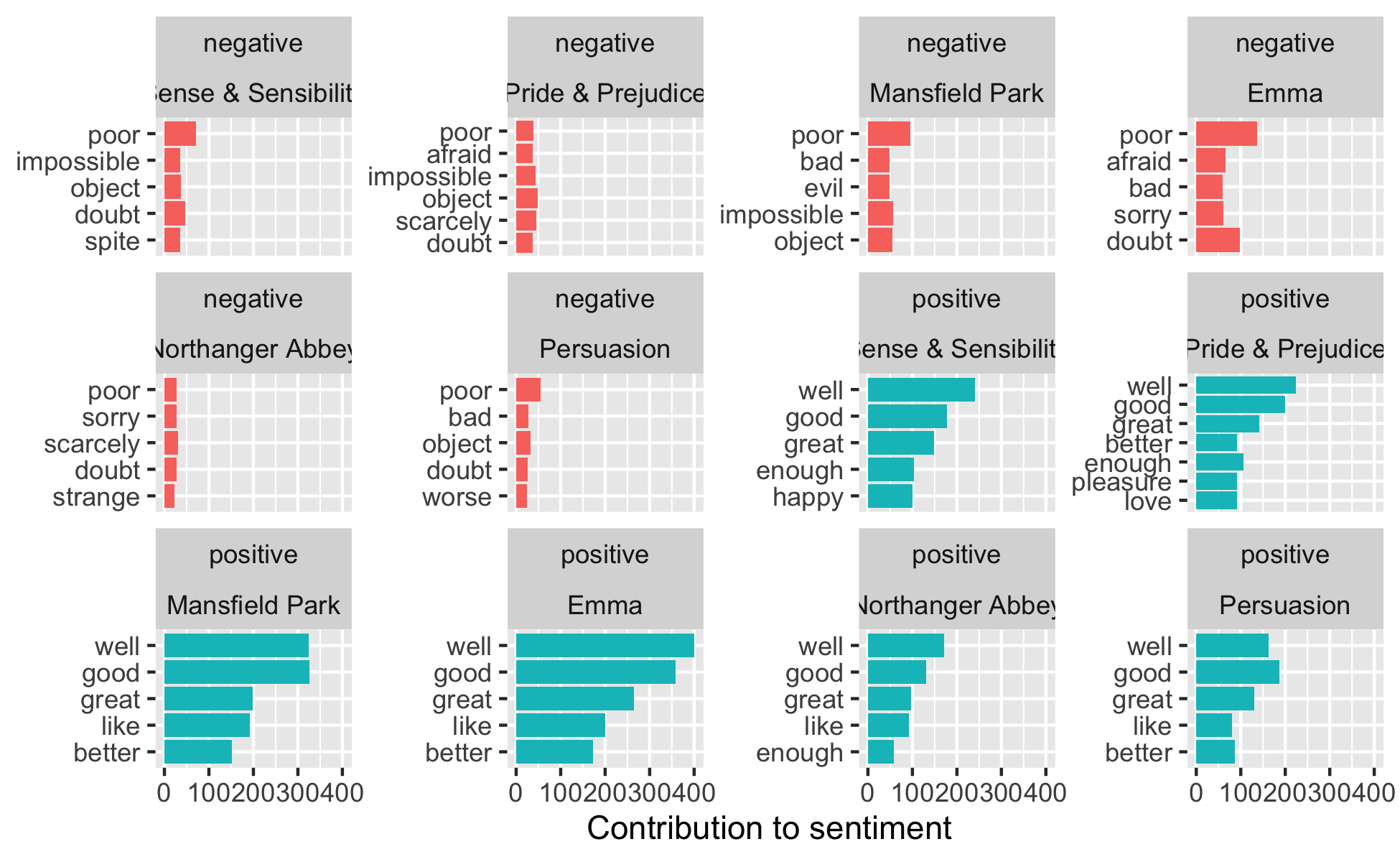

We should probably look at which words contribute most to the positive and negative sentiment and be sure we want to include them as part of the sentiment.

Let’s get the count of the most common words and their sentiment.

tidy_books |>

inner_join(get_sentiments("bing"), by = "word",

relationship = "many-to-many") |>

count(word, sentiment, sort = TRUE) ->

bing_word_counts

bing_word_counts

# A tibble: 2,585 × 3

word sentiment n

<chr> <chr> <int>

1 miss negative 1855

2 well positive 1523

3 good positive 1380

4 great positive 981

5 like positive 725

6 better positive 639

7 enough positive 613

8 happy positive 534

9 love positive 495

10 pleasure positive 462

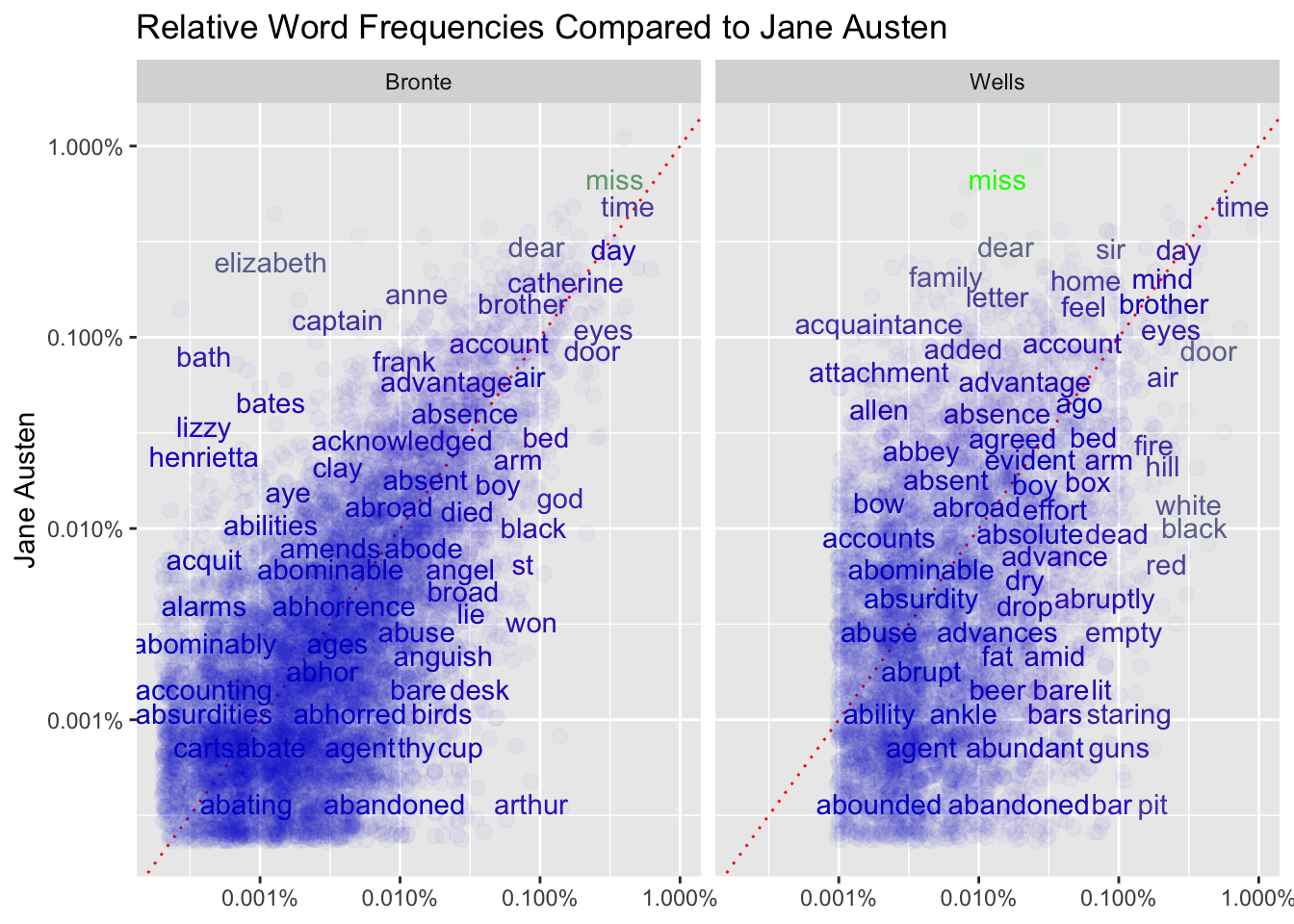

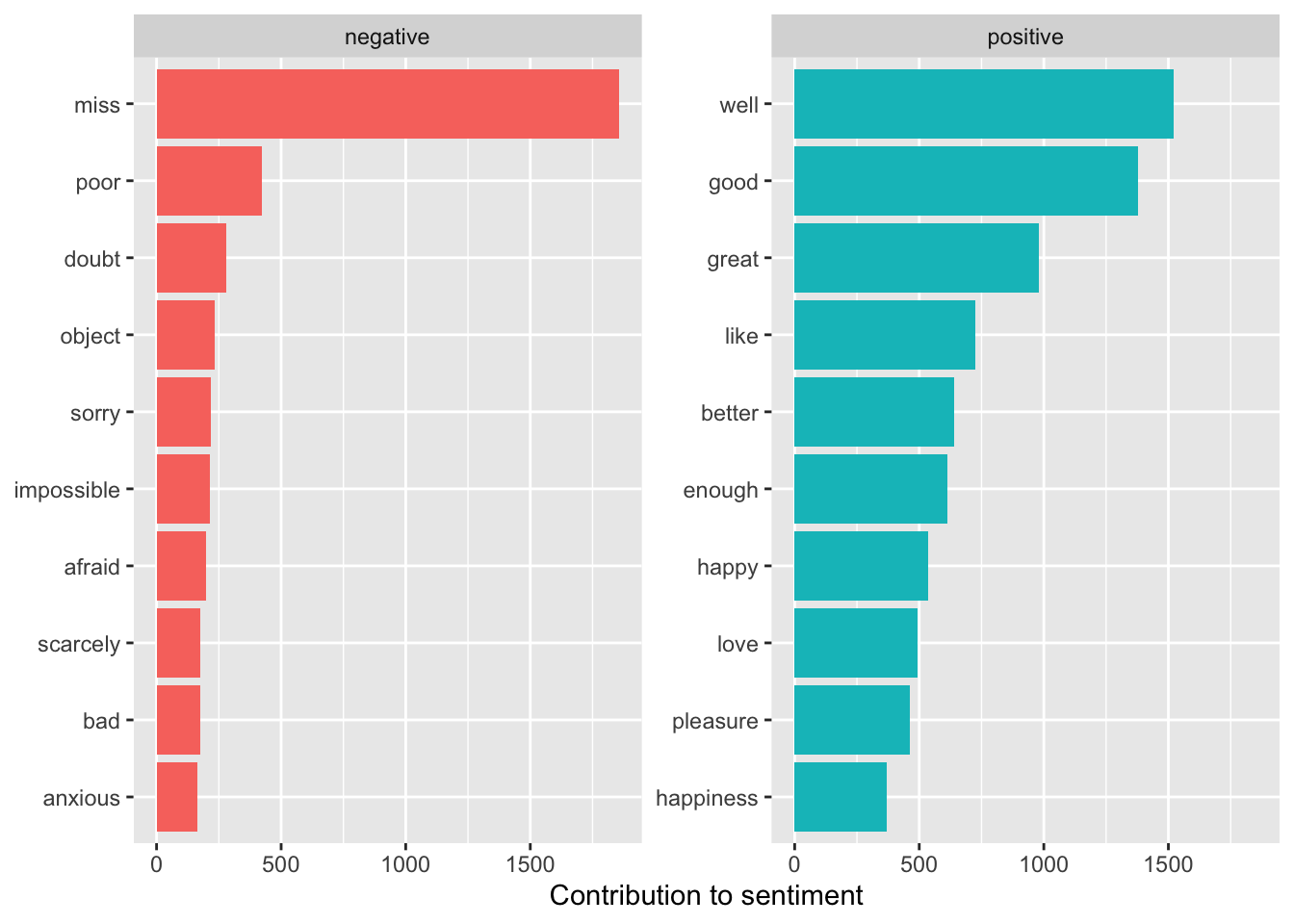

# ℹ 2,575 more rowsWe can see “miss” might not be a good fit to consider as a negative word given the context/genre.

Let’s plot the top ten, in order, for each sentiment.

bing_word_counts |>

group_by(sentiment) |>

slice_max(order_by = n, n = 10) |>

ungroup() |>

mutate(word = fct_reorder(word, n)) |>

ggplot(aes(word, n, fill = sentiment)) +

geom_col(show.legend = FALSE) +

facet_wrap(~sentiment, scales = "free_y") +

labs(y = "Contribution to sentiment", x = NULL) +

coord_flip() +

scale_fill_viridis_d(end = .9)

Something seems “amiss” for Jane Austen novels! “Miss” is probably not a negative word, but rather refers to a young woman.

15.7.4.1 Adjusting an Improper Sentiment: Two Approaches

- Take the word “miss” out of the data before doing the analysis (add to the stop words), or,

- Change the sentiment lexicon to no longer have “miss” as a negative.

15.7.4.1.1 Approach 1

Remove “miss” from the text by adding to the stop words data frame and repeating the analysis.

# A tibble: 2 × 2

word lexicon

<chr> <chr>

1 a SMART

2 a's SMART

custom_stop_words <- bind_rows(

tibble(word = c("miss"), lexicon = c("custom")),

stop_words

)

## SMART is another lexicon

head(custom_stop_words)

# A tibble: 6 × 2

word lexicon

<chr> <chr>

1 miss custom

2 a SMART

3 a's SMART

4 able SMART

5 about SMART

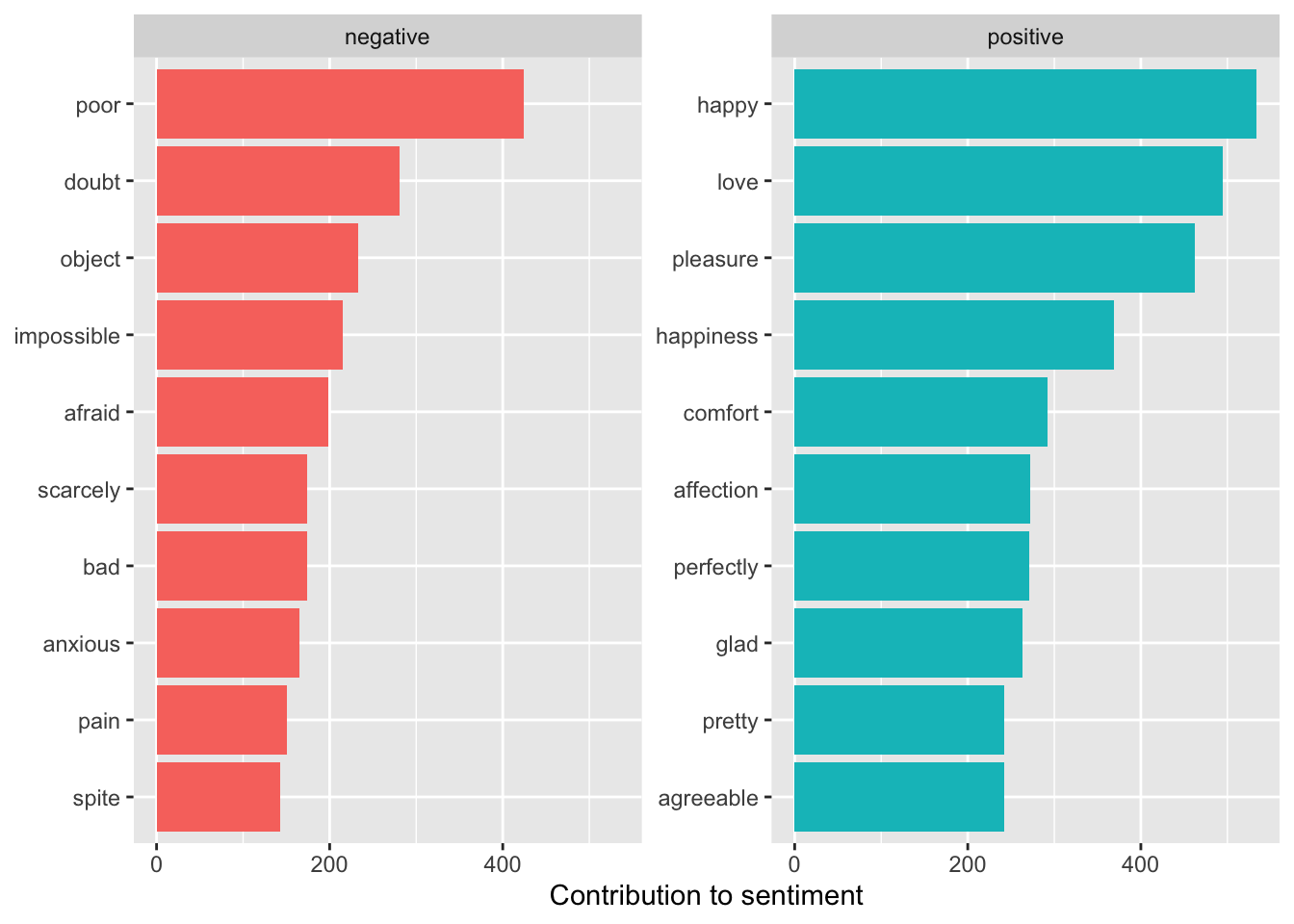

6 above SMART - Now let’s redo the analysis with the new stop words.

austen_books() |>

group_by(book) |>

mutate(

linenumber = row_number(),

chapter = cumsum(str_detect(

text,

regex("^chapter [\\divxlc]",

ignore_case = TRUE

)

))

) |>

ungroup() |>

## use word so the inner_join will match with the bing lexicon

unnest_tokens(word, text) |>

anti_join(custom_stop_words, by = "word") ->

tidy_books_no_miss

tidy_books_no_miss |>

inner_join(get_sentiments("bing"), by = "word",

relationship = "many-to-many") |>

count(word, sentiment, sort = TRUE) ->

bing_word_counts

head(bing_word_counts)

# A tibble: 6 × 3

word sentiment n

<chr> <chr> <int>

1 happy positive 534

2 love positive 495

3 pleasure positive 462

4 poor negative 424

5 happiness positive 369

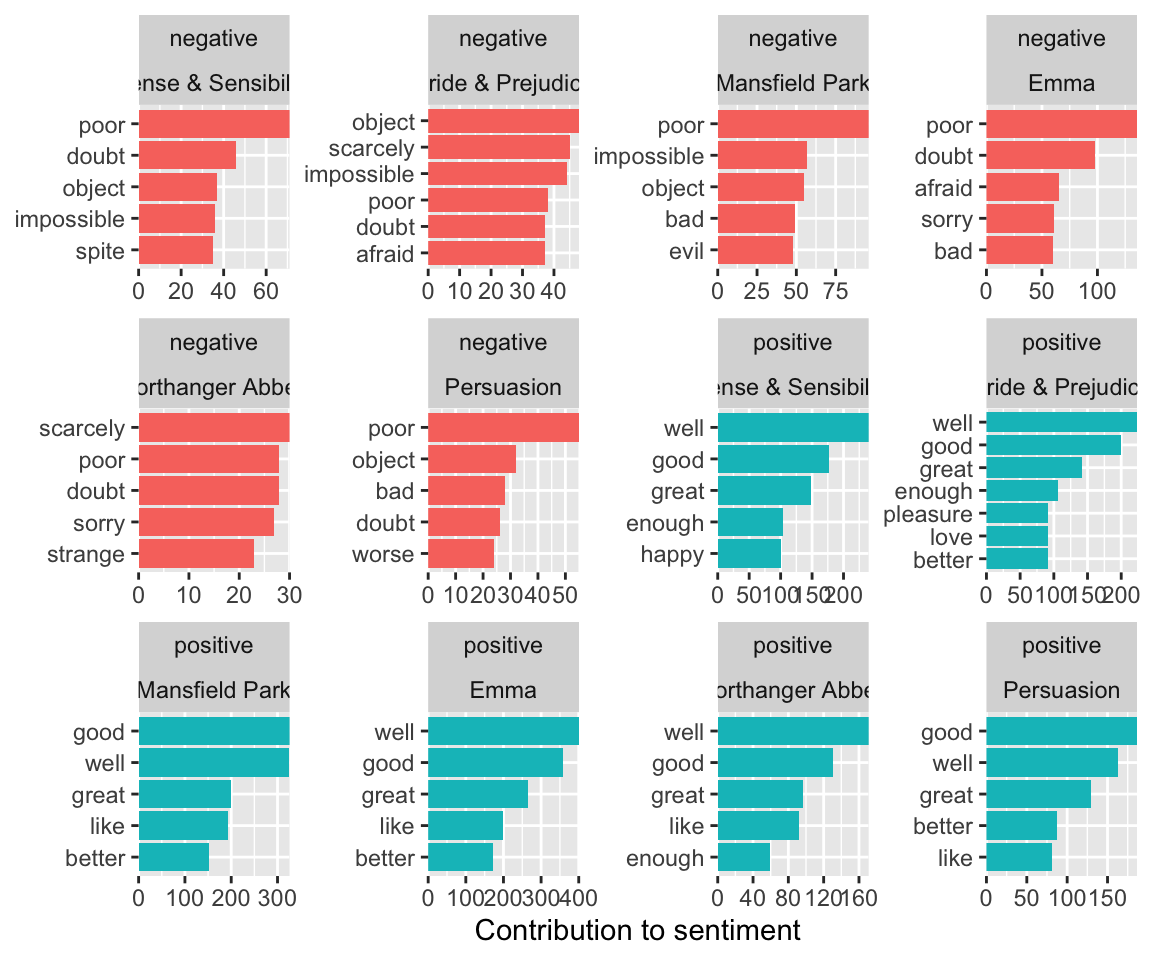

6 comfort positive 292bing_word_counts |>

group_by(sentiment) |>

slice_max(order_by = n, n = 10) |>

ungroup() |>

mutate(word = fct_reorder(word, n)) |>

ggplot(aes(word, n, fill = sentiment)) +

geom_col(show.legend = FALSE) +

facet_wrap(~sentiment, scales = "free_y") +

labs(y = "Contribution to sentiment", x = NULL) +

coord_flip() +

scale_fill_viridis_d(end = .9)

15.7.4.1.2 Approach 2

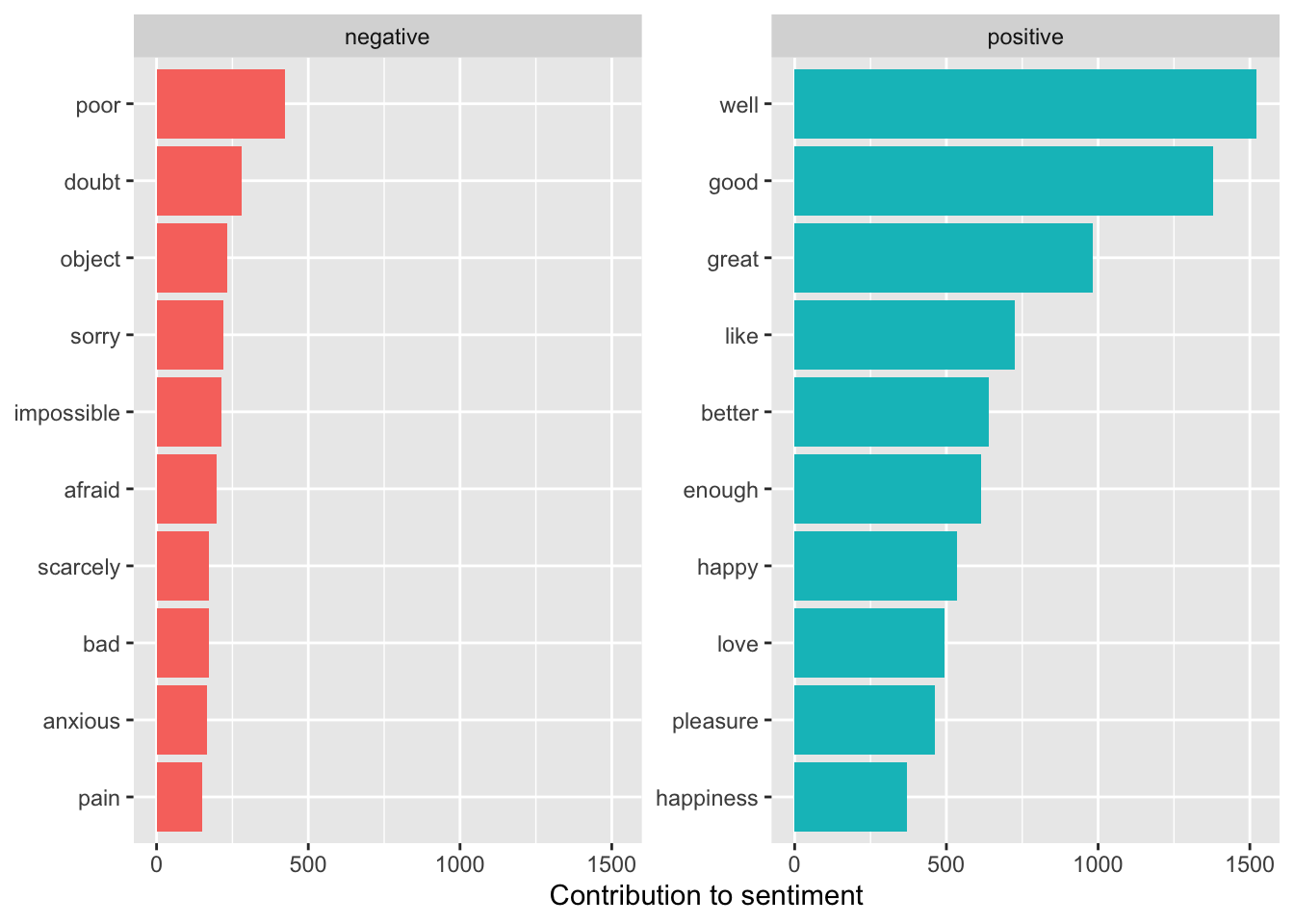

Remove the word “miss” from the bing sentiment lexicon.
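The code that builds the adjusted lexicon is not shown, yet later code relies on an object named bing_no_miss. One plausible way to create it, assuming a simple filter on the word column is all that is intended:

```r
library(dplyr)
library(tidytext)

# Drop the row for "miss" from the bing lexicon; everything else is unchanged
bing_no_miss <- get_sentiments("bing") |>
  filter(word != "miss")

# Confirm that "miss" is gone
"miss" %in% bing_no_miss$word
```

Unlike Approach 1, this leaves the stop words in the text, so common sentiment words such as “well” and “good” remain in the counts.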

Redo the Analysis from the beginning:

tidy_books |>

inner_join(bing_no_miss, by = "word",

relationship = "many-to-many") |>

count(word, sentiment, sort = TRUE) ->

bing_word_counts

bing_word_counts

# A tibble: 2,584 × 3

word sentiment n

<chr> <chr> <int>

1 well positive 1523

2 good positive 1380

3 great positive 981

4 like positive 725

5 better positive 639

6 enough positive 613

7 happy positive 534

8 love positive 495

9 pleasure positive 462

10 poor negative 424

# ℹ 2,574 more rows

## visualize it

bing_word_counts |>

group_by(sentiment) |>

slice_max(order_by = n, n = 10) |>

ungroup() |>

mutate(word = fct_reorder(word, n)) |> #

ggplot(aes(word, n, fill = sentiment)) +

geom_col(show.legend = FALSE) +

facet_wrap(~sentiment, scales = "free_y") +

labs(

y = "Contribution to sentiment",

x = NULL

) +

coord_flip() +

scale_fill_viridis_d(end = .9)

15.7.4.2 Repeat the Plot by Chapter

Original and “No Miss” plots.

- We’ll use the {patchwork} package to put them side by side.

library(patchwork)

## Original

tidy_books |>

inner_join(get_sentiments("bing"), by = "word",

relationship = "many-to-many") |>

count(book, index = linenumber %/% 80, sentiment) |>

pivot_wider(

names_from = sentiment, values_from = n,

values_fill = list(n = 0)

) |>

mutate(net = positive - negative) ->

janeaustensentiment

# No Miss

tidy_books |>

inner_join(bing_no_miss, by = "word",

relationship = "many-to-many") |>

count(book, index = linenumber %/% 80, sentiment) |>

pivot_wider(

names_from = sentiment, values_from = n,

values_fill = list(n = 0)

) |>

mutate(net = positive - negative) ->

janeaustensentiment2

janeaustensentiment |>

ggplot(aes(index, net, fill = book)) +

geom_col(show.legend = FALSE) +

ggtitle("With Miss as Negative") +

scale_fill_viridis_d(end = .9) +

facet_wrap(~book, ncol = 2, scales = "free_x") -> p1

janeaustensentiment2 |>

ggplot(aes(index, net, fill = book)) +

geom_col(show.legend = FALSE) +

ggtitle("Without Miss as Negative") +

scale_fill_viridis_d(end = .9) +

facet_wrap(~book, ncol = 2, scales = "free_x") -> p2

p1 + p2

Compare the average net difference in sentiment in the two cases.

janeaustensentiment |>

summarize(means = mean(net, na.rm = TRUE)) |>

bind_rows(

(janeaustensentiment2 |>

summarize(means = mean(net, na.rm = TRUE))

)

)

# A tibble: 2 × 1

means

<dbl>

1 9.71

2 11.7

- Notice some minor variations in several places (Emma - block 110), and the average net sentiment is about 2 points more positive once “miss” is removed.

We have used a bag of words sentiment analysis and a larger block of text (80 lines) to characterize Jane Austen’s books.

We have also adjusted the lexicon to remove words that appeared inappropriate for the context/genre.

15.7.5 {tidytext} Plotting Functions for Ordering within Facets

- See Julia Silge’s blog.

We were able to reorder the words above when we were just faceting by sentiment.

If we wanted to see the top five words by book and sentiment, instead of just overall across books, we could count by both book and sentiment and then facet on both.

tidy_books |>

inner_join(bing_no_miss, by = "word",

relationship = "many-to-many") |>

count(word, sentiment, book, sort = TRUE) ->

bing_word_counts

head(bing_word_counts)

# A tibble: 6 × 4

word sentiment book n

<chr> <chr> <fct> <int>

1 well positive Emma 401

2 good positive Emma 359

3 good positive Mansfield Park 326

4 well positive Mansfield Park 324

5 great positive Emma 264

6 well positive Sense & Sensibility 240

## visualize it

bing_word_counts |>

group_by(book, sentiment) |>

slice_max(order_by = n, n = 5) |>

ungroup() |>

mutate(word = fct_reorder(parse_factor(word), n)) |>

ggplot(aes(word, n, fill = sentiment)) +

geom_col(show.legend = FALSE) +

facet_wrap(sentiment ~ book, scales = "free_y") +

labs(

y = "Contribution to sentiment",

x = NULL

) +

coord_flip() +

scale_fill_viridis_d(end = .9)

Notice the words are now different for each book but are all in the same order, without regard to how often they appear in each book.

- All the scales are the same so the negative words are compressed compared to the more common positive words.

There are two new functions in the {tidytext} package to create a different look.

- reorder_within(), inside a mutate(), allows you to reorder each word by the faceted book and sentiment based on the count.

- scale_x_reordered() will then update the x axis to accommodate the new orders.

Use scales = "free" inside the facet_wrap() to allow both x and y scales to vary for each part of the facet.

bing_word_counts |>

group_by(book, sentiment) |>

slice_max(order_by = n, n = 5) |>

ungroup() |>

mutate(word = reorder_within(word, n, book)) |>

ggplot(aes(word, n, fill = sentiment)) +

geom_col(show.legend = FALSE) +

facet_wrap(sentiment ~ book, scales = "free") +

scale_x_reordered() +

scale_y_continuous(expand = c(0, 0)) +

labs(

y = "Contribution to sentiment",

x = NULL

) +

coord_flip() +

scale_fill_viridis_d(end = .9)

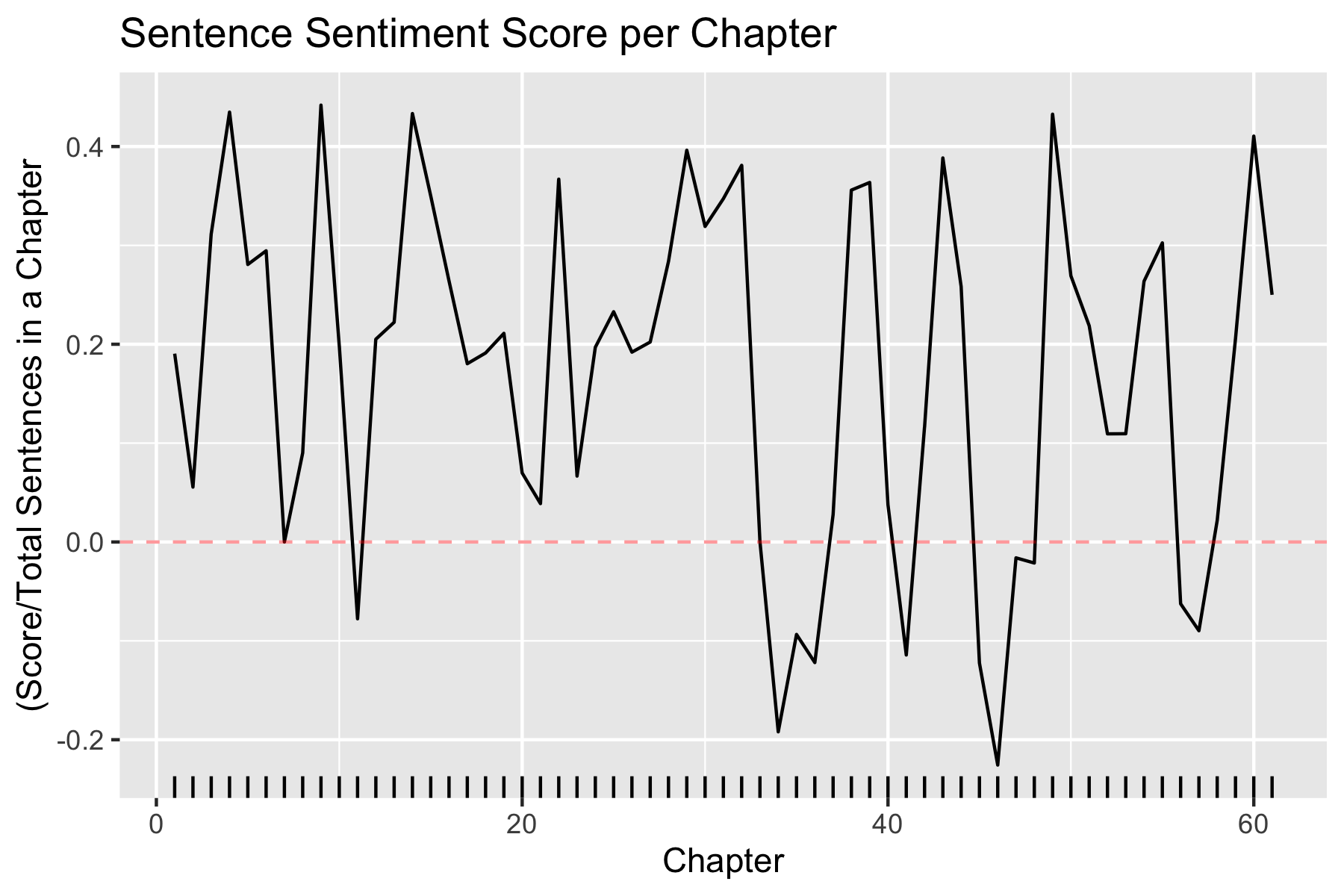
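To see what reorder_within() and scale_x_reordered() do in isolation, here is a small sketch on made-up counts. The tibble toy and its column names are hypothetical and are not part of the Austen data.

```r
library(dplyr)
library(ggplot2)
library(tidytext)

# Hypothetical counts: the same three words ranked differently in two groups
toy <- tibble(
  group = c("A", "A", "A", "B", "B", "B"),
  word  = c("good", "well", "great", "good", "well", "great"),
  n     = c(30, 20, 10, 5, 25, 15)
)

# reorder_within() pastes the group onto each word (separator "___")
# and orders the resulting factor levels by n
toy |>
  mutate(word_in_group = reorder_within(word, n, group)) ->
toy_ordered

levels(toy_ordered$word_in_group)

# scale_x_reordered() strips the suffix again when drawing the axis labels
toy_ordered |>
  ggplot(aes(word_in_group, n)) +
  geom_col() +
  facet_wrap(~group, scales = "free_y") +
  scale_x_reordered() +
  coord_flip()
```

Because each word and group combination becomes its own factor level, each facet can show its words in its own order.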

15.7.6 Analyzing Sentences and Chapters

The sentiment analysis we just did was based on single words, so it did not consider modifiers such as “not”, which tend to flip the meaning.

15.7.6.1 Example: Sentence Level

Consider the data set prideprejudice which has the complete text divided into elements of up to about 70 characters each.

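If the unit for tokenizing is ngrams, skip_ngrams, sentences, lines, paragraphs, or regex, unnest_tokens() can collapse the input across rows before tokenizing, depending on the collapse argument. In older versions of {tidytext}, the entire input was collapsed together unless collapse = FALSE. The package documentation notes that as of version 0.2.7 the default collapse = NULL no longer collapses text, so check ?unnest_tokens for your installed version.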

Let’s add a chapter variable and also add a period after the chapter number in each heading.

unnest_tokens() separates sentences at periods, so as a small cleanup we will remove the periods after Mr., Mrs., and Dr., in addition to separating the chapter headings.

tibble(text = prideprejudice) |>

mutate(

chapter = cumsum(str_detect(

text,

regex("^chapter [\\divxlc]", ignore_case = TRUE)

)),

text = str_replace(text, "(Chapter \\d+)", "\\1\\."),

text = str_replace_all(text, "((Mr)|(Mrs)|(Dr))\\.", "\\1")

) |>

unnest_tokens(sentence, text, token = "sentences") ->

PandP_sentences

Now we have our tokens as “complete” sentences. We have more cleaning and reshaping to do.

- Let’s add sentence numbers and unnest at the “word” level.

- Add sentiments using bing.

- We can get rid of the cover page (Chapter 0).

- We’ll count() the number of positive and negative words per sentence.

- As before, we will pivot_wider() to break out the sentiments.

- Now we can use case_when() to create a score for each sentence:

- 1 for more positive words than negative,

- 0 for same numbers of positive and negative, and,

- -1 for more negative words than positive in the sentence.

- Finally, let’s summarize by chapter as an average score per sentence (the total score divided by the number of scored sentences in the chapter; sentences with no bing words are dropped by the inner join).

Now we can create a line plot of sentiment score by chapter to see a view of the story arc.

PandP_sentences |>

mutate(sentence_number = row_number()) |>

unnest_tokens(word, sentence) |>

inner_join(get_sentiments("bing"), by = "word",

relationship = "many-to-many") |>

filter(chapter > 0) |>

count(chapter, sentence_number, sentiment) |> ## view()

pivot_wider(

names_from = sentiment, values_from = n,

values_fill = list(n = 0)

) |> # view()

mutate(sentence_sent = positive - negative) |>

mutate(sentence_sent = case_when(

sentence_sent > 0 ~ 1,

sentence_sent == 0 ~ 0,

sentence_sent < 0 ~ -1

)) |>

group_by(chapter) |>

summarize(

chap_sent_per = sum(sentence_sent) / n(),

.groups = "keep"

) |> # view()

ggplot(aes(chapter, chap_sent_per)) +

geom_line() +

ggtitle("Sentence Sentiment Score per Chapter") +

ylab("Score / Total Sentences in a Chapter") +

xlab("Chapter") +

geom_hline(yintercept = 0, color = "red", alpha = .4, lty = 2) +

scale_x_continuous(limits = c(1, 61)) +

geom_rug(sides = "b")

- Chapter 46 appears to be the low point.
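The sentence scoring can be checked on a few made-up sentences. This is a sketch only: toy_sentences is invented for illustration, and sign() is swapped in for the case_when() step because it returns the same three values (1, 0, -1).

```r
library(dplyr)
library(tidyr)
library(tidytext)

# Hypothetical sentences, invented for illustration only
toy_sentences <- tibble(
  sentence_number = 1:3,
  sentence = c(
    "I am happy and the day is good.",
    "This is terrible and bad.",
    "A happy but terrible day."
  )
)

toy_sentences |>
  unnest_tokens(word, sentence) |>
  inner_join(get_sentiments("bing"), by = "word") |>
  count(sentence_number, sentiment) |>
  pivot_wider(
    names_from = sentiment, values_from = n,
    values_fill = 0
  ) |>
  # sign() collapses the net count to 1, 0, or -1
  mutate(score = sign(positive - negative)) ->
toy_scores

toy_scores
```

A sentence with no lexicon words would not appear in toy_scores at all, which is why the chapter averages are taken over scored sentences only.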

15.7.6.2 Example: Chapter Level

Consider all the Austen books.

- Look for the most negative chapter in each book, based on the proportion of negative words in the chapter.

- Take out the word “miss”.

get_sentiments("bing") |>

filter(sentiment == "negative") |>

filter(word != "miss") ->

bingnegative

tidy_books |>

group_by(book, chapter) |>

summarize(words = n(), .groups = "drop") ->

wordcounts

tidy_books |>

semi_join(bingnegative, by = "word") |>

group_by(book, chapter) |>

summarize(negativewords = n(), .groups = "drop") |>

left_join(wordcounts, by = c("book", "chapter")) |>

mutate(ratio = negativewords / words) |>

filter(chapter != 0) |>

ungroup() |>

group_by(book) |>

slice_max(order_by = ratio) |>

ungroup()

# A tibble: 6 × 5

book chapter negativewords words ratio

<fct> <int> <int> <int> <dbl>

1 Sense & Sensibility 43 156 3405 0.0458

2 Pride & Prejudice 34 111 2104 0.0528

3 Mansfield Park 46 161 3685 0.0437

4 Emma 16 81 1894 0.0428

5 Northanger Abbey 21 143 2982 0.0480

6 Persuasion 4 62 1807 0.0343

These are the chapters with the most negative words in each book, normalized for the number of words in the chapter.

What is happening in these chapters?

- In Chapter 43 of Sense and Sensibility Marianne is seriously ill, near death.

- In Chapter 34 of Pride and Prejudice, Mr. Darcy proposes for the first time (so badly!).

- In Chapter 46 of Mansfield Park, almost the end, everyone learns of Henry’s scandalous adultery.

- In Chapter 16 of Emma, she is back at Hartfield after her ride with Mr. Elton, and Emma plunges into self-recrimination as she looks back over the past weeks.

- In Chapter 21 of Northanger Abbey, Catherine is deep in her Gothic faux-fantasy of murder, etc.

- In Chapter 4 of Persuasion, the reader gets the full flashback of Anne refusing Captain Wentworth, sees how sad she was, and learns that she now realizes it was a terrible mistake.

We have seen multiple ways to use sentiment analysis in single words and large blocks of text to analyze the flow of sentiment within and across large works of text.

The same concepts and techniques can work with analyzing Reddit comments, tweets, Yelp reviews, etc.

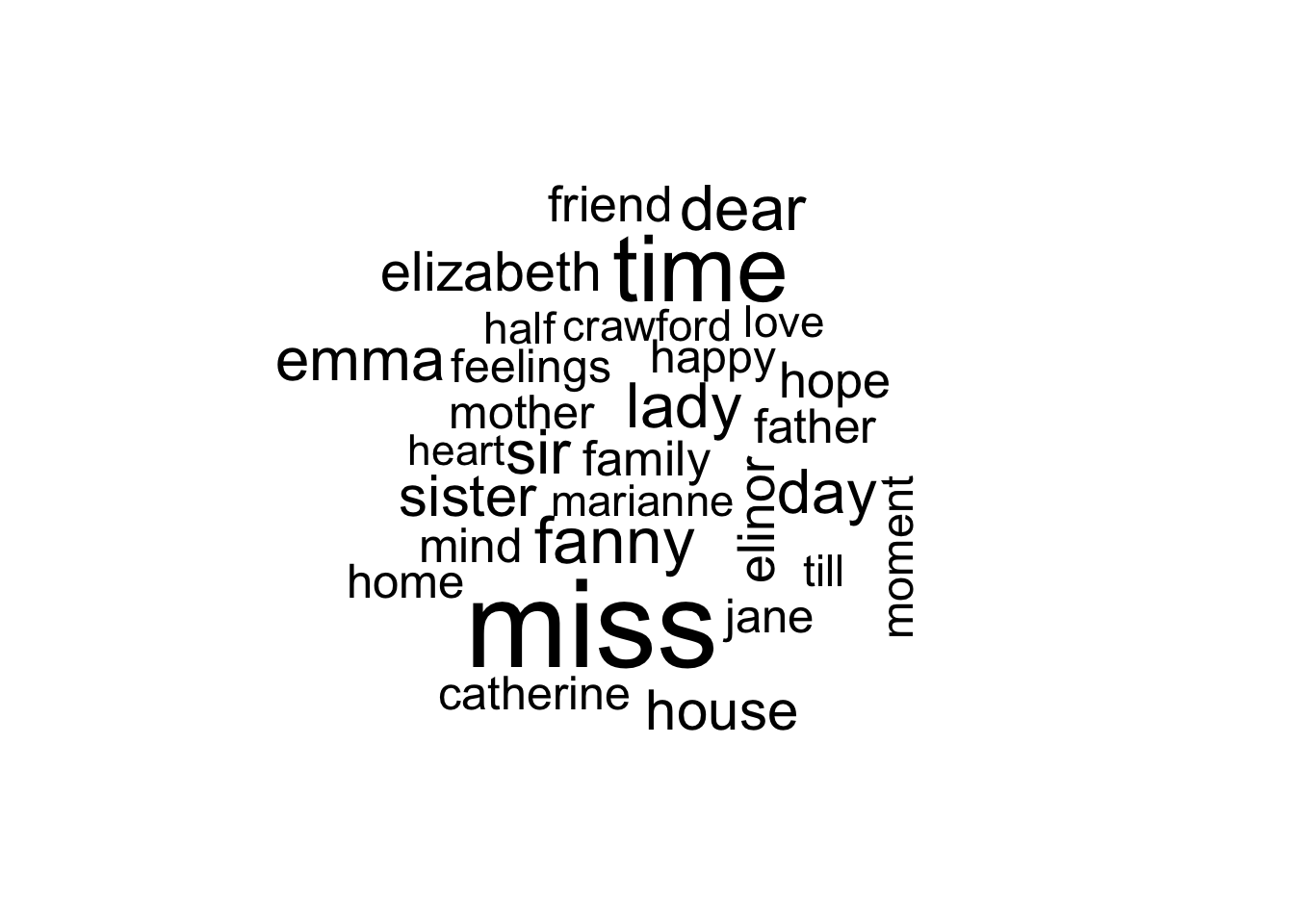

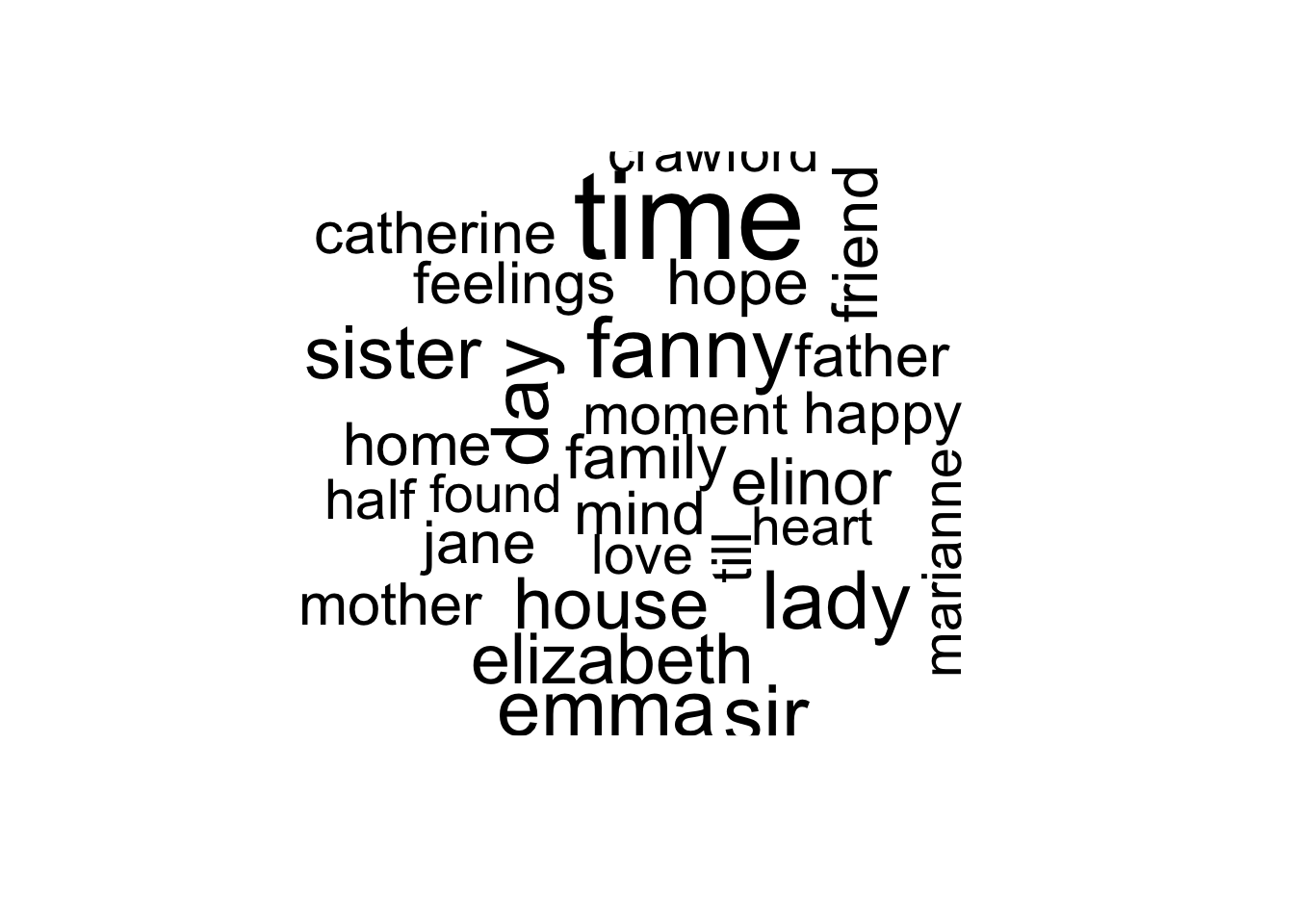

15.8 Word Cloud Plots

Word Clouds are a popular graphical method for displaying Word Frequency in a non-statistical way that can be useful for identifying the most frequent words in a document.

The {wordcloud} package (Fellows (2018)) uses base R graphics to create Word Clouds.

- It includes functions to create “commonality clouds” or “comparison clouds” for comparing words across multiple documents.

Install the package using the console and load into your file.

Let’s create a word cloud of tidy_books without the stop words.

library(wordcloud)

tidy_books |>

anti_join(stop_words, by = "word") |>

count(word) |>

with(wordcloud(word, n, max.words = 30))

## Custom Stop Words - no miss

tidy_books |>

anti_join(custom_stop_words, by = "word") |>

count(word) |>

with(wordcloud(word, n, max.words = 30))

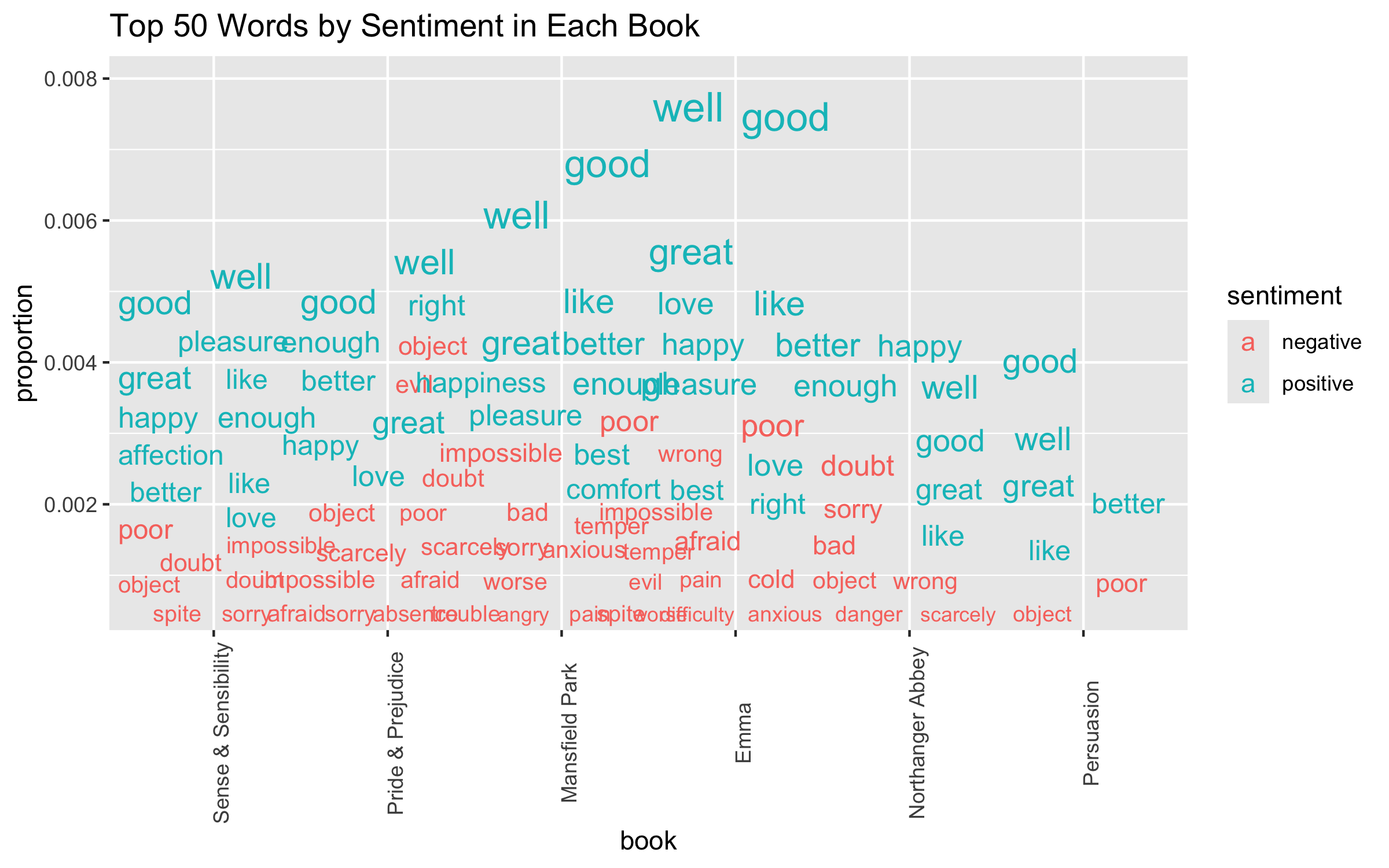
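The “comparison clouds” mentioned earlier need a matrix with one row per word and one column per group. The following is a sketch under stated assumptions: it rebuilds the tokens directly from austen_books() rather than reusing tidy_books, drops “miss” from bing as before, and the object name sentiment_matrix and the color choices are illustrative only.

```r
library(dplyr)
library(tidyr)
library(tibble)
library(tidytext)
library(janeaustenr)
library(wordcloud)

# Build a word-by-sentiment count matrix (rows = words, columns = sentiments)
austen_books() |>
  unnest_tokens(word, text) |>
  inner_join(
    filter(get_sentiments("bing"), word != "miss"),
    by = "word", relationship = "many-to-many"
  ) |>
  count(word, sentiment) |>
  pivot_wider(names_from = sentiment, values_from = n, values_fill = 0) |>
  column_to_rownames("word") |>
  as.matrix() ->
sentiment_matrix

# Colors are applied in the column order of the matrix
comparison.cloud(
  sentiment_matrix,
  max.words = 60,
  colors = c("firebrick", "steelblue")
)
```

Each word is drawn in the region of the sentiment where it is relatively more frequent, which makes the positive and negative vocabularies easier to compare than in a single cloud.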

Word Clouds are popular and you can make them, but should you?

As an alternative, consider the ChatterPlot.

Try a repeat of top 50 Jane Austen words by sentiment and books.

library(ggrepel) ## to help words "repel" each other

tidy_books |>

inner_join(bing_no_miss, by = "word",

relationship = "many-to-many") |>

count(book, word, sentiment, sort = TRUE) |>

mutate(proportion = n / sum(n)) |>

group_by(sentiment) |>

slice_max(order_by = n, n = 50) |>

ungroup() ->

tempp

tempp |>

ggplot(aes(book, proportion, label = word)) +

## ggrepel geom, make connecting segments transparent, color by sentiment, size by proportion

geom_text_repel(

segment.alpha = 0,

aes(

color = sentiment, size = proportion,

## fontface = as.numeric(as.factor(book))

),

max.overlaps = 50

) +

## set word size range & turn off legend

scale_size_continuous(range = c(3, 6), guide = "none") +

theme(axis.text.x = element_text(angle = 90)) +

ggtitle("Top 50 Words by Sentiment in Each Book") +

scale_color_viridis_d(end = .9)

At times, you may be asked to create a Word Cloud and it is straightforward to do so. However, it really only provides a visual display where the top few words can be seen and comparisons may be difficult.