4 Classification Methods

ISLRv2 Chapt 4

4.1 Introduction

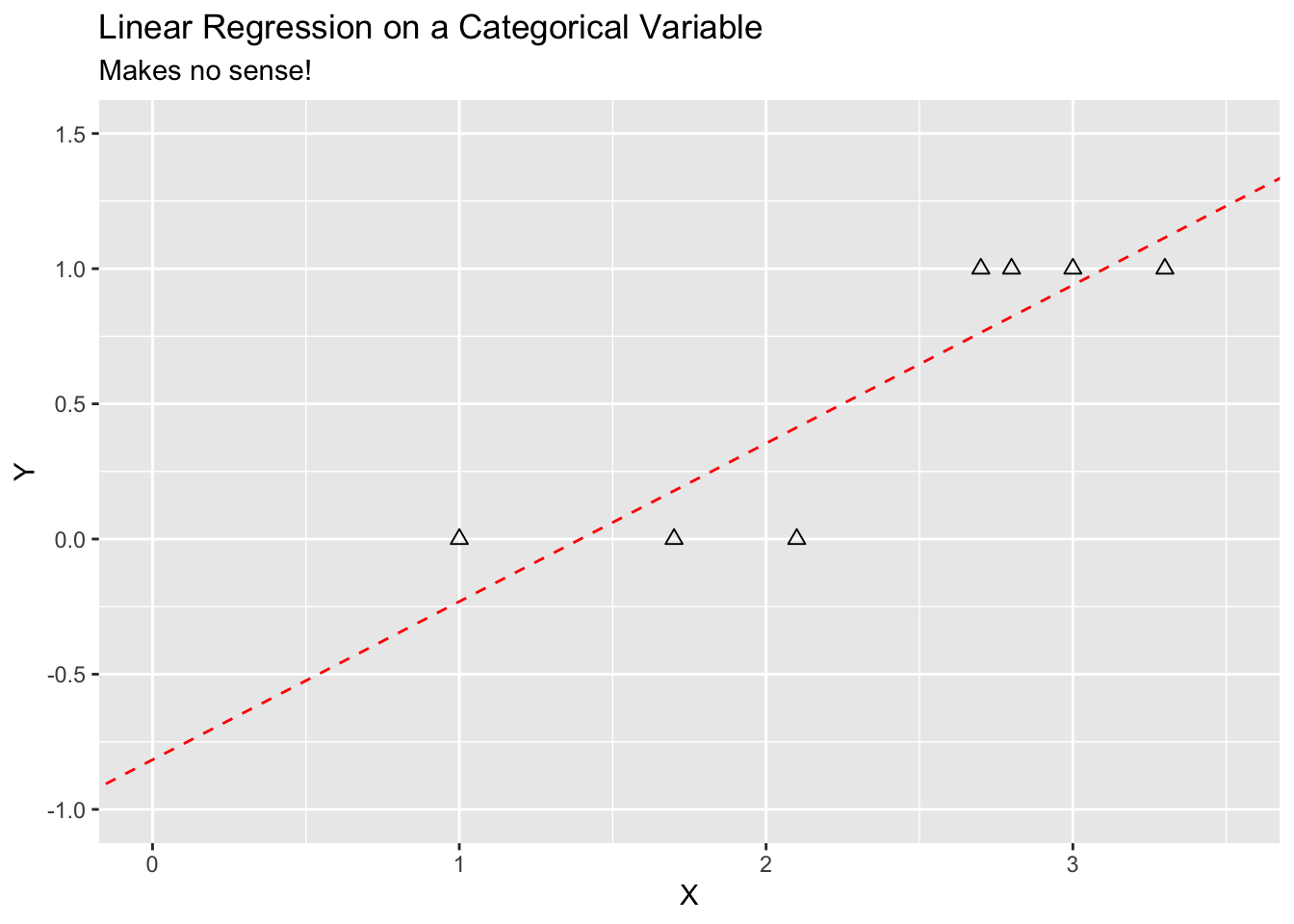

Classification is a form of regression where your response variable \(Y\) is categorical – not a number.

It does not make sense to fit a linear regression as \(Y\) has a limited number of values and the \(\hat{Y}\) will not.

Show code

df <- tribble(

~"X", ~"Y",

1., 0,

1.7, 0,

2.1, 0,

2.7, 1,

3, 1,

2.8, 1,

3.3, 1)

lm_out <- lm(Y ~ X, data = df)

p <- ggplot(df, aes(X, Y)) +

geom_point(pch = 2, size = 2) +

geom_abline(intercept = lm_out$coefficients[[1]],

slope = lm_out$coefficients[[2]],

color = "red", lty = 2) +

labs(title = "Linear Regression on a Categorical Variable",

subtitle = "Makes no sense!") +

xlim(0, 3.5) +

ylim(-1, 1.5)

p

Categorical variables often have no order and if they do, it is not clear the “distance” between levels is the same, e.g., the difference from Low to Medium = the difference from Medium to High.

One cannot calculate, let alone minimize, \(SSE_{Err} = \sum(\hat{y}_i - y_i)^2\) if \(y\) is not a number.

What can we do? Let’s minimize another measure of model error - the error classification rate.

4.2 Error Classification Rate

Instead of measuring a distance like we can do with continuous space, let’s measure a binary response; close does not count. Either the estimate is correct or it is not.

Mathematically, we are interested in the probability of a wrong prediction (or mis-classification), denoted as:

\[ P(\hat{y}_i \neq y_i) \quad \equiv \quad P(\text{predicted response}_i \neq \text{actual response}_i) \tag{4.1}\]

We want to minimize our overall error rate.

This is equivalent to maximizing the correct classification rate (the complement) since

\[

P(\hat{y} \neq y) = 1 - P(\hat{y} = y).

\]

Since we don’t know the true form of \(Y = f(X)\), we don’t know the true probability, so we estimate it.

Let’s define the error classification rate as the number of observations that are mis-classified, divided by the sample size \(n\).

\[ \frac {1}{n} \sum_{i=1}^n I (\hat{Y}_i \neq y_i)\quad \text{ where } \quad I(\hat{Y}_i \neq y_i) = \cases{1 \text{ if } \hat {Y}_i \neq y_i \\ 0 \text{ otherwise}} \tag{4.2}\]

We will use this metric with the KNN Classification Method where “KNN” stands for K Nearest Neighbors.

4.3 KNN Classification

For any given \(X\) and a \(Y\) with \(j\) levels, we will estimate \(P(Y = j) \, \forall\, j\) by using the \(k\) data points “closest” to the given \(X\).

- These \(k\) points are the Nearest Neighbors of \(X\) and we get to choose \(k\), a tuning or hyper-parameter.

- We will define “closest” in terms of the usual Euclidean distance between the two points in \(X_p\) space.

\[ \text{Euclidean Distance}(\vec{X}_1, \vec{X}_2) = \sqrt{\sum_{i = 1}^{p}(X_{2_i} - X_{1_i})^2} \]We then choose the category level \(j\) with the highest (or maximum) estimated probability as the best estimate for \(\hat{Y}\).

\[ \hat{Y}_{x_i,k} = j \text{ where } \frac{1}{n}\sum_{m=1}^{k}I(y_m) \text{ is maximum}\quad \text{with } I(y_m) = \cases{1 \text{ if } y_m =j \\ 0 \text{ otherwise}} \]

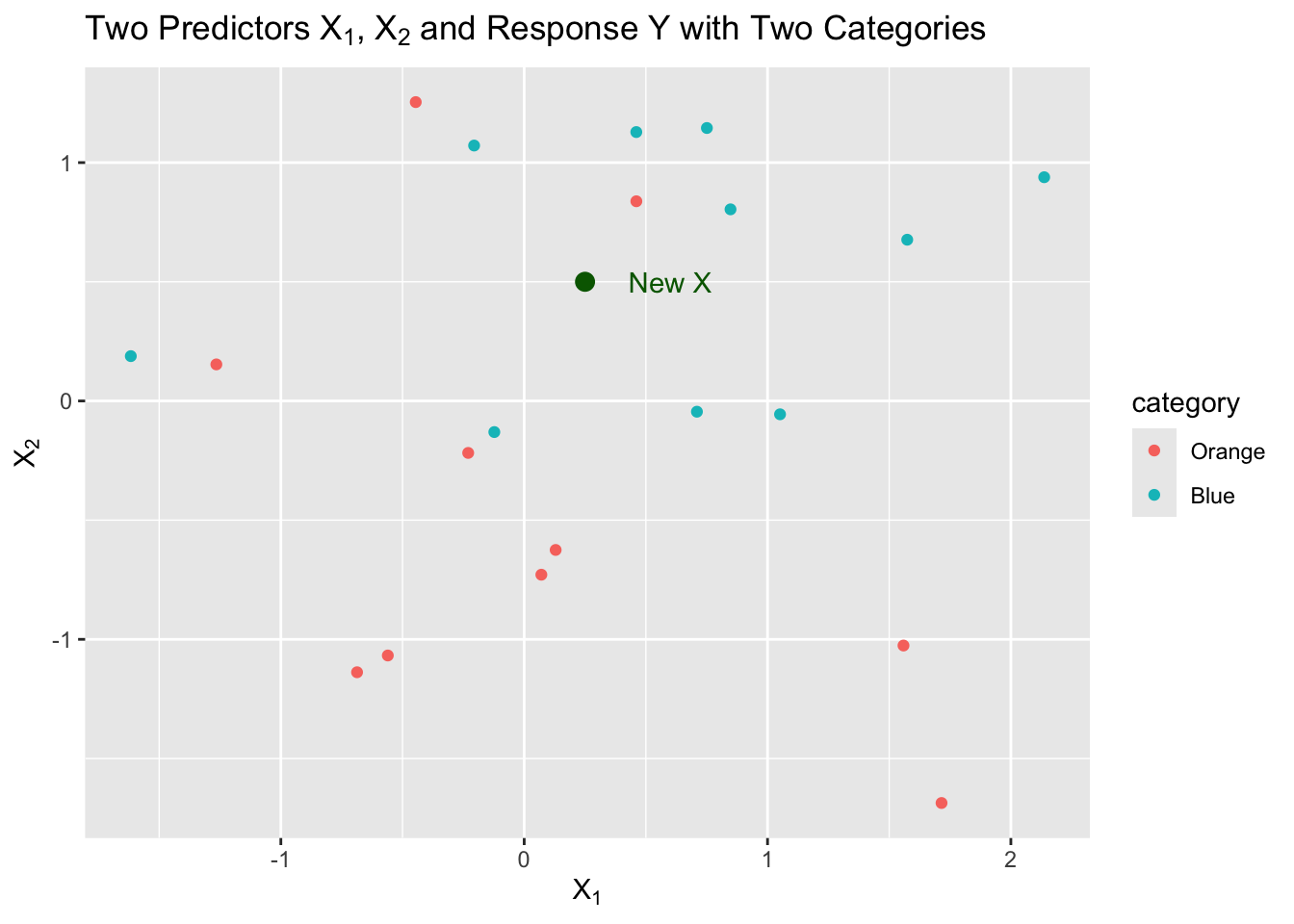

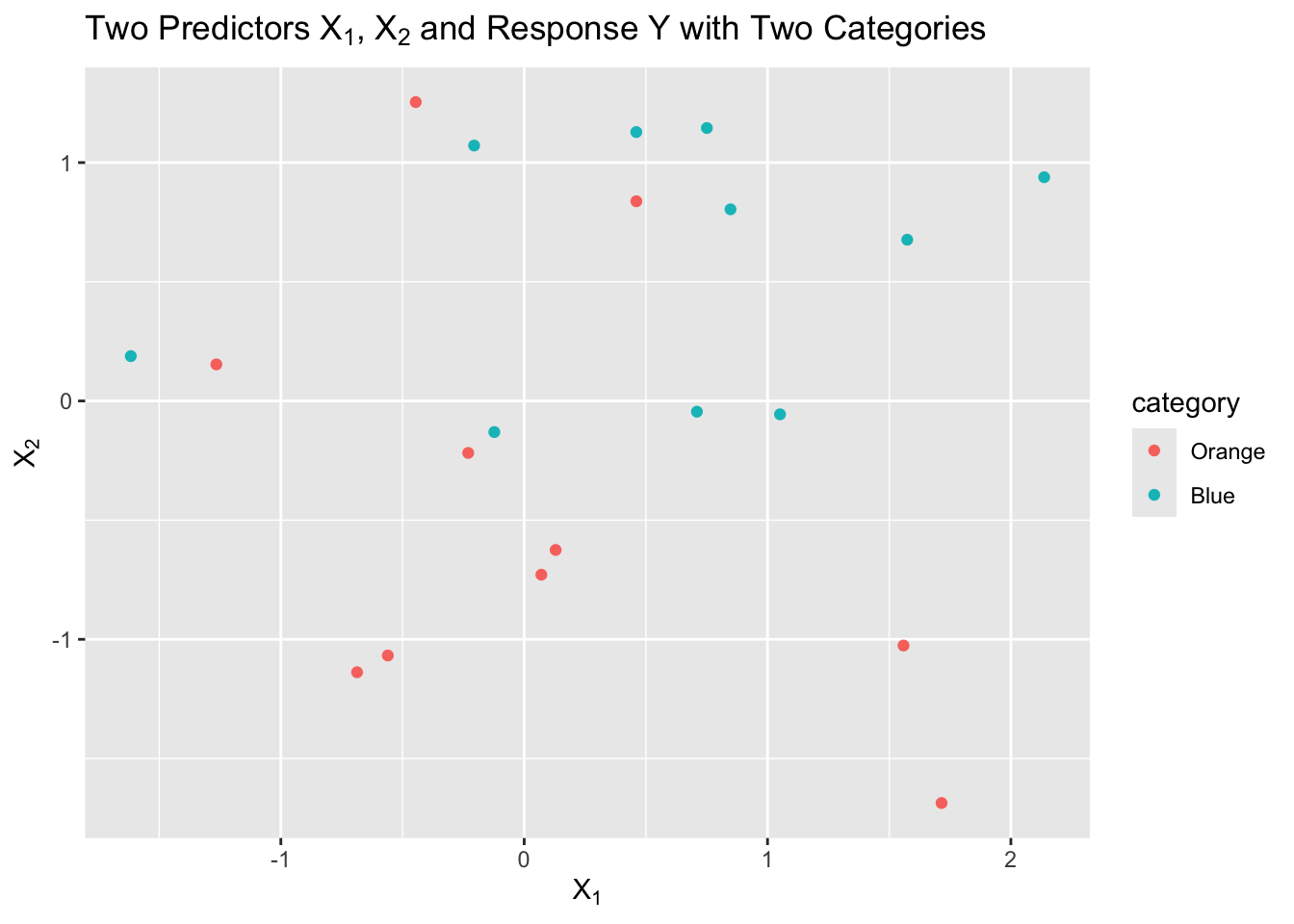

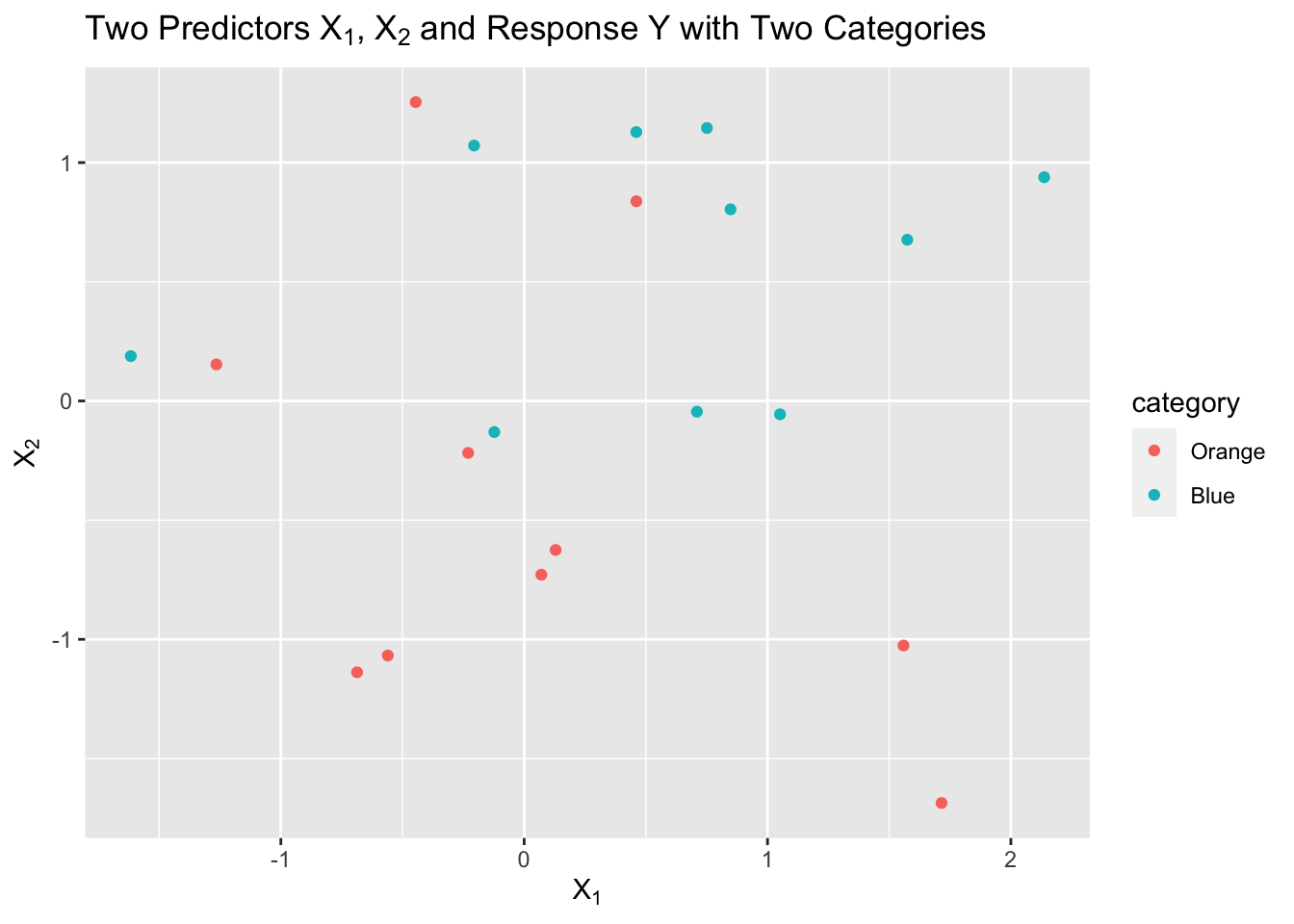

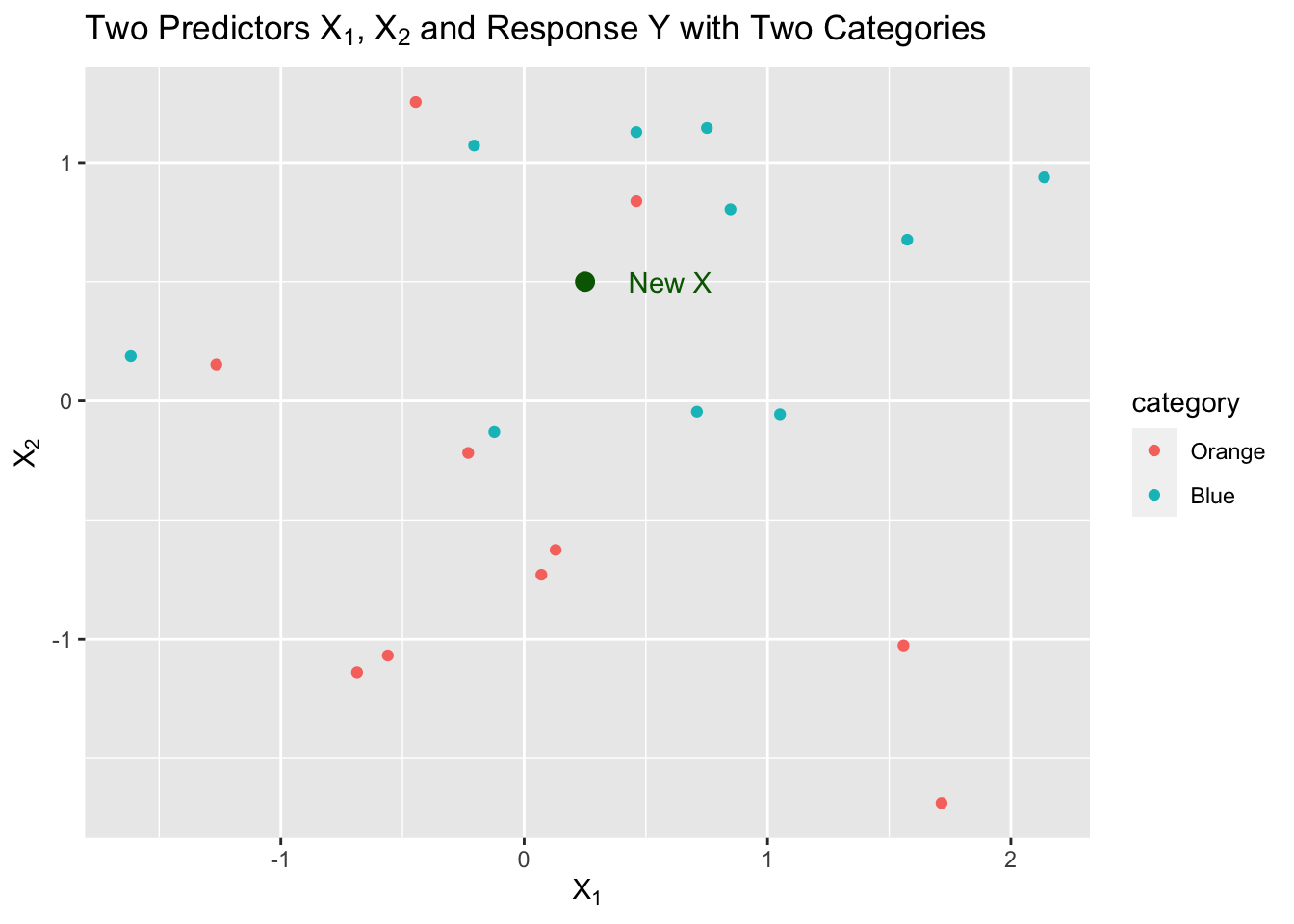

Let’s say we have a sample of 20 observations of two predictors, \(X1\) and \(X2\), with a response \(Y\) that has two categories, “Orange” and “Blue”.

We can visualize this as follows:

Show code

set.seed(123)

x1 <- c(rnorm(10), rnorm(10) + .35)

x2 <- c(rnorm(10), rnorm(10) + .25)

category <- parse_factor(c(rep("Orange", 10), rep("Blue", 10)))

df <- tibble("x1" = x1,

"x2" = x2,

"category" = category)

p <- ggplot(df, aes(x1, x2, color = category)) +

geom_point() +

labs(title = latex2exp::TeX("Two Predictors $X_1$, $X_2$ and Response Y with Two Categories")) +

xlab(latex2exp::TeX("$X_1$")) +

ylab(latex2exp::TeX("$X_2$"))

p

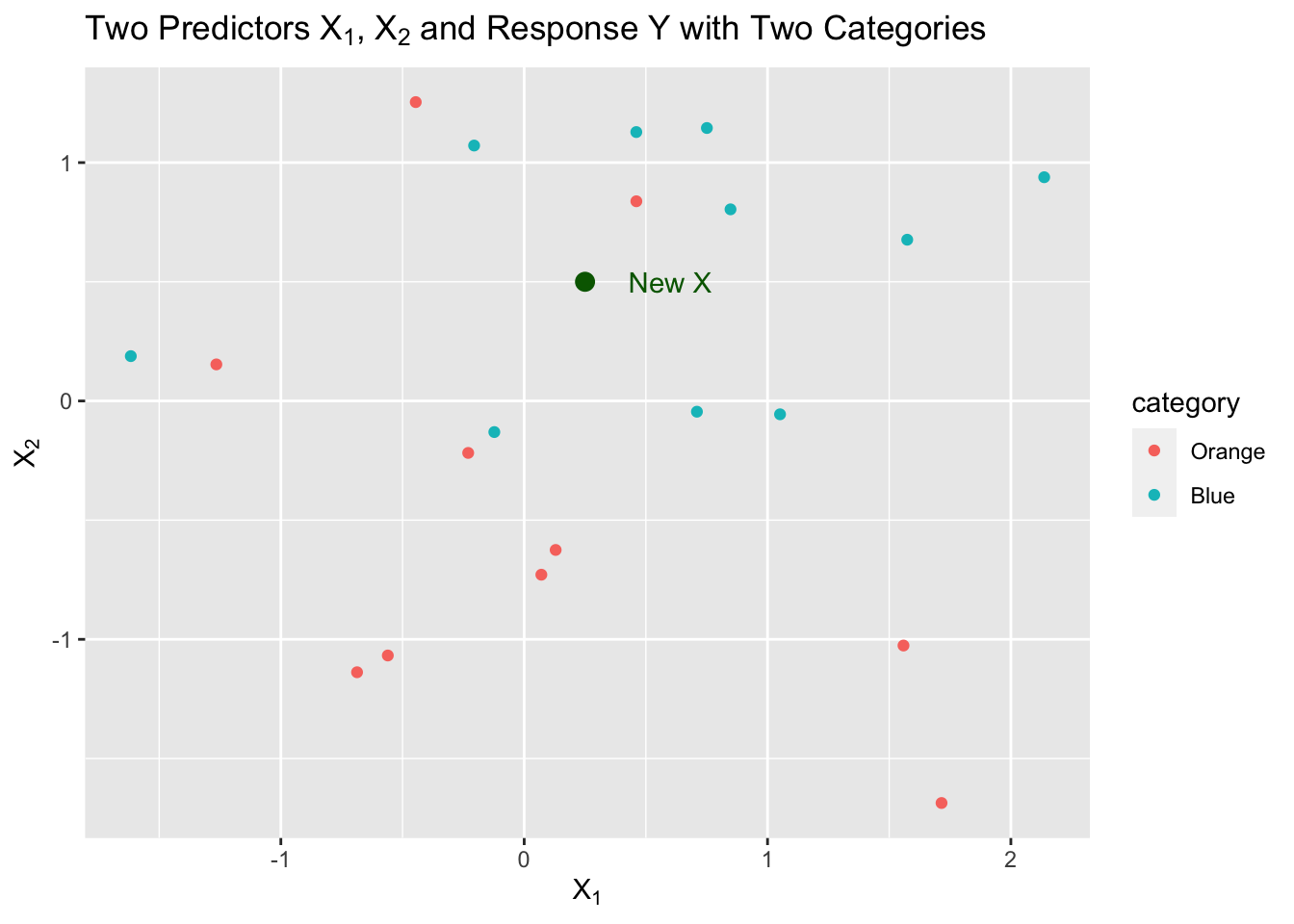

We now want to predict a response for a new point in our two dimensional \(X\) space.

Show code

We decide we want to start by using \(k=5\), the five closest neighbors to \(X\). That is the neighborhood.

Show code

We have three neighbors from Blue and two from Orange so we estimate as follows:

\[ \begin{align} \text{3 Blue} & \implies \hat{P}(Y = 2) = \frac{3}{5}\\ \text{2 Orange} & \implies \hat{P}(Y = 1) = \frac{2}{5}\\ \end{align} \] These are estimates of the probability of a correct classification based on the sample proportion.

We want to maximize this probability (which minimizes our error classification rate).

- What is our \(\hat{Y}\) in this example? Which probability is higher?

- In this case, it is Blue, or category 2, since \(\frac{3}{5} > \frac{2}{5}\).

Let’s look at an example.

4.3.1 KNN Example with NFL Field Goal Data

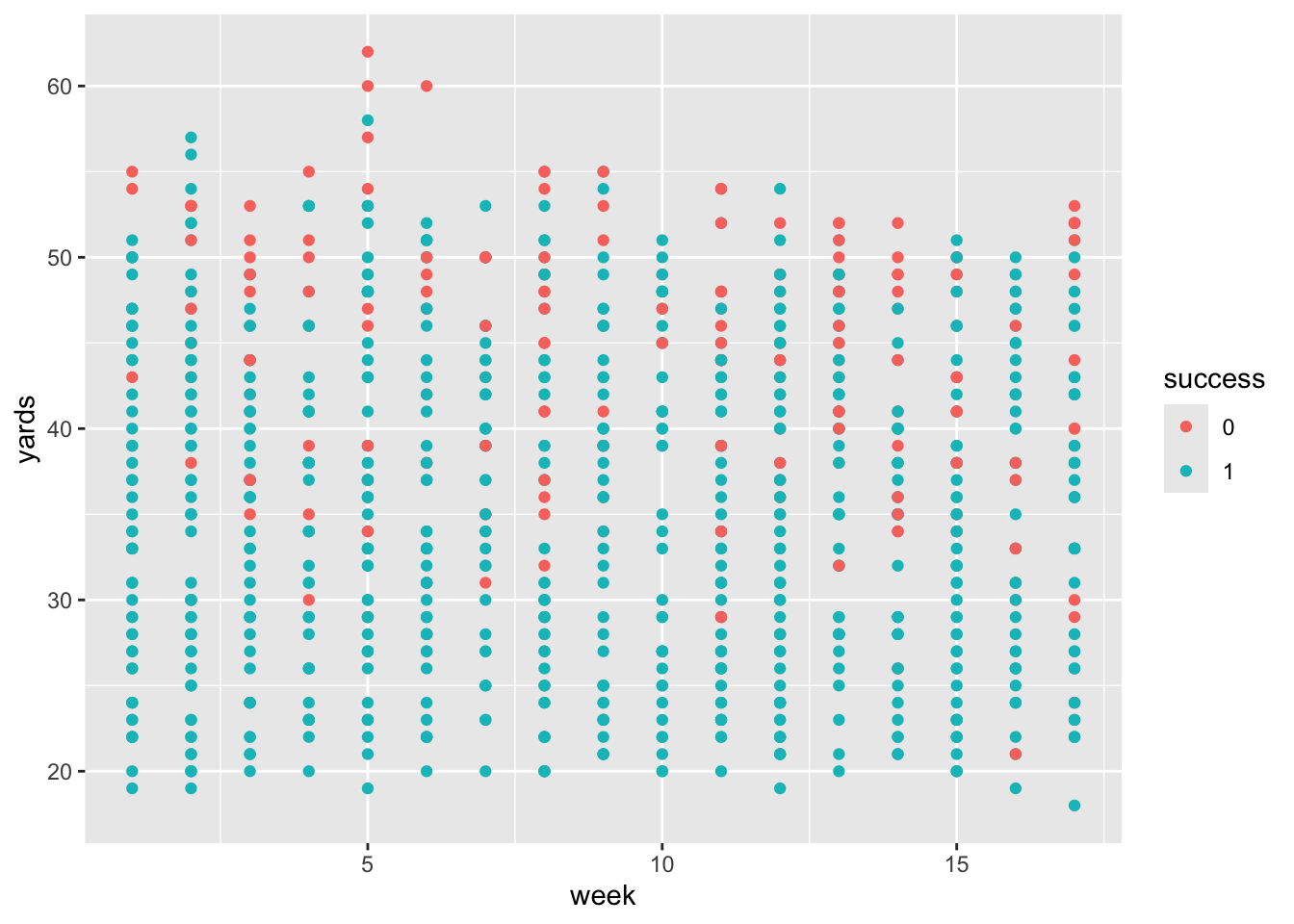

Professor Baron has a data set from ESPN on all of the field goal attempts in the National Football League in 2003.

Read in the data from the following url (http://fs2.american.edu/baron/www/627/R/Field%20goals.txt), while adding in column names for

yards,successandweek.Use

glimpse()andsummmary()to look at the data.

Show code

Rows: 948

Columns: 3

$ yards <dbl> 30, 41, 50, 22, 33, 44, 40, 55, 49, 51, 27, 39, 26, 38, 36, 50…

$ success <dbl> 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0,…

$ week <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,… yards success week

Min. :18.0 Min. :0.0000 Min. : 1.000

1st Qu.:28.0 1st Qu.:1.0000 1st Qu.: 5.000

Median :37.0 Median :1.0000 Median : 9.000

Mean :36.5 Mean :0.7975 Mean : 8.989

3rd Qu.:45.0 3rd Qu.:1.0000 3rd Qu.:13.000

Max. :62.0 Max. :1.0000 Max. :17.000 The data is numeric but it looks like success should be categorical so let’s convert to a factor and check the levels.

Show code

[1] "0" "1" [1] 2 2 2 2 1 2 2 2 2 2 1 2 2 2 2 2 2 1 1 2Since we have two predictors, let’s plot and color code by our categorical response variable.

What is the average success rate across the season?

0 1

192 756 # A tibble: 2 × 2

f n

<fct> <int>

1 0 192

2 1 756 n

1 0.7974684[1] 0.7974684Can we do better if we include week and yards as predictors? How can we tell if they are “significant” predictors?

This is a standard coaching decision that has many factors but we will try to see if we can do better than the average.

A knn() function is in the {class} package. Install and load the package.

Let’s first just look at overall performance (training data is the entire data set) and predict a kick in week 8 for 55 yards using 10 nearest neighbors - the 10 most similar situations.

Show code

[1] 0

Levels: 0 1What if we chose a smaller \(k\)?

What if we chose a larger \(k\)?

What would you expect with a \(k\) this large for any week or yardage? Is it helpful?

We could also change the yardage and week.

Show code

[1] 1

Levels: 0 1What are the implications for using a model like this early in the season say in week 3?

Can we choose a \(k\) so our predictions are better than 0.7974684 accuracy rate?

4.3.2 Training and Testing Split

Let’s split the data into two sets for training and testing.

- Set a seed for the random number generator

- Create a random vector

Zof indexes for rows from the data by sampling without replacement. - Let’s use 50% of the data for training and 50% for testing.

- We can use

Zand-Zto select the training and the testing data so no rows appear in both.

Now let’s recreate our KNN results

- We can test a single data point as before, only now we know the data was not used in training the model.

- We can use predict for entire test data set.

Show code

[1] 1

Levels: 0 1[1] 0

Levels: 0 1Show code

[1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1

[38] 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

[75] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

[112] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 1 1 1 1 1 1 1 1 1

[149] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1

[186] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 0 1 1 1 1 1 1 0 1 1 1 1 1

[223] 1 1 1 1 1 1 0 0 1 1 1 1 1 1 1 1 1 0 1 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

[260] 1 1 0 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 0 1 0 1 1 0 1 1 1 1 1 1 1 1 0

[297] 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1

[334] 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 0 1

[371] 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

[408] 1 1 1 1 1 1 1 1 1 1 0 1 1 0 1 1 1 1 1 1 1 0 1 1 1 0 1 0 1 1 0 1 1 1 1 1 1

[445] 1 1 1 1 0 1 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

Levels: 0 1[1] 4744.3.3 Example by Hand

We want to predict if a new drug will have serious side effects based on two quantitative predictors.

We have the following data for 5 randomly chosen test subjects.

| Subjects | S1 | S2 | S3 | S4 | S5 |

|---|---|---|---|---|---|

| \(x_1\) | 0 | 0 | 1 | 1 | 0 |

| \(x_2\) | 2 | 3 | 3 | 4 | 4 |

| Side Effects (YesNo) | N | Y | Y | N | N |

- Subjects are broken into two sets: train(1, 3, 4) and test (2,5)

- Create test set predictions for two models with \(k=1\) and \(k=3\).

- Calculate the Misclassification rate for each model.

| Test | \(x_1\) | \(x_2\) | \(Y\) | Dist to S2(0,3) | Dist to S5 (0,4) | \(k=1\) | \(k=1\) | \(k= 3\) | \(k = 3\) |

|---|---|---|---|---|---|---|---|---|---|

| S2 Neigh | S5 Neigh | S2 Neigh | S5 Neigh | ||||||

| S1 | 0 | 2 | N | \(1=\sqrt{0^2 + 1^2}\) | \(2=\sqrt{0^2 + 2^2}\) | 1/2 | 1 | 1 | |

| S3 | 1 | 3 | Y | \(1=\sqrt{1^2 + 0^2}\) | \(\sqrt{2}=\sqrt{1^2 + 1^2}\) | 1/2 | 1 | 1 | |

| S4 | 1 | 4 | N | \(\sqrt{2}=\sqrt{1^2 + 1^2}\) | \(1=\sqrt{1^2 + 0^2}\) | 1 | 1 | 1 |

| Test | \(x_1\) | \(x_2\) | \(Y\) | Dist to S2(0,3) | Dist to S5 (0,4) | \(k=1\) | \(k=1\) | \(k= 3\) | \(k = 3\) |

|---|---|---|---|---|---|---|---|---|---|

| S2 Neigh | S5 Neigh | S2 Neigh | S5 Neigh | ||||||

| S1 | 0 | 2 | N | \(1\) | \(2\) | 1 /2 | 1 | 1 | |

| S3 | 1 | 3 | Y | \(1\) | \(\sqrt{2}\) | 1/2 | 1 | 1 | |

| S4 | 1 | 4 | N | \(\sqrt{2}\) | \(1\) | 1 | 1 | 1 |

| \(\hat{y}\) | 1/2 Y 1/2 N | N | N | N | |||||

| \(y\) | Y | N | Y | N | |||||

| Mis-Class | 1/2 | 0 | 1 | 0 | |||||

| Rate | .5/2= .25 | 1/2=.5 |

There is no single method for breaking ties in either the nearest neighbor or the majority rules. Options include:

- Randomly chose one candidate from the set of ties,

- Choose them all.

- Increase \(k\) until the tie is broken for that point.

The class::knn() function includes all the candidates and then breaks ties at random in the majority rules.

This is usually not a major issue for large data sets for either prediction or calculation of performance metrics.

So in general, how do we evaluate performance, and tune our model? Which \(k\) is better?

4.4 Flexible or Restrictive

Recall optimizing the bias-variance trade off requires finding the right level of flexibility or restrictiveness in the model.

As you think about KNN, how does the model flexibility change with \(k\)?

- Model tries to follow the sample data more closely.

- With \(k = 1\), \(\hat{Y}\) is based on a single value from the sample.

- Lower \(k\) is more flexible.

- Higher \(k\) uses more and more data points so if \(k = n\) it is estimating the sample mean.

- Higher \(k\) is more restrictive.

- This is opposite of linear regression where more parameters is more flexible.

4.5 Evaluating Classification Model Performance

To tune a KNN classification model ( to find the “best” \(k\)), we have to be able to quantitatively evaluate the model performance on the testing data and compare to other models that may be more restrictive (higher \(k\)) or more flexible (lower \(k\)).

We have \(X_1, \ldots, X_p\) predictor variables and \(Y\) is our categorical response variable.

We are estimating the true form of \(Y=f(X)\) by estimating \(\hat{Y}\) as a categorical response.

Using the complement form of Equation 4.1, we get a form of Equation 4.2 such that

\[ \text{Classification Rate}\,= \frac {1}{n} \sum_{i=1}^n I (\hat{Y}_i = y_i)\quad \text{ where } \quad I(\hat{Y}_i = y_i) = \cases{1 \text{ if } \hat {Y}_i = y_i \\ 0 \text{ otherwise}} \]

This is interpreted as the number of times we predicted correctly divided by the total sample size, or, the sample proportion of correct predictions.

- The error classification rate is the complement of the classification rate. It is the proportion of incorrect predictions.

- We can calculate either of these metrics on the training data (from 1 to 100% of the data) or on the test data used for prediction.

We can use this metric to tune the model by choosing the best \(k\); the \(k\) that minimizes the Testing (aka Prediction) Error Rate.

If the \(k\) is too little (too flexible), the estimate \(\hat{y}\) may not be reliable. It is based on small sets of data which leads to overfitting with High Variance for \(\hat{y}\).

If the \(k\) is too large (too restrictive), the estimate \(\hat{y}\) is based on irrelevant, remote points which leads to underfitting with high Bias for \(\hat{y}\).

4.6 Binary Classification Confusion Matrices

4.6.1 Types of Classification Methods

We will consider two types of classification methods.

- Some classification methods make direct predictions of the outcome, e.g., true or false. KNN is one of those.

- Other classification methods estimate the probability or likelihood of an event being true or false based on the “strength of the signal”. Logistics Regression is an example.

- This strength of signal (versus noise), could be an estimated probability or the ratio of dark pixels in an area to light pixels in the area.

- Methods that estimate the strength of the signal have to set or choose a threshold to convert the estimated strength to a final prediction.

- As an example, if the strength (or probability) is greater than some value, predict TRUE, otherwise FALSE.

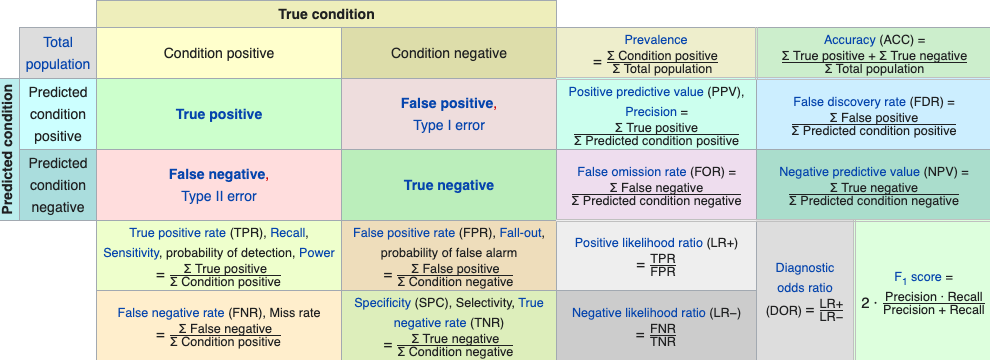

By ensuring each classification method results in a prediction, we can examine the prediction performance using a Confusion Matrix.

- Confusion Matrices organize the counts of various outcomes in a table so one can calculate error rates or other metrics to assess performance and tune the model (aka the classifier).

When we have binary response data (positives and negatives) we can calculate two metrics based solely on the number of samples or observations, \(n\), the number of positives, \(P\), and the number of negatives, \(N\).

- Actual Positive Rate: \(P/n\) - the average number of positives in the sample.

- Actual Negative Rate: \(N/n\) - the average number of negatives in the sample.

4.6.2 Binary Classification Outcomes

When we create a model with \(p\) predictors \(X\), for a binary classification problem, (True/False, Yes/No, Positive/Negative, …), there are four possible outcomes for predictions.

We will define the following metrics in terms of TRUE and FALSE but the same definitions apply for YES/NO or SUCCESS/FAILURE etc..

True Positive: a predicted value of \(\hat{y}_i= \text{TRUE}\) is correct as the actual value is \(y_i = \text{TRUE}\) or \(\hat{y}_i = y_i= \text{TRUE}\).

-

False Positive: a predicted value of \(\hat{y}_i= \text{TRUE}\) is incorrect as the actual value is \(y_i = \text{FALSE}\) or \(\hat{y}_i \neq y_i\).

- Also known as Type I error

-

False Negative: a predicted value of \(\hat{y}_i= \text{FALSE}\) is incorrect as the actual value is \(y_i = \text{TRUE}\) or \(\hat{y}_i \neq y_i\).

- Also known as Type II error

True Negative: a predicted value of \(\hat{y}_i= \text{FALSE}\) is correct as the actual value is \(y_i = \text{FALSE}\) or \(\hat{y}_i = y_i= \text{FALSE}\).

4.6.3 2x2 Confusion Matrices

A 2x2 confusion matrix shows the performance of a model on a sample of size \(n\) by organizing the \(n\) predictions into each of the four possible outcomes and showing the counts for each outcome.

- A confusion matrix allows one to see how much or how little a model “confuses” the two classifications.

| Actual \ Predicted | Positive (PP) | Negative (PN) |

|---|---|---|

| Positive (P) | True Positive (TP) count | False Negative (FN) count |

| Negative (N) | False Positive (FP) count | True Negative (TN) count |

Columns and Rows add up to their top/left totals

- \(\text{PP} + \text{PN} = n\)

- \(\text{TP} + \text{FN} = P\)

- \(\text{FP} + \text{TN} = N\)

- \(\text{TP} + \text{FP} = PP\), \(\text{FN} + \text{TN} = PN\)

- \(\text{TP} + \text{FN} + \text{FP} + \text{TN} = n\)

4.6.4 Confusion Matrix Performance Metrics

Given the counts, we can then define corresponding rates for each of the four outcomes as:

-

True Positive Rate: \(TPR = TP/P\): ratio of True Positive Predictions to actual Positives.

- Other names: Sensitivity, Hit Rate, Probability of Detection something that is actually there, or Power.

- \(P(\hat{Y}) = 1\, |\, Y = 1)\) as a Conditional Probability.

-

False Positive Rate: \(FPR = FP/N\): ratio of False Positive Predictions to actual Negatives.

- Other names: False Alarm rate, Overestimation Rate

- \(P(\hat{Y}) = 1\, |\, Y = 0)\)

-

False Negative Rate: \(FNR = FN/P\): ratio of False Negative Predictions to actual Positives.

- Other names: Miss rate

- \(P(\hat{Y}) = 0\, |\, Y = 1)\)

-

True Negative Rate: \(TNR = TN/N\): ratio of True Negative Predictions to actual Negatives.

- Other names: Specificity

- \(P(\hat{Y}) = 0\, |\, Y = 0)\)

Each of these rates can be used as a performance metric for tuning to optimize the model performance based on question being asked and the purpose of the model.

-

Other metrics are also defined and used for tuning or optimizing the model.

- Accuracy: \((TP + TN)/n\): ratio of correct predictions to total predictions or average correct classification rate.

- Here are some examples from a Wikipedia discussion on confusion matrices in machine learning, Binary Classification Confusion Matrix Metrics

- We can use multiple metrics in a Receiver Operating Characteristic (ROC) curve to help balance the bias and variance in a model or and choose between models.

4.7 The Receiver Operating Characteristic Curve

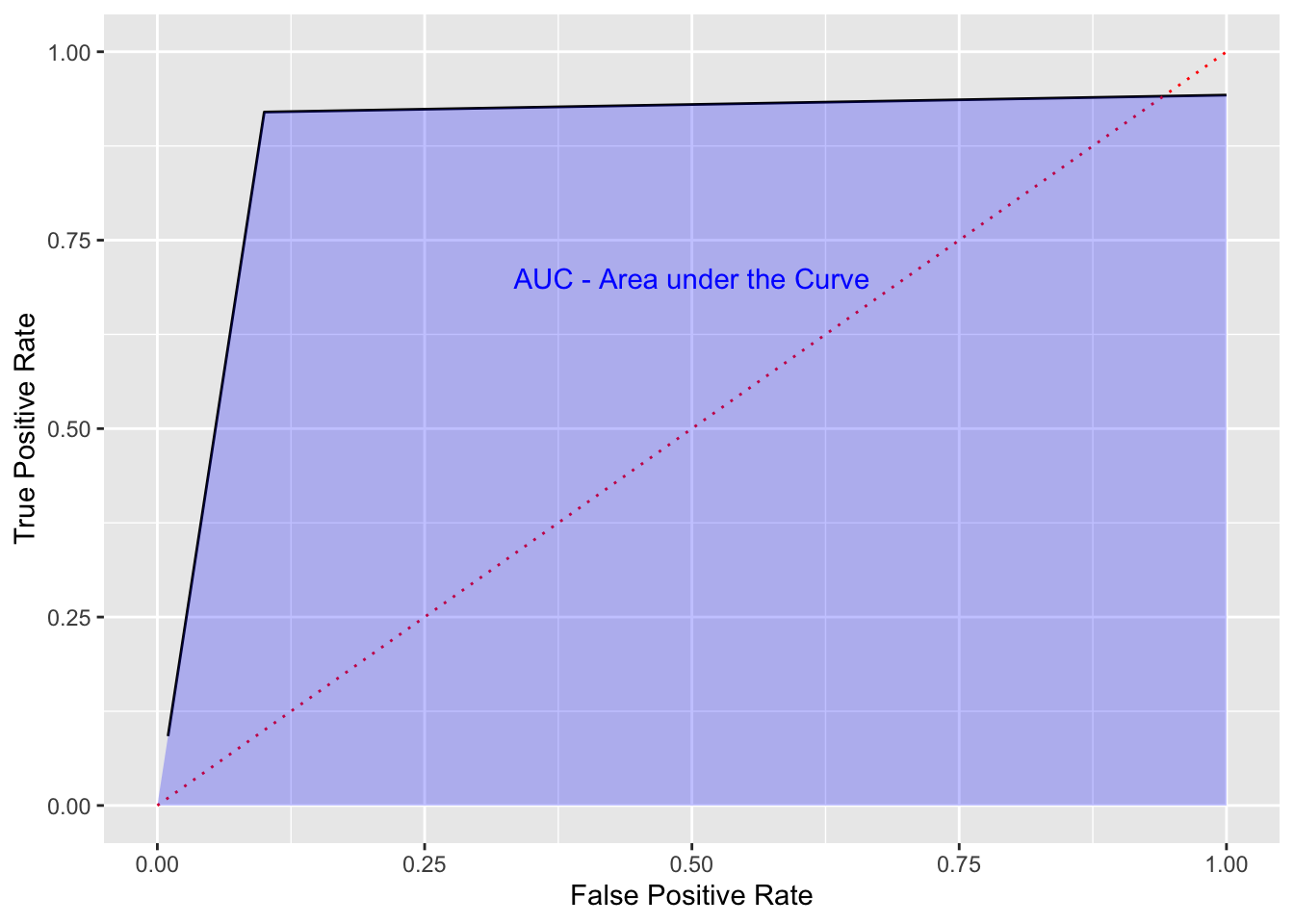

The Receiver Operating Characteristic curve or ROC curve is a graphical method for describing the performance of a “receiver” based on two performance characteristics and a range of thresholds.

- ROC Curves have been used since World War II when they were originally developed to help compare different radar systems ability to differentiate between signal (Success) and noise (Failure).

- The receiver refers to the system under study.

- The characteristics are usually a desirable performance characteristic and an undesirable performance characteristic where the two characteristics are linked such that increasing one can also increase the other so there is a trade off to be made.

ROC curves are useful in binary classification methods where a threshold is used to determine if an event is a success or failure based on the strength of signal (hit or miss, signal or noise, cancer or non-cancer, … .

- ROC curves are used in many different fields for a variety of binary classification problems to compare models.

- Standard ROC curves use as characteristics the True Positive Rate and the False Positive Rate of the receiver when it is operating under fixed conditions (here, our \(k\)).

The ROC is a plot of desirable True Positive Rate (TPR), for a given threshold, on the \(Y\) axis, against the undesirable False Positive Rate (FPR) for that same threshold on the \(X\) axis.

- The TPR and FPR are calculated for many different threshold values to create the ROC curve.

- The TPR can be considered the “Benefit” while the FPR can be considered the “Cost” of the model outcomes for that threshold.

- We want the TPR to be high and the FPR to be low but there is a trade off.

- An abline with slope 1 indicates when \(TPR = FPR\) or equal performance between good and bad.

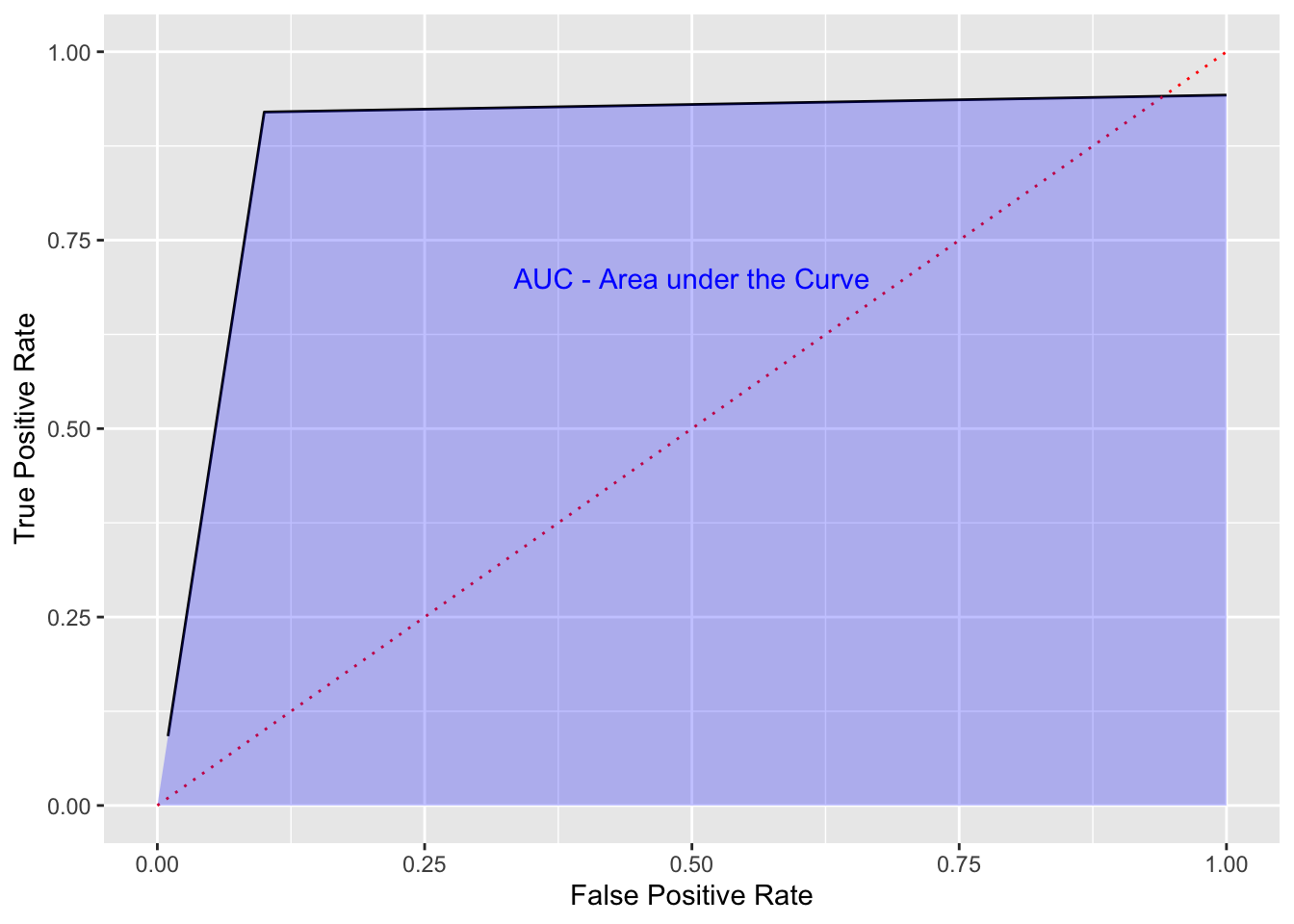

The Area Under the Curve (AUC) is the total benefit of a model across the range of thresholds.

We would like an ROC curve to look something like the following:

- We want a sharply rising curve on the left so a high TPR at a low FPR.

- The greater the AUC, the better the performance of the model. This is often used when comparing multiple models, each with their own ROC curve.

Show code

df <- tibble(x = seq(1:100)/100, y = c(9.2*x[1:10], (rep(.92, 90) + (seq(1:90)/4000))))

ggplot(df, aes(x, y)) +

geom_line() +

ylim(0, 1) +

xlim(0, 1) +

xlab("False Positive Rate") +

ylab("True Positive Rate") +

geom_line(data = tibble(x = c(0,1), y = c(0,1)), aes(x, y), color = "red",

lty = 3) +

# geom_line(data = tibble(x = c(.05,.15), y = c(.85,1)), aes(x, y),

# color = "darkgreen", lty = 1, linewidth = 2) +

# annotate("text", x = .15, y = .95, label = "Elbow in the Curve", color = "darkgreen",

# hjust = 0) +

geom_area(data = tibble(x = c(0, .1, 1), y = c(0, .92, max(df$y))),

aes(x = x, y = y), fill = "blue", alpha = .25) +

annotate("text", x = .5, y = .7, label = "AUC - Area under the Curve", color = "blue")

- Normally curves are not as straight or as nice.

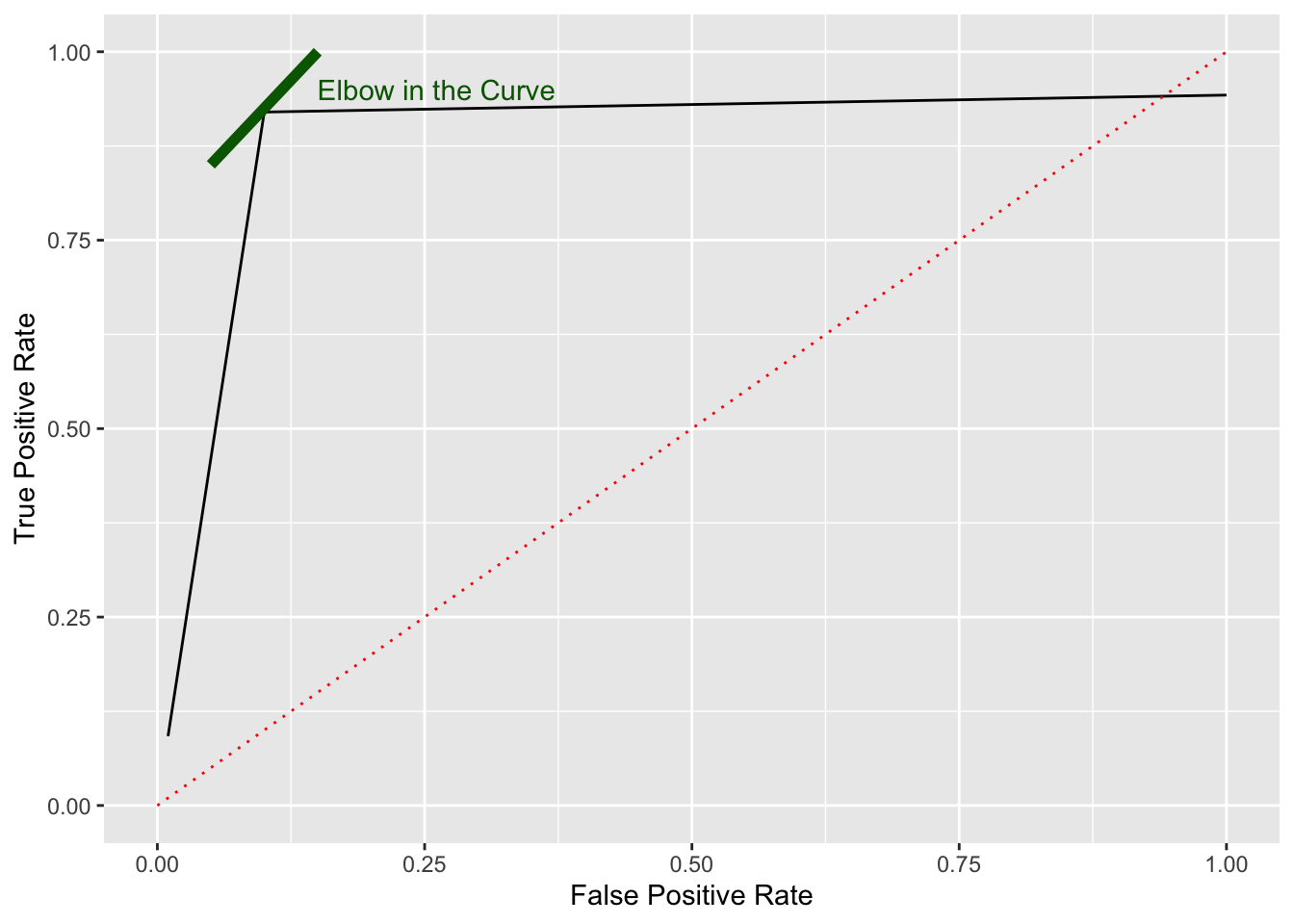

We can use the ROC curve to look for the “best” threshold based on an “elbow” or ’knee” in the curve.

This is the threshold where the later increase in TPR is minimal (the benefit) compared to the later increase in FPR (the cost).

- Choose \(k\) to match the elbow in the curve.

Show code

ggplot(df, aes(x, y)) +

geom_line() +

ylim(0, 1) +

xlim(0, 1) +

xlab("False Positive Rate") +

ylab("True Positive Rate") +

geom_line(data = tibble(x = c(0,1), y = c(0,1)), aes(x, y), color = "red",

lty = 3) +

geom_line(data = tibble(x = c(.05,.15), y = c(.85,1)), aes(x, y),

color = "darkgreen", lty = 1, linewidth = 2) +

annotate("text", x = .15, y = .95, label = "Elbow in the Curve", color = "darkgreen",

hjust = 0) #+

4.7.1 Finding a Good \(k\) Using ROC Curves

Now in this case, \(k\) is not really a threshold. In fact we are comparing different KNN models, not one model across different thresholds.

- That means the ROC curve may not be a “relatively smooth” monotonic curve, but it could be useful.

Our Steps

- Pick a \(k.\)

- Identify the neighborhood.

- Predict \(\hat{y}_i\) for each point in the test data and count the number of each of the four outcomes by comparing \(\hat{y}_i\) to \(y_i.\)

- Calculate the True Positive Rate and the False Positive Rate for that \(k.\)

- Repeat for each \(k\) of interest.

- Plot the Error Classification Rate for each \(k\) and then the ROC Curve.

Let’s use a for-loop to calculate TPR and FPR for \(k\) from 1 to 100 on the Field Goal data.

Then, select a good \(k\) based on the error classification rate.

[1] 948Z = sample(nrow(fg), training_pct*nrow(fg))

# Set our test and training data

Xtrain = fg[Z,c("yards", "week")] # Our training set X

Ytrain = fg$success[Z] # Our training set y

Xtest = fg[-Z,c("yards", "week")] # The test data set

Ytest = fg$success[-Z]

# Initialize data

err_class <- rep(1:100)

tpr <- rep(1:100)

fpr <- rep(1:100)

# run the loop

for (k in 1:100) {

Yhat <- knn(Xtrain, Xtest, Ytrain, k = k)

err_class[k] <- mean(Yhat != Ytest) # The prediction is not correct

tpr[k] <- sum(Yhat == 1 & Ytest == 1) / sum(Ytest == 1) # TP/P

fpr[k] <- sum(Yhat == 1 & Ytest == 0) / sum(Ytest == 0) # FP/N

}

ggplot(tibble(err_class, k = 1:100), aes(x = k, y = err_class)) +

geom_line()

[1] 19[1] 0.185654[1] 0.814346# calculate the accuracy for the best k

Yhat <- knn(Xtrain, Xtest, Ytrain, k = which.min(err_class))

table(Ytest, Yhat) # the Confusion Matrix Yhat

Ytest 0 1

0 21 75

1 16 362[1] 0.8080169- Note the variability if we change seed or size of the training set.

Use the table() function with \(Y_i^{test}\) and \(\hat{Y}_i\) to get a confusion matrix for the predicted outcomes.

Yhat

Ytest 0 1

0 0 96

1 0 378[1] 0.7974684With \(k=100\), our neighborhood is so large we always predict Success.

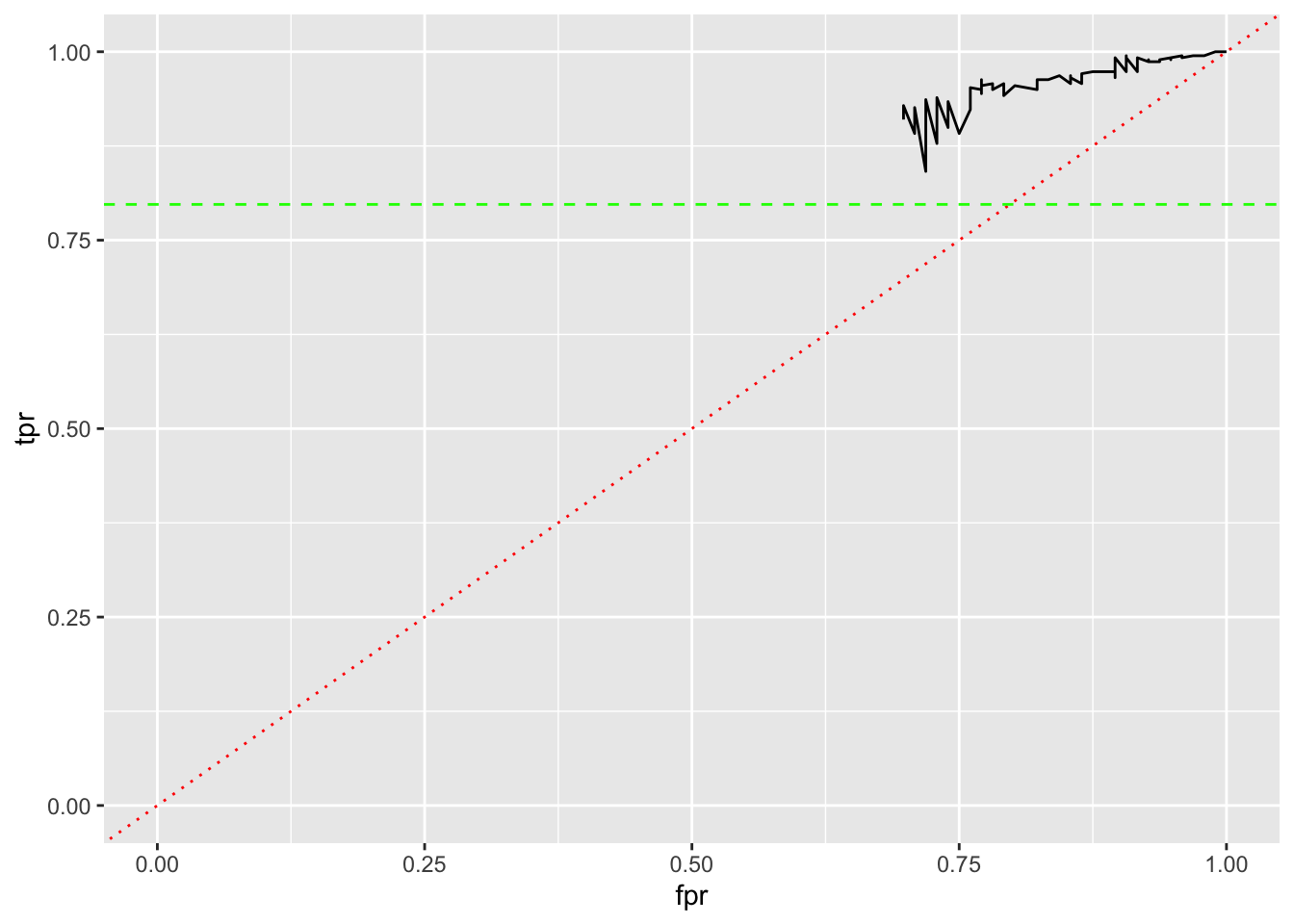

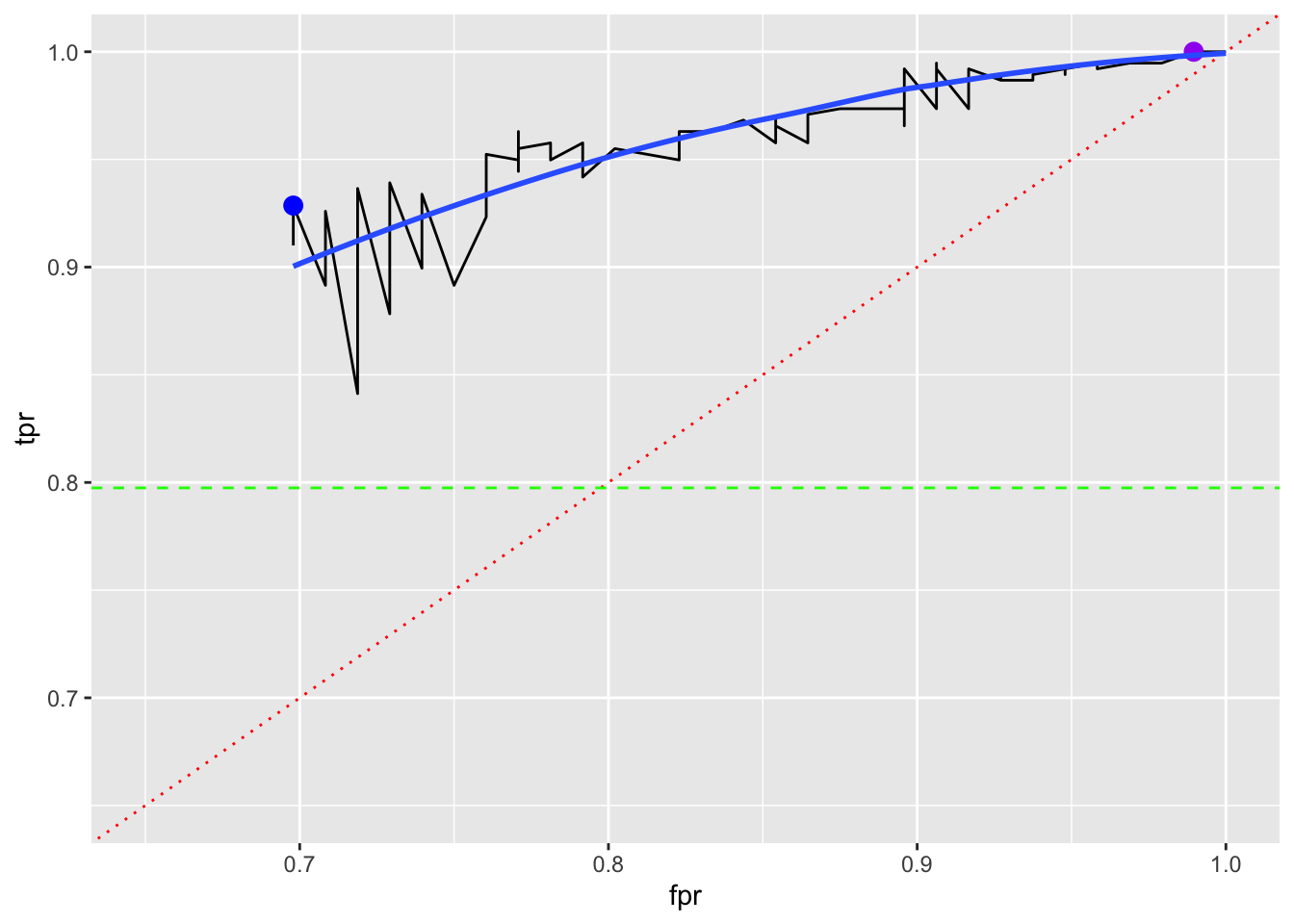

Let’s look at an ROC curve and see if it helps identify a “best” K based on FPR and TPR.

- Can we see an elbow in the black line?

- What do the red and green lines represent on this graph?

- How do we interpret the black line?

Show code

Yhat

Ytest 0 1

0 22 74

1 16 362Show code

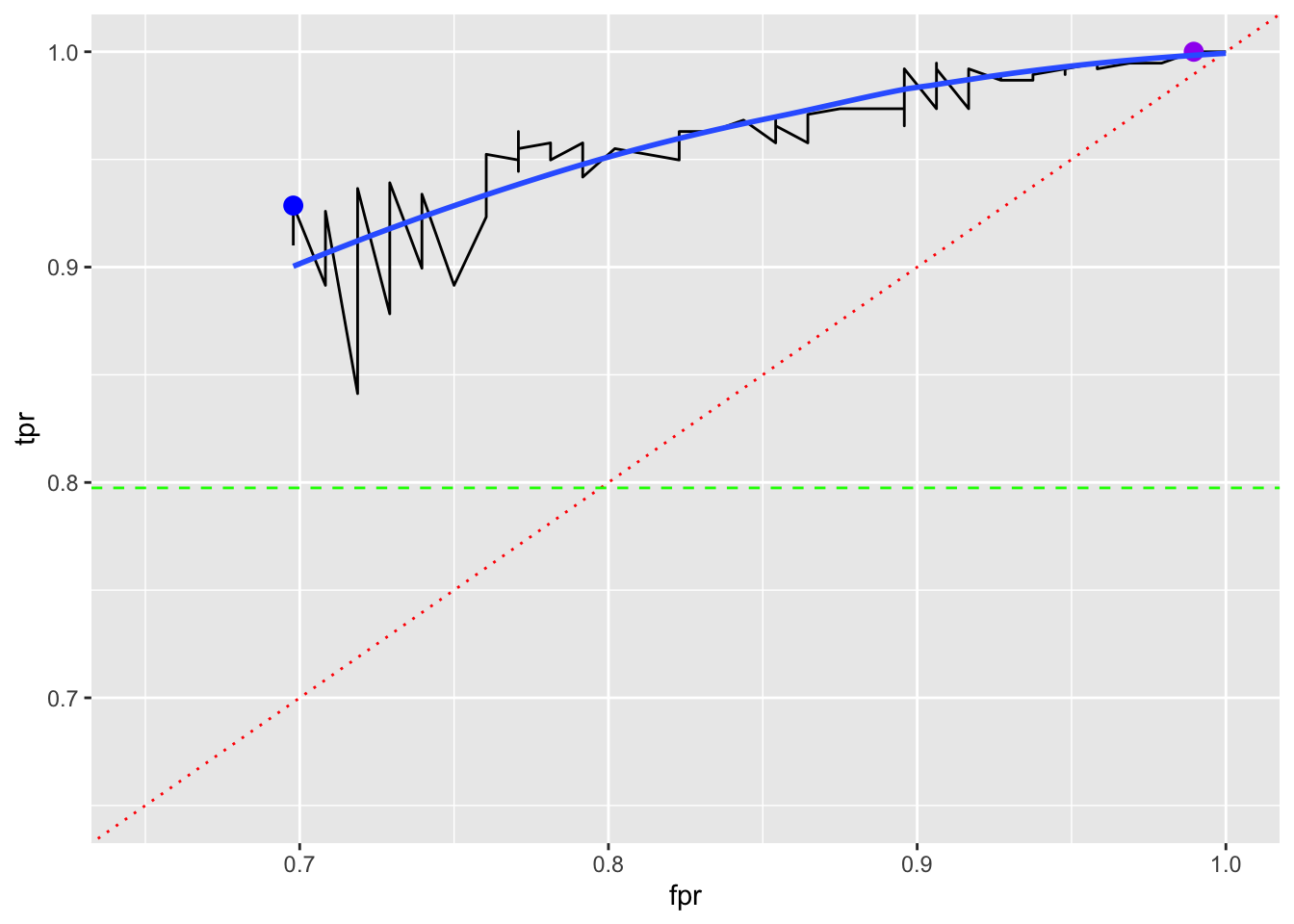

If we believe the point with the lowest FPR and then the highest TPR is the best, we can find the \(k\) for it.

# A tibble: 1 × 3

k fpr tpr

<int> <dbl> <dbl>

1 12 0.698 0.929If we believe the point with the highest TPR and then the lowest FPR is the best, we can find the \(k\) for it.

# A tibble: 17 × 3

k fpr tpr

<int> <dbl> <dbl>

1 84 0.990 1

2 85 1 1

3 86 1 1

4 87 1 1

5 88 1 1

6 89 1 1

7 90 1 1

8 91 1 1

9 92 1 1

10 93 1 1

11 94 1 1

12 95 1 1

13 96 1 1

14 97 1 1

15 98 1 1

16 99 1 1

17 100 1 1Plotting those points with a LOESS smoother shows a range of options with no clear “best”.

Show code

Yhat

Ytest 0 1

0 22 74

1 15 363Show code

ggplot(tibble(tpr, fpr), aes(x = fpr, y = tpr)) +

geom_line() +

geom_abline(color = "red", lty = 3) +

ylim(0.65, 1) + xlim(0.65, 1) +

geom_hline(yintercept = mean(as.numeric(fg$success)-1), color = "green", lty = 2) +

geom_point(data = filter(tibble(k = seq_along(fpr), tpr, fpr), k == 12),

size = 3, color = "blue") +

geom_point(data = filter(tibble(k = seq_along(fpr), tpr, fpr), k == 84),

size = 3, color = "purple") +

geom_smooth(se = FALSE)

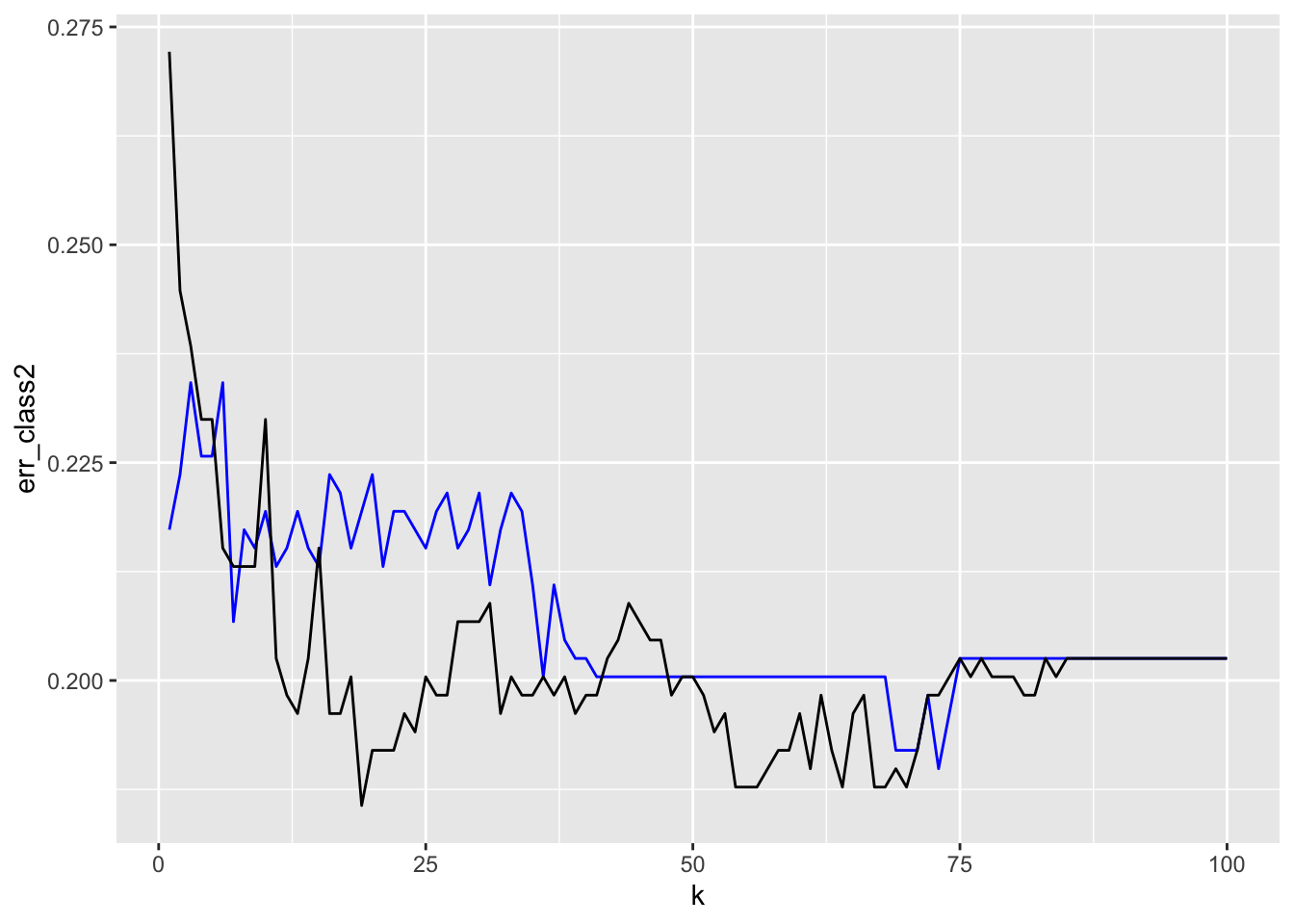

4.7.2 Let’s Try with a Reduced Model with Just yards.

-

knn()requires at least two variables so we can add a dummy variable of 1.

Show code

fg1 <- tibble(dummy = rep(1, nrow(fg)), yards = fg$yards)

err_class2 <- tpr2 <- fpr2 <- rep(1:100)

Xtrain <- fg1[Z,]

Xtest <- fg1[-Z,]

for (k in 1:100) {

Yhat <- knn(Xtrain, Xtest, Ytrain, k = k)

err_class2[k] <- mean(Yhat != Ytest) # The prediction is not correct

tpr2[k] <- sum(Yhat == 1 & Ytest == 1) / sum(Ytest == 1) # TP/P

fpr2[k] <- sum(Yhat == 1 & Ytest == 0) / sum(Ytest == 0) # FP/N

}

ggplot(tibble(err_class2, k = 1:100), aes(x = k, y = err_class2)) +

geom_line(color = "blue") +

geom_line(data = tibble(err_class, k = 1:100), mapping = aes(x = k, y = err_class))

[1] 0.814346[1] 0.8101266ROC Curve

Show code

ggplot(tibble(tpr, fpr), aes(x = fpr, y = tpr)) +

geom_line() +

geom_abline(color = "red", lty = 3) +

ylim(0.65, 1) + xlim(0.65, 1) +

geom_hline(yintercept = mean(as.numeric(fg$success)-1), color = "green", lty = 2) +

geom_line(data = tibble(tpr2, fpr2), mapping = aes(x = fpr2, y = tpr2),

color = "blue")

The points are Not in the order of the \(k\).

How would you interpret this plot in comparing the two models?

Different models but the results are close although the Full model gives access to lower FPR.

4.7.3 Summary of KNN

A non-parametric classification method useful for predicting a categorical outcome based on multiple predictors.

4.7.4 Foreshadowing Logistic Regression

- In contrast to KNN, Logistic Regression is a parametric method for classification we will discuss next.

Show code

1

2.055085 Use the argument type = "response" to get the prediction in the \(Y\) space or as a probability.

1

0.9260122 1

0.8572845 Note we can use summary here as well to check the “significance” of the predictors.

Call:

glm(formula = success ~ yards + week, family = "binomial", data = fg)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 6.29969 0.51105 12.327 <2e-16 ***

yards -0.11274 0.01076 -10.477 <2e-16 ***

week -0.05243 0.01832 -2.862 0.0042 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 955.38 on 947 degrees of freedom

Residual deviance: 808.86 on 945 degrees of freedom

AIC: 814.86

Number of Fisher Scoring iterations: 5Now let’s look at Logistic Regression in more detail.

4.8 Logistic Regression (Chap 4.3)

4.8.1 Logistics Regression is Useful for a Binary Response

Logistic Regression is a classification method for categorical response data.

- When we had a categorical predictor, we used \(k-1\) dummy variables of 0 and 1 for \(k\) category levels.

For a categorical response variable, OLS will not work for \(k>2\).

We could use dummy variables and fit \(k-1\) logistics regression equations but this can be onerous.

- This is why logistics regression is mostly used for \(k =2\), binary classification, and other methods are more popular for \(k>2\).

- KNN, or Linear or Quadratic Discriminant analysis as well as decision trees or Support Vector Machines (SVM) are more popular for \(k>2\).

- With \(k=2\) we need just one dummy variable.

We could fit a linear regression using OLS for \(k=2\), so \(Y = 0 \text{ or } 1\).

- OLS will calculate residuals and sum the squared differences.

- However, as we saw in Figure 4.1, the OLS prediction can be unbounded and values of \(\hat{y} <0\) or \(\hat{y} >1\) do not make sense.

In the binary case of logistic regression, we are not trying to predict the category level directly, e.g., 0 or 1, but predict the probability or likelihood of a Success, a 1.

- A Bernoulli trial is situation with a binary outcome \(Y\) for each trial. The value of the outcome (1 or 0) is a random variable where it has the value 1 (a success) with probability \(p\).

- These random variables are described by a Bernoulli Distribution where the \(E[Y] = P(Y=1) = p\).

- The Bernoulli distribution is a subfamily of the Binomial Distribution.

- The Binomial Distribution describes the number of successes in \(n\) independent Bernoulli trials where each success has probability \(p\).

In Logistic Regression with \(k=2\), the response is a Bernoulli random variable with parameter \(p\) as the probability of success, which is the same as Binomial\((n=1, p)\).

For \(0<\hat{y} <1\), if we we think of \(Y\) as having a Bernoulli distribution, it can be shown that \(\hat{y}\) is the expected value of \(Y\) or \(E[Y] = P(y =1)\).

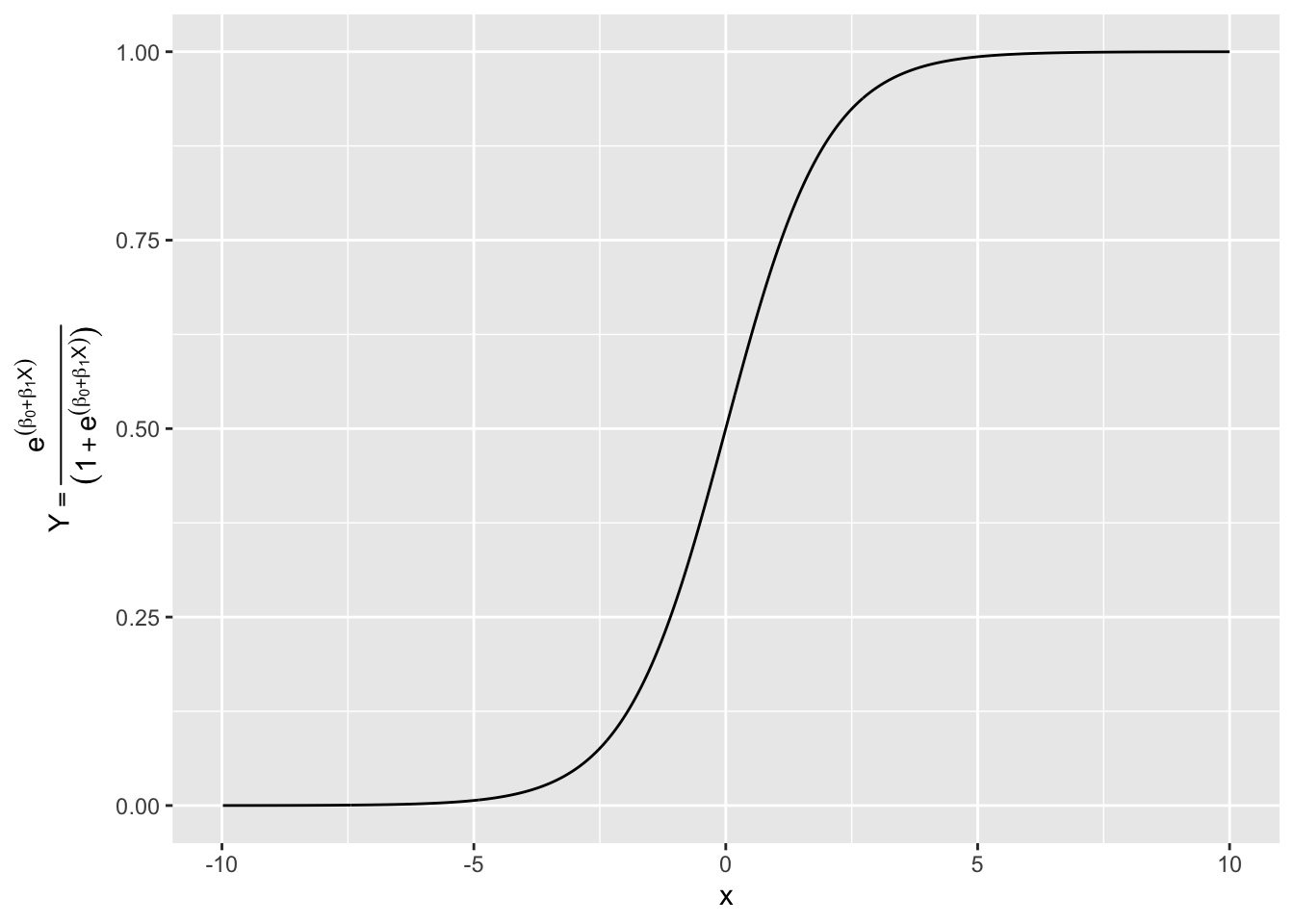

4.8.2 Estimating Probabilities with the Logistic Function

We want to use Logistic Regression to estimate the probability of a success.

Let \(p(X)\) be a function denoting the conditional probability of a success \(Y=1\) given the values of a predictor \(X\), or

\[ p(X) = P(Y=1|X) \]

If we try to fit \(P(X) = \beta_0 + \beta_1 X\) we get the unbounded results in Figure 4.1 (where the \(Y\) axis represents a probability).

To estimate probabilities, we need a function that is bounded between 0 and 1. While many functions could do this, in Logistic Regression we use the Logistic Function.

- The Logistic Function (aka a Logistic Curve or an S-curve) has the following formula.

\[ p(X) = \frac{e^{\beta_0 + \beta_1X}}{1 + e^{\beta_0 + \beta_1X}} \tag{4.3}\]

- This function is always positive and the difference between the top and bottom of the ratio is 1 so \(0<p(X)<1\).

With a little algebra Equation 4.3 gives us

\[ \frac{p(X)}{1-p(X)} = e^{\beta_0 + \beta_1X} \tag{4.4}\]

Equation 4.4 is known as the Odds or Odds Ratio as it is the ratio of the odds (the probabilities).

- Note it can range from \(0 \rightarrow \infty\).

If we take the log() of both sides of Equation 4.4, we get

\[ \ln{\frac{p(X)}{1-p(X)}} = \beta_0 + \beta_1X \tag{4.5}\]

- The left side of Equation 4.5 is known as the logit or log(odds).

- This can range from \(-\infty\) to \(\infty\).

- Note that the right side of Equation 4.5 is linear in \(X\) as we saw in OLS.

- Here, increasing \(X\) by one unit changes the log odds by \(\beta_1\).

- This means the amount that \(p(X)\) changes due to a one-unit change in \(X\) depends on the current value of \(X\).

If we solve the logistic regression using Equation 4.5, we can do the reverse algebra to get \(p(Y=1\)) as

\[ \begin{align} \ln{\frac{p(X)}{1-p(X)}} &= \beta_0 + \beta_1X &\implies \\ \frac{p(X)}{1-p(X)} &= e^{\beta_0 + \beta_1X} &\implies \\ p(X) &= (1-p(X))e^{\beta_0 + \beta_1X} &\implies \\ p(X) &= e^{\beta_0 + \beta_1X} - p(X)e^{\beta_0 + \beta_1X} &\implies \\ p(X) + p(X)e^{\beta_0 + \beta_1X} &= e^{\beta_0 + \beta_1X} &\implies \\ p(X)(1 + e^{\beta_0 + \beta_1X}) &= e^{\beta_0 + \beta_1X} &\implies \\ p(X) &= \frac{e^{\beta_0 + \beta_1X}} {(1 + e^{\beta_0 + \beta_1X})} \end{align} \] which matches Equation 4.3.

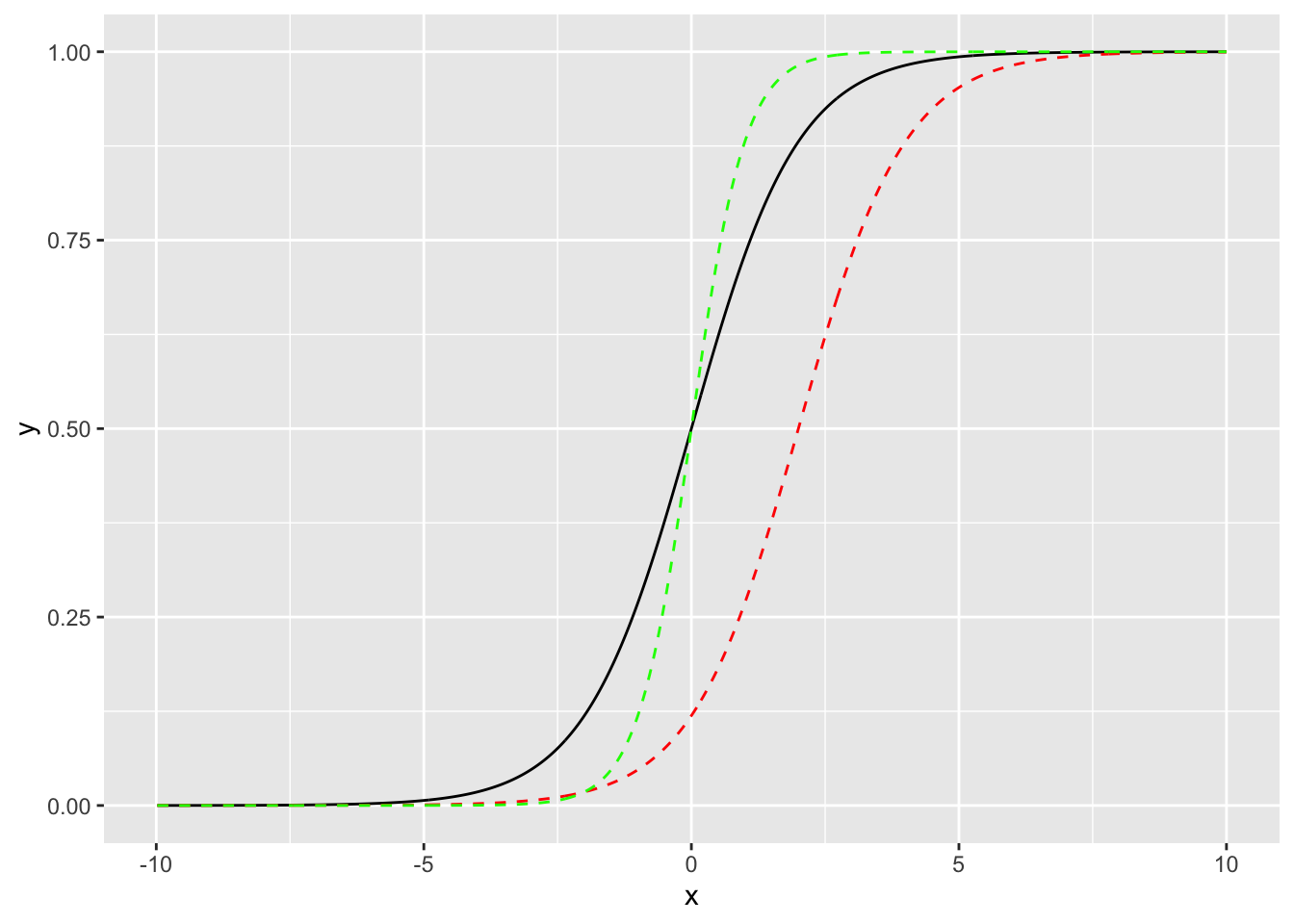

4.8.3 Examples of Logistic Function Curves

Show code

What happens if we change the intercept to \(\beta_0 = 2\)

Show code

Note the shift to the right. The \(\beta_0\) represents the logit of the probability when \(x=0\).

What happens if we change the slope to \(\beta_1 = 2.\)

Show code

To explore the effect of changes in the \(\beta_j\) on the shape of the logistic curve, try the app at Explore the Logistic Curve.

4.8.4 Why the Logit Function?

We can see the logistic curves have some nice properties.

- Our curve gives reasonable predictions when we are close to 0 and 1.

- When \(Y\) is close to 0, there is very little change in the curve.

- When \(Y\) is close to 1, there is very little change in the curve.

We can describe this mathematically (using some calculus terms) where the derivative of \(Y\) with respect to \(X\) (or the change in \(Y\) as \(X\) changes by a small amount) has the following relationship (\(k\) is a proportionality constant for scale).

\[ \frac{dY}{dX} =k * Y (1-Y) \]

This relationship means whenever \(Y\) is close to 0 or 1, the change in \(Y\) is small for small changes in \(X\).

One can use methods from calculus to figure out what function \(Y\) has this derivative.

- We can solve this differential equation for \(Y\), which is \(p(X)\), so we know \(0<Y<1\).

- Let’s separate the variables and integrate each side and then manipulate with algebra to get

\[ \begin{align} \int \frac{dY}{Y(1 - Y)} &= \int k dX \\ \int\left(\frac{1}{Y} + \frac{1}{1-Y}\right)dY &=kX + c \\ \int\frac{dY}{Y} + \int\frac{dY}{1-Y} &=kX + c \\ \ln(Y) - \ln(1-Y) &=kX + c \quad \text{we can drop the |Y| as we know Y is in [0,1]}\\ \ln\left(\frac{Y}{1-Y}\right) &= kX + c \quad \text{this is the logit or log odds of the probability Y}\\ &\text{Let's solve for Y} \\ \frac{Y}{1-Y} &= e^{kX + c} \\ Y &= e^{kX + c} - Ye^{kX + c} \\ Y (1 + e^{kX + c}) &= e^{kX + c} \\ Y &= \frac{e^{kX + c}} {(1 + e^{kX + c})} \end{align} \]

- Rearrange the exponent term and use \(\beta_0\) for \(c\) and \(\beta_1\) for \(k\) and we have Equation 4.3.

Taking the log of Equation 4.3 now makes the logit a linear function of the \(X_i\) as in Equation 4.5, so we can estimate our parameters.

4.8.5 Multiple Logistic Regression

Equation 4.3 can be generalized for multiple predictors as

\[ p(X) = \frac{e^{\beta_0 + \beta_1X + \ldots, \beta_pX_p}}{1 + e^{\beta_0 + \beta_1X + \ldots, \beta_pX_p}} \tag{4.6}\]

To interpret the coefficients

- \(\beta_0\): We estimate \(\widehat{E[Y]} = \hat{p} = \frac{e^{\beta_0}}{1+e^{\beta_0}}\) when all \(X_i = 0\) or

\[ \hat{\beta}_0 = \ln\frac{\hat{p}}{1-\hat{p}} \quad \text{the logit of }\hat{p} \text{ when all }X_i = 0 \]

- \(\beta_i\): The larger the value of an individual \(\beta_i\) the greater the effect of the \(X_i\) on the expected response, either positive or negative, all other variables held the same.

4.8.6 Estimating \(\hat{p}\) Using Maximum Likelihood

We could try to use OLS to minimize the Mean Squared Error for the residuals from the logistic curve.

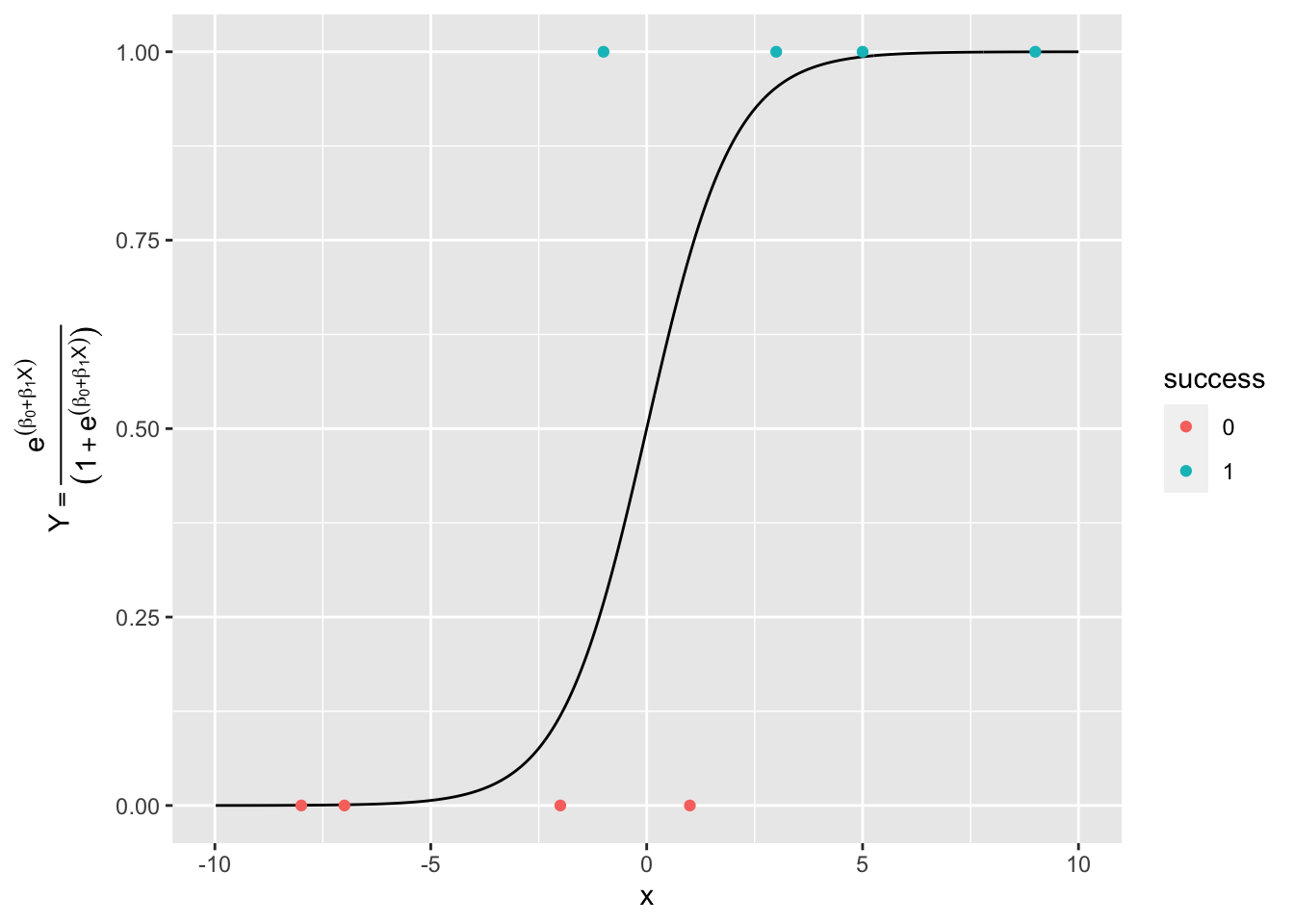

Consider Figure 4.2 with some sample points on it. This shows the probability space for \(Y\) for our data.

Show code

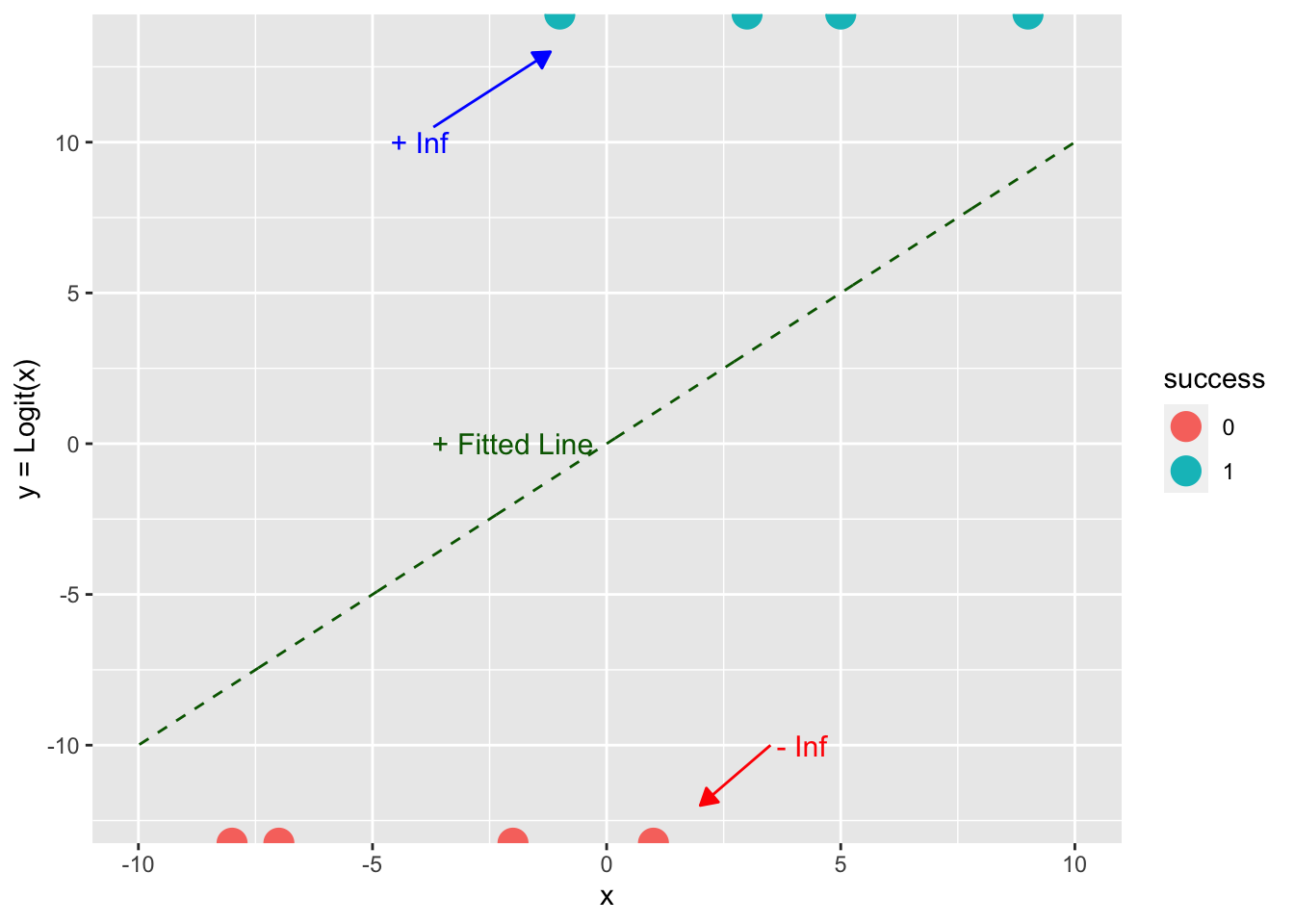

Let’s apply the logit transform to our data to get to the log-odds or logit view of \(Y\).

- What is the value of \(\ln(1/(1-1)\) or \(\ln(0/(1-0)\)?

Show code

df_example$logit_y <- log(df_example$y/(1-df_example$y))

df$logit_line <- log(df$y/(1-df$y))

ggplot() +

geom_line(df, mapping = aes(x, logit_line), color = "darkgreen", lty = 2) +

geom_point(df_example, mapping = aes(x, logit_y, color = success), size = 5) +

annotate("text", x = -2, y = 0, label = "+ Fitted Line", size = 4, color = "darkgreen") +

annotate("text", x = -4, y = 10, label = "+ Inf", size = 4, color = "blue") +

annotate("text", x = 4, y = -10, label = " - Inf", size = 4, color = "red") +

geom_segment(aes(x = 3.5, y = -10, xend = 2, yend = -12), color = "red",

arrow = arrow(ends = "last", type = "closed",

length = unit(.1, "inches"))) +

geom_segment(aes(x = -3.7, y = 10.5, xend = -1.2, yend = 13), color = "blue",

arrow = arrow(ends = "last", type = "closed",

length = unit(.1, "inches"))) +

ylab("y = Logit(x)")

What does this mean for the residuals??

- All the \(Y = \text{Logit}(X)\) values are \(\pm\,\infty\) so we can’t calculate \((\hat{y}_i - y)^2.\)

Thus we will use Maximum Likelihood Estimation.

For any set of \(\beta_0, \beta_1, \ldots, \beta_p\), define the likelihood as:

\[ \text{Likelihood} = \text{Probability of Observing the original } y_i \text{ with their values of 0 or 1}. \]

We assume the \(y_i\) are independent, so we can calculate the individual probabilities and multiply them together.

- This gives us a Likelihood Function for our estimates.

\[ \mathrm{L}(\beta_0, \beta_1, \ldots, \beta_p) = \prod_{i = 1}^{n} \underbrace{p_i^{y_i}}_{\text{Prob }y_i=1}\underbrace{(1-p_i)^{1-y_i}}_{\text{ Prob }y_i = 0} \tag{4.7}\]

Note what happens for \(y_i = 1\) and \(y_i =0\).

We can rewrite Equation 4.7 using Equation 4.6 for the logistic function as

\[ \begin{align} \mathrm{L}(\beta_0, \beta_1, \ldots, \beta_p) &= \prod_{i = 1}^{n} \underbrace{p_i^{y_i}}_{\text{Prob }y_i=1}\underbrace{(1-p_i^{1-y_i})}_{\text{ Prob }y_i = 0} \\ \mathrm{L}(\beta_0, \beta_1, \ldots, \beta_p) &= \prod_{i = 1}^{n}\left( \frac{e^{\beta_0 + \beta_1X_1 + \ldots, + \beta_pX_p}} {(1 + e^{\beta_0 + \beta_1X_1 + \ldots, + \beta_pX_p})}\right)^{y_i} \left( \frac{1}{(1 + e^{\beta_0 + \beta_1X_1 + \ldots, + \beta_pX_p})}\right)^{1-y_i} \end{align} \tag{4.8}\]

R will try to maximize Equation 4.8 by finding the values for the \(\beta\)s that are most consistent with the observed values.

- R takes the logit of this expression and estimates \(\beta_j\) parameters for an initial fitted line in the logit space (Figure 4.4).

- R then projects the \(x_i\) onto the fitted line in logit space to find the \(\text{Logit}(\hat{y}_i)\) on the \(\text{Logit}(Y)\) axis.

- R then transforms the \(\text{Logit}(\hat{y}_i)\) values back to the probability space using Equation 4.3 to calculate likelihood value using Equation 4.8.

- There is no closed-form simple solution to Equation 4.8 as in OLS so software packages use an iterative method to converge on the optimal solution.

- R then updates the estimated \(\beta_j\) parameters of the fitted line in the logit space, in a sense “rotating the line” in Figure 4.4, doing the projections onto the new line to get the new \(\text{Logit}(\hat{y}_i)\), transforming to probabilities to calculate the new Likelihood value.

- R keeps rotating the line and calculating the likelihood function until it converges (reaches a threshold for minimal change in the likelihood.

- The maximum likelihood function is convex so the iterative method uses a weighted form of the linear regression solutions to calculate the next step in estimating the \(\beta_j\)s which means it usually converges in a few iterations.

- R then provides the estimates for the set of \(\beta_j\) that gives the maximum likelihood and calculates the deviances.

4.8.7 Example of Using R for Logistic Regression

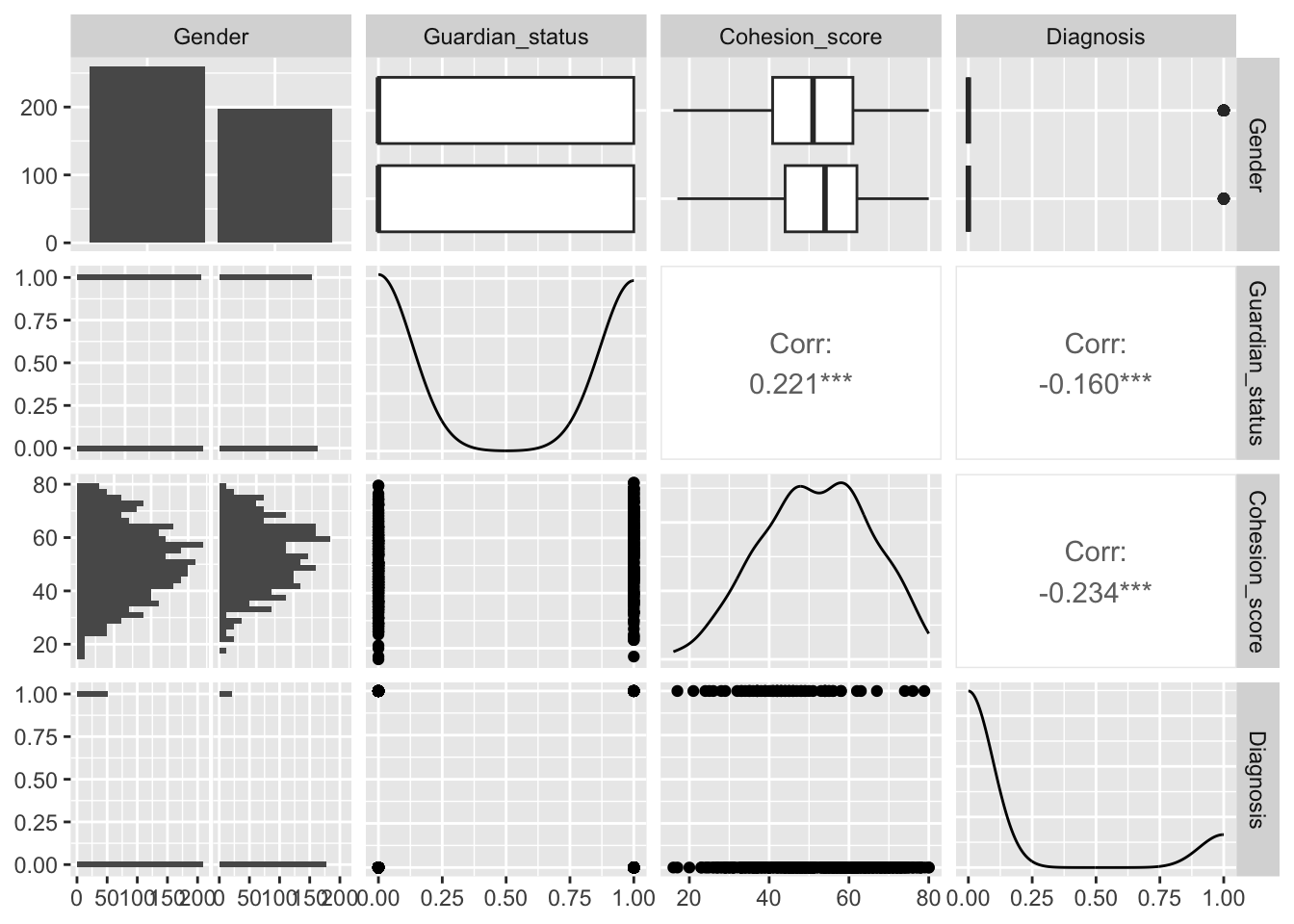

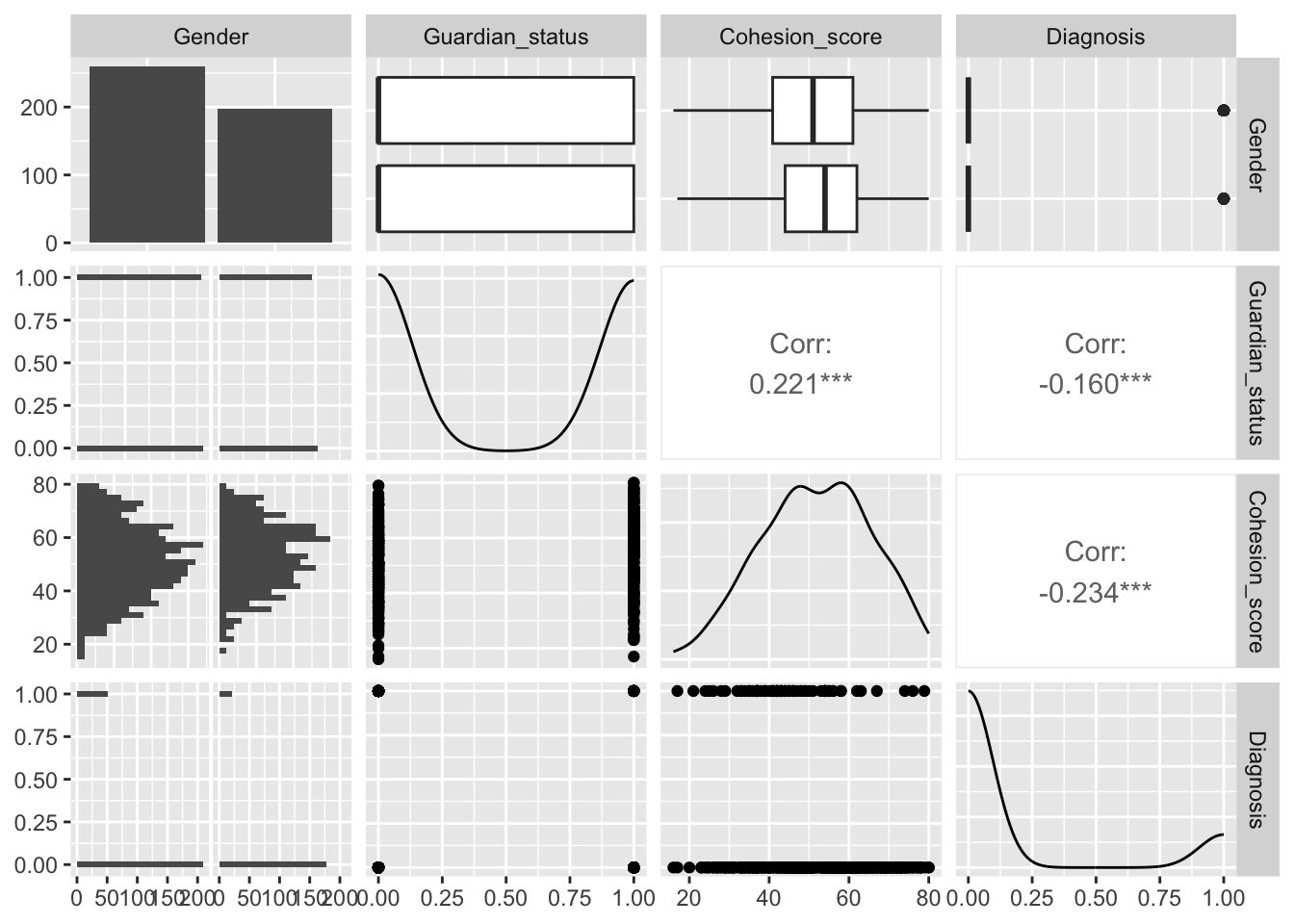

Professor Baron has a data set from a study of 1,594 high school students with values for socio-economic and family factors that may be associated with stress and depression.

| Column | Variable | Notes |

|---|---|---|

| 1 | Participant’s identification number | |

| Predictors | ||

| 2 | Gender | Female or Male |

| 3 | Guardian status | 0 = does not live with both natural parents, 1 = lives with both natural parents |

| 4 | Community Cohesion Score: how strongly the participant is connected to the community | 16-80 |

| Response variables | ||

| 5 | Total Depression Score | 0 - 60 |

| 6 | Clinical Diagnosis of Major Depression (or the clinical sample of patients only | 1 = positive diagnosis, 0 = negative diagnosis |

We are interested in predicting the probability of a Clinical Diagnosis of Major Depression based on the possible predictors of Gender, Guardian Status, and Community Cohesion Score.

4.8.7.1 Get and Review the Data

- Read in the data from the following url (https://raw.githubusercontent.com/rressler/data_raw_courses/main/depression_data.csv).

- Use

glimpse()andsummary()to look at the data.

Show code

Rows: 3,189

Columns: 6

$ ID <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16…

$ Gender <chr> "Male", "Male", "Male", "Female", "Male", "Female", "…

$ Guardian_status <dbl> 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0,…

$ Cohesion_score <dbl> 80.0, 57.1, 36.3, 44.0, 46.0, 40.0, 60.0, 64.0, 76.0,…

$ Depression_score <dbl> 3, 17, 17, 17, 19, 31, 26, 2, 10, 30, 17, 6, 3, 3, 18…

$ Diagnosis <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, 0, NA, NA, 0, NA,… ID Gender Guardian_status Cohesion_score

Min. : 1 Length:3189 Min. :0.0000 Min. :16.00

1st Qu.: 800 Class :character 1st Qu.:0.0000 1st Qu.:48.00

Median :1597 Mode :character Median :1.0000 Median :58.00

Mean :1597 Mean :0.5177 Mean :56.24

3rd Qu.:2394 3rd Qu.:1.0000 3rd Qu.:66.00

Max. :3191 Max. :1.0000 Max. :80.00

Depression_score Diagnosis

Min. : 0.00 Min. :0.0000

1st Qu.: 8.00 1st Qu.:0.0000

Median :14.00 Median :0.0000

Mean :15.56 Mean :0.1572

3rd Qu.:21.00 3rd Qu.:0.0000

Max. :54.00 Max. :1.0000

NA's :2731 It appears not all students were diagnosed so Diagnosis has a lot of NAs. Let’s get rid of them using drop_na() for the specific variable.

Rows: 458

Columns: 6

$ ID <dbl> 10, 13, 28, 30, 40, 45, 47, 78, 79, 87, 102, 105, 117…

$ Gender <chr> "Female", "Male", "Male", "Male", "Female", "Male", "…

$ Guardian_status <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0,…

$ Cohesion_score <dbl> 69.0, 72.0, 78.0, 56.5, 28.0, 62.0, 43.0, 29.0, 54.0,…

$ Depression_score <dbl> 30, 3, 9, 12, 46, 15, 35, 54, 33, 21, 8, 46, 44, 32, …

$ Diagnosis <dbl> 0, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0,… ID Gender Guardian_status Cohesion_score

Min. : 10.0 Length:458 Min. :0.0000 Min. :16.00

1st Qu.: 884.2 Class :character 1st Qu.:0.0000 1st Qu.:42.00

Median :1720.0 Mode :character Median :0.0000 Median :51.10

Mean :1686.7 Mean :0.4913 Mean :51.78

3rd Qu.:2580.2 3rd Qu.:1.0000 3rd Qu.:62.00

Max. :3182.0 Max. :1.0000 Max. :80.00

Depression_score Diagnosis

Min. : 0.00 Min. :0.0000

1st Qu.:12.00 1st Qu.:0.0000

Median :23.00 Median :0.0000

Mean :22.86 Mean :0.1572

3rd Qu.:32.00 3rd Qu.:0.0000

Max. :54.00 Max. :1.0000 It looks like Diagnosis and Gender should be categorical so we could convert to factors but let’s wait for a few minutes.

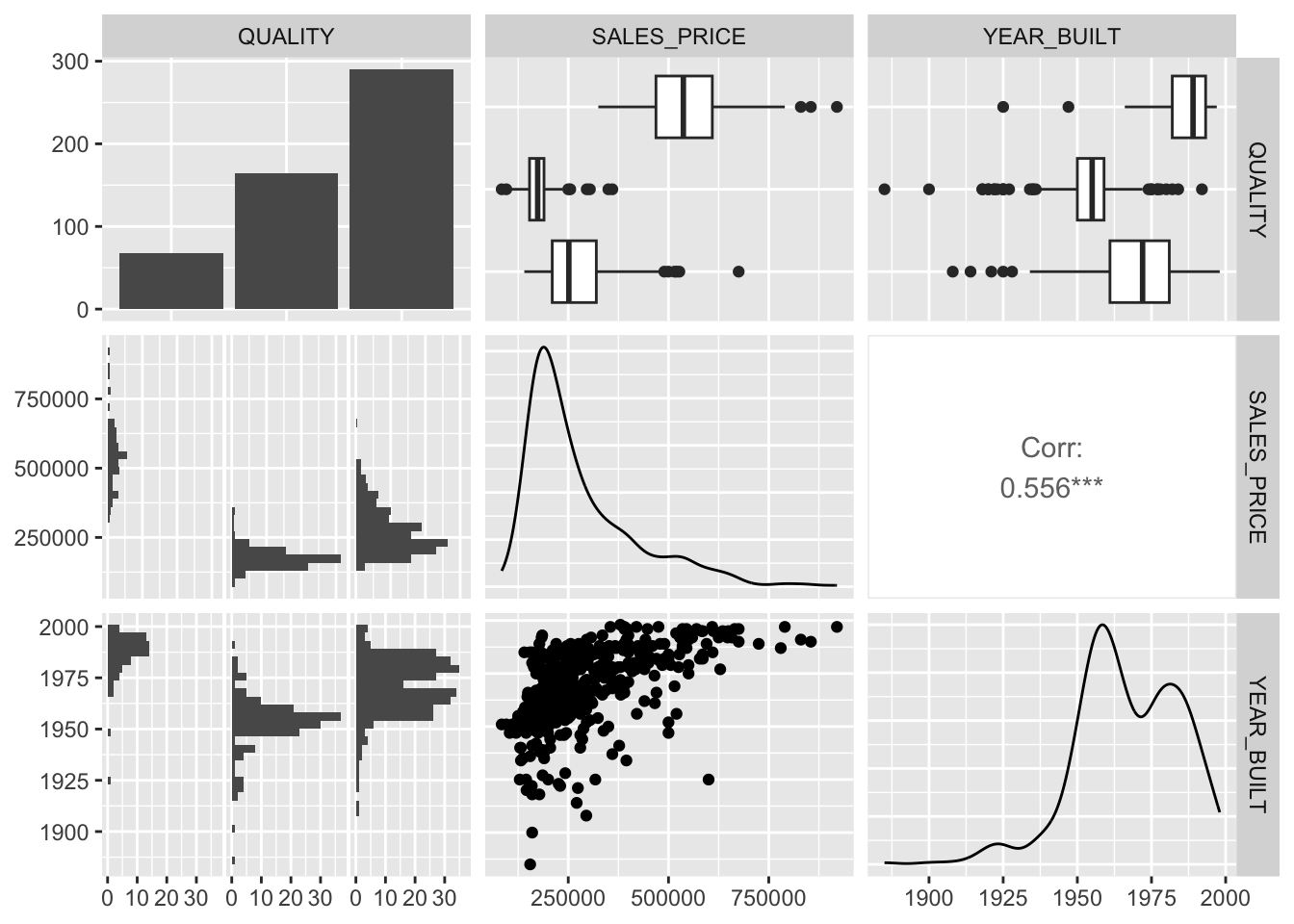

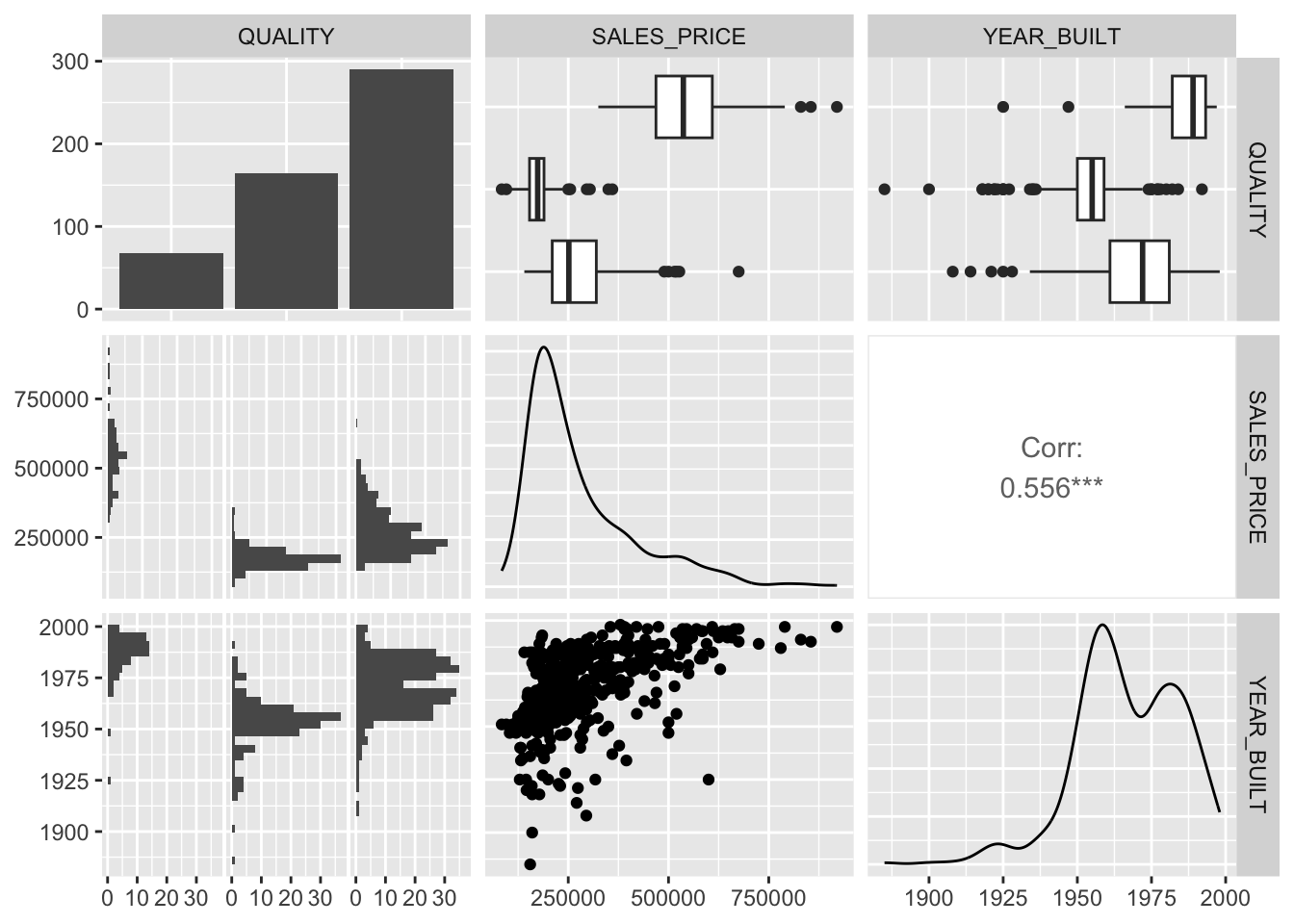

Let’s use GGally::ggpairs() to look at our four variables of interest.

4.8.7.2 Build and Interpret Results for a Logistic Regression Model in R

We can use the stats::glm() function to run a logistic regression model.

The default for the family = argument is “gaussian” which means this default version of glm() runs a standard OLS model where \(Y\) is NOT categorical, just a double, with values of 0, 1.

The summary() of a glm() output object looks similar to a lm() output object with a few differences at the bottom.

Call:

glm(formula = Diagnosis ~ Gender + Cohesion_score + Guardian_status,

data = depr)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.512988 0.065434 7.840 3.25e-14 ***

GenderMale -0.080745 0.033179 -2.434 0.0153 *

Cohesion_score -0.005386 0.001241 -4.340 1.76e-05 ***

Guardian_status -0.085443 0.033627 -2.541 0.0114 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for gaussian family taken to be 0.123044)

Null deviance: 60.681 on 457 degrees of freedom

Residual deviance: 55.862 on 454 degrees of freedom

AIC: 346.12

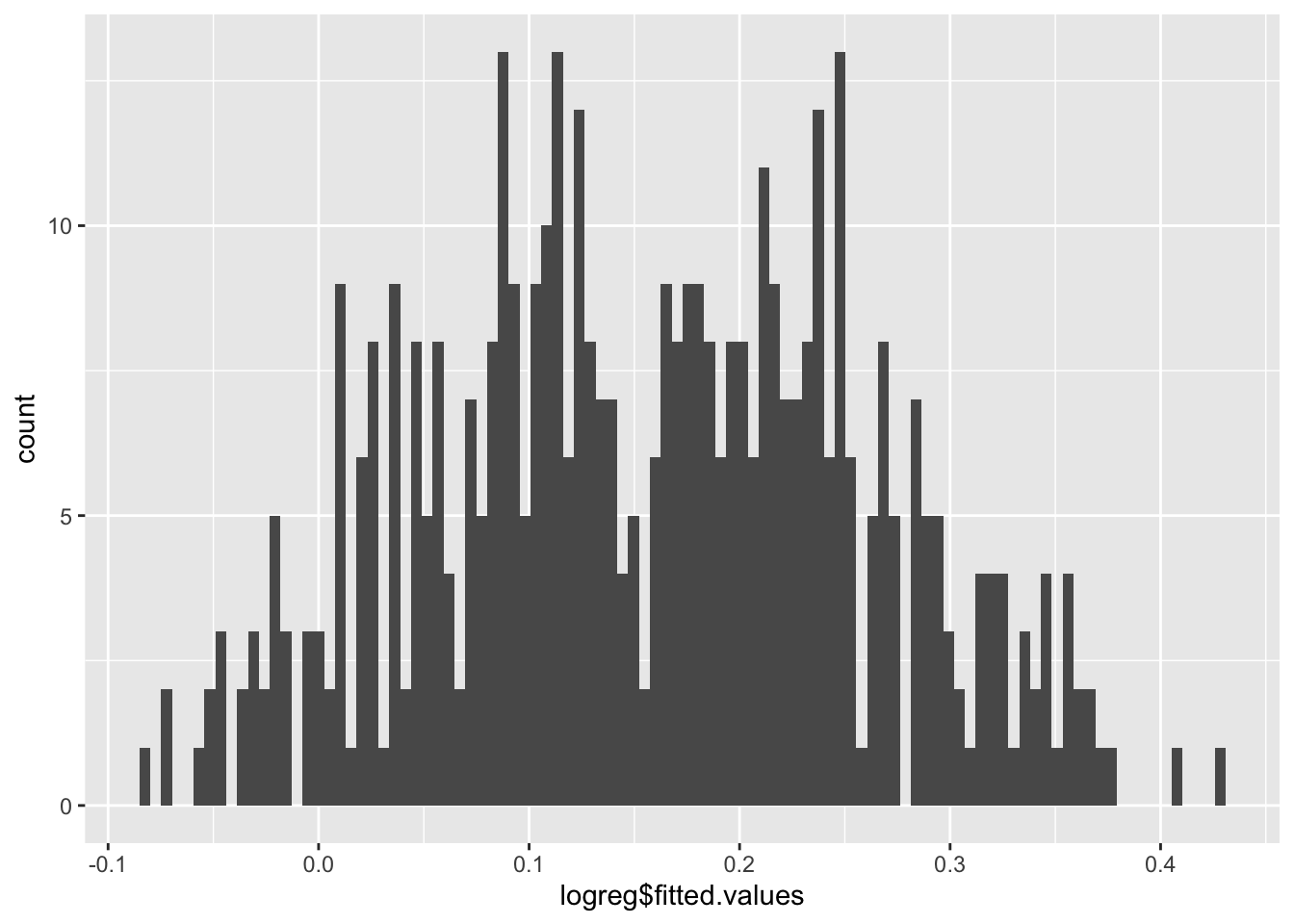

Number of Fisher Scoring iterations: 2Let’s look at a histogram of the fitted values and the plots of the OLS results.

Not very satisfactory; note the predicted probabilities where \(\hat{y}<0.\) Let’s re-run as a logistic regression.

We could convert Gender and Diagnosis to factors, but R will treat response variables with binary values (character or numeric) as factors when using Logistic Regression, so it will create dummy variables for \(Y\) behind the scenes.

Show code

Call:

glm(formula = Diagnosis ~ Gender + Cohesion_score + Guardian_status,

family = binomial, data = depr)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 1.00832 0.50478 1.998 0.04577 *

GenderMale -0.68744 0.28848 -2.383 0.01718 *

Cohesion_score -0.04358 0.01046 -4.167 3.09e-05 ***

Guardian_status -0.74835 0.28602 -2.616 0.00889 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 398.47 on 457 degrees of freedom

Residual deviance: 360.38 on 454 degrees of freedom

AIC: 368.38

Number of Fisher Scoring iterations: 5Three slopes. All are negative. How do we interpret the slopes?

-

Increasing the value of \(X\) with a negative slope will reduce the probability.

- Males have lower probability of depression than females.

- Increasing cohesion will lower the probability of depression.

- Having a parent or guardian will lower the probability of depression.

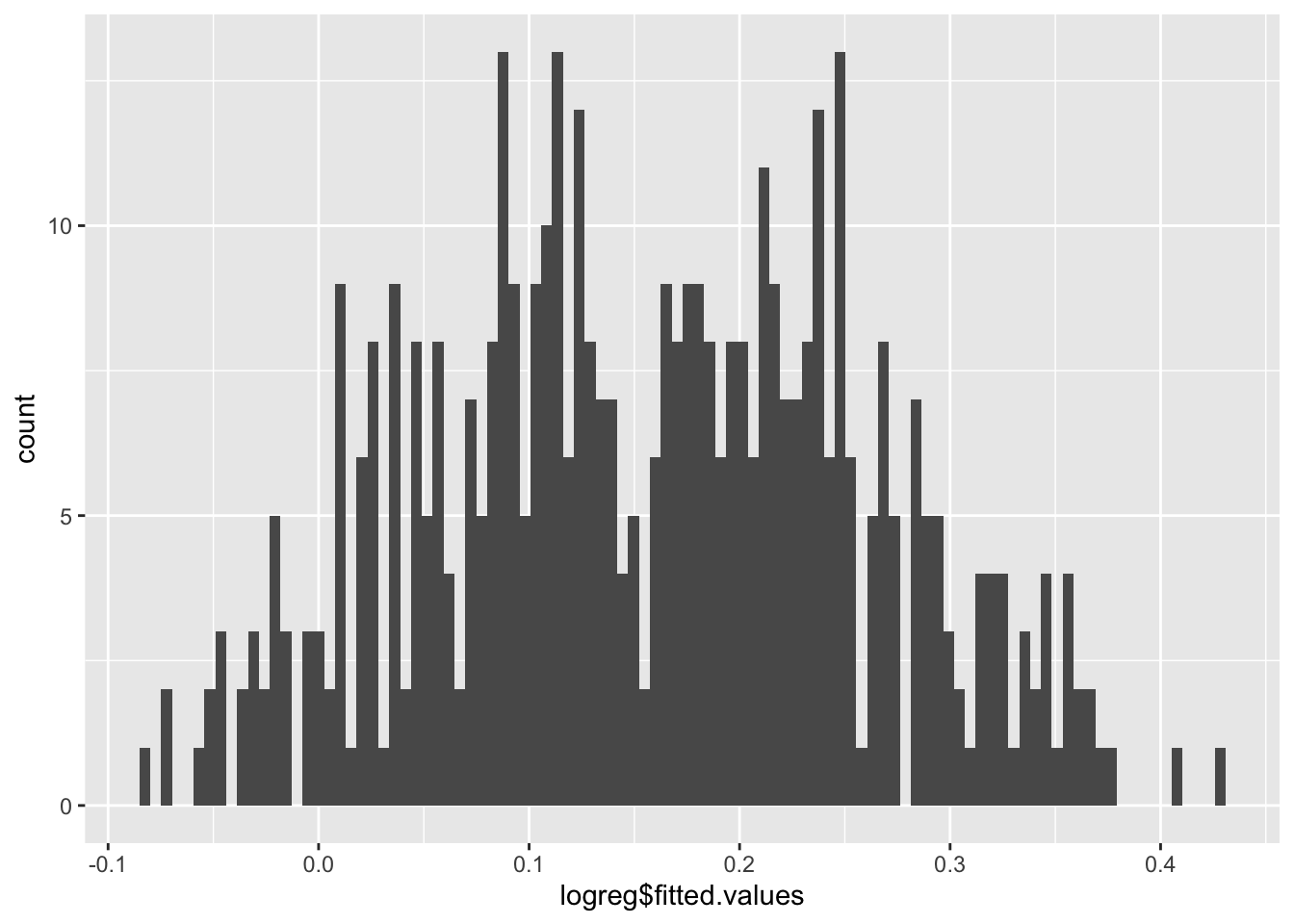

We can plot the fitted values using a histogram.

Show code

Now the predicted probabilities are \(0<\hat{y}<1.\)

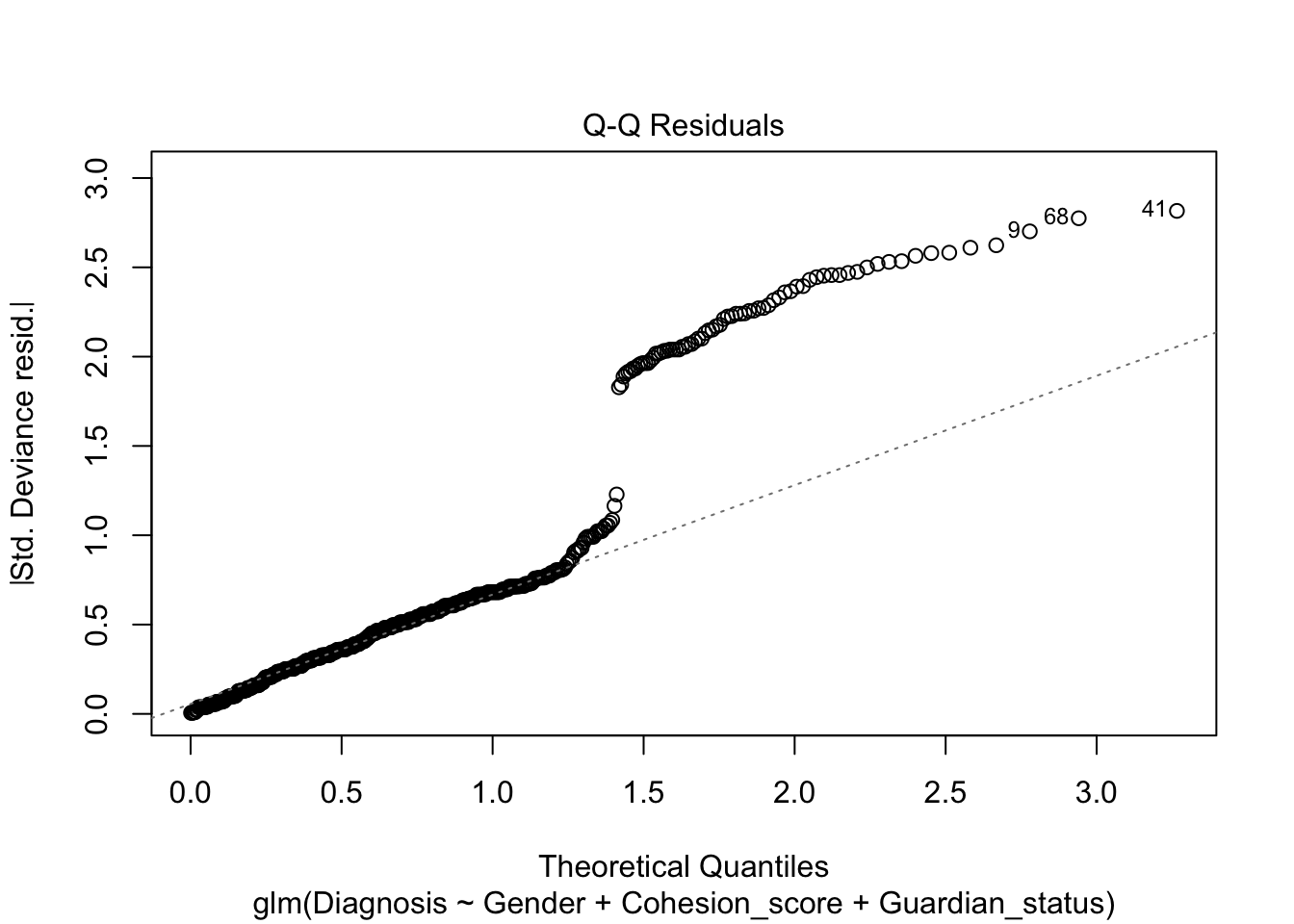

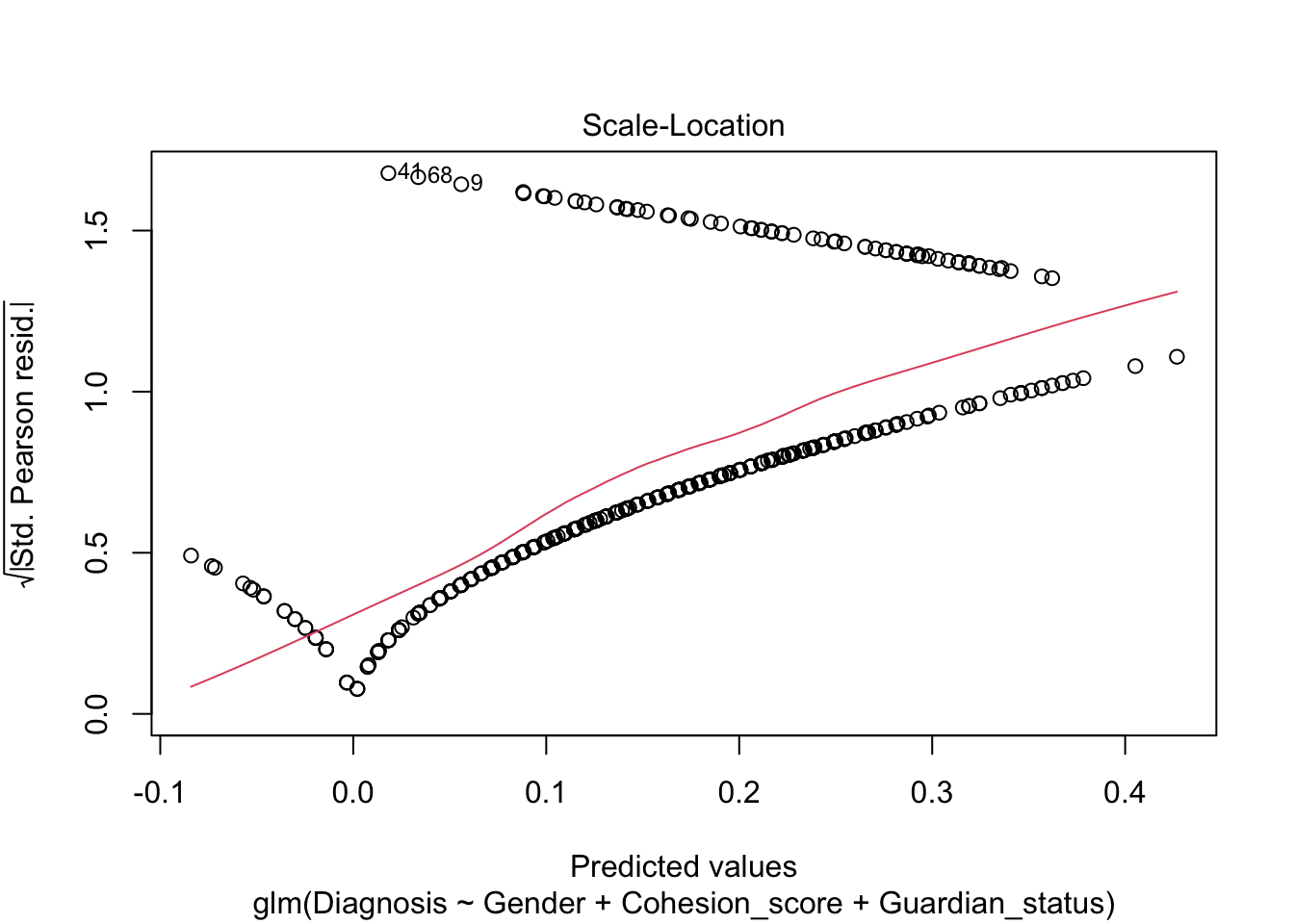

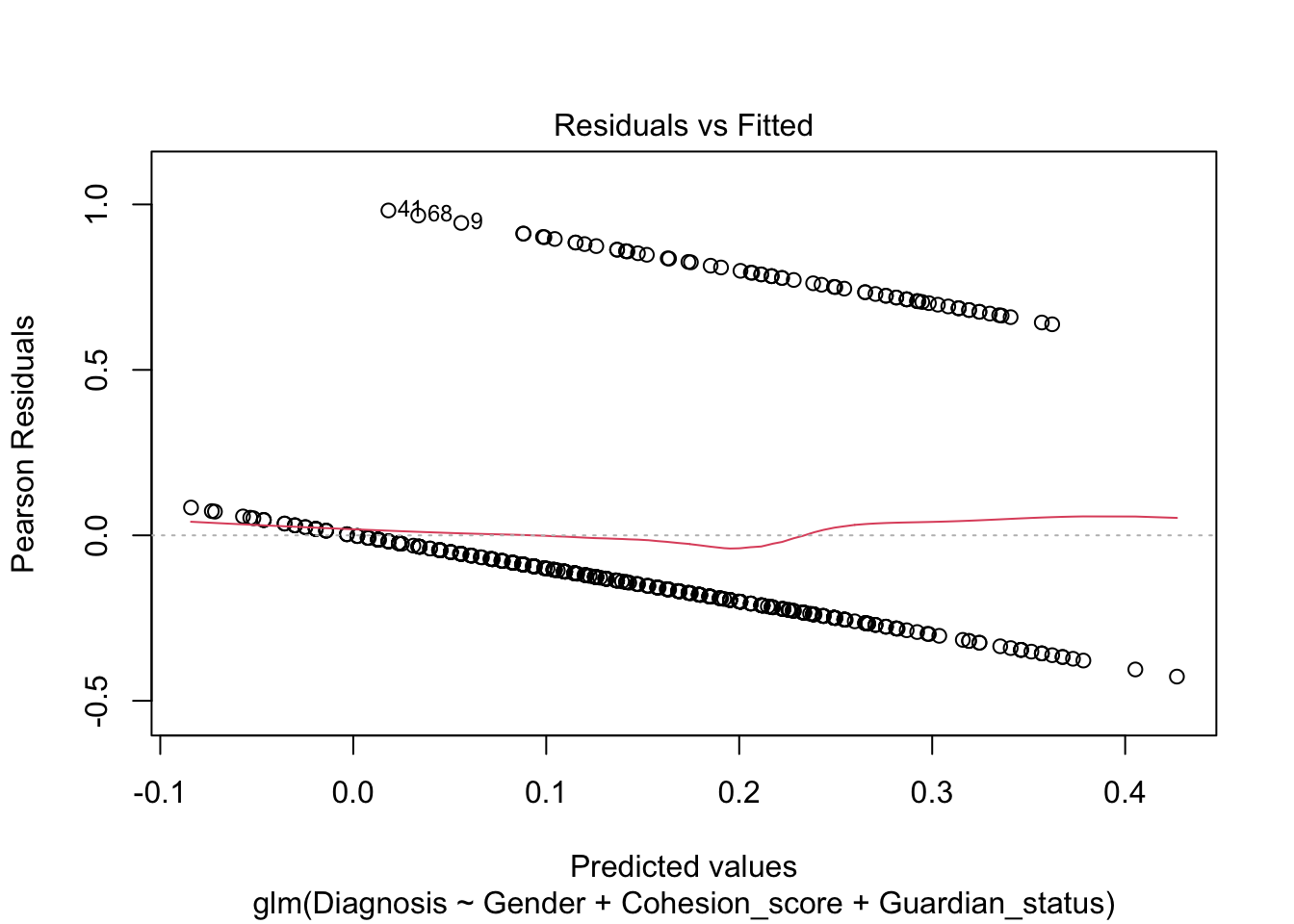

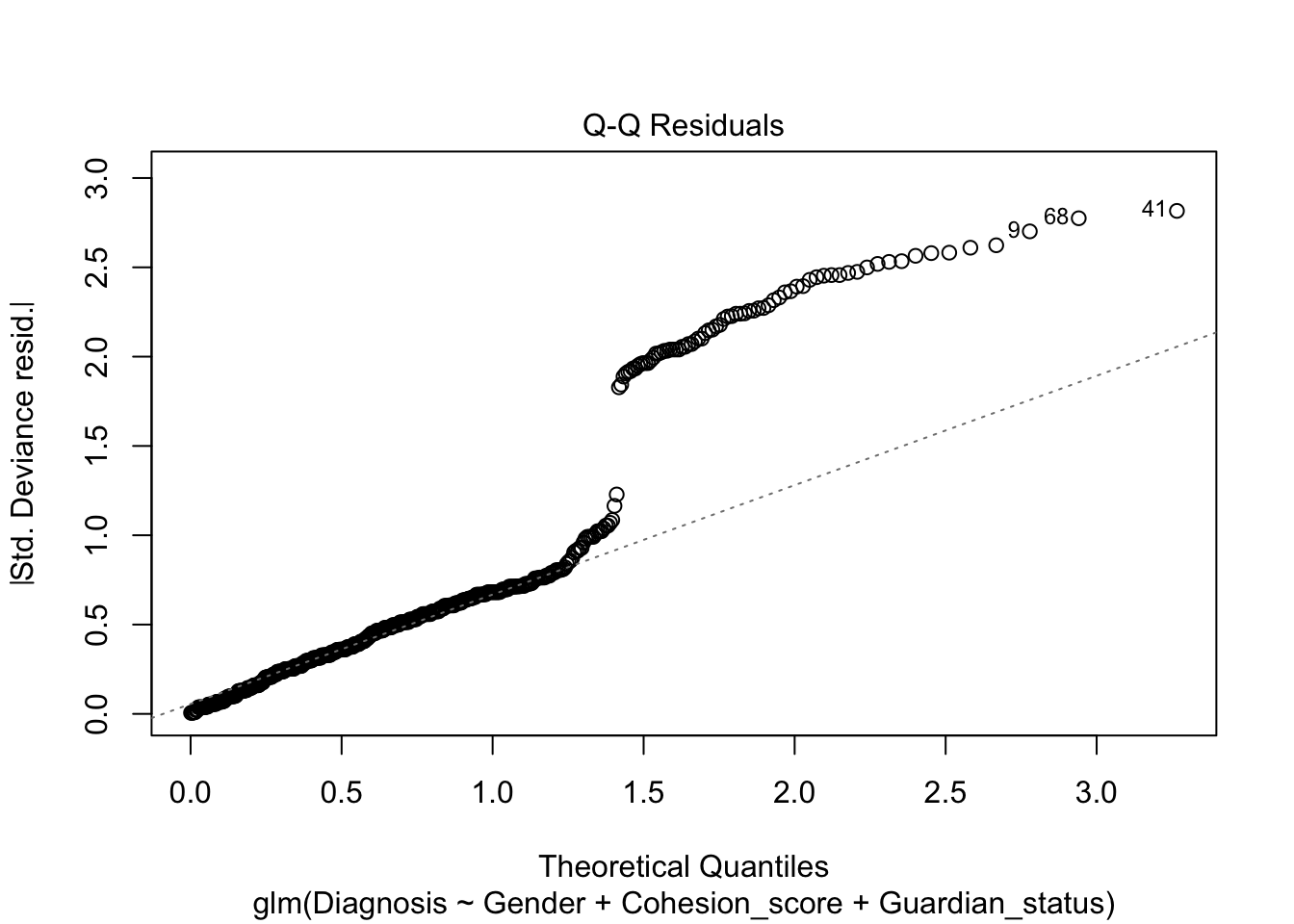

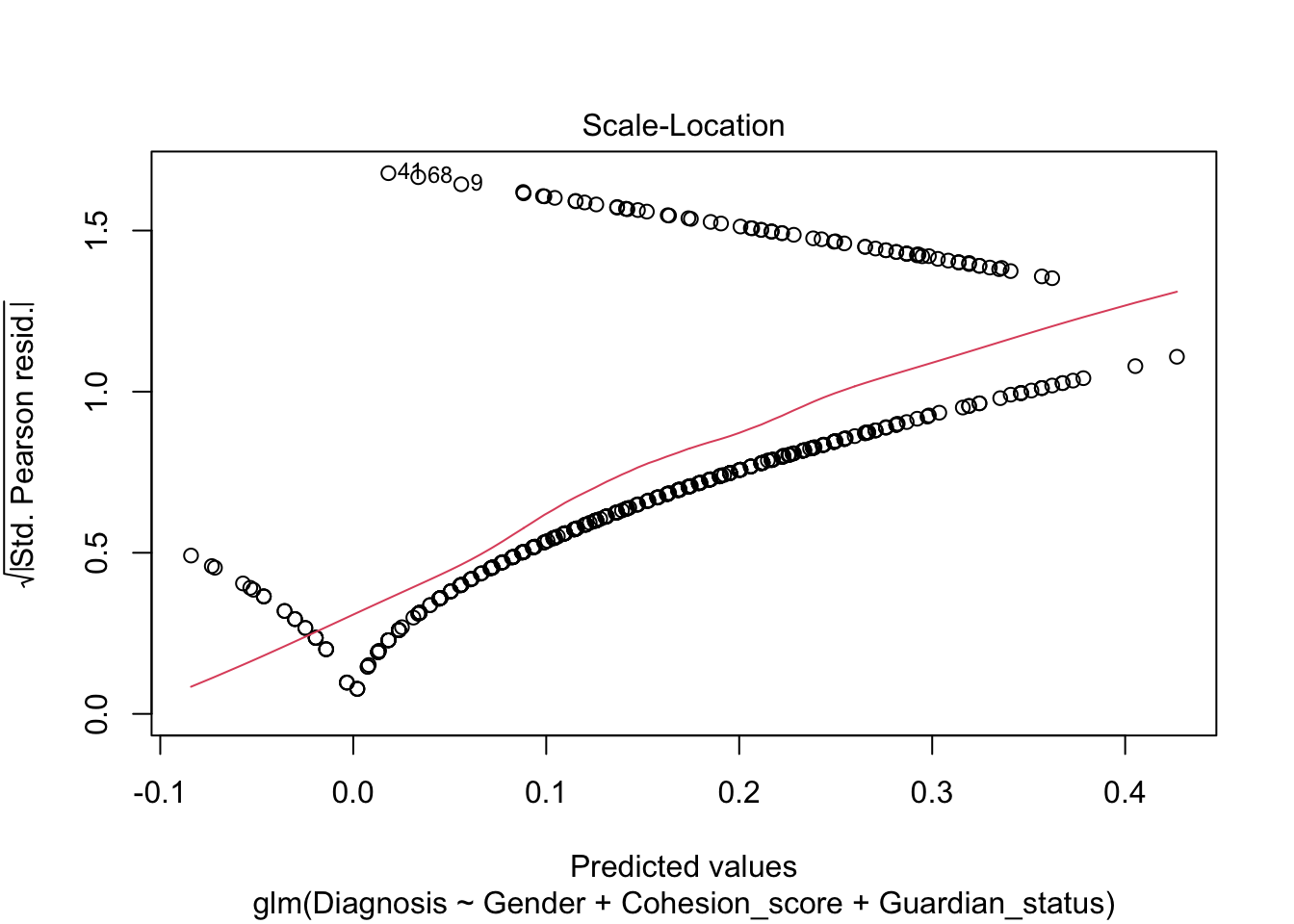

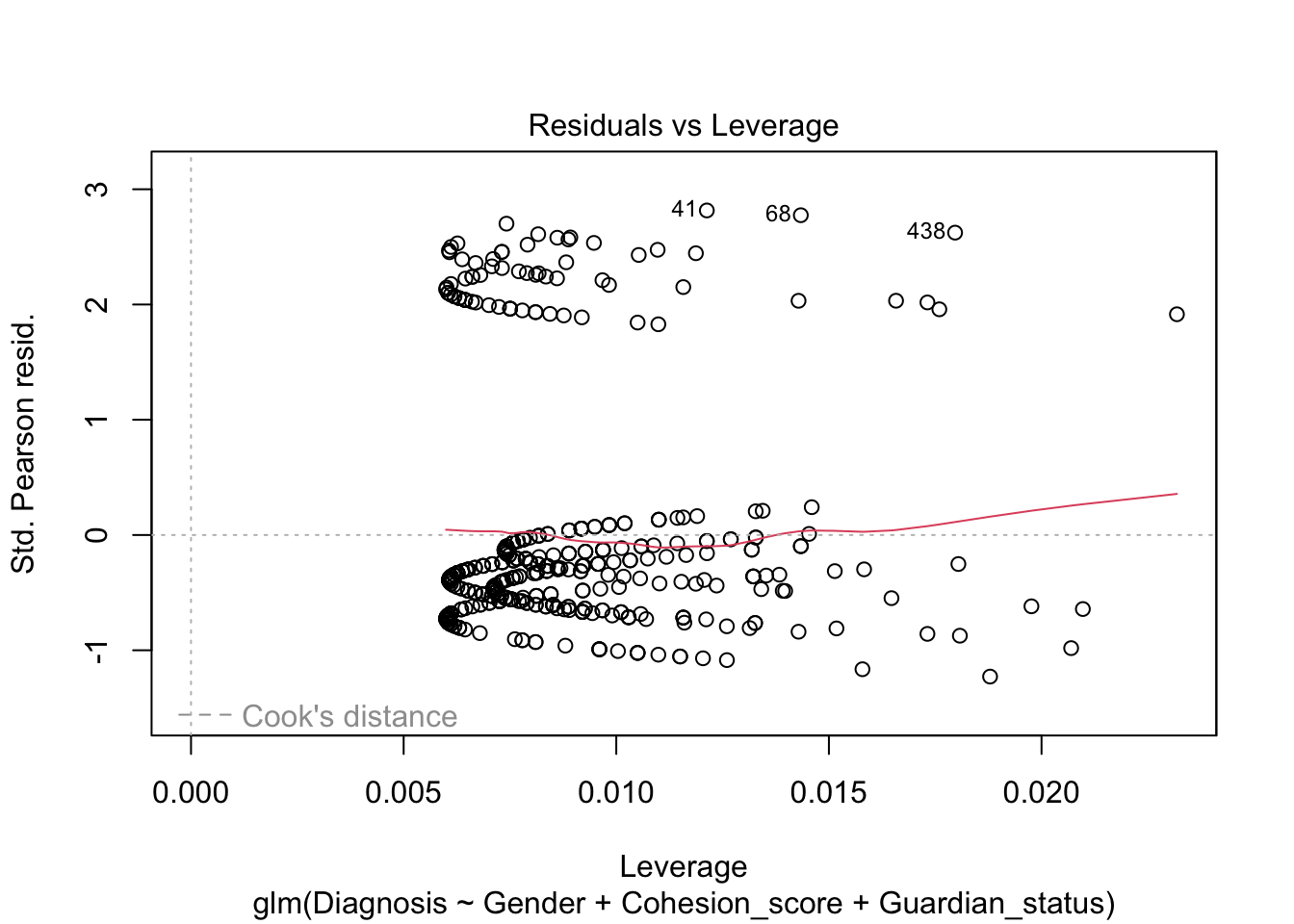

Note, the plot() defaults for the residuals are still not very useful.

We see a measure of “Deviance” in bottom of the summary. What is this instead of \(R^2\)?

4.8.8 Deviance

Deviance is a measure of the goodness of fit used in general linear models.

- It can be considered a replacement for, or extension of, the concept of Error Sum of Squares (aka SSE or Residual Sum of Squares (RSS)) when using maximum likelihood methods.

- There are multiple approaches to using and calculating deviance. We are using the one used in R.

For logistic regression, deviance is considered the sum of the individual deviance for each \(\hat{y}_i\) , \(D(y, \hat{y}) = \sum_i d(y_i, \hat{y}_i)\).

- The Total Deviance for a fit model, where the likelihood \(L\) has been maximized, is calculated using the following formula :

\[ D(y, \hat{y}) = D_\text{saturated}-2\,\ln\left(\mathrm{L}(\hat{\beta_0}, \hat{\beta}_1, \ldots, \hat{\beta}_p)\right) \tag{4.9}\]

- The \(D_\text{saturated}\) is the deviance for a perfect saturated model where every point as an individual fit. It is usually scaled so \(D_\text{saturated}=0.\)

- The 2 in Equation 4.9 is needed so the distribution of the deviance is approximately \(\chi^2\).

- The log is used to help scale the results.

The Null Deviance is the calculated deviance for the Null (Dull) Hypothesis model with only an intercept term \(\hat{\beta}_0\) and assuming all the other \(\beta_i = 0\) i.e., they have no predictive power in explaining the variance of the logit(\(y\)).

The Residual Deviance is deviance for the fitted model, with the best fitting \(\hat{\beta}_i.\)

The lower the Residual Deviance the better the fit.

Since the calculated deviance is always a comparison with a perfect, saturated model, the Null Hypothesis is that if all the assumptions are true, the fitted model is a good fit, close to “perfect”.

Thus we want the \(p\)-value to be close to 1 so there is no evidence to reject the model.

- The deviances follow a \(\chi^2\) distribution with \(df = n - p\) degrees of freedom.

- We can use this with the output of the fitted model object to calculate a \(p\)-value for the Null Hypothesis being true.

[1] 0.9774268[1] 0.9995629The closer the \(p\)-value is to 1, the closer the model corresponds to a “perfect” saturated model.

4.8.9 Predicting New Values

We have a model, so we can predict for new cases using the estimates produced by our model.

Call:

glm(formula = Diagnosis ~ Gender + Cohesion_score + Guardian_status,

family = binomial, data = depr)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 1.00832 0.50478 1.998 0.04577 *

GenderMale -0.68744 0.28848 -2.383 0.01718 *

Cohesion_score -0.04358 0.01046 -4.167 3.09e-05 ***

Guardian_status -0.74835 0.28602 -2.616 0.00889 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 398.47 on 457 degrees of freedom

Residual deviance: 360.38 on 454 degrees of freedom

AIC: 368.38

Number of Fisher Scoring iterations: 5We can get the logit manually, using Equation 4.5 as follows:

\[ \begin{align} \text{logit}(Y) = \ln\frac{p}{1-p} &= \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 \\ &= 1.00832 - 0.68744 * \text{GenderMale} - 0.04358 * \text{Cohesion score}- 0.74835 * \text{Guardian status} \\ \end{align} \]

If we predict for a Male, living with parents and cohesion score of 20, we get a logit (aka log odds) of

Using R we enter the new data into the predict() function.

Show code

1

-1.29902 This result is the logit of the probability.

We could convert to the probability for \(Y = 1\) using Equation 4.3 as follows:

\[ \begin{align} p &= \frac{e^{\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3}}{1 + e^{\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3}} \\ &= \frac{e^{1.00832 - 0.68744 * \text{GenderMale}- 0.04358 * \text{Cohesion score}- 0.74835 * \text{Guardian status}}}{1 + e^{1.00832 - 0.68744 * \text{GenderMale} - 0.04358 * \text{Cohesion score}- 0.74835 * \text{Guardian status}}} \\ \end{align} \]

- With a result of

Or, we can use the predict() function’s argument type = "response" to have R transform the output into the original space of the \(Y\).

Show code

1

0.21433 Show code

1

0.3516983 Show code

1

0.534139 What can we do to help college students?

Assuming fixed gender and parental/guardian situations, focus on Cohesion Score.

4.8.10 Converting Logistic Regression Probabilities into Predictions (Classifications)

Logistic Regression is a useful parametric classification method for binary response variables where we are trying to estimate the probability of a success.

- Categorical, binary, response is represented by dummy variable with values 0 or 1.

- The Logistic Regression Model from Equation 4.6 can be seen as:

\[ E[Y] = p(Y = 1|X) = p(X) = \frac{e^{\beta_0 + \beta_1X + \ldots, \beta_pX_p}}{1 + e^{\beta_0 + \beta_1X + \ldots, \beta_pX_p}} \]

- All \(\beta_j\) are estimated by the method of Maximum Likelihood Estimate (MLE), not Least Squares.

- MLE means choosing values for the parameters (\(\beta\)s) that make what we observe in the \(Y\) and \(X\) have the highest probability of occurring.

- We get estimates for \(\hat{\beta}_j\) which results in \(\hat{p}(Y = 1)\) but this is a probability of an outcome, not a prediction.

4.8.11 Convert \(p(Y=1)\) into a Classification Prediction

There are several ways to convert to a classification:

- We could just say if \(\hat{p}(Y = 1) \geq .5\) predict a success. That approach is not robust and it leaves out information about the risks of the outcomes.

- We could tune the model to select the “best” threshold.

- We could use a Decision-theoretic approach which considers benefits and risks or costs in terms of gains and losses. We’ll discuss this in a few minutes.

4.8.12 Tuning the Model to Select a Threshold: Example with Titanic Data

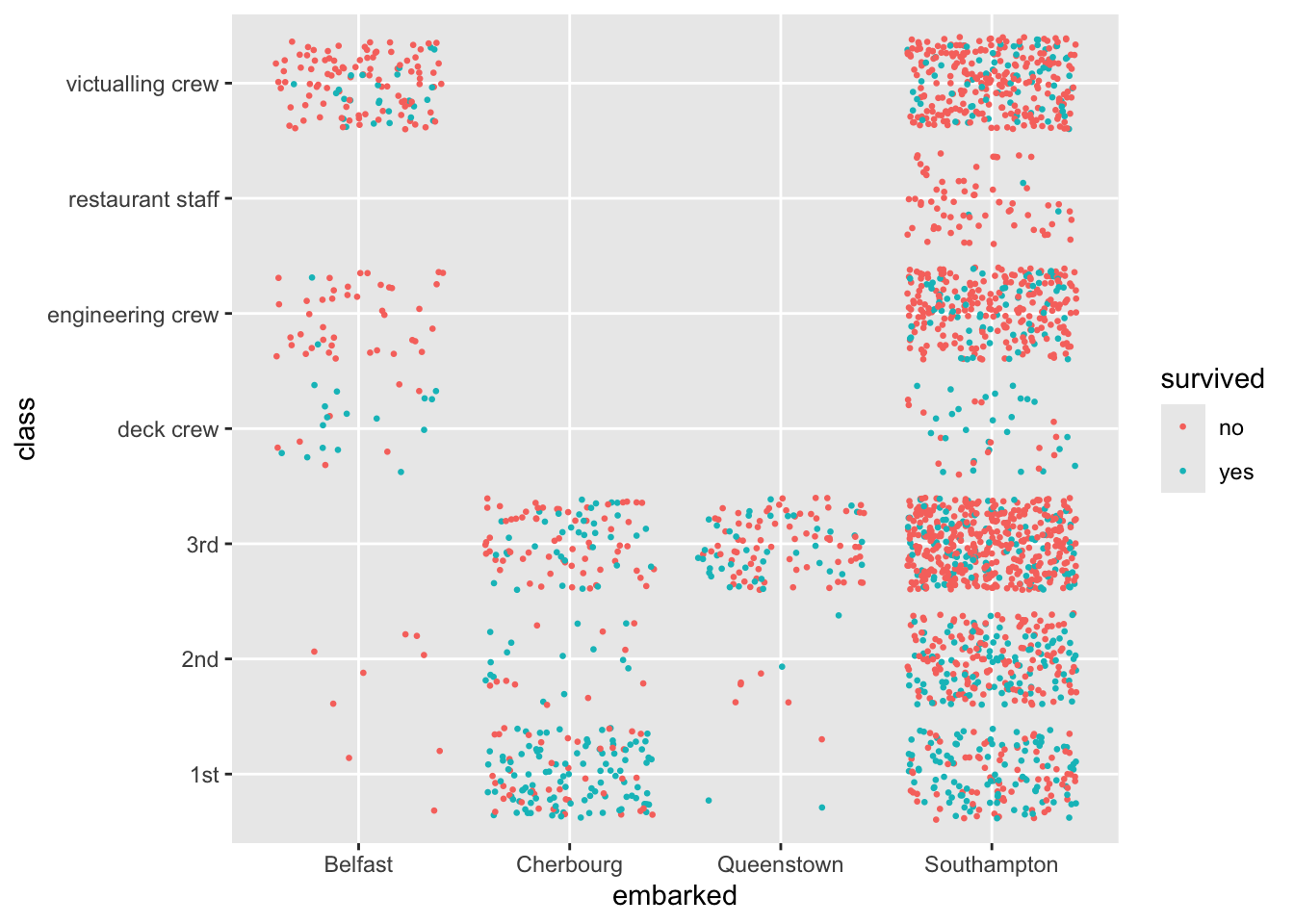

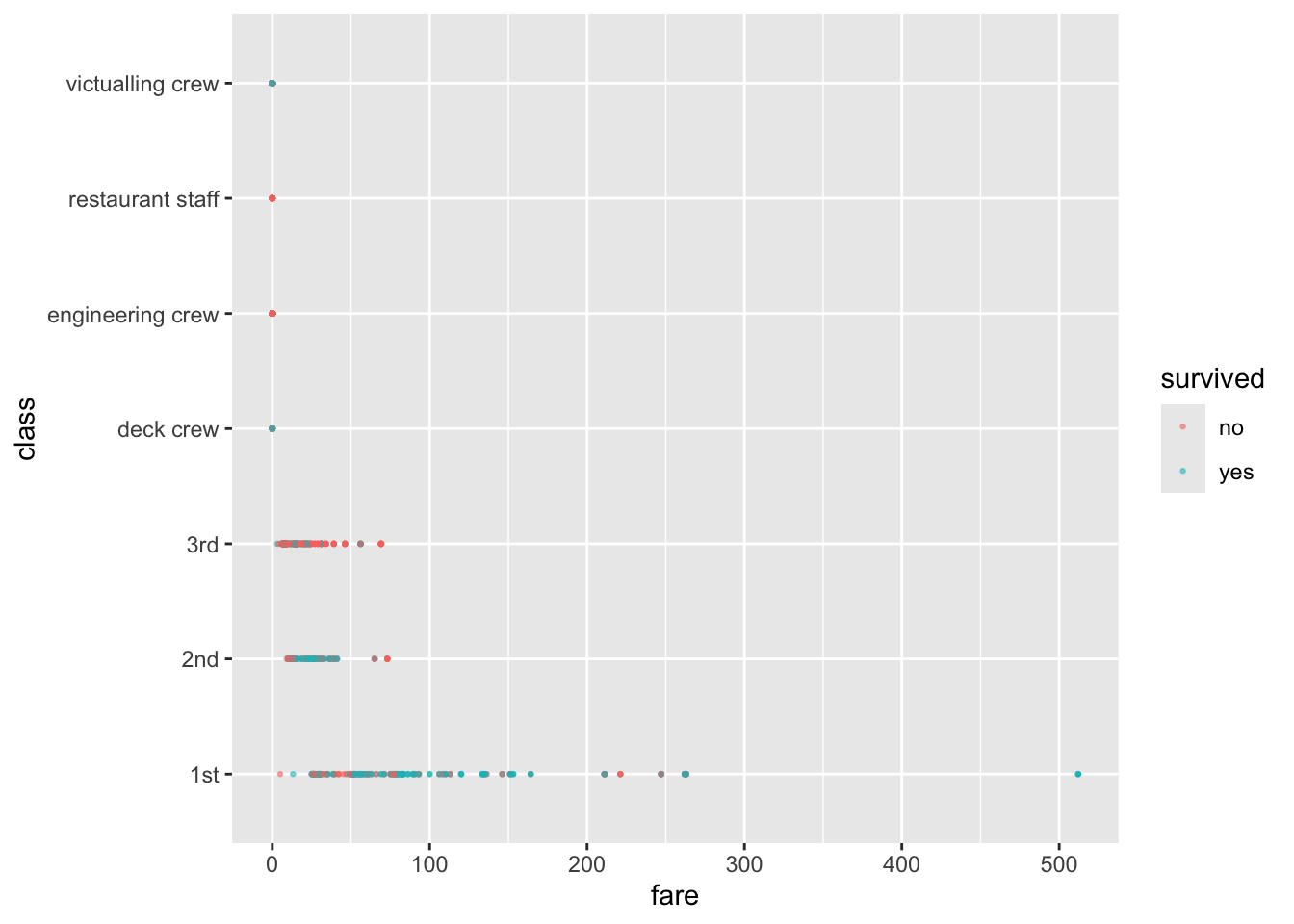

Let’s consider data about the outcomes of the ill-fated voyage of the RMS Titanic as an example (Trailer Clip). The ship had 2,207 passengers and crew and only 711 survived.

The {DALEX} package’s titanic data set includes a complete list of the passengers and crew. You can get it from the package or from https://raw.githubusercontent.com/rressler/data_raw_courses/main/titanic_dalex.csv/

Show code

Rows: 2,207

Columns: 9

$ gender <chr> "male", "male", "male", "female", "female", "male", "male", "…

$ age <dbl> 42.0000000, 13.0000000, 16.0000000, 39.0000000, 16.0000000, 2…

$ class <chr> "3rd", "3rd", "3rd", "3rd", "3rd", "3rd", "2nd", "2nd", "3rd"…

$ embarked <chr> "Southampton", "Southampton", "Southampton", "Southampton", "…

$ country <chr> "United States", "United States", "United States", "England",…

$ fare <dbl> 7.1100, 20.0500, 20.0500, 20.0500, 7.1300, 7.1300, 24.0000, 2…

$ sibsp <dbl> 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0…

$ parch <dbl> 0, 2, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0…

$ survived <chr> "no", "no", "no", "yes", "yes", "yes", "no", "yes", "yes", "y… gender age class embarked

Length:2207 Min. : 0.1667 Length:2207 Length:2207

Class :character 1st Qu.:22.0000 Class :character Class :character

Mode :character Median :29.0000 Mode :character Mode :character

Mean :30.4367

3rd Qu.:38.0000

Max. :74.0000

NA's :2

country fare sibsp parch

Length:2207 Min. : 0.000 Min. :0.0000 Min. :0.0000

Class :character 1st Qu.: 0.000 1st Qu.:0.0000 1st Qu.:0.0000

Mode :character Median : 7.151 Median :0.0000 Median :0.0000

Mean : 19.773 Mean :0.2972 Mean :0.2294

3rd Qu.: 20.111 3rd Qu.:0.0000 3rd Qu.:0.0000

Max. :512.061 Max. :8.0000 Max. :9.0000

NA's :26 NA's :10 NA's :10

survived

Length:2207

Class :character

Mode :character

no yes

1496 711 # A tibble: 1 × 9

gender age class embarked country fare sibsp parch survived

<int> <int> <int> <int> <int> <int> <int> <int> <int>

1 0 2 0 0 81 26 10 10 0Variable Notes

-

pclass: A proxy for socio-economic status (SES): 1st = Upper, 2nd = Middle, 3rd = Lower. -

age: Age is fractional if less than 1. If the age is estimated, is it in the form of xx.5. -

sibsp: Sibling = brother, sister, stepbrother, stepsister; Spouse = husband, wife (mistresses and fiancés were ignored) -

parch: Parent = mother, father; Child = daughter, son, stepdaughter, or stepson. Some children traveled only with a nanny, thereforeparch=0for them. -

embarked: Port of embarkation (C = Cherbourg, Q = Queenstown, S = Southampton)

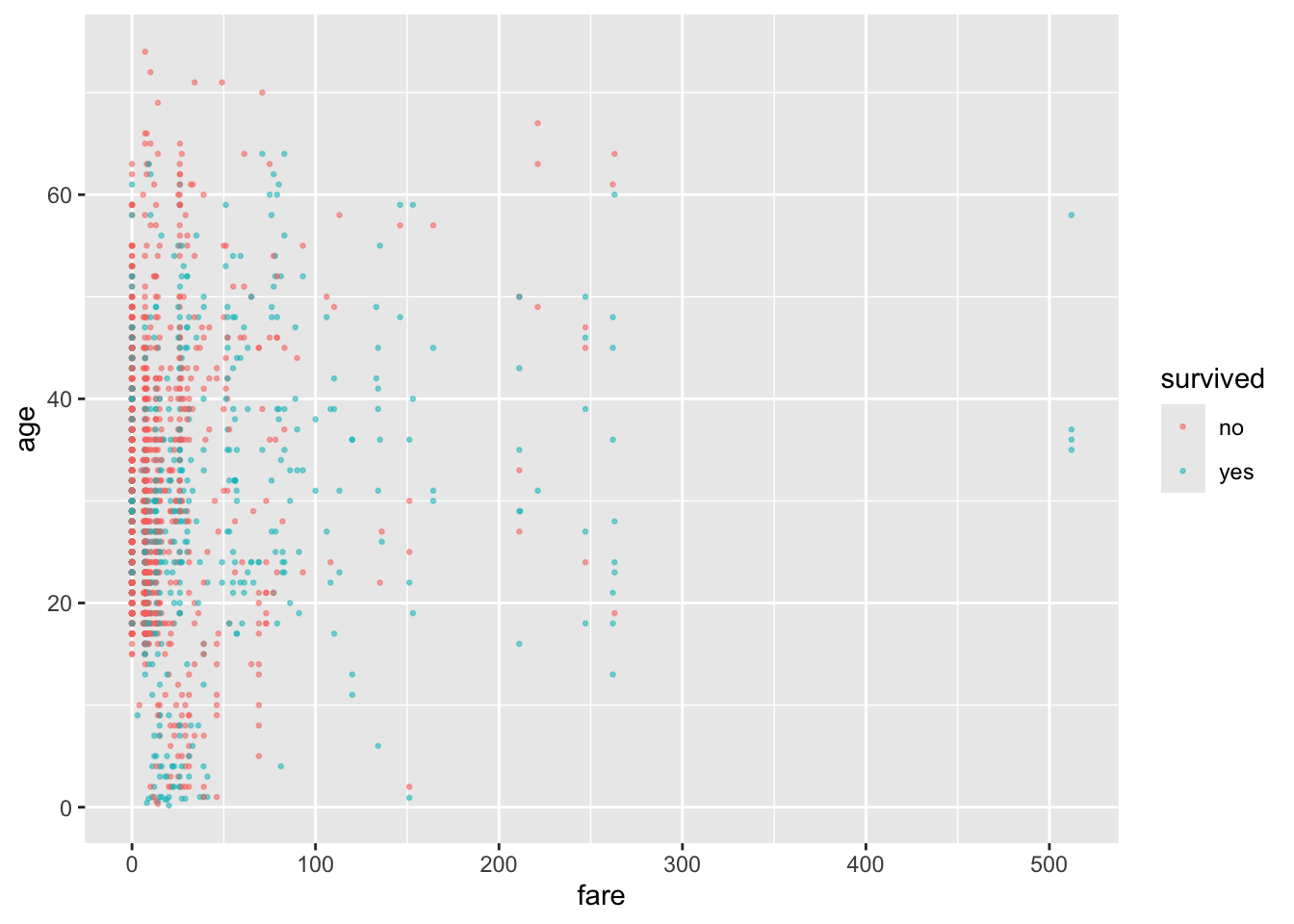

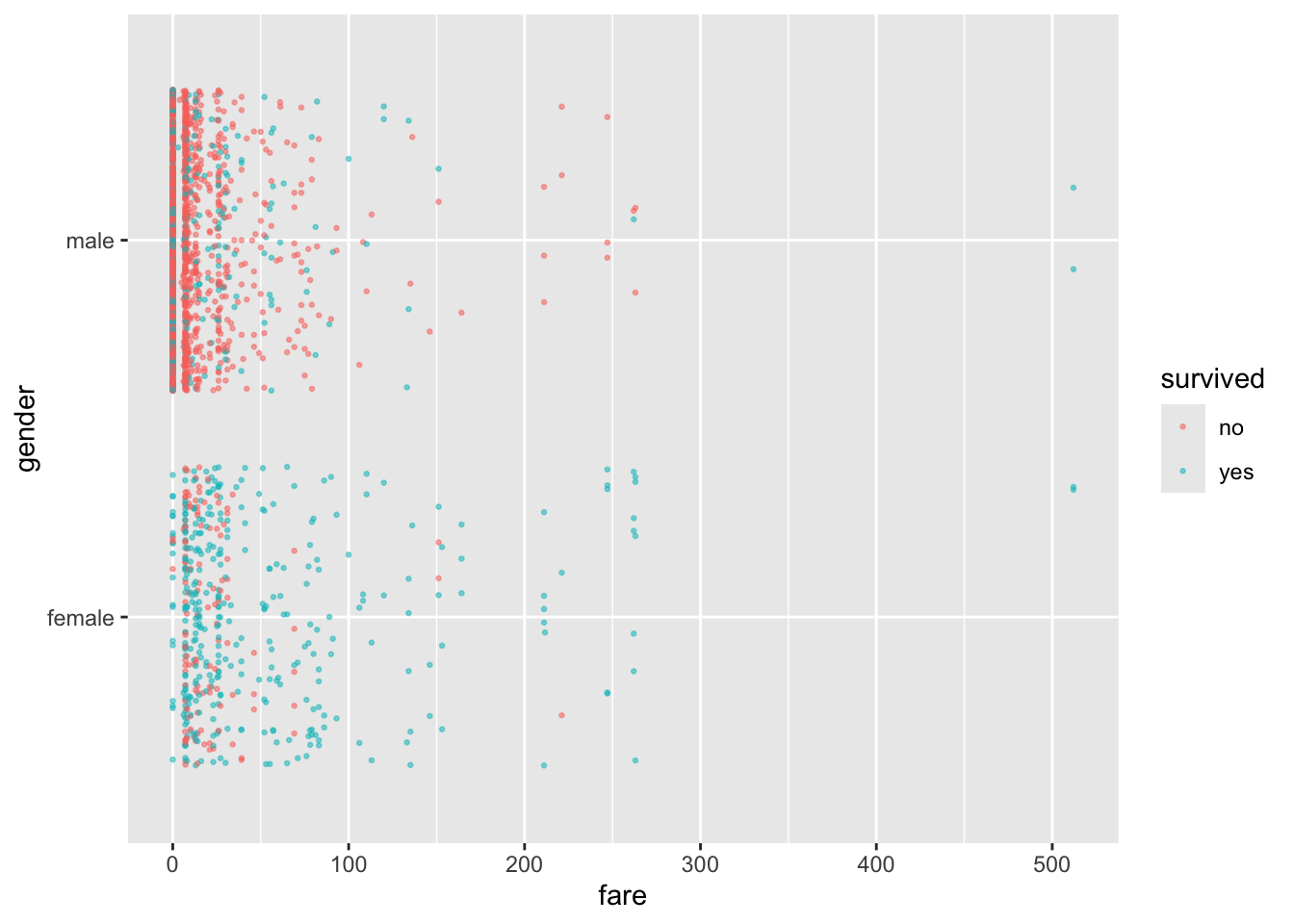

Let’s plot some of the data.

Let’s create an initial logistic regression model using glm().

logreg <- glm(as.factor(survived) ~ age + gender + class + fare + embarked,

data = titanic, family = "binomial")

summary(logreg)

Call:

glm(formula = as.factor(survived) ~ age + gender + class + fare +

embarked, family = "binomial", data = titanic)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 3.1774860 0.4078547 7.791 6.66e-15 ***

age -0.0317519 0.0051464 -6.170 6.84e-10 ***

gendermale -2.5835288 0.1486233 -17.383 < 2e-16 ***

class2nd -1.1103673 0.2455656 -4.522 6.14e-06 ***

class3rd -2.2245019 0.2425002 -9.173 < 2e-16 ***

classdeck crew 1.0555309 0.3449171 3.060 0.002212 **

classengineering crew -0.9877114 0.2573233 -3.838 0.000124 ***

classrestaurant staff -3.2304528 0.6489321 -4.978 6.42e-07 ***

classvictualling crew -1.1119024 0.2540240 -4.377 1.20e-05 ***

fare -0.0008393 0.0017167 -0.489 0.624923

embarkedCherbourg 0.7398312 0.2867817 2.580 0.009887 **

embarkedQueenstown 0.2568812 0.3322973 0.773 0.439495

embarkedSouthampton 0.1106313 0.2152828 0.514 0.607330

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 2749.3 on 2178 degrees of freedom

Residual deviance: 2038.9 on 2166 degrees of freedom

(28 observations deleted due to missingness)

AIC: 2064.9

Number of Fisher Scoring iterations: 5What can we say about the significance of the slopes?

-

fareandembarkeddo not seem significant. Why do you think that is?

Let’s predict for the entire data set and look at the summary().

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0166 0.1338 0.2210 0.3254 0.5182 0.9670 We can make a reduced model without fare and embarked.

- When we do this, recall that

agehad twoNAs so we will remove those rows before running our model.

titanic <- tidyr::drop_na(titanic, age)

logreg <- glm(as.factor(survived) ~ age + gender + class,

data = titanic, family = "binomial")

summary(logreg)

Call:

glm(formula = as.factor(survived) ~ age + gender + class, family = "binomial",

data = titanic)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 3.481343 0.276971 12.569 < 2e-16 ***

age -0.032148 0.005088 -6.319 2.63e-10 ***

gendermale -2.608988 0.144603 -18.042 < 2e-16 ***

class2nd -1.300607 0.210413 -6.181 6.36e-10 ***

class3rd -2.275826 0.197398 -11.529 < 2e-16 ***

classdeck crew 0.862530 0.297321 2.901 0.00372 **

classengineering crew -1.157325 0.200923 -5.760 8.41e-09 ***

classrestaurant staff -3.392692 0.628902 -5.395 6.87e-08 ***

classvictualling crew -1.297206 0.190148 -6.822 8.97e-12 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 2772.6 on 2204 degrees of freedom

Residual deviance: 2071.0 on 2196 degrees of freedom

AIC: 2089

Number of Fisher Scoring iterations: 5Let’s predict for Rose and Jack from the movie.

1

0.9495412 1

0.1111957 1

0.2491098 1

0.5491511 Let’s tune the model by creating training and testing data and then searching for the threshold that reduces the mis-classification rate.

Create the model using the training data and the predictions with the test data.

logreg <- glm(as.factor(survived) ~ age + gender + class,

data = titanic[Z,], family = "binomial")

summary(logreg)

Call:

glm(formula = as.factor(survived) ~ age + gender + class, family = "binomial",

data = titanic[Z, ])

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 3.317224 0.374814 8.850 < 2e-16 ***

age -0.031366 0.007075 -4.433 9.28e-06 ***

gendermale -2.445826 0.201992 -12.109 < 2e-16 ***

class2nd -1.278162 0.294380 -4.342 1.41e-05 ***

class3rd -2.175948 0.266656 -8.160 3.35e-16 ***

classdeck crew 1.027517 0.431904 2.379 0.017358 *

classengineering crew -1.153568 0.275801 -4.183 2.88e-05 ***

classrestaurant staff -3.010567 0.788051 -3.820 0.000133 ***

classvictualling crew -1.272025 0.258695 -4.917 8.78e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 1379.1 on 1101 degrees of freedom

Residual deviance: 1059.4 on 1093 degrees of freedom

AIC: 1077.4

Number of Fisher Scoring iterations: 5Let’s check for a range of thresholds between 0 and 1.

- We already have the predictions, we just need to calculate the results by comparing to each threshold.

[1] 101[1] 0.00 0.01 0.02 0.03 0.04 0.05Show code

TPR <- FPR <- err.rate <- rep(0, length(threshold))

for (i in seq_along(threshold)) {

Yhat <- rep(NA_character_, nrow(titanic[-Z,]))

Yhat <- ifelse(Prob >= threshold[[i]], "yes", "no")

err.rate[i] <- mean(Yhat != titanic[-Z,]$survived)

TPR[[i]] <- sum(Yhat == "yes" & titanic[-Z,]$survived == "yes") /

sum(titanic[Z,]$survived == "yes")

FPR[[i]] <- sum(Yhat == "yes" & titanic[-Z,]$survived == "no") /

sum(titanic[-Z,]$survived == "no")

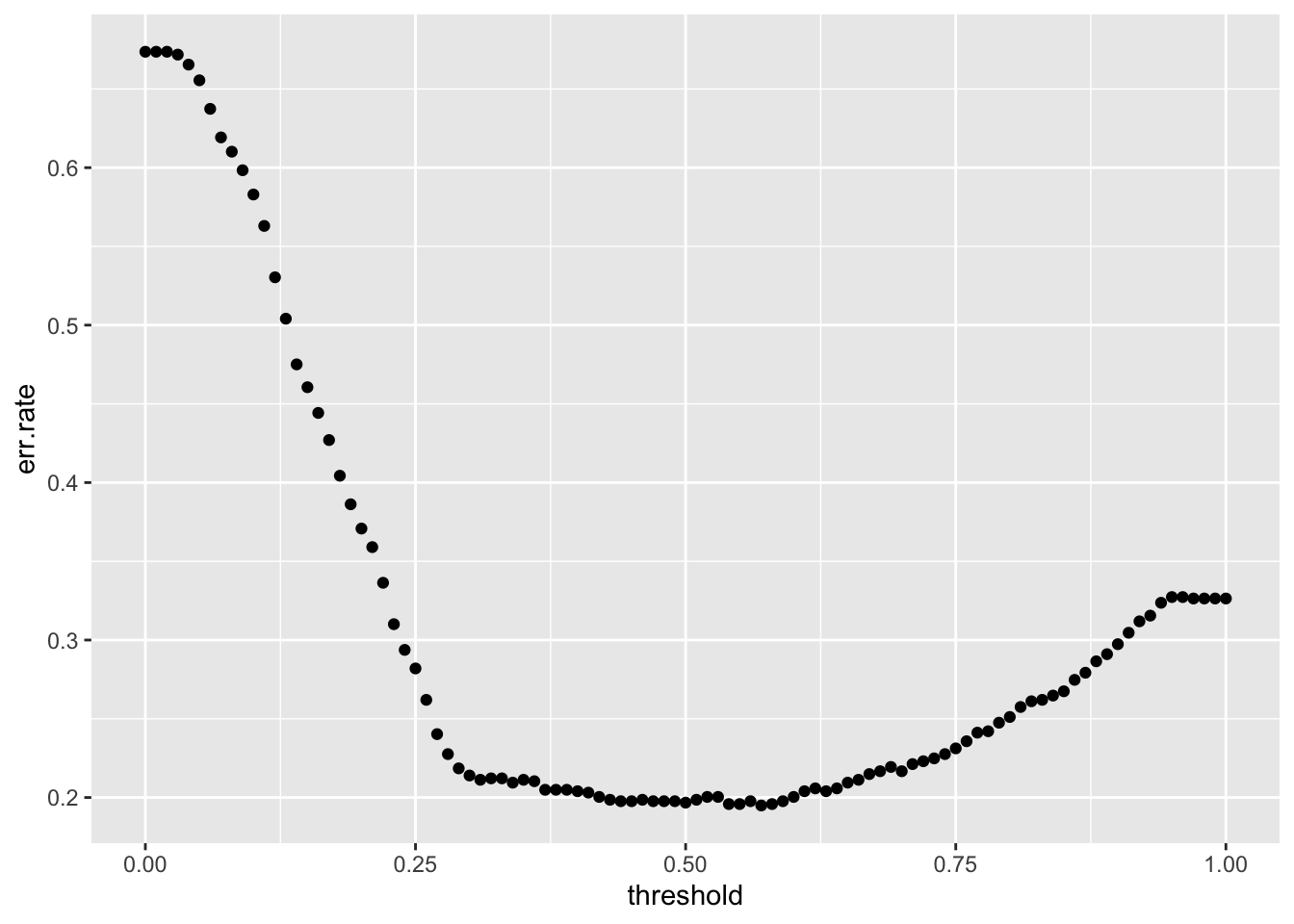

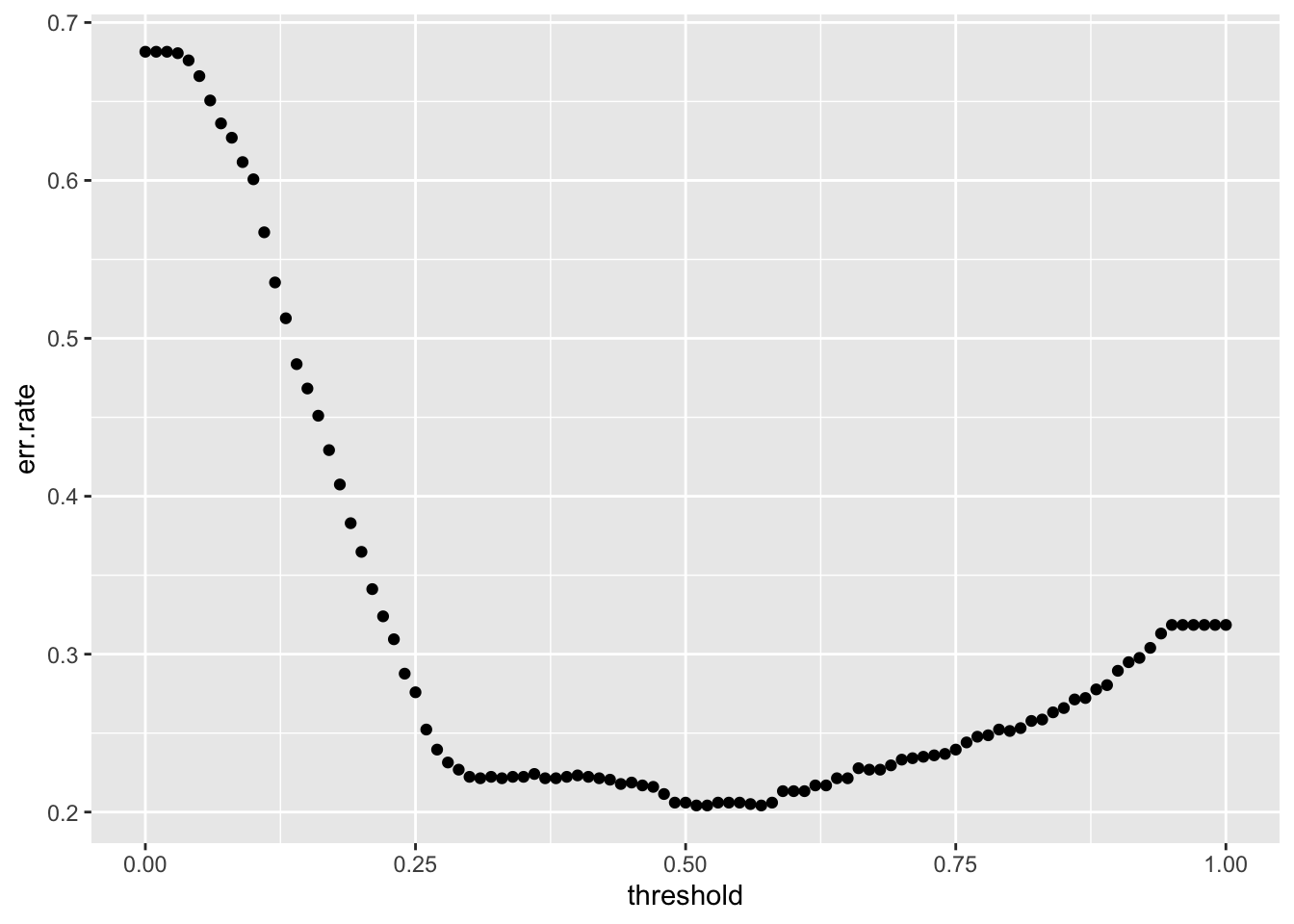

}Now we have the data to plot the error rate.

no yes

751 351 [1] 0.3185118So a low threshold says everyone will survive when in fact only 31.85% of our training data survived.

What is the best threshold?

[1] 58[1] 0.57[1] 0.1949229Let’s reset our Yhat to the threshold that minimizes the error rate and create a confusion matrix .

Show code

Yhat no yes

no 690 162

yes 53 198So a threshold of 0.57 for this sample is correct and we predict 80.51% correctly

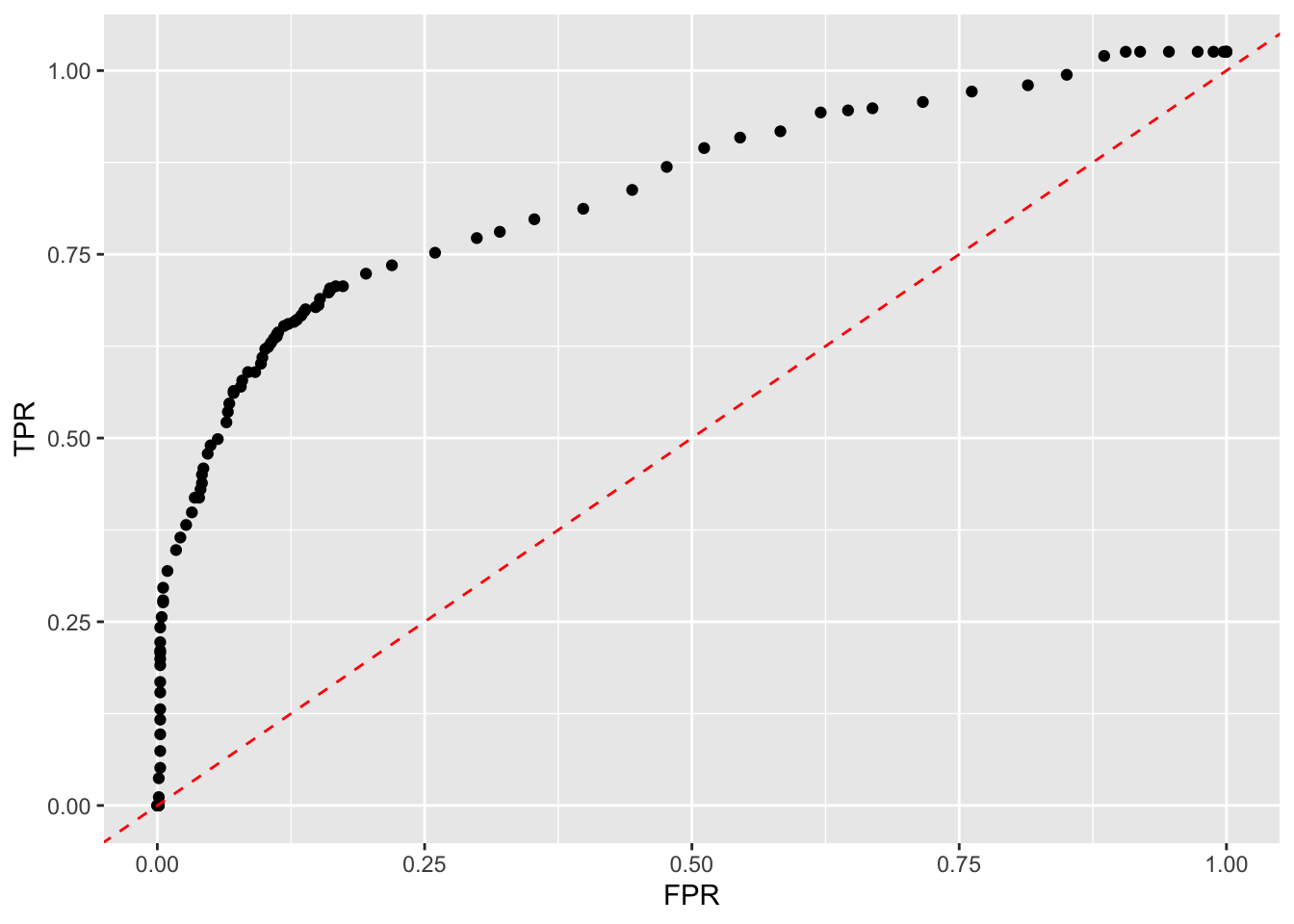

We can also plot an ROC Curve for the training data.

Show code

Note the top right of the curve depicts low thresholds since our data has a low rate of survival.

- The lower the threshold, the more likely to say someone survived so you will catch all the actual survivors, but, generate a lot of False Positives.

- The higher the threshold, the more likely to say someone died, so will not get all of the survivors (a lower True Positive Rate) but, have lower chance of a False Positive.

Show code

ggplot(tibble(TPR,FPR),

aes(FPR, TPR)) +

geom_point() +

geom_point(aes(FPR[11], TPR[11]),

color = "red", size = 5) +

annotate("text", x = .85, y = .8, label = "Low Threshold", color = "red") +

geom_point(aes(FPR[90], TPR[90]),

color = "blue", size = 5) +

annotate("text", x = .2, y = .08, label = "High Threshold", color = "blue") +

geom_point(aes(FPR[51], TPR[51]),

color = "darkgreen", size = 5) +

annotate("text", x = .3, y = .58, label = "Best Threshold", color = "darkgreen")

4.8.13 Determining Thresholds Using Loss Functions - a Decision Theoretic Approach

Let’s assume the actual value is either \(Y\) = 1, with probability \(p\), or \(Y=0\) with probability \((1-p)\). We then have the choice of predicting \(\hat{Y} = 1\) or \(\hat{Y} = 0\) based on a threshold for \(p\) that we get to choose. We want to choose a threshold for \(p\) that minimizes our decision risk or the expected loss from our where we set our threshold.

To structure our approach, let’s create a 2 x 2 matrix of the actual values and our possible predictions \(\hat{Y}\) where the intersection is one of our four possible outcomes.

We then enter the associated loss for each outcome, based on whether \(\hat{Y} = Y.\)

When we predict correctly, \(\hat{Y} = Y\), (on the main diagonal from top left to bottom right), the value of the loss function is 0, as we were correct.

-

When we predict incorrectly, \(\hat{Y} \neq Y\), (the off-main diagonal), we represent the loss functions for each error as \(L_0\) and \(L_1\).

- \(L_1\) is the loss associated with a False Positive.

- \(L_2\) is the Loss associated with a False Negative.

Our table looks like the following:

| Loss function | \(Y = 1\) | \(Y= 0\) |

|---|---|---|

| \(\hat{Y} = 1\) | 0 | \(L_1\) |

| \(\hat{Y} = 0\) | \(L_0\) | 0 |

It is not clear that \(L_1 = L_0\) in all situations.

- Consider the choice of a car insurance company. Is the loss the same when they lose a customer (don’t issue a policy) to a safe driver (False Negative) or if they issue a policy to a driver who then has an accident (False Positive)?

In a decision theory approach, we estimate \(p\) with \(\hat{p}\) and want to find a threshold for the probability \(\hat{p}\) so we can create a decision rule to minimize the risk (expected loss) of future predictions.

How do we calculate the risk for each choice from our 2x2 table?

- The actual or true \(p(Y = 1) = p\) (the expected value (mean) of the Bernoulli Distribution).

We define Risk as the Expected Value of the Loss for a prediction (or it may be referred to as the Expected Cost of a prediction).

- Many risk models are 2x2 where they estimate the probability of occurrence for a risk and the effect of the the risk if it happens.

- Organizations develop risk mitigation strategies to minimize one or both dimensions.

- They often consider risks across Cost, Performance, and Schedule.

We want to minimize the Risk (Expected Value of the Loss) over all possible decision choices (our choices are to predict 1, or 0).

- This means we want to minimize the Expected Loss expressed as:

\[ \text{Risk}= E[\text{Loss}] = \sum_{i = Choices}p(\text{Loss value}_i)\times \,\,\text{Loss value}_i \tag{4.10}\]

Equation 4.10 follows the standard approach to calculate the expected value of a discrete random variable; the sum, over all possible values, of the probability of a value \(\times\) that value.

In our example Loss function, Equation 4.10, we have two choices for a prediction (1, or 0), so we can calculate the risk for each choice.

For the choice of \(\hat{y} = 1\), we use the top row of the table to get:

\[\text{Risk}(\hat{y} = 1) = p\times 0 + (1-p)\times L_1 = (1-p)L_1. \tag{4.11}\]

- Equation 4.11 is the risk or expected loss of a False Positive.

For the choice of \(\hat{y} = 0\), we use the second (bottom) row of the table:

\[\text{Risk}(\hat{y} = 0) = = p \times L_0 + (1-p)\times 0 = p L_0 \tag{4.12}\]

- Equation 4.12 is the risk or expected loss of a False Negative.

Given the two choices for our prediction, we can compare their risk values from Equation 4.11 and Equation 4.12.

To minimize our risk, we choose the prediction with the smaller risk value as our prediction.

Combining Equation 4.11 and Equation 4.12, we can create a decision rule to minimize our prediction risk.

\[ \text{Predict } \hat{Y} = 1 \quad \text{ if }\quad (1-p)L_1 < p L_0 \tag{4.13}\]

Equation 4.13 can be read as: Predict 1 when the Risk of a False Positive is less than the Risk of a False Negative.

An equivalent decision rule is: \[ \text{Predict } \hat{Y} = 0 \quad \text{ if }\quad (1-p)L_1 > p L_0 \tag{4.14}\]

Equation 4.14 can be read as: Predict 0 when the Risk of a False Positive is greater than the Risk of a False Negative.

We can manipulate Equation 4.13 to create a ratio of the two expected losses:

\[ \text{Predict } \hat{Y} = 1 \text{ if }\quad \frac{L_1}{L_0} < \frac{p}{1-p} \quad \text{(the odds ratio)} \tag{4.15}\]

We can also interpret Equation 4.15 in terms of decision “guidance” for making a predication:

- Predict \(\hat{y} = 1\) if it has a “small” loss \(L_1\) (if it is wrong), or,

- Predict \(\hat{y} = 1\) if \(p(Y=1)\) is “large”.

We need to define what “small” and “large” mean to create a useful rule.

Let’s look at the case when \(L_1 = L_0\), so there is the same loss for either false prediction (this is what we have already been assuming in previous examples).

- When \(L_1 = L_0\), Equation 4.15 implies “large” is when the odds ratio is larger than 1 or \(1< \frac{p}{1-p}\). When does this happen?

- Let’s do the math …

\[ p \text{ is Large} \iff1 <\frac{p}{1-p} \iff (1-p)< p \iff 1< 2p \iff 1/2 < p. \]

- So, set the threshold for \(\hat{p}\) at \(\hat{p} = 1/2\) and predict \(\hat{y}_i = 1\) for any \(\hat{p}_i \geq 1/2\).

What about when \(L_1 \neq L_0\)?

Let’s assume \(L_1 = 2L_0\); the loss from a false positive is twice as bad as a false negative.

We can use Equation 4.15 to predict \(\hat{y} = 1\) when:

\[ \frac{L_1}{L_0} < \frac{p}{1-p} \iff \frac{2L_0}{L_0} < \frac{p}{1-p} \iff 2 < \frac{p}{1-p} \iff 2-2p < p \iff 2/3<p \]

- So, set the threshold for success at \(\hat{p} = 2/3\) and predict \(\hat{y}_i = 1\) for any \(\hat{p}_i \geq 2/3\).

We are setting the threshold at a specific value of equality and making decisions for values greater than or equal to the threshold. Recall that for continuous random variables, the probability that we will get any specific value is 0 so it does not change the result by using $\geq$.- We are setting a high threshold as the risk of a False Positive is relatively high and we want to minimize them.

What if the Loss for \(L_1 = cL_0\) for some positive constant \(c>0\).

\[ \frac{L_1}{L_0} < \frac{p}{1-p} \iff \frac{cL_0}{L_0} < \frac{p}{1-p} \iff c < \frac{p}{1-p} \iff c-cp < p \iff \frac{c}{1+c}<p \] What happens as \(c\) gets really large and goes to \(\infty\)?

\[ \frac{c}{1+c}\leq p \longrightarrow \frac{c}{c}\leq p \implies 1 \leq p. \] The higher the risk of a false positive to a false negative, the higher the optimal threshold until you never predict \(\hat{y} = 1\).

The ratio of the loss functions for the False Positives and False Negatives will establish the threshold for \(\hat{p}\) to determine when to decide to predict or \(\hat{y_i} = 1\) given a \(\hat{p}_i.\)

You can also extend the analysis to include gains or benefits for correct decisions.

4.8.14 Summary of Logistic Regression

Logistic Regression is a useful method with binary response variable, where \(p(Y=1) = p\) and \(p(Y=0) = (1-p)\)s and we want to predict the conditional probability \(\hat{p}=p(Y = 1|X)\).

We saw the method has s few major differences from linear regression.

- The need to transform the data back and forth between the \(p\) pace and the \(logit(p)\) space.

- The need to use maximum likelihood and iterate to a final solution for the estimates.

- The need to identify a threshold for \(\hat{p}\) such that we predict \(\hat{y}_i = 1 \iff \hat{P}_i \geq p_{threshold}\).

Our steps to get to a \(\hat{p}_i\):

To avoid challenges with estimating a binary response with linear regression, we use the logistic function to model \(\hat{p}=p(Y = 1|X) = \frac{e^{\beta_0 + \beta_1 X_1 + \cdots + \epsilon}}{1 + e^{\beta_0 + \beta_1 X_1 + \cdots + \epsilon}}\).

Since the Logistic function is non-linear in \(X\), we transform from \(p\) space to \(logit(p)\) space such that \(\log\frac{p}{1-p} = \beta_0 + \beta_1 X_1 + \cdots + \epsilon\) is linear in \(X\). This is known as the logit or log-odds transformation and it enables us to work with a linear combination of the \(X\).

The \(\text{logit}(y|x)\) has no closed form solution, so we estimate the \(\beta_i\), calculate the \(logit(p_i)\), convert that back to \(\hat{p_i}\) using the logistic function, and then maximize a Likelihood function (of the \(\hat{p}_i\)to calculate the likelihood of \(\hat{p}=p(Y = 1|X)\).

We then use the information from the likelihood to generate updates to the \(\beta_i\) and rebuild a new model in the logit space and go back to the \(\hat{p}\) space to calculate the new likelihood.

We iterate through the solutions until the difference in the likelihoods is below a threshold and declare convergence.

We then report the \(\hat{p}_i\), the \(\hat{\beta}_i\), and the Null and Residual deviance.

We use a method to determine the threshold so that we predict \(\hat{y}_i = 1 \iff \hat{P}_i \geq p_{threshold}\). We could use a method such as the decision theoretic approach if we believe the loss from a False Positive is much different than the loss from a False Negative.

Next, we will next go from this type of Decision theory approach where we consider Loss functions to Bayesian Decision Theory where we add information about the prior distribution and we want to minimize our losses.

We will translate prior distribution probabilities \(p(Y=k)\), from before we look at the \(X\) data, to posterior probabilities \(p(Y=k|X)\), where we update our prior based on the \(X\) data.

Recall Bayes Formula for conditional probabilities:

\[ p(A|B) = \frac{p(A)p(B|A)}{p(B)} \]

We will use Bayes formula as a basis for generative models such as Linear and Quadratic Discriminant Analysis.

4.9 Generative Models (Chap 4.4)

So far we have covered two classification algorithms or models.

- KNN is a non-parametric method where we estimated \(\hat{Y} = l\) by choosing the level \(l\) with the maximum probability of occurrence among the set of \(k\) nearest neighbors (the points closest to \(Y\) based on Euclidean distance).

- Logistic Regression is a parametric method where we predict \(\hat{p}(Y = 1 | X)\) based on using the “fixed” values of the \(X\) to estimate the parameters \(\beta_j\) of the \(\text{logit}(Y)\) that *maximize the likelihood of \(p(Y=1|X)\). Logistic regression is known as a discriminative model.

Discriminative models (aka conditional models) are a class of parametric methods (algorithms) that estimate the conditional probability of \(P(Y=1|X)\) to determine boundaries that discriminate (or differentiate) between regions of the points in \(X\) so one can estimate the conditional probability \(p(Y|X)\), when \(X\) is in a specific region.

- Discriminative models ignore any interactions among the \(X\) since they do not care about the joint probability distribution of \(p(Y,X)\)

- The terms discriminative and generative are used in multiple ways that can appear at times to overlap since some specific methods have features of both.

We will now go beyond the binary classification of Logistic regression to where instead of two levels, 0, 1, the response variable \(Y\) has \(m\) classes/categories/levels. We will want to predict the class \(\hat{y} = k\) where \(k\) ranges from \(1 \ldots, m\).

We will look at Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis, two methods that do discriminate between regions but they are generative models.

We will now discuss several different kinds of generative models.

Generative models are a class of parametric methods (algorithms) for estimating the joint probability distribution \(p(Y, X)\) first and then using the joint distribution to estimate the conditional probability \(p(Y=l|X)\).

- Generative models usually require more data as there are many more parameters to estimate for the joint distribution.

- Generative models can be more flexible as one can estimate interactions among the \(X\) and non-linear relationships.

- Given an estimated joint probability distribution, generative models allow one to generate new samples of training data based on that joint probability distribution.

4.9.1 Conditional Probability and Bayes’ Theorem (Rule/Law/Formula)

To work with generative models, we should understand a bit about Joint and Conditional Probabilities.

Given a response variable \(Y\) and a set of predictor variables \(X,\) we are interested in the possibility that there exists a joint probability distribution between \(Y\) and \(X\) such that knowing something about the value of \(X\) gives us information about the value of \(Y\).

Probability theory defines the conditional probability of two events \(A\) and \(B\) as the probability that \(A\) occurs given \(B\) is known or assumed to have occurred (so \(P(B) \neq 0\)). This can be written as:

\[ P(A|B) = \frac{P(A \text{ and } B)}{P(B)} \iff P(A|B)P(B) = P(A \text{ and } B) \tag{4.16}\]

- \(P(A \text{ and } B)\) can be thought of spatially as the overlap of \(P(A)\) and \(P(B)\), e.g., in a Venn diagram.

What does it mean if \(A\) and \(B\) are independent?

- Then there is no overlap, and \(P(A|B) = P(A)\).

It can also be seen, by reversing \(A\) and \(B\) in Equation 4.16, that

\[ P(B|A) = \frac{P(A \text{ and } B)}{P(A)} \iff P(B|A)P(A) = P(A \text{ and } B) \tag{4.17}\]

Combining Equation 4.16 and Equation 4.17, we get

\[ P(A|B)P(B) = P(B|A)P(A) \] Which then leads to:

\[ P(A|B) = \frac{P(B|A)P(A)}{P(B)} = \frac{P(A)P(B|A)}{P(B)}. \tag{4.18}\]

The formula in Equation 4.18 is known as Bayes Theorem.

Breaking out \(P(B)\) based on conditional probabilities for \(p(A)\) and \(A\) complement \(A'\), Equation 4.18 is also written at times as:

\[ P(A|B) = \frac{P(B|A)P(A)}{P(B|A)P(A) + P(B |A')P(A')}. \tag{4.19}\]

- Bayes Theorem helps solve a number of problems in inverse probability; about how to use knowledge about events that already occurred, to learn about future events.

- His work inspired a different approach to interpretations and methods in probability theory that considered the strength of evidence about distributions instead of making the assumptions about the distributions often used in “frequentist” methods.

- This field is known as Bayesian Statistics and it gained ground in the late 20th century as computers enabled the numerous computations on large data sets it can require.

We will use Equation 4.18 to explore the joint probability distributions of \(Y\) and \(X\) in generative models for classification.

4.10 Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis (QDA)

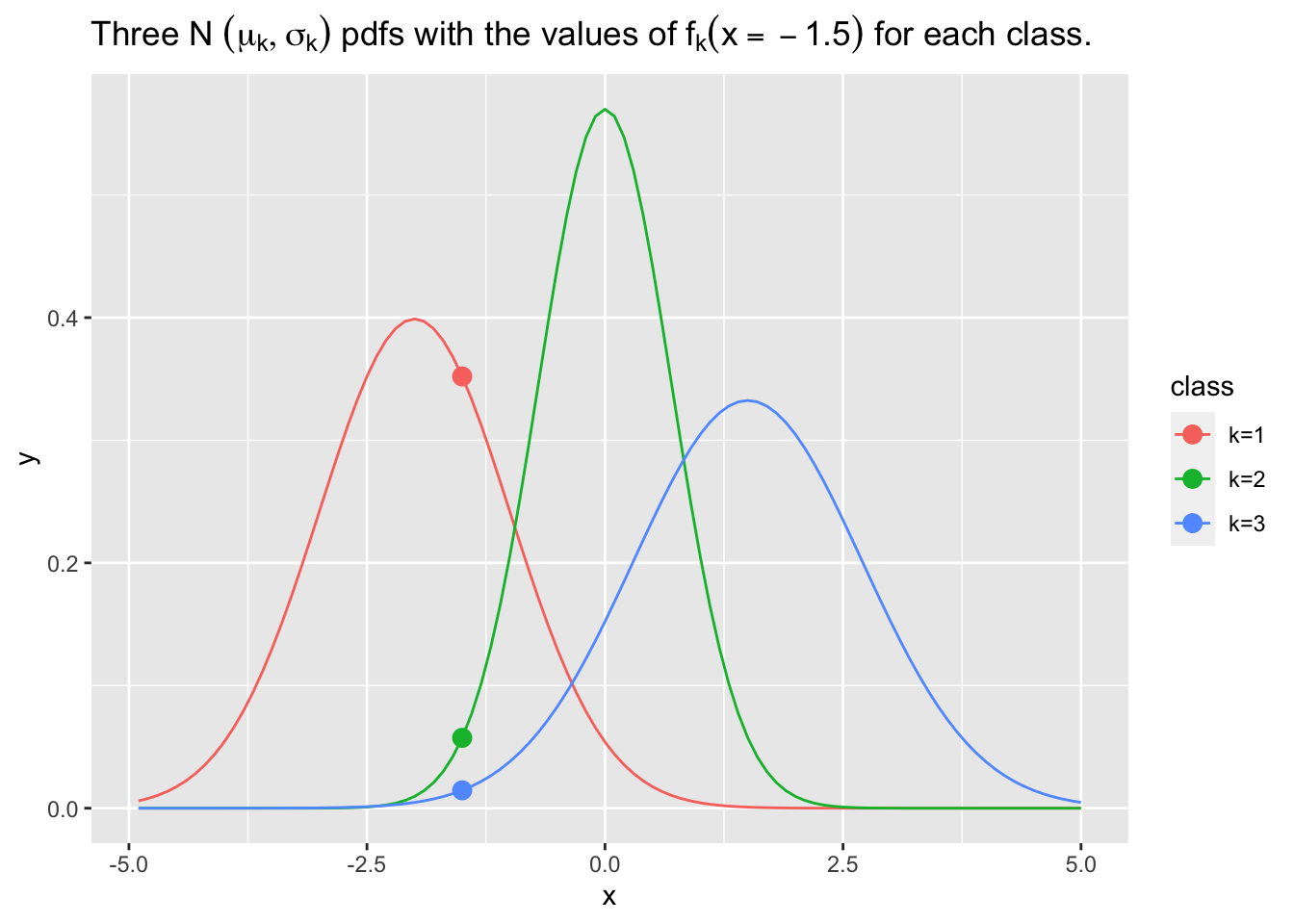

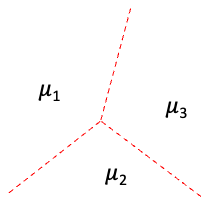

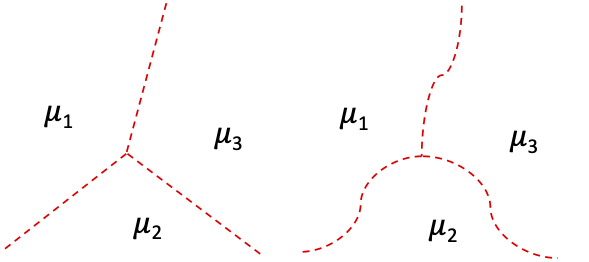

LDA and QDA are generative methods. This means they first

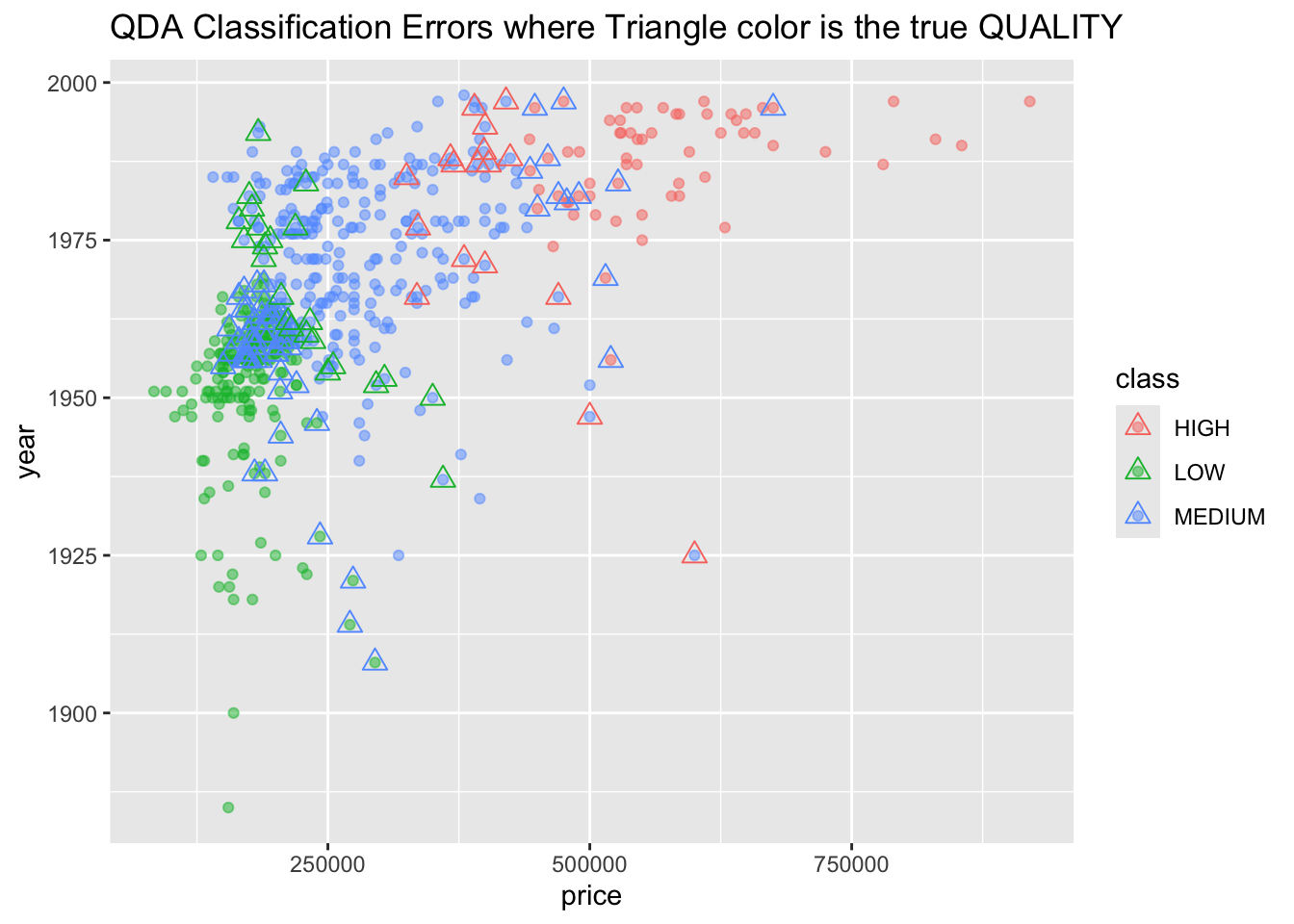

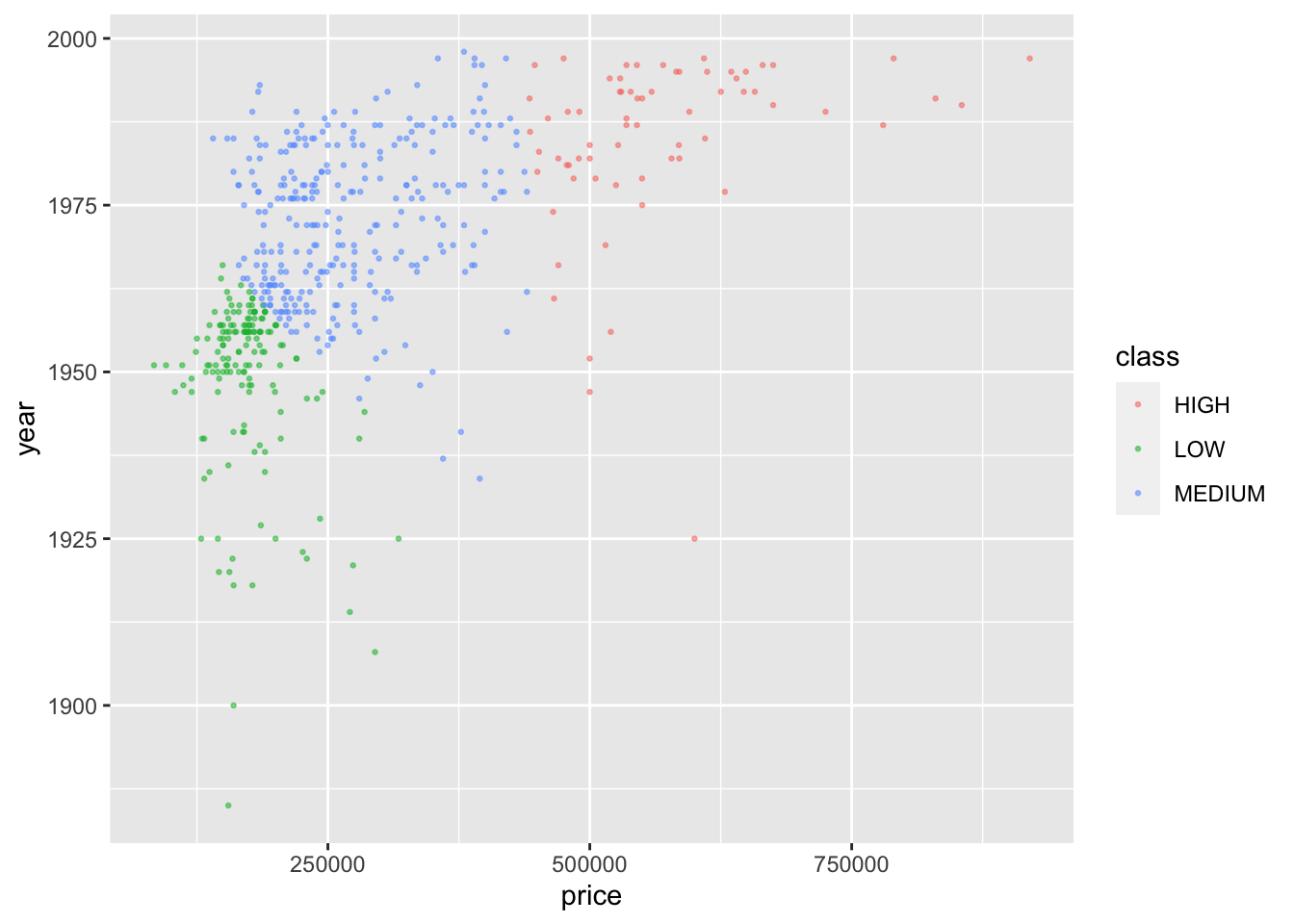

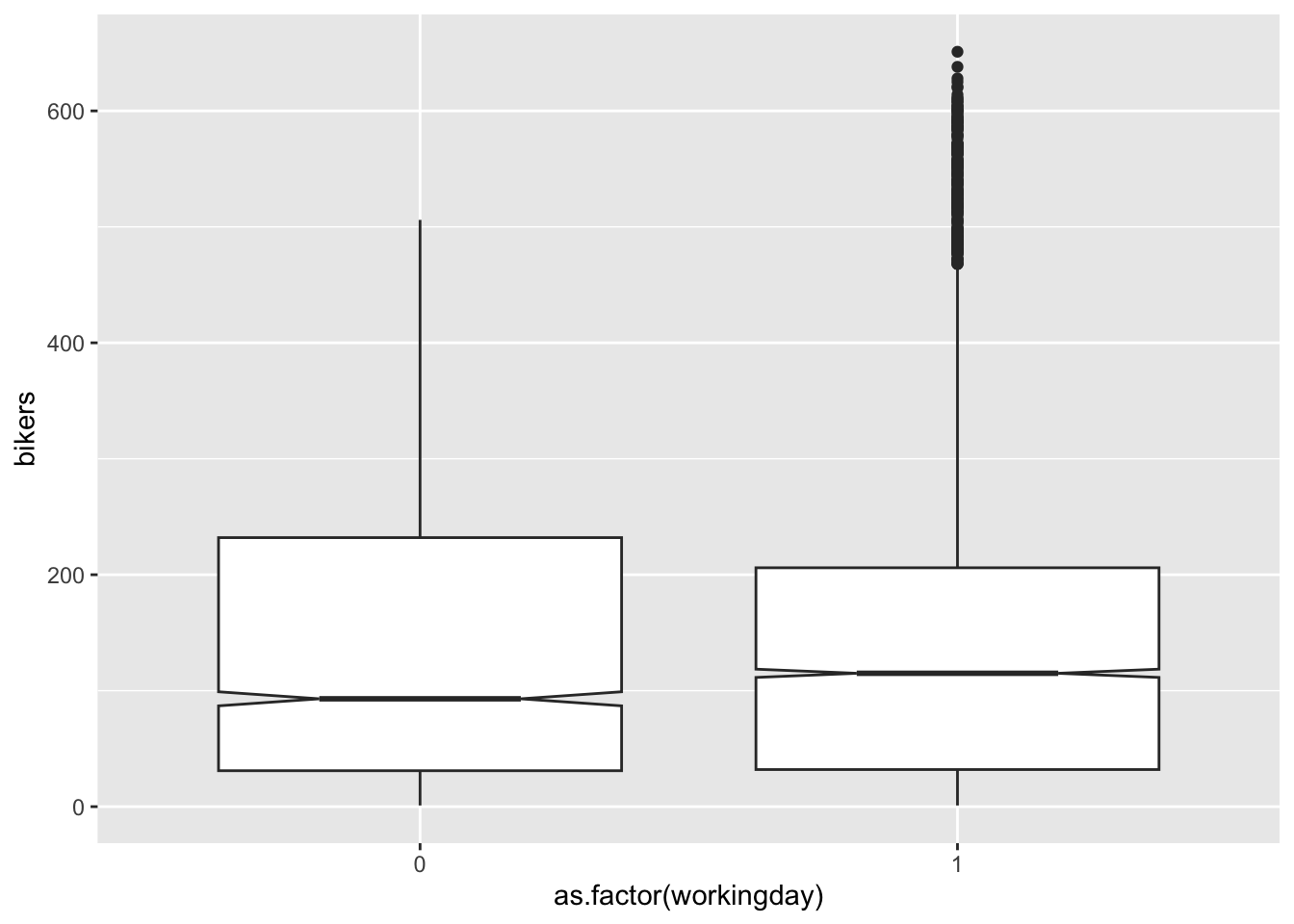

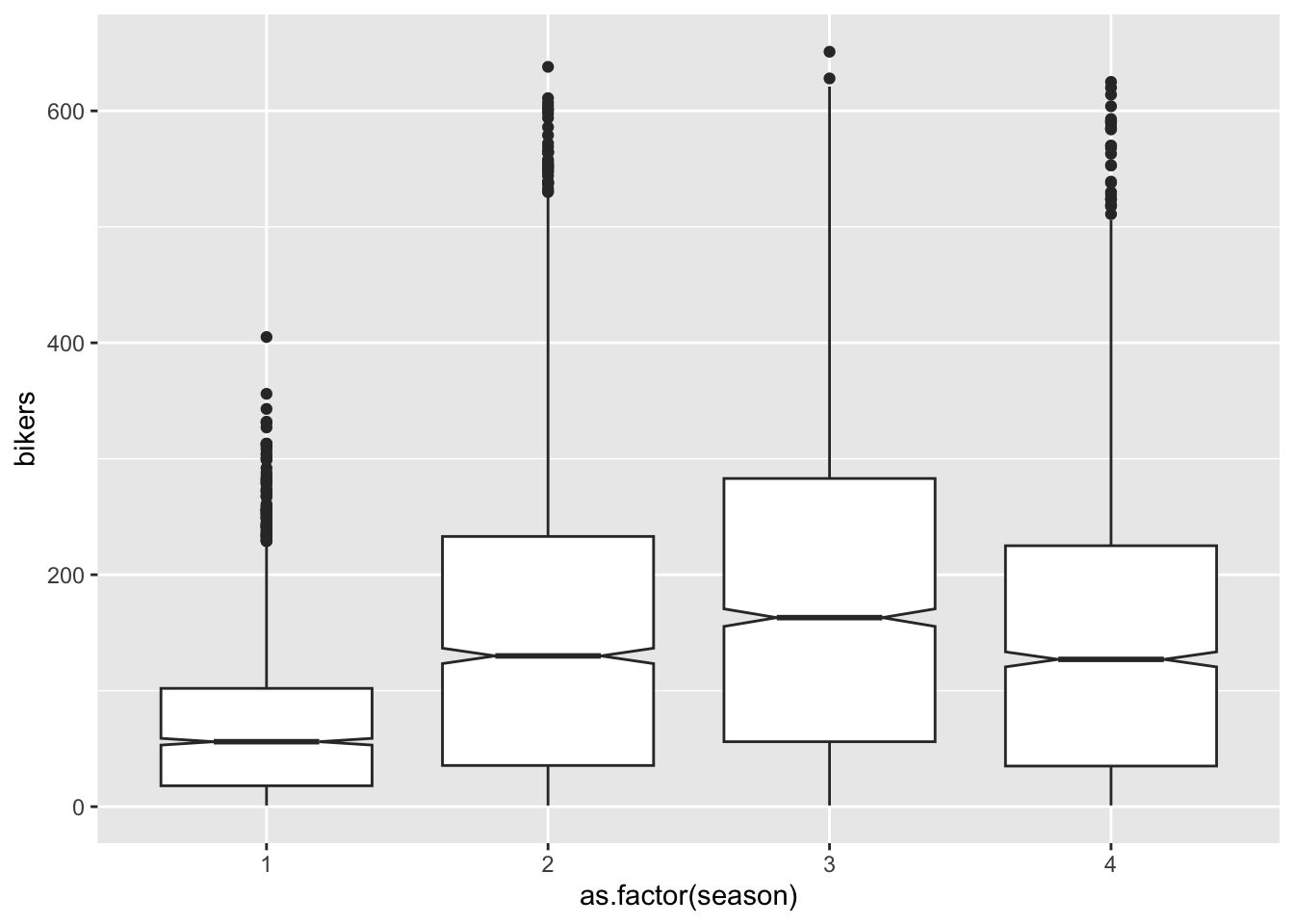

- Estimate the joint distribution of \(Y\) and \(X\).